Affiliate links on Android Authority may earn us a commission.

Optimizing Compiler – the evolution of ART

Published on April 30, 2015

The language of Android is Java, and Java differs from some other popular mainstream programming languages in that it compiles to an intermediate code (often called bytecode) rather than to the native machine code of the target platform. Therefore, to run a Java program on a platform, you need a runtime environment.
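To make "bytecode needs a runtime" concrete, here is a minimal sketch of a runtime that interprets a toy bytecode on the host CPU. Android apps are actually compiled to Dalvik bytecode (dex), which is register-based rather than stack-based like standard Java bytecode, so this toy is register-based too. The opcodes (`CONST`, `ADD`, `MUL`, `RETURN`) and the names `Insn` and `ToyRuntime` are hypothetical illustrations, not real dex instructions or Android APIs. It needs Java 16+ for records.

```java
import java.util.List;

// A toy, hypothetical register-based instruction set. These are NOT real
// Dalvik/dex opcodes; they only illustrate bytecode that needs a runtime.
enum Op { CONST, ADD, MUL, RETURN }

record Insn(Op op, int dst, int a, int b) {
    static Insn konst(int dst, int value) { return new Insn(Op.CONST, dst, value, 0); }
    static Insn add(int dst, int a, int b) { return new Insn(Op.ADD, dst, a, b); }
    static Insn mul(int dst, int a, int b) { return new Insn(Op.MUL, dst, a, b); }
    static Insn ret(int src) { return new Insn(Op.RETURN, src, 0, 0); } // dst field holds the source register
}

final class ToyRuntime {
    // The "runtime environment": reads each instruction and carries it out on the host CPU.
    static int interpret(List<Insn> code, int numRegisters) {
        int[] regs = new int[numRegisters];
        for (Insn insn : code) {
            switch (insn.op()) {
                case CONST -> regs[insn.dst()] = insn.a();
                case ADD -> regs[insn.dst()] = regs[insn.a()] + regs[insn.b()];
                case MUL -> regs[insn.dst()] = regs[insn.a()] * regs[insn.b()];
                case RETURN -> { return regs[insn.dst()]; }
            }
        }
        throw new IllegalStateException("method ended without RETURN");
    }

    public static void main(String[] args) {
        // (2 + 4) * 7 expressed as toy bytecode
        List<Insn> code = List.of(
                Insn.konst(0, 2),
                Insn.konst(1, 4),
                Insn.add(2, 0, 1),
                Insn.konst(3, 7),
                Insn.mul(0, 2, 3),
                Insn.ret(0));
        System.out.println(interpret(code, 4)); // prints 42
    }
}
```

Interpreting every instruction like this is portable but slow, which is why Dalvik added a JIT and ART moved to compiling bytecode into native code.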

Prior to Android 5.0, Dalvik was Android’s runtime environment. It used a just-in-time (JIT) compiler, which compiled portions of the bytecode each time the program ran, just before they were needed. However, that all changed with Android 5.0 Lollipop and the release of ART.

The Android Runtime (ART) brought many improvements to app performance, garbage collection, and development/debugging by moving away from Dalvik’s just-in-time (JIT) compilation to ahead-of-time (AOT) compilation. ART was originally offered as a developer option in KitKat, but officially replaced Dalvik as the default runtime with the launch of Android Lollipop.
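The trade-off between the two approaches can be sketched with a deliberately simplified, hypothetical model: a JIT keeps compiled code only for the lifetime of the process, so each launch recompiles what it uses, while AOT compiles every method once at install time. The class and method names are illustrative assumptions, and the model ignores details such as Dalvik's real JIT compiling hot traces only after a profiling threshold.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Simplified, hypothetical model of how often code gets compiled under JIT vs AOT.
final class CompileModel {
    // JIT: the compiled-code cache lives only as long as the process,
    // so every launch recompiles the methods it actually calls.
    static int jitCompilations(List<List<String>> launches) {
        int total = 0;
        for (List<String> calls : launches) {
            Set<String> codeCache = new HashSet<>(); // discarded when the process exits
            for (String method : calls) {
                if (codeCache.add(method)) {
                    total++; // compiled "just in time" on first use in this run
                }
            }
        }
        return total;
    }

    // AOT: every method in the app is compiled once at install time,
    // and the native code is reused by all later launches.
    static int aotCompilations(Set<String> allMethods) {
        return allMethods.size();
    }

    public static void main(String[] args) {
        Set<String> app = Set.of("onCreate", "draw", "save", "export");
        List<List<String>> launches = List.of(
                List.of("onCreate", "draw", "draw"),
                List.of("onCreate", "draw", "save"),
                List.of("onCreate", "draw"));
        System.out.println("JIT: " + jitCompilations(launches)); // prints JIT: 7
        System.out.println("AOT: " + aotCompilations(app));      // prints AOT: 4
    }
}
```

Note that AOT also compiles `export`, which no launch ever calls; that up-front cost in install time and storage is the price paid for not compiling at run time.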

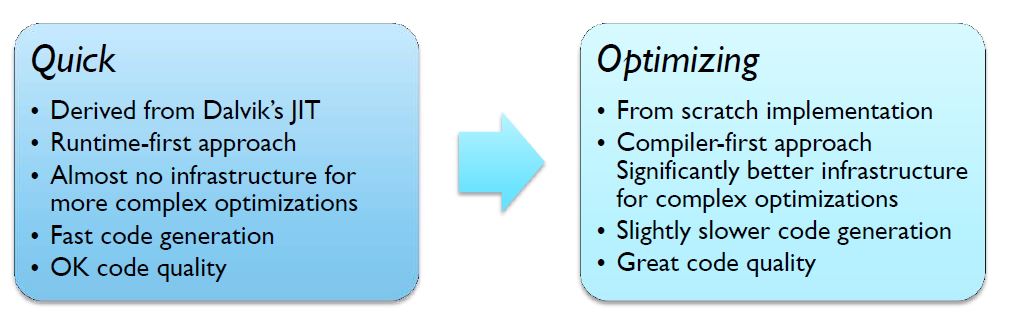

However, to make the move from Dalvik to ART as smooth and fast as possible, Android Lollipop uses a compiler known as ‘Quick’, which is essentially an AOT version of the Dalvik JIT compiler.

While offering some improvements over Dalvik, Quick isn’t at the cutting edge of compiler technology. To improve things further, ARM and Google are working closely together on a new ‘Optimizing’ compiler for Android, which features more up-to-date technologies, including fully optimized support for ARM’s AArch64 backend. The new compiler will also make it easier to add new optimizations in future releases.

The Optimizing compiler optimizes separately for AArch32 and AArch64 (32-bit and 64-bit), depending on the platform. ARM is doing much of the work on the AArch64 backend, while Google is developing the AArch32 backend.

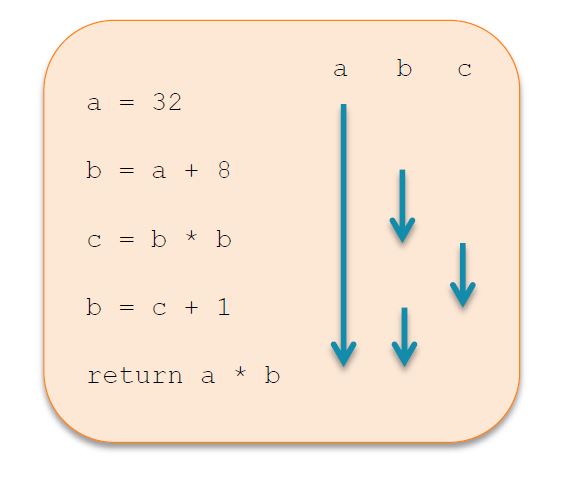

Unlike Quick, Optimizing is being rebuilt from scratch to produce better code through a range of optimizations. Much of this comes from a change to the Intermediate Representation (IR): instead of the two IR levels used by Quick, Optimizing uses just one. By applying IR transformations progressively, Optimizing should be better at eliminating dead code, and it can also perform constant folding and global value numbering.
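The three optimizations named above can be sketched as passes over a single IR. This is a minimal illustration, assuming a hypothetical SSA-style IR for straight-line code (`ToyIr`, `Node`, and the pass names are ours, not ART's classes). Because there is only one basic block, the value-numbering pass here is effectively local; real global value numbering works across the whole control-flow graph. Dead code elimination is shown as a liveness count: any node not reachable backwards from `RETURN` is dead.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy single-level, SSA-style IR (hypothetical). Value i is produced by nodes.get(i),
// and inputs always refer to earlier nodes.
final class ToyIr {
    enum Kind { CONST, ADD, MUL, RETURN }

    static final class Node {
        Kind kind;
        int value; // constant value, used only by CONST
        int a, b;  // input value ids, or -1 when unused
        Node(Kind kind, int value, int a, int b) { this.kind = kind; this.value = value; this.a = a; this.b = b; }
        String key() { return kind + ":" + value + ":" + a + ":" + b; }
    }

    final List<Node> nodes = new ArrayList<>();

    private int emit(Kind k, int value, int a, int b) { nodes.add(new Node(k, value, a, b)); return nodes.size() - 1; }
    int konst(int v) { return emit(Kind.CONST, v, -1, -1); }
    int add(int a, int b) { return emit(Kind.ADD, 0, a, b); }
    int mul(int a, int b) { return emit(Kind.MUL, 0, a, b); }
    int ret(int a) { return emit(Kind.RETURN, 0, a, -1); }

    // Value numbering: a node repeating an earlier computation on the same inputs
    // is redundant, so later uses are redirected to the earlier node.
    void globalValueNumbering() {
        Map<String, Integer> seen = new HashMap<>();
        int[] replacement = new int[nodes.size()];
        for (int i = 0; i < nodes.size(); i++) {
            Node n = nodes.get(i);
            if (n.a >= 0) n.a = replacement[n.a];
            if (n.b >= 0) n.b = replacement[n.b];
            // ADD and MUL are commutative: canonicalize operand order so x+y matches y+x.
            if ((n.kind == Kind.ADD || n.kind == Kind.MUL) && n.a > n.b) { int t = n.a; n.a = n.b; n.b = t; }
            Integer earlier = n.kind == Kind.RETURN ? null : seen.putIfAbsent(n.key(), i);
            replacement[i] = earlier == null ? i : earlier;
        }
    }

    // Constant folding: an ADD/MUL whose inputs are both constants becomes a constant.
    // One forward pass folds whole chains because inputs precede their users.
    void constantFold() {
        for (Node n : nodes) {
            if ((n.kind == Kind.ADD || n.kind == Kind.MUL)
                    && nodes.get(n.a).kind == Kind.CONST && nodes.get(n.b).kind == Kind.CONST) {
                int x = nodes.get(n.a).value, y = nodes.get(n.b).value;
                n.value = n.kind == Kind.ADD ? x + y : x * y;
                n.kind = Kind.CONST; n.a = -1; n.b = -1;
            }
        }
    }

    // Dead code elimination (analysis part): count nodes reachable backwards from RETURN.
    int liveCount() {
        boolean[] live = new boolean[nodes.size()];
        int count = 0;
        for (int i = nodes.size() - 1; i >= 0; i--) {
            Node n = nodes.get(i);
            if (n.kind == Kind.RETURN) live[i] = true;
            if (!live[i]) continue; // dead: a real compiler would delete this node
            count++;
            if (n.a >= 0) live[n.a] = true;
            if (n.b >= 0) live[n.b] = true;
        }
        return count;
    }

    // Reference interpreter, used to check that the passes preserve the result.
    int evaluate() {
        int[] v = new int[nodes.size()];
        for (int i = 0; i < nodes.size(); i++) {
            Node n = nodes.get(i);
            switch (n.kind) {
                case CONST -> v[i] = n.value;
                case ADD -> v[i] = v[n.a] + v[n.b];
                case MUL -> v[i] = v[n.a] * v[n.b];
                case RETURN -> { return v[n.a]; }
            }
        }
        throw new IllegalStateException("no RETURN");
    }

    public static void main(String[] args) {
        ToyIr ir = new ToyIr();
        int x = ir.konst(3), y = ir.konst(4);
        int s1 = ir.add(x, y);
        int s2 = ir.add(y, x);      // same value as s1, operands swapped
        int unused = ir.mul(s1, x); // result never used: dead code
        ir.ret(ir.mul(s1, s2));     // (3 + 4) * (4 + 3)
        System.out.println("before:  live=" + ir.liveCount() + " result=" + ir.evaluate()); // live=6 result=49
        ir.globalValueNumbering();
        System.out.println("GVN:     live=" + ir.liveCount() + " result=" + ir.evaluate()); // live=5 result=49
        ir.constantFold();
        System.out.println("folding: live=" + ir.liveCount() + " result=" + ir.evaluate()); // live=2 result=49
    }
}
```

Running the passes one after another on the same IR mirrors the "progressive transformation" idea: each pass leaves the graph in a form the next pass can simplify further.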

Another major improvement comes from better register allocation. Quick uses a very simple algorithm that favors compilation speed over sophistication, but this results in many registers being spilled to the stack. Optimizing moves to Linear Scan Register Allocation, which is slightly slower at compile time but offers better runtime performance. It minimizes register spills by performing ‘liveness analysis’ to better assess which registers are in active use at any given time. With fewer registers to save on the stack and better use of the available registers, there is less code to execute, and that means greater performance.
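Linear scan can be sketched in a few dozen lines. This is a minimal version of the classic Poletto and Sarkar formulation, not ART's allocator: it assumes a prior liveness analysis has already produced one live interval `[start, end]` per value, walks the intervals in order of start point, frees registers whose intervals have ended, and, when none is free, spills whichever interval ends last. The names `LinearScan`, `Interval`, and `SPILLED` are hypothetical. It needs Java 16+ for records.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified linear scan register allocation (hypothetical sketch, not ART's code).
final class LinearScan {
    // A value's live range, assumed to come from an earlier liveness analysis.
    record Interval(String name, int start, int end) {}

    static final int SPILLED = -1; // marker: value lives on the stack

    static Map<String, Integer> allocate(List<Interval> intervals, int numRegisters) {
        if (numRegisters < 1) throw new IllegalArgumentException("need at least one register");
        List<Interval> byStart = new ArrayList<>(intervals);
        byStart.sort(Comparator.comparingInt(Interval::start));

        Map<String, Integer> assignment = new TreeMap<>();
        List<Interval> active = new ArrayList<>(); // intervals holding a register, sorted by end
        Deque<Integer> free = new ArrayDeque<>();
        for (int r = 0; r < numRegisters; r++) free.add(r);

        for (Interval cur : byStart) {
            // Expire intervals that ended before cur starts; their registers become free.
            while (!active.isEmpty() && active.get(0).end() < cur.start()) {
                free.add(assignment.get(active.remove(0).name()));
            }
            if (!free.isEmpty()) {
                assignment.put(cur.name(), free.poll());
                insertByEnd(active, cur);
                continue;
            }
            // No free register: spill whichever interval stays live the longest.
            Interval last = active.get(active.size() - 1);
            if (last.end() > cur.end()) {
                assignment.put(cur.name(), assignment.get(last.name())); // steal its register
                assignment.put(last.name(), SPILLED);
                active.remove(active.size() - 1);
                insertByEnd(active, cur);
            } else {
                assignment.put(cur.name(), SPILLED);
            }
        }
        return assignment;
    }

    // Keep the active list ordered by end point so expiry only checks the front.
    private static void insertByEnd(List<Interval> active, Interval iv) {
        int i = 0;
        while (i < active.size() && active.get(i).end() <= iv.end()) i++;
        active.add(i, iv);
    }

    public static void main(String[] args) {
        List<Interval> intervals = List.of(
                new Interval("a", 0, 10), new Interval("b", 1, 3), new Interval("c", 2, 8),
                new Interval("d", 4, 6), new Interval("e", 7, 9));
        System.out.println(allocate(intervals, 2)); // prints {a=-1, b=1, c=0, d=1, e=1}
    }
}
```

With only two registers, five values fit with a single spill: because liveness shows that `b` and `d` are dead before `d` and `e` start, their register is reused rather than saved to the stack.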

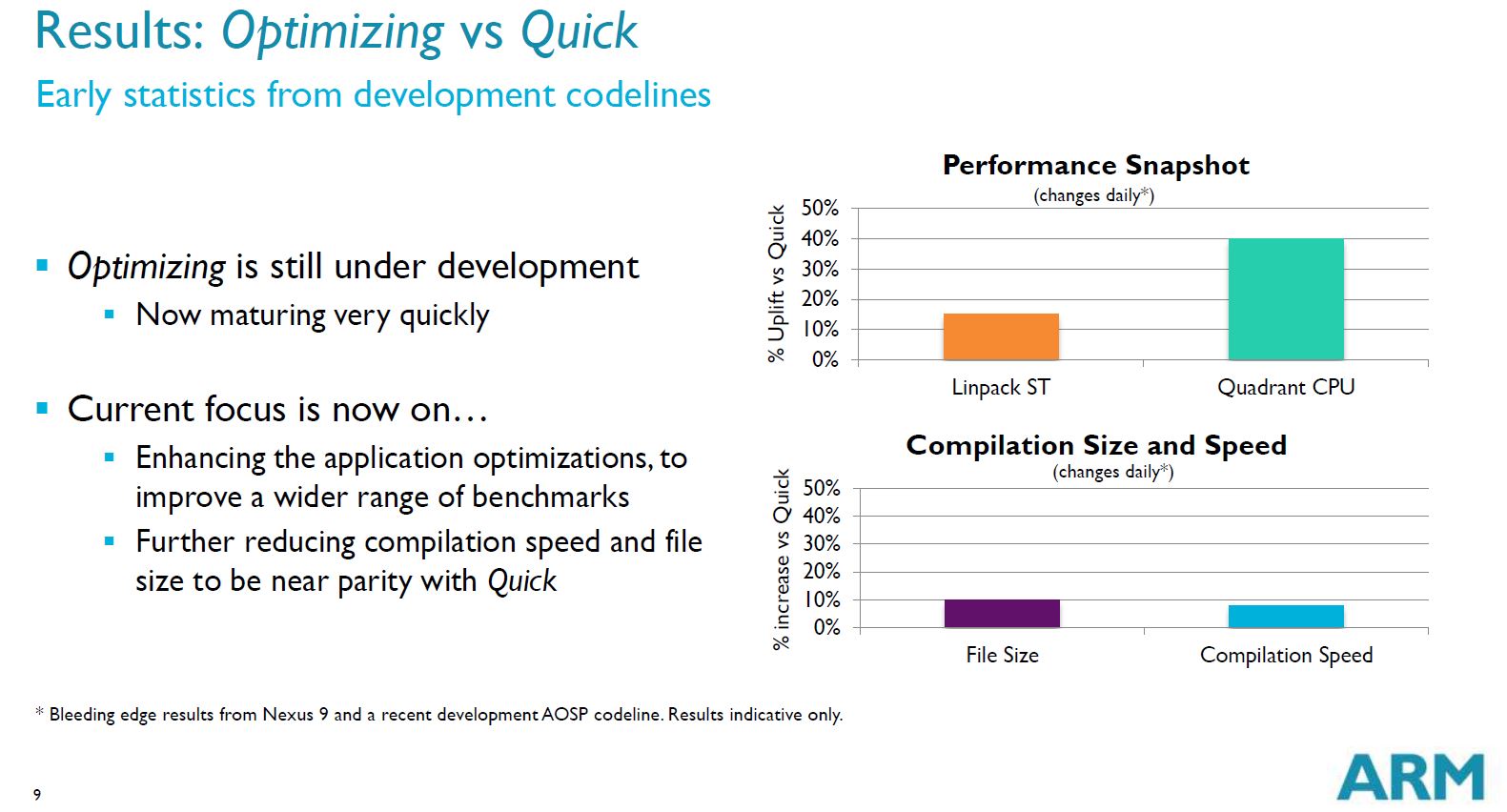

Development of Optimizing is still ongoing, but it already shows significant performance improvements, of up to 40 percent in one benchmark. The only drawbacks are an 8 percent increase in compilation time and a 10 percent increase in file size, owing to additional metadata used by the compiler, although these could be reduced in the future.

If all of this has you wondering when you’ll be able to benefit from Optimizing, the answer is sooner than you may think. Optimizing is now the default compiler for apps in the AOSP branch, although Quick is still used for some methods and for compiling the boot image. Patches to support and optimize for specific CPU cores, such as the Cortex-A53 and Cortex-A57, are also in the works.

We’ll hopefully hear much more about plans for Optimizing at Google I/O 2015, which will be taking place from May 28th to 29th in San Francisco.