OpenVX: everything you need to know

OpenVX is an API that lets software developers add hardware-accelerated computer vision capabilities to their programs. OpenVX 1.0 was announced in October 2014, and now the Khronos Group has announced OpenVX 1.1. Here is everything you need to know.

OpenVX who?

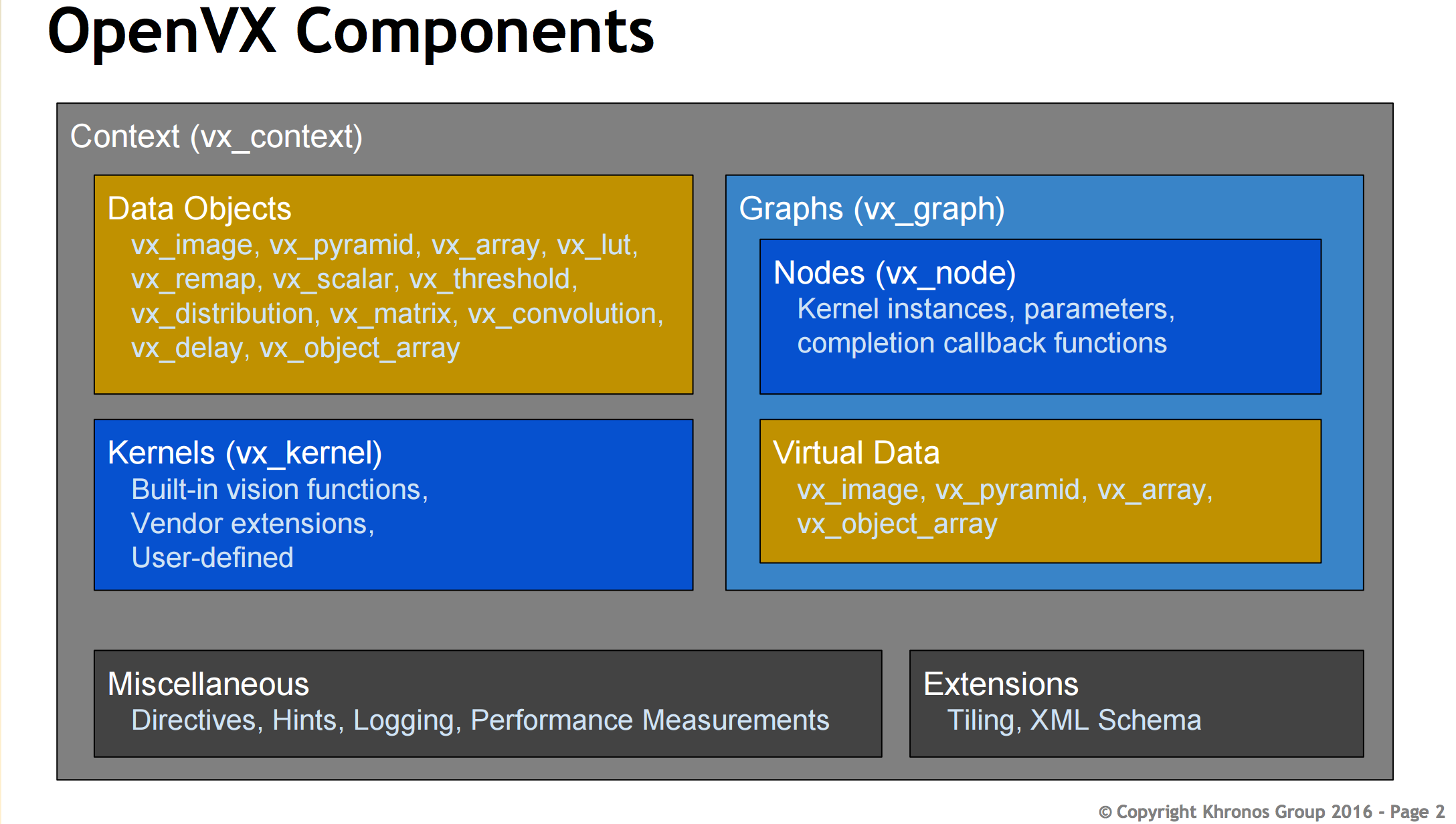

OpenVX offers something truly unique and beneficial to the world of mobile computing. The idea is that OpenVX can speed up “computer vision” applications while remaining easy to use and supporting multiple platforms. Khronos claims that vision processing on just the CPU is too expensive, while the GPU is made for this exact purpose. There are also dedicated chips such as ISPs (image signal processors) that handle functions like processing the images you take with your phone’s camera.

The problem is that there is no industry standard for developing for each of these chips. OpenVX wants to change that without adding too much CPU and GPU overhead. The official OpenVX material can be found on the Khronos Group website.

What is computer vision?

Computer vision is simply a field of study that includes methods for acquiring, analyzing and understanding images, as well as high-dimensional data from the real world, in order to produce symbolic or numerical information. This data is commonly interpreted using models built on geometry, physics, learning theory, or statistics.

Computer vision has important applications in AI. For instance, a robot could perceive the world and understand what is happening through different sensors and cameras. Other real-world examples include medical image analysis and self-driving cars, which rely on a bunch of sensors working together to make sure everything goes smoothly. Think of it as a system of cameras and sensors that perceives the world and produces data that can be used by either humans or the system itself.

How does it work?

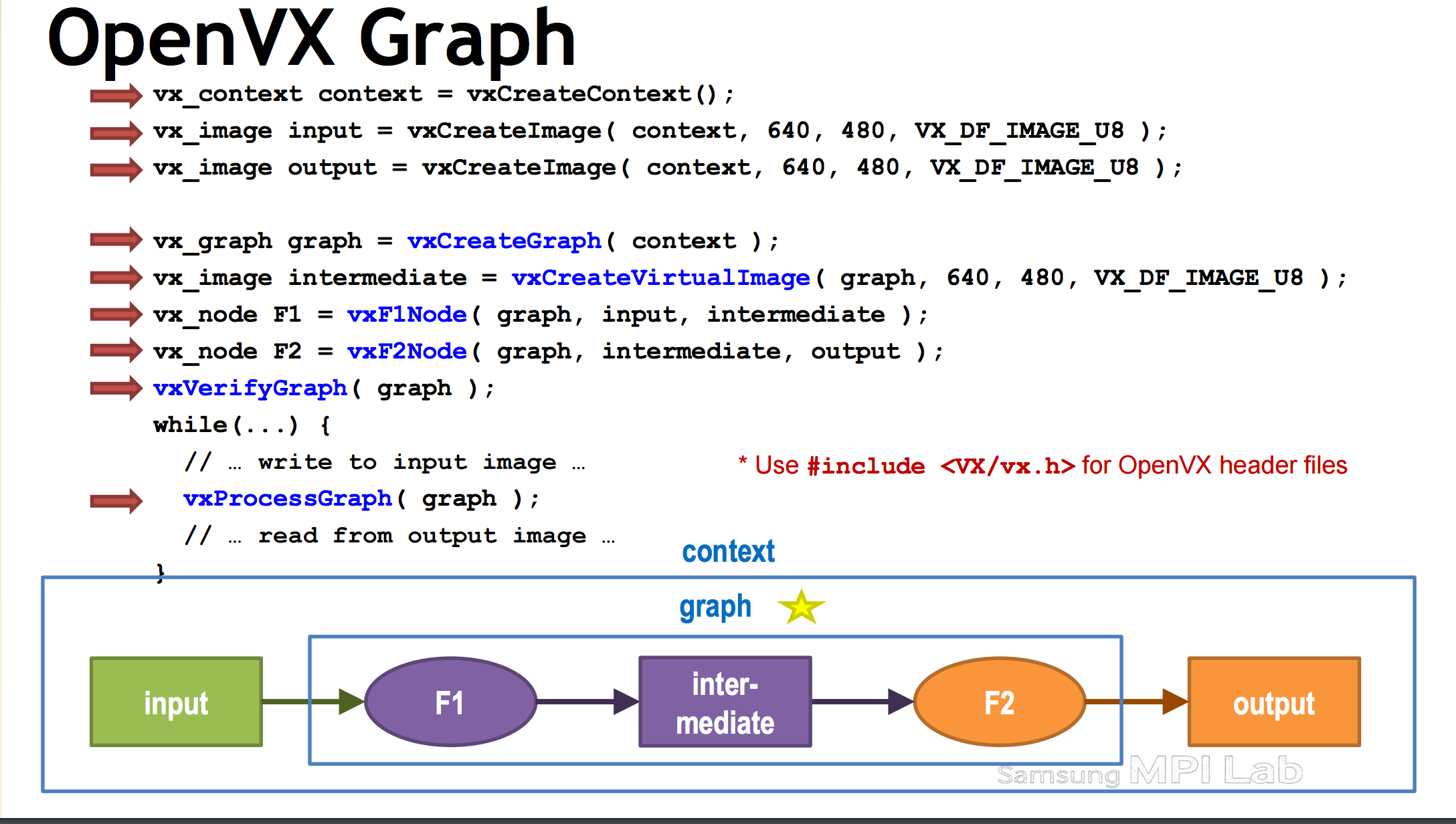

A graph can be created by:

vx_graph graph = vxCreateGraph( context );

and nodes can be created by:

vx_node F1 = vxF1Node(. . .);

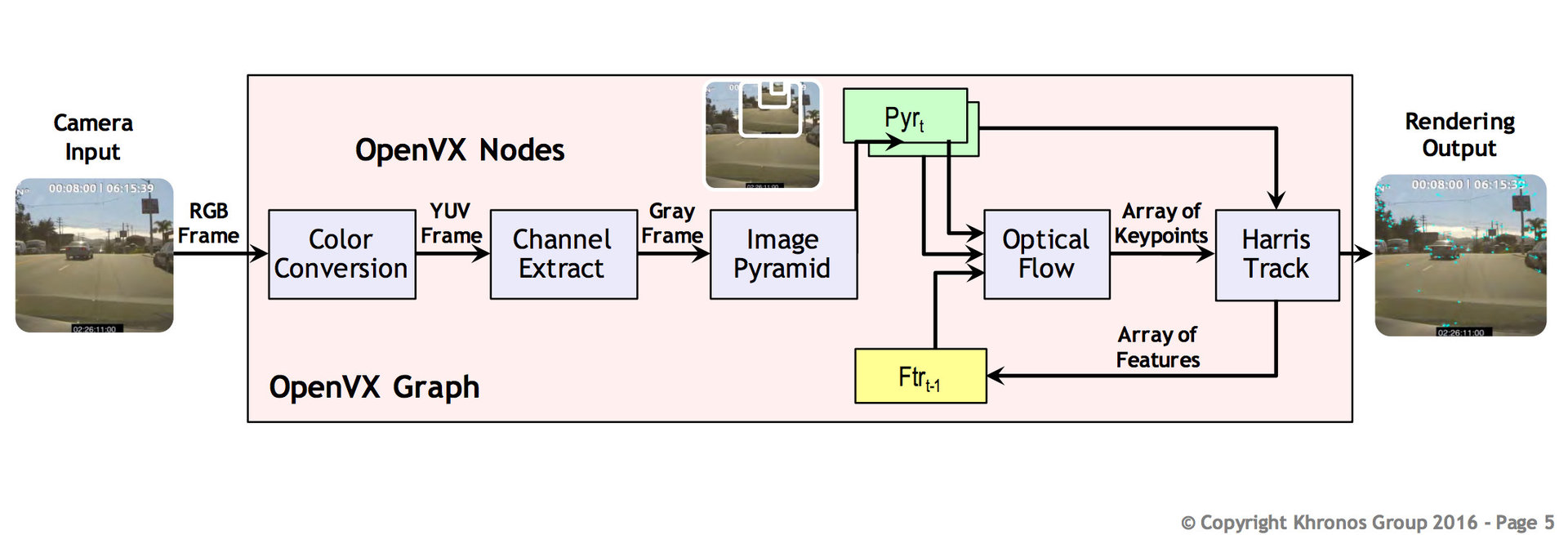

The graph is the main component in OpenVX. A graph can describe the computer vision problem independently of any particular implementation, because all of the operations in the graph are known before the graph is processed. This allows the graph to be verified and optimized once and then run as many times as needed, cutting back significantly on setup time. When the graph executes, its nodes run in an order determined by their data dependencies rather than the order in which they were created, and the desired result will be achieved if the graph was built correctly.
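To make the "build once, run many times" idea concrete, here is a small sketch in plain C. It is a toy model only: the types and functions (`toy_graph`, `toy_add_node`, `toy_verify`, `toy_process`) are hypothetical names invented for illustration and are not part of the OpenVX API, although the verify and process steps loosely mirror the roles of OpenVX's `vxVerifyGraph` and `vxProcessGraph`.

```c
#include <assert.h>

#define MAX_NODES 8
#define GRAPH_INPUT (-1) /* marks an input that reads the value passed to toy_process */

/* Hypothetical toy types for illustration; NOT the OpenVX API. */
typedef int (*node_fn)(int a, int b);

typedef struct {
    node_fn fn;      /* operation this node performs */
    int in_a, in_b;  /* index of the producing node, or GRAPH_INPUT */
    int value;       /* result of the most recent run */
} toy_node;

typedef struct {
    toy_node nodes[MAX_NODES];
    int count;
    int order[MAX_NODES]; /* execution order, filled in by toy_verify */
    int verified;
} toy_graph;

/* Example operations; the unary ones ignore their second input. */
int op_double(int a, int b) { (void)b; return 2 * a; }
int op_inc(int a, int b)    { (void)b; return a + 1; }
int op_add(int a, int b)    { return a + b; }

/* Add a node and return its index. Inputs may refer to nodes added later. */
int toy_add_node(toy_graph *g, node_fn fn, int in_a, int in_b) {
    assert(g->count < MAX_NODES);
    g->nodes[g->count] = (toy_node){ fn, in_a, in_b, 0 };
    g->verified = 0; /* any change requires re-verification */
    return g->count++;
}

static int input_ready(const int *done, int input) {
    return input == GRAPH_INPUT || done[input];
}

/* Check the graph once and work out a dependency-respecting order.
   Returns 0 on success, -1 for a bad reference or a cycle. */
int toy_verify(toy_graph *g) {
    int done[MAX_NODES] = {0};
    int scheduled = 0;
    for (int i = 0; i < g->count; i++) {
        const toy_node *n = &g->nodes[i];
        if (n->in_a < GRAPH_INPUT || n->in_a >= g->count ||
            n->in_b < GRAPH_INPUT || n->in_b >= g->count)
            return -1;
    }
    while (scheduled < g->count) {
        int progress = 0;
        for (int i = 0; i < g->count; i++) {
            const toy_node *n = &g->nodes[i];
            if (!done[i] && input_ready(done, n->in_a) && input_ready(done, n->in_b)) {
                done[i] = 1;
                g->order[scheduled++] = i;
                progress = 1;
            }
        }
        if (!progress) return -1; /* remaining nodes wait on each other */
    }
    g->verified = 1;
    return 0;
}

/* Run every node in the precomputed order and return one node's result. */
int toy_process(toy_graph *g, int input, int output_node) {
    assert(g->verified);
    assert(output_node >= 0 && output_node < g->count);
    for (int k = 0; k < g->count; k++) {
        toy_node *n = &g->nodes[g->order[k]];
        int a = (n->in_a == GRAPH_INPUT) ? input : g->nodes[n->in_a].value;
        int b = (n->in_b == GRAPH_INPUT) ? input : g->nodes[n->in_b].value;
        n->value = n->fn(a, b);
    }
    return g->nodes[output_node].value;
}
```

In this sketch a node can be added before the nodes it depends on; `toy_verify` sorts that out once, and `toy_process` can then be called repeatedly with new input without repeating the check.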

An example of how a graph could be used is taking a color RGB photo and converting it to grayscale. A graph with the correct nodes would allow you to do this without too much difficulty. The work could also be spread across the hardware, going to whichever chip is most efficient or most powerful for the task at hand.
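For a sense of the per-pixel work such a grayscale conversion involves, here is a minimal plain C sketch. It does not use the OpenVX API (where the job would be expressed as graph nodes), and it assumes BT.709 luma weights approximated as integers; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Convert one RGB pixel to 8-bit luma.
   Assumption: BT.709 weights (0.2126, 0.7152, 0.0722), approximated by the
   integers 54, 183 and 19, which sum to 256 so the result fits in 8 bits. */
uint8_t rgb_to_gray(uint8_t r, uint8_t g, uint8_t b) {
    /* +128 rounds to nearest before the divide-by-256 shift. */
    return (uint8_t)((54u * r + 183u * g + 19u * b + 128u) >> 8);
}

/* Convert an interleaved RGB buffer (3 bytes per pixel) into a buffer
   holding one gray byte per pixel. */
void rgb_image_to_gray(const uint8_t *rgb, uint8_t *gray, size_t pixel_count) {
    assert(rgb != NULL && gray != NULL);
    for (size_t i = 0; i < pixel_count; i++) {
        gray[i] = rgb_to_gray(rgb[3 * i], rgb[3 * i + 1], rgb[3 * i + 2]);
    }
}
```

Every output pixel depends only on its own input pixel, which is the kind of work that is easy to hand off to a GPU or other accelerator.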

Graphs also give an OpenVX implementation several ways to optimize. The first is graph scheduling: OpenVX intelligently executes the graph on multiple chips for better performance or lower power consumption. OpenVX is also able to reuse already allocated memory instead of allocating new memory, leaving more room for other applications and the system. And instead of running a whole subgraph node by node, OpenVX can merge it into a single node for less kernel launch overhead.

The last key aspect is data tiling. This is like taking an image and splitting it up into smaller parts that are processed independently. It acts like Cinebench, if you have ever run that test on your PC, although on a more random basis. This enables potentially shorter load times and better memory usage, since a small tile is easier to keep in fast local memory than a whole image. A scenario in which this could be beneficial is if part of the image was processed before it is actually needed. This will not always be the case, but it can definitely help out.
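The tiling idea can be sketched in plain C as follows. This is an illustration of the general technique rather than OpenVX's own tiling mechanism; the function names and the choice of "invert" as the per-tile operation are assumptions made for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-tile kernel: inverts every pixel inside one tile.
   (x0, y0) is the tile's top-left corner, w and h its size, and stride
   the number of bytes per image row. */
void invert_tile(uint8_t *img, size_t stride,
                 size_t x0, size_t y0, size_t w, size_t h) {
    for (size_t y = y0; y < y0 + h; y++) {
        for (size_t x = x0; x < x0 + w; x++) {
            img[y * stride + x] = (uint8_t)(255 - img[y * stride + x]);
        }
    }
}

/* Walk an 8-bit single-channel image in tile_w x tile_h blocks, clipping
   tiles at the right and bottom edges. Each tile touches a disjoint region,
   so tiles could be processed in any order or on different hardware.
   Returns the number of tiles processed. */
size_t process_in_tiles(uint8_t *img, size_t width, size_t height,
                        size_t tile_w, size_t tile_h) {
    assert(img != NULL && tile_w > 0 && tile_h > 0);
    size_t tiles = 0;
    for (size_t y0 = 0; y0 < height; y0 += tile_h) {
        size_t h = (height - y0 < tile_h) ? height - y0 : tile_h;
        for (size_t x0 = 0; x0 < width; x0 += tile_w) {
            size_t w = (width - x0 < tile_w) ? width - x0 : tile_w;
            invert_tile(img, width, x0, y0, w, h);
            tiles++;
        }
    }
    return tiles;
}
```

The result is the same as processing the whole image in one pass; what changes is that each unit of work is small and independent.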

Coding convention and how to use OpenVX

#include <VX/vx.h>

OpenVX also has a robust error management system. Many functions return a “vx_status” value such as “VX_SUCCESS”. This is an enumerated status code rather than a true boolean, so a program can compare it against “VX_SUCCESS” and report what may be going wrong, if programmed that way.

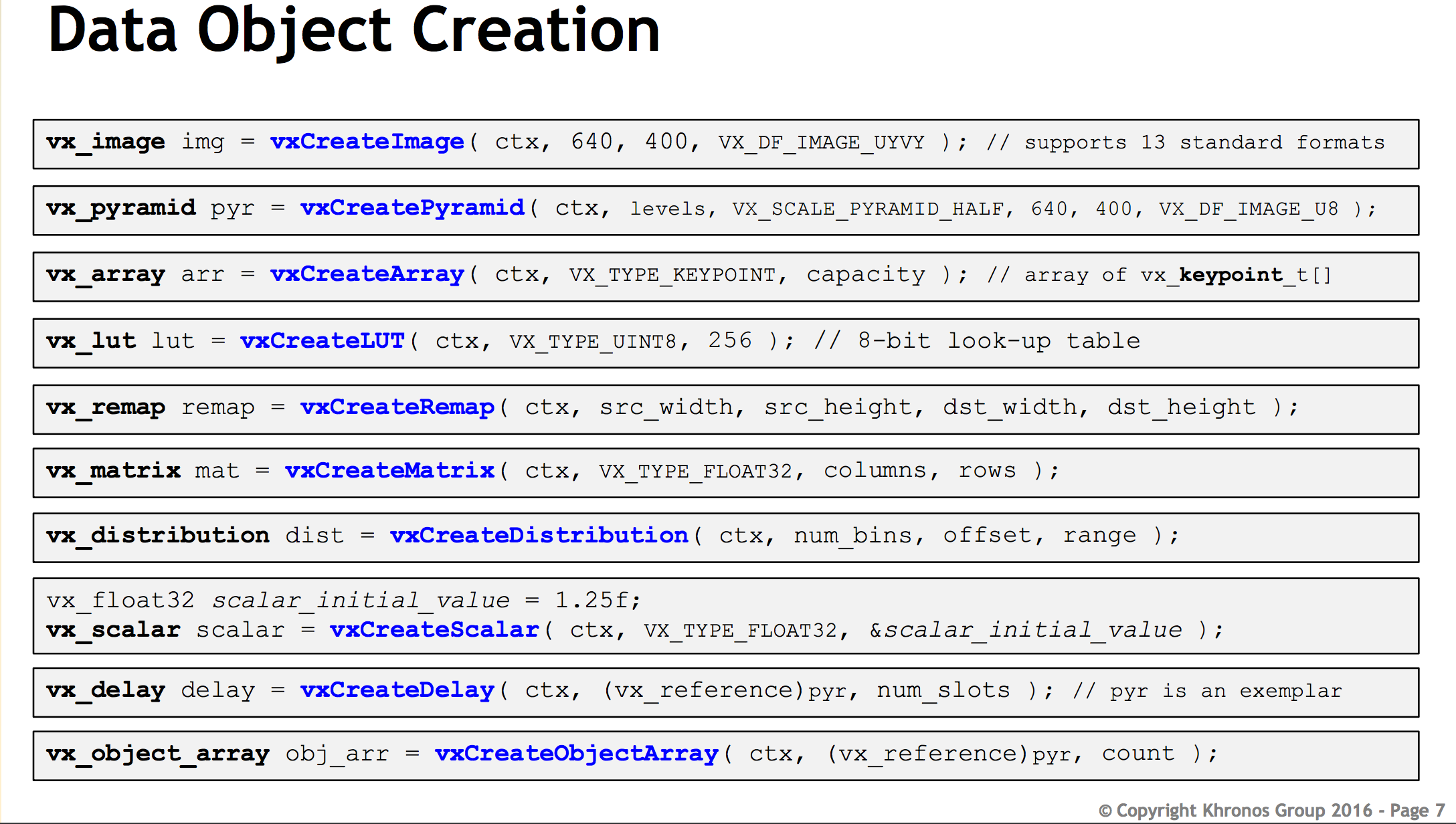

OpenVX also has its own data types, including 8- and 16-bit integers along with rectangles, images and keypoints. OpenVX follows an object-oriented design, although C is not the best language for that. An example of code that uses this approach is:

vx_image img = vxCreateImage( context, 640, 400, VX_DF_IMAGE_RGB );

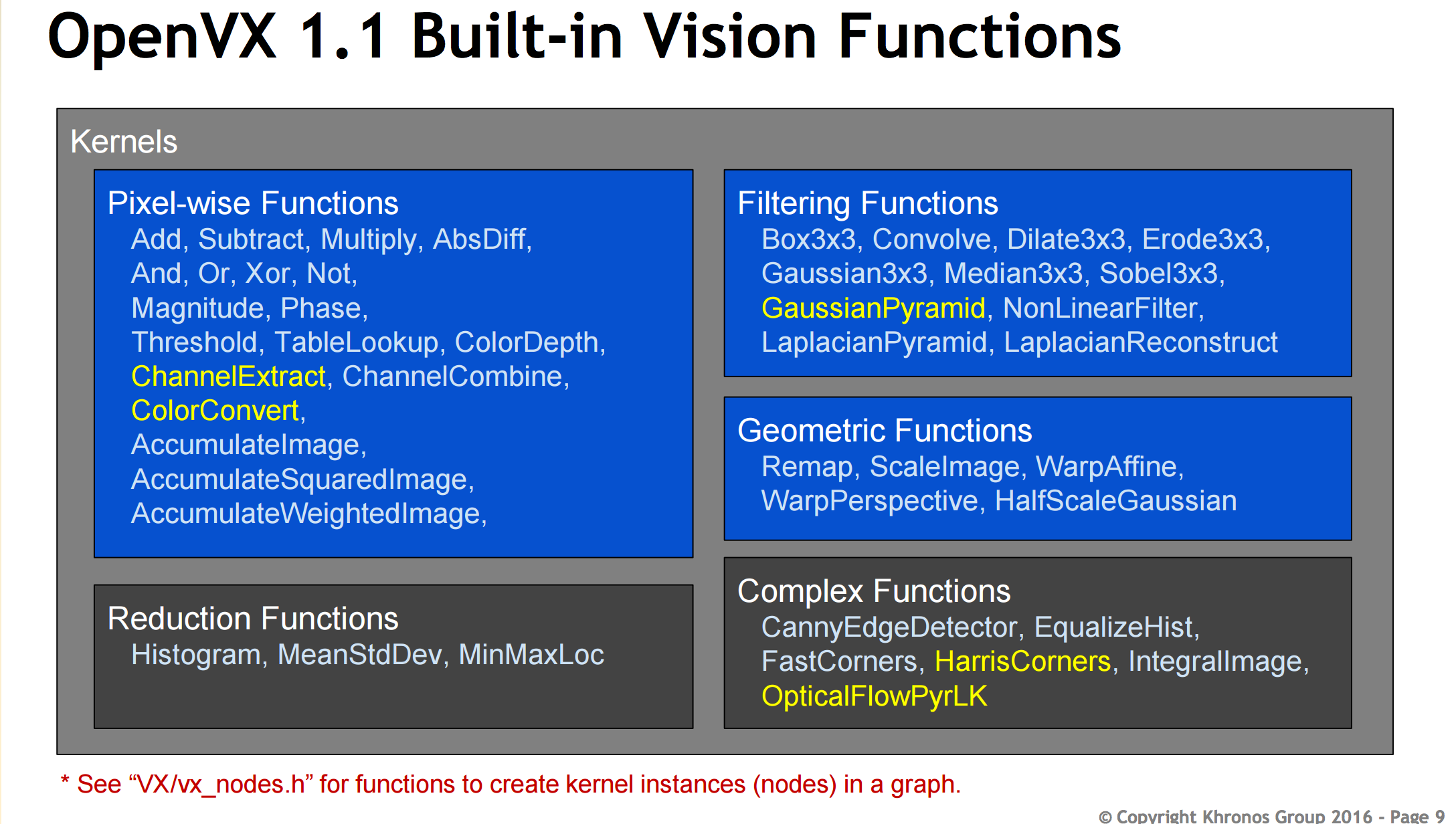

There are many vision functions that can be used to manipulate the image on screen. These include histograms, Gaussian pyramids and many more functions, which are listed in the image above.

What does this mean for Android?

With OpenVX, Android could distribute its load more evenly across the hardware to better optimize battery life and performance, and with Android now supporting Vulkan, we could see a huge jump in performance and possible battery life improvements. Companies are already working on OpenVX 1.1 implementations so we could see results very soon. However, there is no word on Qualcomm’s status on the matter. This means it might be a while before we see something on the Android front.

Wrap up

OpenVX was built as a C API with an object-oriented design and a graph-based execution model. Together with its built-in vision functions, this allows for relatively easy implementation and development while offering performance and battery life gains, depending on the workload. This could be a huge win for Android and mobile in general.

Stay tuned to Android Authority for more OpenVX development content. Does OpenVX look intriguing? Let us know in the comments!