Affiliate links on Android Authority may earn us a commission.

2017 was the year Google normalized machine learning

Published on January 2, 2018

2017 was a hell of a year for a multitude of reasons. In tech, it was the year artificial intelligence engines took the lead in consumer product lines. Most notable was the role AI played in Google's portfolio.

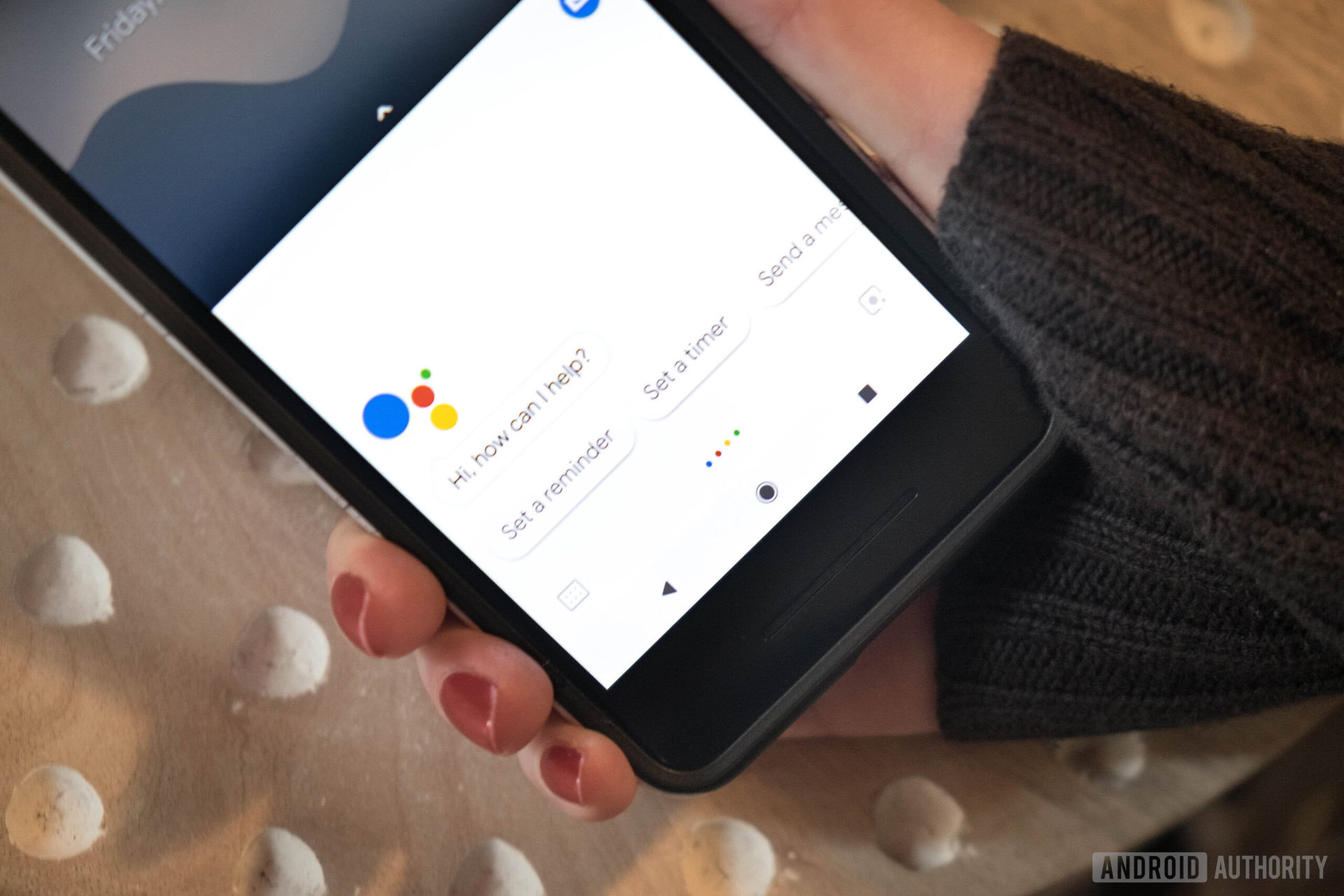

Many products launched with Google Assistant built in, but it wasn't until the end of 2017 that we realized how central AI would become to Google's marketing. From its smartphones to its laptops and smart speakers, and even its new earbuds, Google Assistant and its contextual abilities quickly became the reason to bring home a Google product.

Google Assistant has come so far

It was Forbes that initially pegged 2017 as "The Year of Artificial Intelligence." Not only did we see advancements from Amazon's Alexa and Microsoft's Cortana, but Google Assistant grew tenfold in its abilities just as it became available on more devices.


At present, Google Assistant can do a lot. It can predict traffic on your way to work and offer up calendar reminders mere hours before you're needed somewhere. It can control playback on your television (through a Chromecast), turn off the lights around your house, and recognize people in your photos based on your contacts. It will even remind you to share those pictures with the people in them if you forget.

The backbone of Google Assistant is machine learning, and the same technology helps other Google products study a room's acoustics to deliver the best sound (à la the Google Home Max) or start filming video at the right moment (the main selling point of Google Clips). Machine learning is what lets the Pixel 2 identify music playing in the background. It drives the Pixel Buds' ability to translate foreign languages on the spot. It's also how Gmail suggests replies and how YouTube knows what to recommend for you to watch. Machine learning is the fuel for Google's consumer products.

In 2017, we saw more of what machine learning can do. DeepMind's AlphaGo, a system far more powerful than anything consumers use, beat two of the world's best players at the strategy game Go, and its successor, AlphaGo Zero, taught itself the game without learning from human matches. Google researchers also showed machine learning systems that could help design other machine learning models, including ones that recognize specific objects in photos. Remember the fence-removal demonstration at Google I/O? That was possible through machine learning.

There's also TensorFlow, Google's open-source software library for machine learning, which underpins much of the company's machine learning work. It actively fuels some of Google's existing AI abilities, like language detection and image search. Google has even created chips called Tensor Processing Units (TPUs), designed to run TensorFlow workloads more efficiently.

Another chip introduced in 2017 was the Image Processing Unit (IPU) inside the Pixel 2, which Google developed in partnership with Intel. Thanks to it, the Pixel 2's camera can keep pace with other flagships without as much additional hardware and optics.

At the Google I/O 2017 keynote, Sundar Pichai made it clear that the company's path forward was to prioritize artificial intelligence above all else. Look no further than its current product lineup for evidence: the Pixel 2 and 2 XL, the trio of Google Home products, and the forthcoming Google Clips are all tied together by an artificial intelligence engine built on machine learning.

The year ahead

Last year, more competitors moved into machine learning. Samsung tried its hand with Bixby on the Galaxy S8 and Note 8, though its debut was rather sloppy. Bixby's lead developer recently left the company, which makes its future a little uncertain.

Soon, the question will be 'what's your assistant?'

Amazon is also slowly encroaching on Google's space with its Alexa platform. It's compatible with more third-party services and available on a broader variety of devices than Google Assistant. It comes baked in on some Android smartphones, too, including the HTC U11, but it's unclear whether that kind of native integration will have much impact on Alexa's overall market share.

Machine learning has officially become a part of our lives, and the year ahead will only see it spread to more gadgets. It will do so through the ecosystem of connected devices: our smartphones, the speakers in our homes, and even the thermostats on our walls. Soon, people won't ask which phone you use, but rather which assistant.