All the latest AI news

I moved and Gemini helped in surprising ways — but the final touch is missing

Mitja Rutnik, 13 hours ago

I asked ChatGPT to turn me into a Muppet, and it did not disappoint

Matt Horne, April 12, 2025

I have seen Google's AI version of The Wizard of Oz, and I'm still in shock

C. Scott Brown, April 9, 2025

I tried out Gemini's latest feature and you should too

Mitja Rutnik, April 5, 2025

From dinner plans to DIY: Here's how our team really uses AI

Matt Horne, April 1, 2025

I tested Gemini's new Deep Research version and it's surprisingly good

Mitja Rutnik, March 31, 2025

I gave Gemini my search history, and now I'm scared by how well it now knows me

Ryan Haines, March 29, 2025

This dumb Gemini limitation makes me want to switch back to Google Assistant

Rita El Khoury, March 26, 2025

I hate Gemini in my Gmail, so here's how I turned it off

Hadlee Simons, March 23, 2025

Gemini Live's multi-language skills have blown my socks off

Rita El Khoury, March 22, 2025

Google One AI Premium is now free for college students: What you'll get, and how to sign up

Pranob Mehrotra, 8 hours ago

Gemini's best trick is now rolling out to all Android phones for free! (Updated)

Adamya Sharma, 9 hours ago

Samsung phones could soon let you ditch Gemini for this rising AI assistant

Adamya Sharma, 22 hours ago

ChatGPT just made it easier to access your old generated images

Ryan McNeal, April 16, 2025

OpenAI may be turning ChatGPT into a social media platform

Rushil Agrawal, April 15, 2025

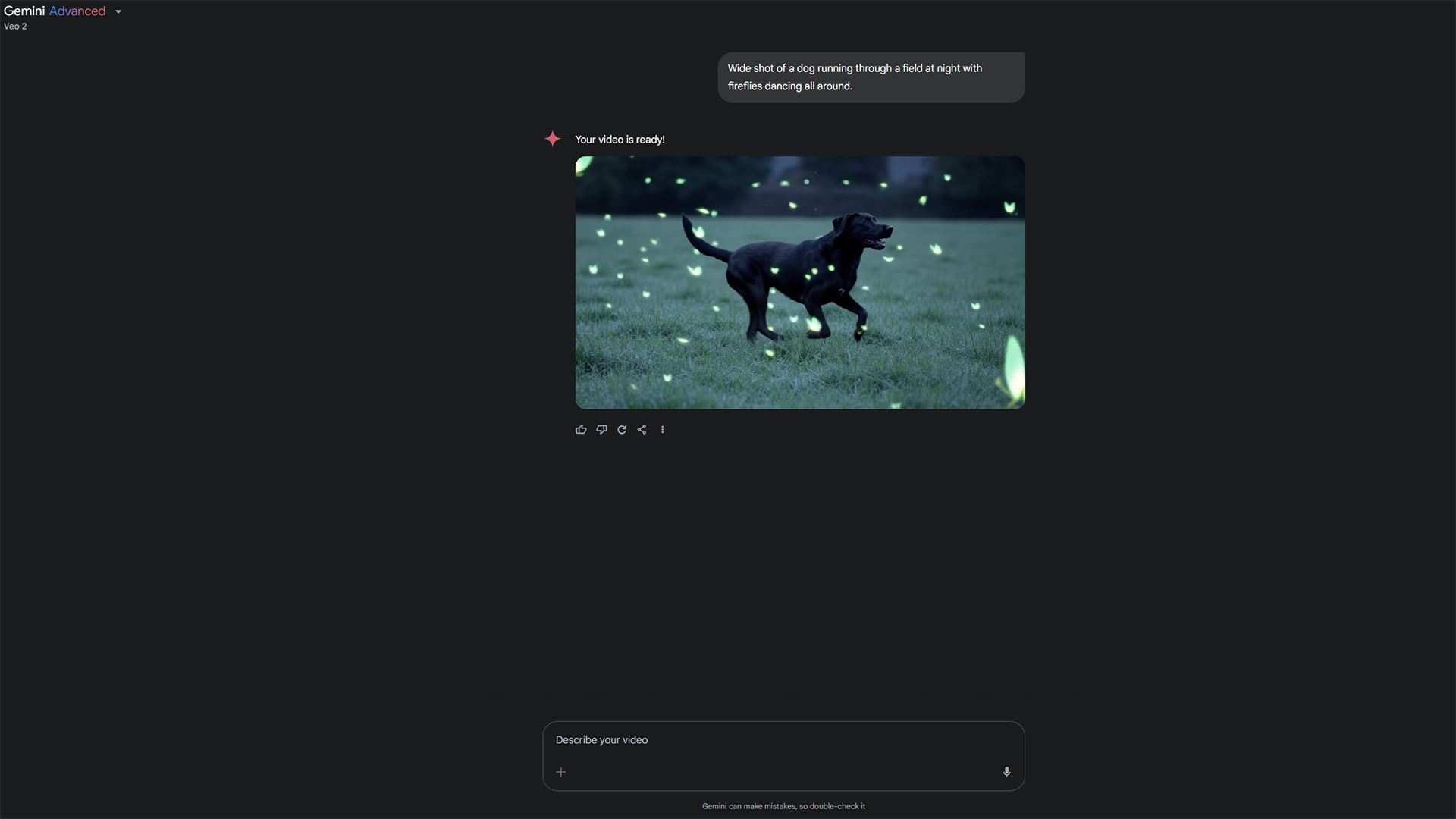

Don't want to pay for Gemini Advanced? You might still be able to generate videos (APK teardown)

C. Scott Brown, April 15, 2025

Google may add a Circle to Search-like feature to Gemini Live, and we tried it out (APK teardown)

Aamir Siddiqui, April 15, 2025

Gemini could soon be getting a new trick to automate your future (APK teardown)

Aamir Siddiqui, April 15, 2025

Forget Live Translate: Google is now using Pixel phones to talk to dolphins

Adamya Sharma, April 14, 2025

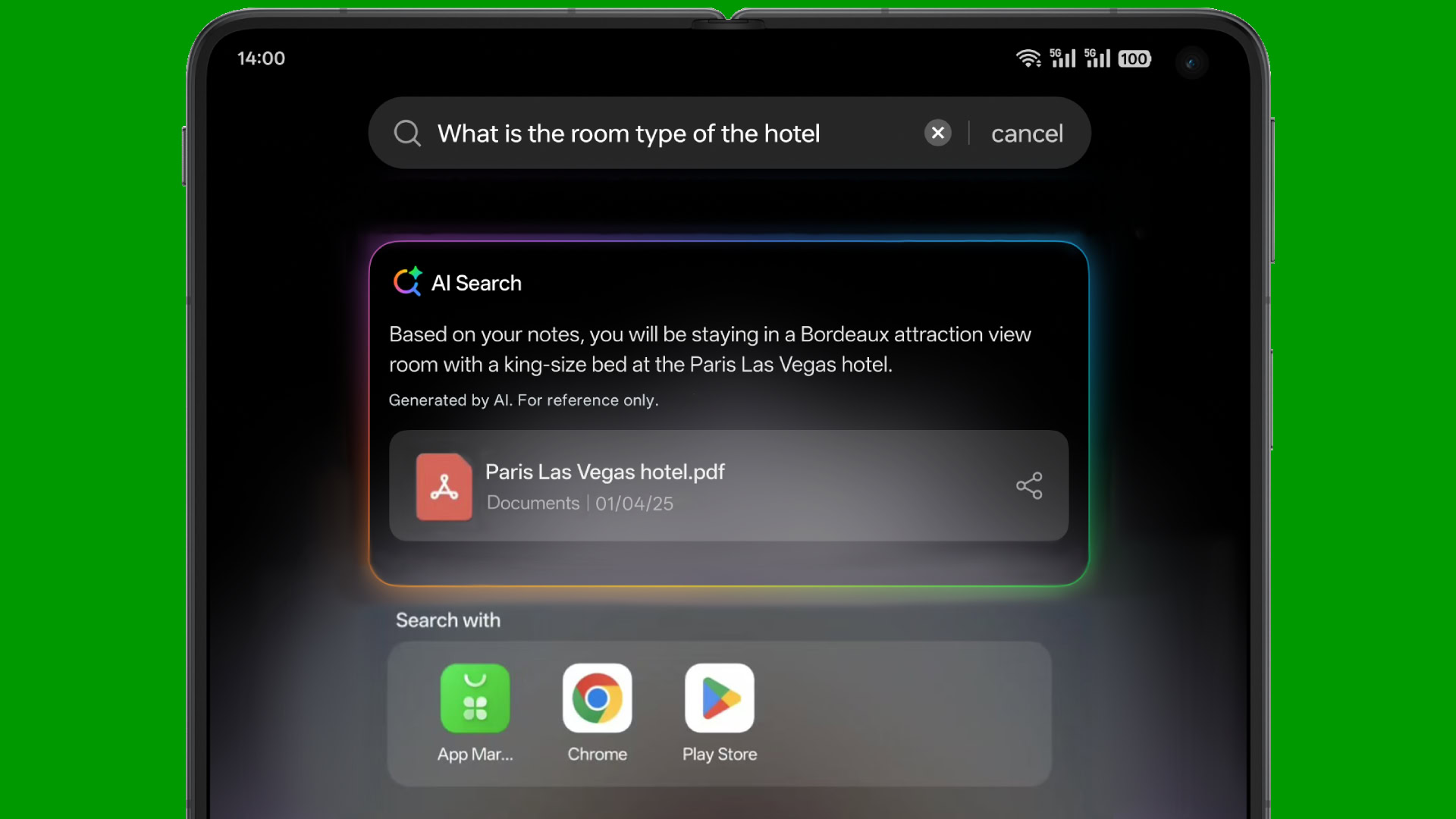

I wish Samsung's Galaxy AI search worked the way OPPO's does

C. Scott Brown, April 11, 2025
