Affiliate links on Android Authority may earn us a commission.

Actually, Android IS optimized - Gary explains

Published on January 20, 2017

One of the comments I see repeatedly beneath my “Gary explains” videos is, “but Android isn’t optimized.” This is especially true if the video is about performance or mentions iOS in any way. At the root of this comment is the idea that Apple devices are highly optimized because Apple controls the hardware, the software and the ecosystem, whereas Android is perceived to be a jumble of components from a disparate group of manufacturers and OEMs. Surely Apple’s solution must be better optimized?

Lurking somewhere behind the whole optimization argument is a latent need, for some people, to explain why Apple products are perceived (by some) to be “better” and why (at the moment) Apple is winning the performance race. If only Android were better optimized, the reasoning goes, all their problems and insecurities would go away.

The first thing we need to recognize is that this idea has its foundations in the battle between the Mac and the PC. The argument was the same then. Apple controlled the hardware and the software, and as a result (according to Apple) “it just works.” Microsoft, on the other hand, only controlled the software; the hardware came from Dell, HP, IBM or whoever. And inside those Dell, HP, IBM or whatever PCs was a CPU from either Intel or AMD, a GPU from either ATI (now AMD) or NVIDIA, a hard disk from yet another supplier, and so on. Apple used this idea in its marketing campaigns, and to some extent it was actually true. For the last 20 years, Windows has been all about getting the right drivers and avoiding the dreaded blue screen of death.

Fast forward to today and we have a similar situation. Apple controls the hardware and the software for the iPhone (just like the Mac), but Android is akin to Windows and the PC. Google provides the OS, but the hardware comes from a large group of OEMs including Samsung, Sony, LG, HTC and even Google itself. The SoCs come from Qualcomm, Samsung, MediaTek and HUAWEI. The CPUs in those SoCs come from ARM, Qualcomm or Samsung, while the GPUs come from ARM, Qualcomm and others.

Also consider that Android smartphones come in a huge variety, from sub-$150 low-end phones with small screens, underpowered CPUs and little storage to premium flagship devices with price tags four or five times higher. This means that if you pick the wrong device, it is easy to get a bad Android experience.

But is it true? No. Android is optimized and I can prove it!

Java vs C

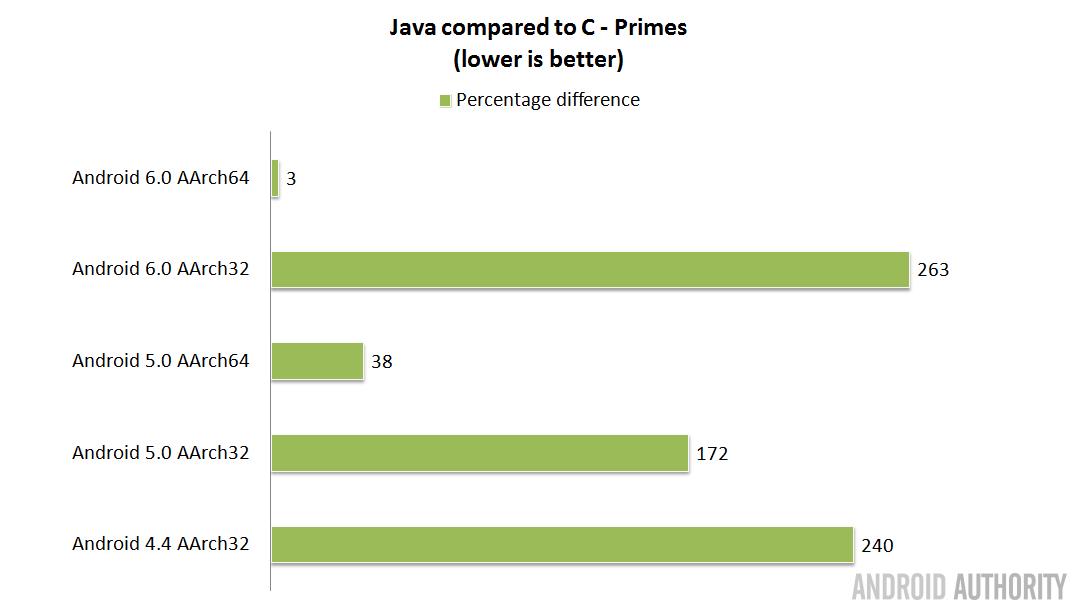

The default language for Android is Java. It is true that Java apps are slower than apps written in C/C++ and compiled to native machine code. However, the real-world speed difference isn’t very large, because a typical app spends more time waiting for user input or network traffic than doing intensive calculations. If you want to know more about the speed difference between Java and C, please see Java vs C app performance – Gary explains.

The first rung on the “Android isn’t optimized” ladder is the idea that iOS apps are faster because they don’t use Java. Taking into account what I just said about real-world speed, it is also worth noting that large parts of Android are actually written in C, not Java! Plus, many (if not all) of the CPU/GPU-intensive apps and games for Android are also written in C. For example, anything that uses one of the popular 3D engines, like Unity or the Unreal Engine, will in fact be a native app and not a Java app.

The conclusion? Firstly, while Java apps are slower than native apps, the real-world speed difference isn’t huge. Secondly, the Android Java runtime (ART, which replaced the Dalvik VM) is improving all the time and now uses sophisticated techniques, such as ahead-of-time and just-in-time compilation, to speed up Java execution. Thirdly, large parts of Android, including the Linux kernel, are written in C and not Java.

Hardware acceleration

The next question is this: does Apple add special instructions to its chips to speed up certain operations? And if it does, why don’t Qualcomm or Samsung? Apple holds an ARM architectural license, which allows it to build ARM-compatible CPUs using its own engineers and technologies. ARM requires that any such CPU is 100% compatible with the relevant instruction set architecture. To verify this, a suite of compatibility tests is run on the processor and the results are verified by ARM. However, as far as I know, the tests can’t and don’t check for any extra instructions specific to just that processor.

This means that, in theory, if Apple found it was always performing certain types of operation, it could add hardware to its processors to perform those tasks in hardware rather than software. The idea is that tasks performed in hardware are faster than their software equivalents. A good example is encryption. The ARMv7 instruction set did not have any instructions for performing AES encryption in hardware, so all encryption had to be handled in software. However, the ARMv8 instruction set architecture has special instructions for handling AES in hardware (as part of its optional Cryptography Extension). This means that AES encryption on ARMv8 chips that implement those instructions is much faster than on ARMv7 chips.
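On ARM Linux kernels, Android included, the CPU features a chip supports are listed on the “Features” line of /proc/cpuinfo, and chips with the ARMv8 AES instructions report an “aes” flag there. The Python sketch below simply checks for that flag. The sample strings are illustrative, not captured from a specific device, and the helper names are made up for this example.

```python
def cpu_features(cpuinfo_text: str) -> set:
    """Collect every flag listed on 'Features' lines of /proc/cpuinfo-style text.

    Only ARM-style 'Features' lines are read; x86 kernels use a 'flags' line instead.
    """
    features = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Features":
            features.update(value.split())
    return features


def has_hw_aes(cpuinfo_text: str) -> bool:
    """True if the ARM 'aes' feature flag (hardware AES instructions) is listed."""
    return "aes" in cpu_features(cpuinfo_text)


# Illustrative sample text only, not captured from a specific device.
SAMPLE_ARMV8 = "processor\t: 0\nFeatures\t: fp asimd evtstrm aes pmull sha1 sha2 crc32\n"
SAMPLE_ARMV7 = "processor\t: 0\nFeatures\t: half thumb fastmult vfp edsp neon vfpv3 tls vfpv4\n"

if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("Hardware AES listed:", has_hw_aes(f.read()))
    except OSError:
        print("No /proc/cpuinfo; sample ARMv8 text gives:", has_hw_aes(SAMPLE_ARMV8))
```

In practice, crypto libraries make this check for you and pick the hardware path automatically; the point is only that the capability is visible to software.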

It is conceivable that Apple has added other instructions that perform certain tasks in hardware rather than software. However, there is no proof. Analysis of the binaries produced by Apple’s public compilers, and even a look at the source code of the compilers themselves (they are open source), doesn’t reveal any new instructions.

But that isn’t the whole story. A second way that Apple could add hardware boosts to its processors is by adding special hardware that needs to be programmed and driven in a similar way to how a processor uses a GPU or a DSP. In other words, the compiler, and more importantly the iOS SDK, would be written so that certain types of functions are performed in hardware by setting up some parameters and then getting the hardware to process them.

This is what happens with a GPU. An app loads its triangle information into an area of memory and tells the GPU to work on it. The same process applies to a DSP or an ISP. You can find out more here: What is a GPU and how does it work? – Gary explains.
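The “set up the data, then tell the hardware to process it” pattern can be sketched as a toy Python model: the CPU fills in a job descriptor that points at a data buffer, submits it, and a separate “accelerator” later drains its queue. `Job`, `ToyAccelerator` and the `"scale2"` opcode are hypothetical names invented for this sketch; real GPUs and DSPs are driven through vendor drivers and APIs, not anything like this class.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Job:
    """Hypothetical job descriptor: which operation to run and on which buffer."""
    opcode: str
    data: bytearray          # stands in for a buffer in shared memory
    done: bool = False


@dataclass
class ToyAccelerator:
    """Software stand-in for a GPU/DSP-style block; not a real device API."""
    queue: deque = field(default_factory=deque)

    def submit(self, job: Job) -> None:
        # The CPU only hands over a descriptor; it does not do the work itself.
        self.queue.append(job)

    def run(self) -> None:
        # The "hardware" processes queued jobs in place, then flags them as done.
        while self.queue:
            job = self.queue.popleft()
            if job.opcode == "scale2":
                for i, b in enumerate(job.data):
                    job.data[i] = (b * 2) & 0xFF  # wrap like an 8-bit register
            else:
                raise ValueError(f"unsupported opcode {job.opcode!r}")
            job.done = True


if __name__ == "__main__":
    buf = bytearray([1, 2, 3, 200])
    job = Job("scale2", buf)
    acc = ToyAccelerator()
    acc.submit(job)
    acc.run()
    print(job.done, list(buf))  # prints: True [2, 4, 6, 144]
```

The design choice to model is the split: the CPU’s cost is writing a small descriptor, while the bulk of the work happens elsewhere, which is why offloading pays off for large or repetitive jobs.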

For example (and this isn’t a real-world example, just an illustration), let’s imagine that Apple’s engineers discovered that the SDK was always needing to reverse strings, so that “Apple” became “elppA”. That is easy enough to do in software, but what if Apple made a special hardware unit that could take buffers of, say, 16 bytes and reverse them in only one or two clock cycles? Then whenever a string needed reversing, it could happen in hardware in a fraction of the time, and the overall performance of the processor would increase. A real-world example wouldn’t be strings, but things like facial recognition, machine learning or object detection.
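Here is a Python sketch of that imaginary string-reversal unit. `reverse_block_unit` is a software stand-in for the hypothetical 16-byte hardware block (no such unit is known to exist), and `reverse_with_unit` shows how software would feed a long buffer through it: reversing the whole buffer is the same as reversing the order of the 16-byte chunks and reversing the bytes inside each chunk. It works on bytes, so multi-byte characters are not handled.

```python
BLOCK = 16  # width of the hypothetical reversal unit, in bytes


def reverse_software(s: bytes) -> bytes:
    """Plain software reversal: one byte per step."""
    out = bytearray(len(s))
    for i, b in enumerate(s):
        out[len(s) - 1 - i] = b
    return bytes(out)


def reverse_block_unit(block: bytes) -> bytes:
    """Stand-in for the imaginary hardware unit: reverses up to BLOCK bytes in one go."""
    if len(block) > BLOCK:
        raise ValueError("block too large for the unit")
    return block[::-1]


def reverse_with_unit(s: bytes) -> bytes:
    """Reverse a buffer using the unit: reverse chunk order, then each chunk."""
    chunks = [s[i:i + BLOCK] for i in range(0, len(s), BLOCK)]
    return b"".join(reverse_block_unit(c) for c in reversed(chunks))


if __name__ == "__main__":
    print(reverse_with_unit(b"Apple"))  # prints: b'elppA'
```

A 64-byte string needs 64 steps in `reverse_software` but only four calls to the unit, which is the kind of saving the hypothetical hardware would offer.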

However, there are two things to bear in mind. First, the ARM architecture already has a set of complex instructions, known as NEON, which can work on data in parallel. These Single Instruction, Multiple Data (SIMD) operations use a single instruction to perform the same task, in parallel, on multiple data elements of the same type and size. Second, mobile processors already contain discrete hardware blocks that perform specialist operations: the GPU, the DSP, the ISP, etc.
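To illustrate the SIMD idea, the Python model below treats a 128-bit register as four 32-bit lanes and applies one “instruction” (an add) to all four lanes at once, similar in spirit to NEON’s `vaddq_u32`. This is only a model of the concept; Python does not execute NEON here, and the function names are made up for the example.

```python
from typing import List, Tuple

LANES = 4            # a 128-bit register holds four 32-bit lanes
MASK = 0xFFFFFFFF    # each lane wraps around like a uint32_t

Vec = Tuple[int, ...]


def vadd_u32x4(a: Vec, b: Vec) -> Vec:
    """Model of one SIMD add: the same operation applied to all four lanes."""
    if len(a) != LANES or len(b) != LANES:
        raise ValueError("expected exactly four lanes")
    return tuple((x + y) & MASK for x, y in zip(a, b))


def add_arrays_simd(a: List[int], b: List[int]) -> List[int]:
    """Add two arrays four elements per 'instruction', with a scalar tail."""
    if len(a) != len(b):
        raise ValueError("arrays must have the same length")
    out: List[int] = []
    n = len(a) - len(a) % LANES
    for i in range(0, n, LANES):
        out.extend(vadd_u32x4(tuple(a[i:i + LANES]), tuple(b[i:i + LANES])))
    for i in range(n, len(a)):  # leftover elements handled one at a time
        out.append((a[i] + b[i]) & MASK)
    return out


if __name__ == "__main__":
    print(add_arrays_simd([1, 2, 3, 4, 5], [10, 20, 30, 40, 50]))  # prints: [11, 22, 33, 44, 55]
```

The loop structure (a wide main loop plus a scalar tail for leftovers) mirrors how real SIMD code is typically written.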

The conclusion? Other ARM processors, including those from Qualcomm, Samsung, MediaTek and HUAWEI, already have the ability to shift work out of software and into hardware. For example, Qualcomm provides developers with its Hexagon DSP SDK, which allows apps to use the DSP hardware found in Snapdragon processors directly. Although the Hexagon DSP started out as a traditional digital signal processor, it has expanded beyond audio processing and can be used for image enhancement, augmented reality, video processing and sensor processing.

System integration

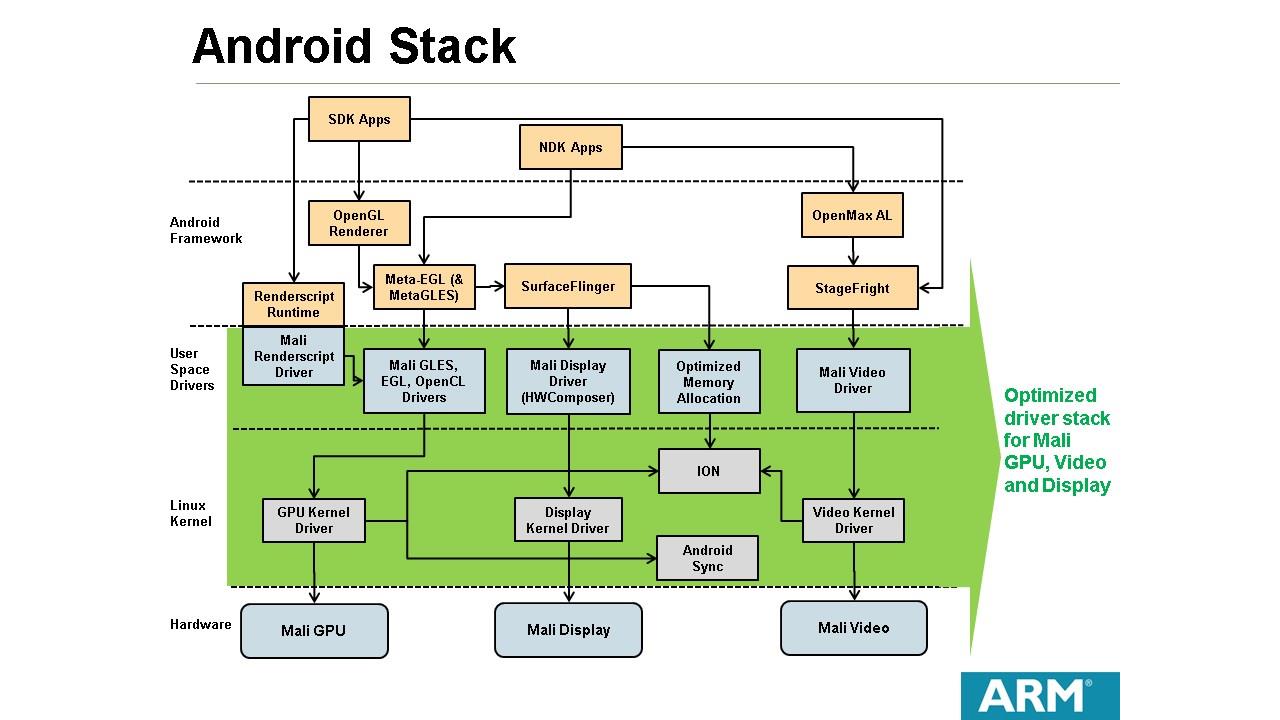

One important aspect of optimization is ensuring that the key components work well together, in other words that the overall system is well integrated. It would be pointless having a very fast GPU if the CPU communicated with it over a serial bus using slow, unoptimized drivers. The same is true of the DSP, ISP and other components.

It is in the interest of SoC manufacturers like Qualcomm and CPU/GPU designers like ARM to ensure that the software drivers needed to use their products are optimized. This works in two ways. Firstly, if ARM licenses a CPU/GPU design to a SoC manufacturer like MediaTek, then the manufacturer can also license the software stack that goes with it. That way, operating systems like Android can be supported on the SoC. It is in both ARM’s and the SoC manufacturer’s interest to make sure that the software stack provided for Android is fully optimized. If it isn’t, the OEMs will soon notice, and that will lead to a significant drop in sales.

Secondly, if a SoC manufacturer like Qualcomm uses its own in-house CPU or GPU design, then it must develop the software stack to support Android. This software stack is then made available to the smartphone OEMs that buy Qualcomm’s processors. Again, if the software stack is sub-optimal, Qualcomm will see a dip in sales.

The conclusion? Companies like Qualcomm and ARM don’t just make hardware; they also write a lot of software!

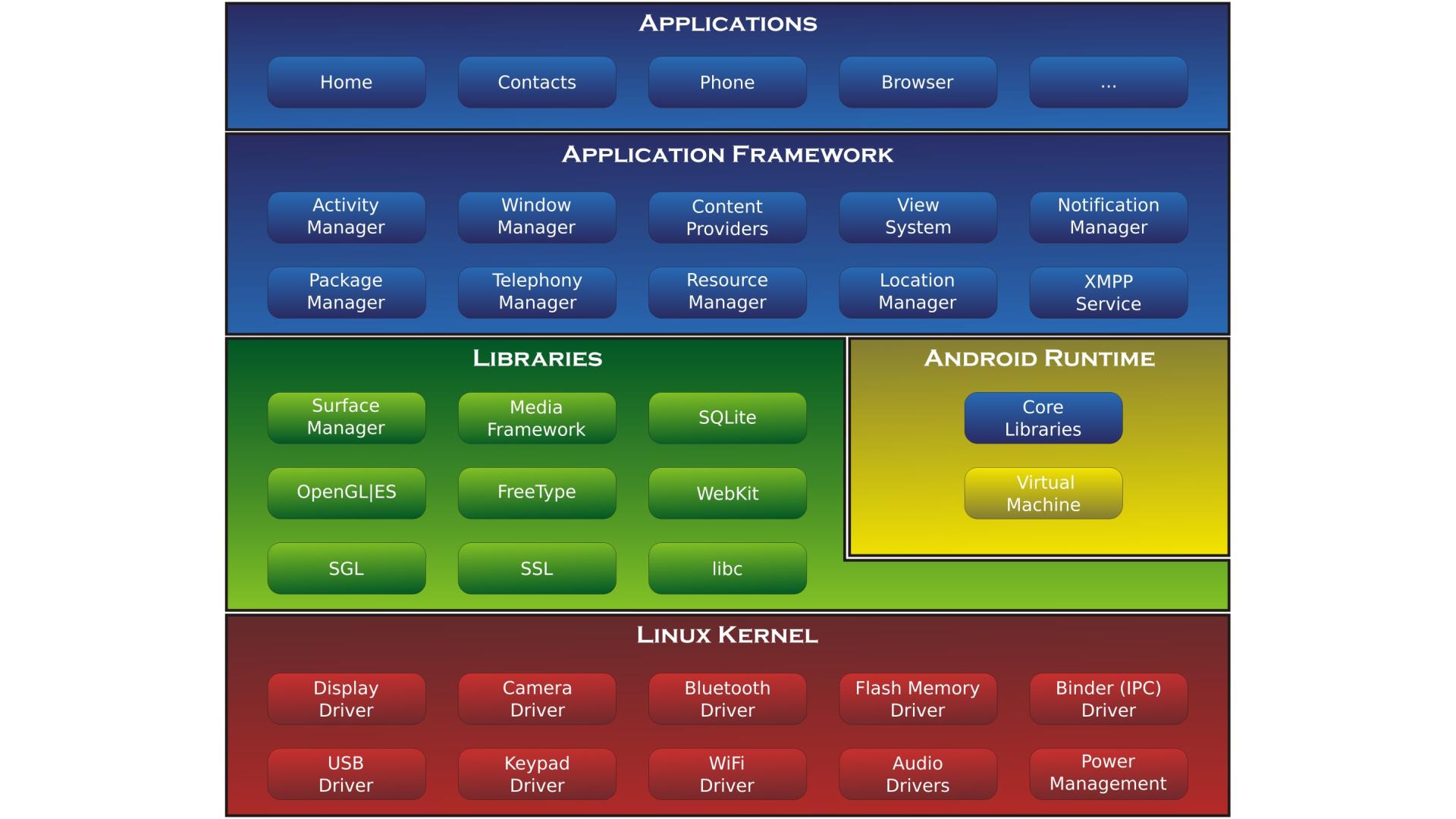

The operating system

But what about Android itself: its internals, subsystems and frameworks? Are they unoptimized? The simple answer is no, and the reasoning is this. Android has been in development since before 2008. It has grown and matured substantially in those years; just look at the differences between Android 2.x and Android 7! It has been implemented on ARM, Intel and MIPS processors, and engineers from Google, Samsung, ARM and many others have contributed to its success. On top of that, the core of Android is open source, which means anyone on the planet can examine and modify the source code.

With so many engineers looking at the code, it is unlikely that any significant code-level optimizations have been overlooked. By code-level optimizations I mean changes to small blocks of code where a slow algorithm is used or where the code has poor performance characteristics.
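As a generic illustration of a code-level optimization (not taken from Android’s source), here are two Python functions that answer the same question, “does this list contain a duplicate?”. The first compares every pair of items, so its work grows with the square of the list length; the second remembers what it has seen in a hash set, so its work grows roughly linearly. This is the kind of small, local fix that many reviewers reading the same code tend to catch.

```python
from typing import Hashable, Sequence


def has_duplicates_slow(items: Sequence[Hashable]) -> bool:
    """O(n^2): compares every pair of items."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_fast(items: Sequence[Hashable]) -> bool:
    """Roughly O(n): keeps a hash set of items already seen."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

Both return the same answer for any list of hashable items; only the amount of work differs.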

But there is also the issue of system-wide optimization: how the system is put together. When you look at Google’s track record in search and advertising, at the infrastructure behind YouTube, and at the complexity of Google’s cloud business, it would be absurd to suggest that Google doesn’t have engineers who know how to build an efficient system architecture.

The conclusion? The Android source code and the Android system design are optimized and efficient.

Wrap-up

Considering everything from the SoC designs, the hardware design, the drivers, the Android OS and the engineers who put it all together, it is hard to find any justification for the idea that Android isn’t optimized. However, that doesn’t mean there isn’t room for improvement, nor does it mean that every smartphone maker will spend as much time (or money) ensuring that its devices have the best drivers and the highest level of system integration.

So why the perception that Android isn’t optimized? I think the answer is threefold: 1) Apple has been pushing the “it just works” concept for many years, and in marketing terms it certainly seems to be a powerful message. 2) Apple is winning the performance race (at the moment), and the whole “Android isn’t optimized” thing seems to be a reaction to that. 3) There is only ever one current iPhone, and that single-mindedness seems to convey optimization, integration and order. The Android ecosystem, by contrast, is vast, diverse, colorful and multifaceted, and that diversity can suggest chaos, which in turn suggests a lack of coherence.

What do you think? Are there any reasons to think Android isn’t optimized? Please let me know in the comments below.