
Android 16 could give Gemini the power over apps Assistant never got

Published on November 21, 2024

- Google is working on a new API for Android 16 that lets system apps perform actions on behalf of users inside applications.

- This new API is guarded by a permission that’ll be granted to the default assistant app, i.e., Gemini on new Android devices.

- This could let Gemini act as an AI agent on your phone, which is something Google originally promised the Pixel 4’s new Google Assistant would do.

Google is giving everything it has to make its Gemini chatbot and large language model more successful, including integrating it across its entire product suite. On Android, Gemini has become the default assistant service on many devices, and the number of things it can do continues to grow with each update. While Gemini can interact with some external services, its ability to control Android apps is very limited at the moment. However, that could change in a big way with next year’s Android 16 release, which is set to include a new API that lets services like Gemini perform actions on behalf of users inside applications.


Gemini Extensions are how Google’s chatbot currently interacts with external services. Extensions give Gemini access to web services like Google Flights, Google Hotels, OpenStax, and more, allowing it to pull in data from these services when you ask it relevant questions. There are also extensions for things like Google Maps, Google Home, YouTube, and Google Workspace, all of which are available as apps on Android. However, these extensions let the chatbot use your account data when calling the backend APIs for these services rather than directly controlling the respective Android apps. Finally, there are some extensions like Utilities that do let Gemini control Android apps directly, but they only let the chatbot perform basic actions using well-defined intents.

The problem with Gemini Extensions is that they aren’t scalable. There are far too many Android apps for Google to make extensions for, not to mention the fact that many apps don’t provide public APIs that Gemini can even tap into. Using a combination of technologies like screen reading, multimodal AI, and accessibility input, Gemini could theoretically let users control any Android app through natural language, but the results likely wouldn’t be very good given the lack of context. A better solution is for Google to provide an API that lets apps work directly with Gemini to execute certain app functionality, which is exactly what Google looks to be doing in Android 16.

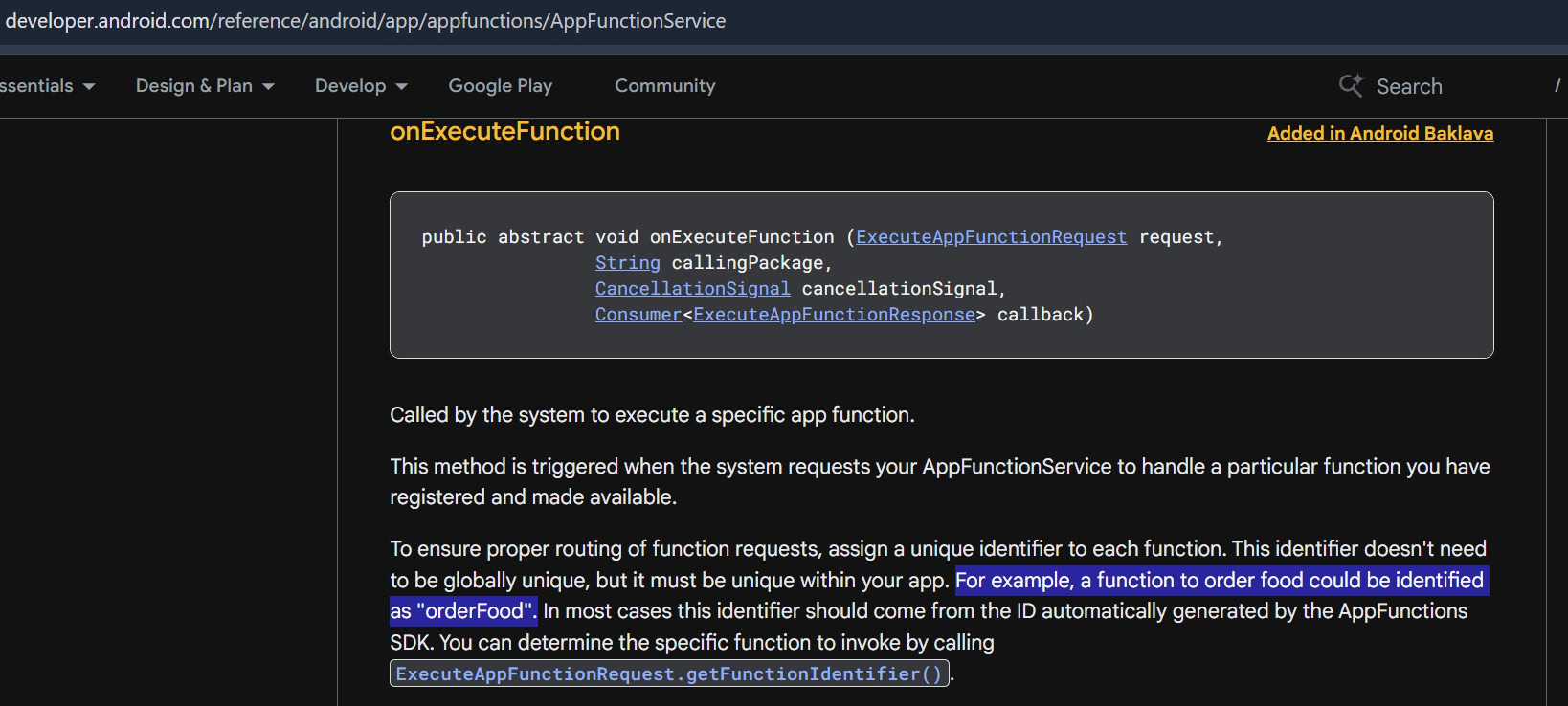

When Google released Android 16 DP1 earlier this week, we spotted a mysterious set of new APIs in Google’s developer docs related to a new feature called “app functions.” According to Google’s documentation, an app function “is a specific piece of functionality that an app offers to the system.” These functionalities can be “integrated into various system features.”

Google’s description of app functions is vague, likely intentionally so, but thankfully, the description of one of the new methods offers an example of an app function. The method description talks about how function identifiers have to be unique within apps, and that, “for example, a function to order food could be identified as ‘orderFood.’” So, for example, a restaurant app could implement an app function to order food, or a hotel app could implement an app function to book a room.
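To make the idea concrete, here is a minimal sketch in plain Java of what "identifiers have to be unique within apps" could look like. It is a toy model for illustration, not the Android 16 API: the `AppFunctionRegistry` class, its methods, and the `com.example.restaurant` package name are all hypothetical. Only the "orderFood" identifier comes from Google's documentation.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical model, NOT the real Android 16 API: one registry per app,
// mapping a function identifier to the code that handles it.
final class AppFunctionRegistry {
    private final String packageName;
    private final Map<String, Function<Map<String, String>, String>> functions =
            new LinkedHashMap<>();

    AppFunctionRegistry(String packageName) {
        this.packageName = packageName;
    }

    // Identifiers must be unique within one app, so a duplicate is rejected.
    void register(String functionId, Function<Map<String, String>, String> handler) {
        if (functions.containsKey(functionId)) {
            throw new IllegalArgumentException(
                    packageName + " already defines function " + functionId);
        }
        functions.put(functionId, handler);
    }

    // Looks up the handler by identifier and runs it with the given arguments.
    String execute(String functionId, Map<String, String> arguments) {
        Function<Map<String, String>, String> handler = functions.get(functionId);
        if (handler == null) {
            throw new IllegalArgumentException(
                    packageName + " has no function " + functionId);
        }
        return handler.apply(arguments);
    }

    public static void main(String[] args) {
        // A made-up restaurant app exposing the "orderFood" example from the docs.
        AppFunctionRegistry restaurant = new AppFunctionRegistry("com.example.restaurant");
        restaurant.register("orderFood", a -> "Ordered " + a.get("item"));
        System.out.println(restaurant.execute("orderFood", Map.of("item", "pizza")));
    }
}
```

In this model, two different apps could each define their own "orderFood" because each app has its own registry, while a second "orderFood" inside the same app is refused.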

Details are limited, but it seems like apps create functions by defining a service that only a system process can bind to. These app functions are exposed to Android’s AppSearch framework, which is the framework that powers the universal search experience in the Pixel Launcher, among other things. App functions can be executed by apps that hold either the EXECUTE_APP_FUNCTIONS or the EXECUTE_APP_FUNCTIONS_TRUSTED permission in Android 16.

While both permissions can only be granted to system applications, the former is currently only granted to system apps that hold the ASSISTANT role (i.e. the Google app), while the latter is currently only granted to system apps that hold the SYSTEM_UI_INTELLIGENCE role (i.e. Android System Intelligence). Both permissions allow applications to “perform actions on behalf of users inside of applications,” but “apps contributing app functions can opt to disallow callers with the” EXECUTE_APP_FUNCTIONS permission, instead only allowing callers with the EXECUTE_APP_FUNCTIONS_TRUSTED permission to execute them.
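The two-tier permission scheme described above can be sketched as a simple check. This is our reading of the documentation expressed as a toy model in plain Java, not Android's actual enforcement code: the `AppFunctionAccess` class, the `FunctionInfo` type, and the `trustedCallersOnly` flag are hypothetical names, and we are assuming that a trusted caller can always execute a function. Only the two permission names come from the Android 16 docs.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical model of the access rules, NOT Android's real implementation.
final class AppFunctionAccess {
    // The two permission names that appear in the Android 16 DP1 docs.
    enum Permission { EXECUTE_APP_FUNCTIONS, EXECUTE_APP_FUNCTIONS_TRUSTED }

    // Hypothetical descriptor: an app may opt a function out of being
    // called by holders of the non-trusted permission.
    static final class FunctionInfo {
        final String id;
        final boolean trustedCallersOnly;

        FunctionInfo(String id, boolean trustedCallersOnly) {
            this.id = id;
            this.trustedCallersOnly = trustedCallersOnly;
        }
    }

    static boolean canExecute(Set<Permission> callerPermissions, FunctionInfo function) {
        // Assumption: trusted callers may execute any app function.
        if (callerPermissions.contains(Permission.EXECUTE_APP_FUNCTIONS_TRUSTED)) {
            return true;
        }
        // Non-trusted callers are allowed unless the app opted out.
        return callerPermissions.contains(Permission.EXECUTE_APP_FUNCTIONS)
                && !function.trustedCallersOnly;
    }

    public static void main(String[] args) {
        // Stand-ins for an ASSISTANT role holder and a SYSTEM_UI_INTELLIGENCE role holder.
        Set<Permission> assistant = EnumSet.of(Permission.EXECUTE_APP_FUNCTIONS);
        Set<Permission> systemIntelligence = EnumSet.of(Permission.EXECUTE_APP_FUNCTIONS_TRUSTED);

        FunctionInfo open = new FunctionInfo("orderFood", false);
        FunctionInfo restricted = new FunctionInfo("orderFood", true);

        System.out.println("assistant, open: " + canExecute(assistant, open));
        System.out.println("assistant, restricted: " + canExecute(assistant, restricted));
        System.out.println("trusted, restricted: " + canExecute(systemIntelligence, restricted));
    }
}
```

Under these assumptions, an assistant app such as Gemini would be locked out only of the functions that developers explicitly reserve for trusted callers, and an app holding neither permission could execute nothing.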

Although a lot of details are missing, it sounds to me like Android 16’s new app functions feature will let Gemini control apps in a way that Google Assistant never managed to do. Back in 2019, Google teased how its “new Google Assistant” could orchestrate tasks across apps. You could use your voice to multitask across apps and perform complex actions like replying to an incoming text via voice, and then following up by sending a photo.

What Google unveiled back in 2019 sounded a lot like what AI agents today promise to do, and given everything Google has been working on this past year, it shouldn’t be shocking to hear Google revisit this idea. Hopefully, app functions in Android 16 enable Gemini to become a true AI agent for your Android phone, but from what we can see, that will depend on whether or not app developers get on board with the idea.