
24 hours with Apple Intelligence: Heavy on the Apple, light on the Intelligence

October 31, 2024

At long last, the day has come — Apple Intelligence has arrived. Alright, some of Apple Intelligence has arrived for US-based users who updated to iOS 18.1 and hopped on an additional waitlist. But I digress. After having had my iPhone 16 Pro for over a month, I guess something is better than nothing. When I saw that my phone was ready for the update, I jumped at the chance to try Apple’s long-awaited response to Google’s Gemini Nano and Samsung’s Galaxy AI. After all, I figured Apple would have a good level of polish to its chosen features after making us wait so long for them, right? Well, here’s how my first few experiences with Apple Intelligence have actually been going.

Siri is… somehow more annoying

One of Apple’s main selling points for Apple Intelligence was that it would completely reimagine Siri. Sound familiar? This is much like what Google had planned for its transition from Google Assistant to Gemini. Essentially, Siri would go from a generic voice assistant that could set timers and answer questions to a more personal assistant with the ability to draw in-depth context from your queries to create better responses. Oh, and it would come with a shiny new animation meant to show just how integral Siri is to your iPhone. It’s precisely the type of thing that failed to convince me when Google talked about it, only for the Pixel 9’s Gemini to win me over.

So, with my positive Gemini experience in mind, I long-pressed my iPhone’s power button and marveled at the new animation. If nothing else, Apple was right — now it feels like Siri is ready to take over your device. The new animation puts a rainbow halo around your entire display, making it clear that Siri will at least try to do something to answer your question. Of course, you still have to speak to Siri when you use the power button to activate it — which isn’t my favorite — so I decided to try Type to Siri and keep my dumber questions locked up inside.

Type to Siri is handy, but I can't stop accidentally launching it.

Once again, Apple’s motivation behind Type to Siri is great — sometimes you want to ask your assistant a question, but you’re not in a place where you can do so. If you’re in a library, a museum, a train, or just somewhere else quiet, you’d probably love the option to type out your query and read Siri’s response rather than hear it out loud. With Type to Siri, you can do just that. In my experience, it’s as responsive as regular Siri, just quieter.

However, my problem with Type to Siri is how you access it. When you want to ask your assistant a question, a simple double-tap on the bottom edge of your screen opens the Siri-powered keyboard — sounds simple, right? It is — maybe too simple. Unfortunately, I’ve run into the same issue I used to have with some of the sharper waterfall displays on Android phones in that Type to Siri is almost too easy to activate.

I’ve only had Apple Intelligence for a few days, and I can no longer count the number of times I’ve been scrolling Instagram or X, using my little finger to prop up the bottom edge of my phone, only to have Siri’s golden animation activate and its keyboard consume half of my display. At first, I thought it was activating because it thought I wanted to translate text on my screen, only to realize later that, no, the gesture is just that easy to trigger.

In a brutal, unfortunate way, Type to Siri has become about as annoying as Microsoft’s Clippy in terms of popping up when I don’t want it to — but at least it can usually help me when I need it.

Perhaps the one piece of my Siri experience that I didn’t expect was the change to the assistant’s default voice. It got a slightly more natural-sounding refresh, which I didn’t realize until I fired up Apple Maps for a little help finding the fastest way to the airport. I don’t mind the update, but it falls further into uncanny valley territory by sounding a bit more human yet decidedly more robotic than Google’s Gemini Live voices.

Notification summaries are great, Smart Reply is not

While my experience with an updated Siri left me feeling lukewarm at best, it didn’t ruin my first taste of Apple Intelligence. I’m not the biggest user of voice assistants as it is, so I was mostly giving Siri some run just to see what was truly different. What I was interested in checking out, however, was how the rest of Apple’s tools would shake out — specifically, the ones that could keep me off my phone just a little bit more.

In previous versions of iOS, I often found myself overwhelmed by how Apple handled notifications. It simply let them pile up from each app, eventually turning them into a stack whenever it decided you had enough to catch up on. However, if those notifications included several messages from a 20-person group chat, you still had to go back and read them all to get important context on whatever your friends or coworkers had planned. Now, with Apple Intelligence, your iPhone automatically cooks up a quick summary of your notifications when you have more than two from the same source, and it’s incredible.

Notification summaries are — by far — the best part of Apple Intelligence.

More than anything else, Apple’s notification summaries have sold me on Apple Intelligence, and I’m not exaggerating. They work in almost every app, sending you summaries from Messages and Mail and even running through a list of Instagram reels that one of your friends might have sent you. It’s not a long summary, either. I usually get two or three bullet points to bring me up to speed.

That said, a few of the summaries could use some work. One of my friends remarked to me that they had to hold off on getting a flu shot until 6:00 PM so as not to feel sick at work, and Apple Intelligence somehow turned that into saying that they couldn’t arrive until after 6:00 PM. It’s technically the truth, but the summary skipped some vital context.

Apple Intelligence also added priority notifications to Mail, yet another feature I’m quickly learning to love. It pulls specific emails to the top of your feed, putting particular emphasis on those that remind you when a payment is due and those that require a response by a specific date. Apple’s priority heads-up also takes on the same colors as the revamped Siri animation, which at least helps to make it far more visible.

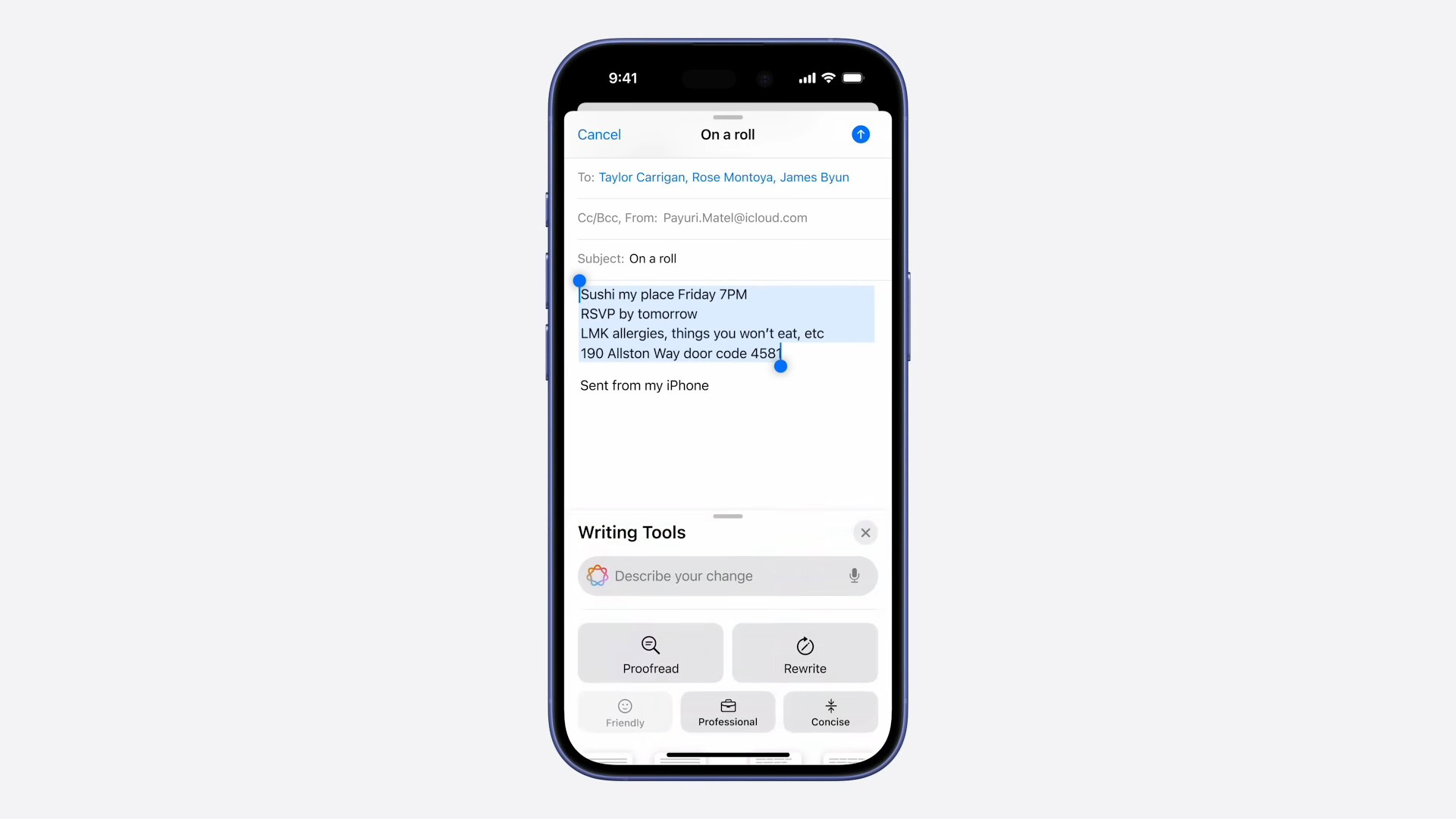

Of course, the other side of using Apple to organize your notifications is that iOS 18.1 wants to help you respond to said notifications. It’s again an experience similar to Google’s Help Me Write, but Apple prefers to call it Writing Tools. Either way, I’ve skipped out on the summarizing and rephrasing paragraphs only because I don’t usually type out long messages from my iPhone 16 Pro — especially when I have a laptop just a few feet away.

Apple's Smart Reply suggestions are too generic for my liking.

Where I keep running into Apple’s very insistent assistance, however, is with the Smart Reply options it generates in Messages. Like everything else, they pop up in the colorful Apple Intelligence scheme, which is fine, but the content of the suggestions is entirely too generic for my liking. They’re mostly three-word responses that either agree or disagree with something, which works for short messages but not so much for longer conversations where you need to type out more information.

Clean Up needs some cleaning up

Since we’re following the same three main categories that Google and Samsung focused on for their respective AI rollouts, we may as well bring Apple Intelligence home with some generative editing. So far, the only real generative tool that Apple has unleashed is the earliest stage of its competitor to Google’s Magic Editor, dubbed Clean Up. Top to bottom, it feels about the same as the tool that Google has been fine-tuning for a few years, but it’s also pretty apparent that this is Apple’s first swing at the magic of editing.

What I mean is this — Clean Up isn’t great. Well, the process of using it is fine — simply start editing an image in Photos and tap the Clean Up eraser to get started. From there, Apple Intelligence will automatically pick out elements that it thinks you might want to erase, at which point you can simply tap on them to send them into the void. So far, so good; everything is easy enough to follow. The problem occurs once you start to erase things.

For example, I caught a photo of a marathon runner dressed up in a disco ball (an incredible feat, if ever there was one), and I decided to get rid of the crowds behind him so you could tell he was the main character. So, I followed the steps above, selected the people I wanted to remove, and waited a few seconds. Sure enough, they were gone, but what remained in their place looked like something that would give H.P. Lovecraft nightmares. I simply don’t know how to explain the rainbow-colored ball that now sits where a man in a black jacket previously sat, nor do I have a great explanation for why Apple Intelligence left the legs and feet of those spectators unchanged behind the banners lining the track.

Granted, the early days of Magic Eraser and Object Eraser felt the same as Apple’s Clean Up — vaporizing objects but leaving plenty of artifacts in their wake — but it’s not an excuse for Apple. I say that because Google has offered Magic Eraser in one form or another since the Pixel 6 series, which launched in 2021. Google even beat Apple to the punch of putting an eraser feature on an iPhone when it opened up support for non-Pixel devices in early 2023. So, having an in-house solution called Clean Up that still needs some cleaning doesn’t give me a warm, fuzzy feeling about the current state of Apple Intelligence.

And still, I know that I can’t render a final verdict on Apple Intelligence just yet. Apple has only rolled out the first few features as part of iOS 18.1, leaving some more exciting generative tools, like the Image Playground, for later updates. I also understand that Apple will probably work to fix and improve the features that have launched, which is perhaps a key reason behind only adding a few at a time. When that happens, it might prove to be a solid alternative to Gemini or Galaxy AI, but for now, Apple Intelligence seems pretty heavy on the Apple and kind of light on the Intelligence.
