Affiliate links on Android Authority may earn us a commission.

Arm Cortex-X3 and Cortex-A715: Next-gen CPUs redefined

Published on April 18, 2023

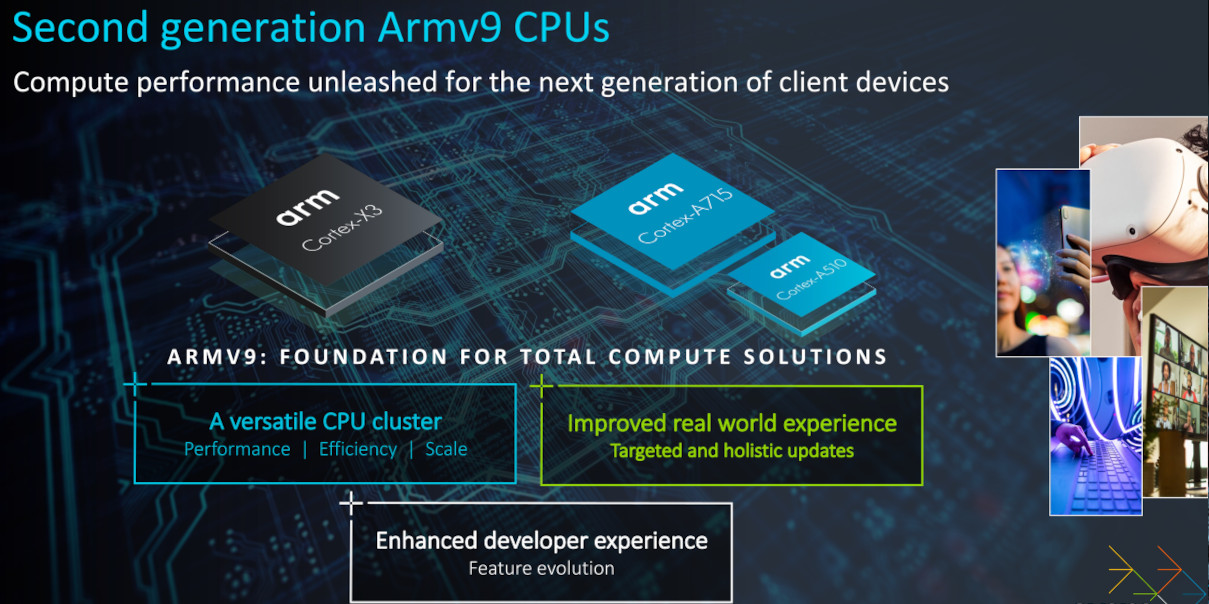

Every year, Arm unveils the latest CPU and GPU technologies that will power Android smartphones and gadgets the following year. In 2022, we were treated to a new lineup: the Armv9 Cortex-X3 powerhouse, the mid-core Cortex-A715, and a refresh of the energy-efficient Cortex-A510 first announced in 2021.

We were invited to Arm’s annual Client Tech Day to learn all about the ins and outs of what’s coming down the pipeline. Let’s go deep into what’s new.

The headline figures

If you’re after a summary of what to expect next year, here are the key numbers.

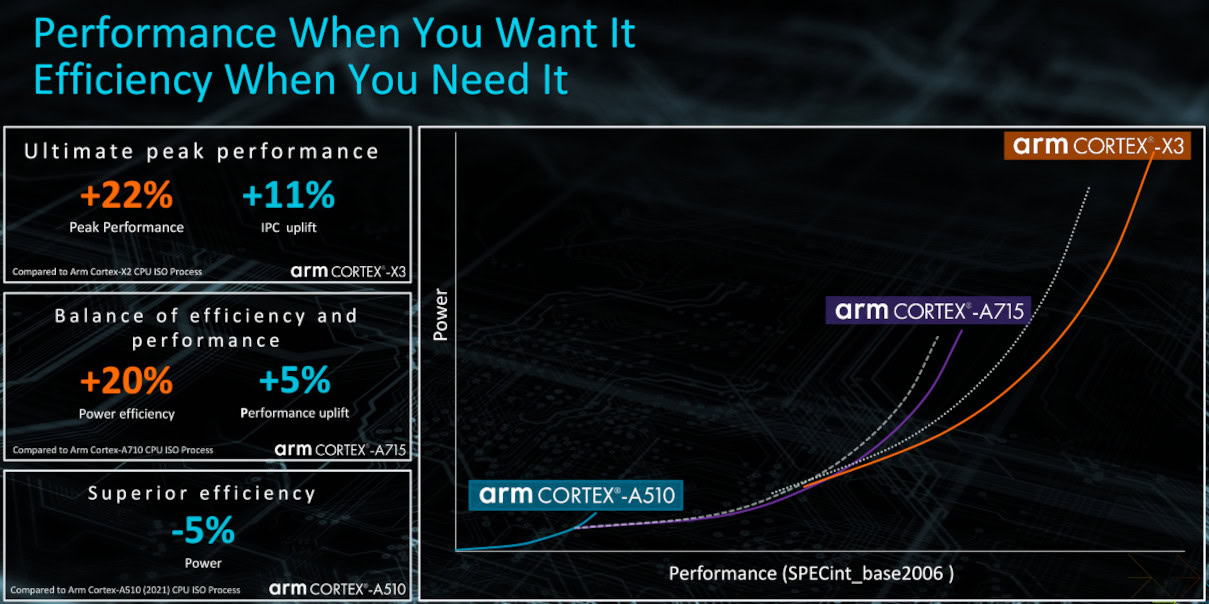

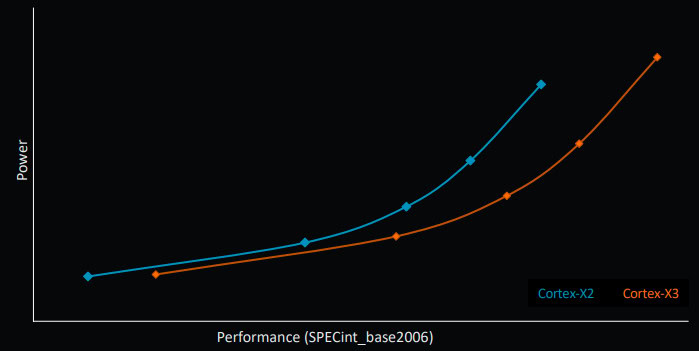

The Cortex-X3 is Arm’s third-generation X-series high-performance CPU core, following the Cortex-X1 and X2. As such, peak performance is the aim of the game. Arm boasts that the Cortex-X3 provides an 11% performance uplift over the Cortex-X2 when both use the same process, clock speed, and cache setup (an ISO-process comparison). That gain rises to 25% once we factor in the anticipated benefits of moving to upcoming 3nm manufacturing processes. Arm expects the core to go even further in the laptop market, where it claims up to a 34% performance gain over a mid-tier Intel Core i7-1260P. The Cortex-X3 won’t catch Apple’s M1 and M2, but it looks to close the gap.
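Because these uplifts stack on top of one another, a quick sanity check is to treat them as multiplicative rather than additive. The sketch below assumes that simple compounding model (our assumption, not Arm’s stated methodology) and backs out how much of the 25% figure would have to come from clocks and process once the 11% ISO-process gain is removed. The helper names are hypothetical.

```python
def compound(*gains: float) -> float:
    """Combine fractional gains multiplicatively, e.g. compound(0.10, 0.10) is about 0.21."""
    total = 1.0
    for gain in gains:
        total *= 1 + gain
    return total - 1


def residual_gain(total_gain: float, known_gain: float) -> float:
    """Gain left unexplained after removing `known_gain`, assuming gains compound."""
    return (1 + total_gain) / (1 + known_gain) - 1


# Figures from the article: +11% at ISO-process, up to +25% overall.
print(f"Needed from clocks/process: {residual_gain(0.25, 0.11):.1%}")
# Assumption: the only extra factor is the expected ~3.3GHz vs ~3.0GHz clock speed.
print(f"11% IPC plus a 10% clock bump: {compound(0.11, 3.3 / 3.0 - 1):.1%}")
```

With these inputs the script prints 12.6% and 22.1%. Under this deliberately simple model, treat the numbers as a rough plausibility check rather than a breakdown Arm has published.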

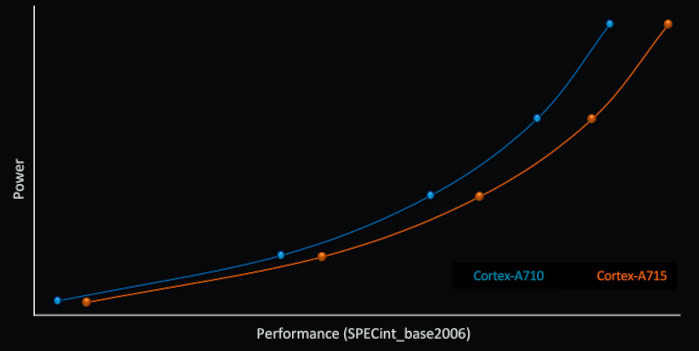

Cortex-A715 improvements are a little more conservative, with this year’s design focused more heavily on efficiency optimizations. Arm calculates a 5% performance boost over the Cortex-A710 in an ISO-process comparison. However, the touted 20% improvement in power efficiency is a much more tantalizing metric that should translate into notable battery life gains. It’s even better when you consider that, according to TSMC, the move from 5nm to 3nm should provide a further 20-30% efficiency improvement at the same performance. Taking the efficiency angle even further, Arm is refreshing last year’s little Cortex-A510 with a 5% power reduction over the first iteration.

Overall then, Arm is aiming to maximize the benefits of its bigger, big, and little CPU portfolio. We’re looking at higher peak and better-sustained performance while also boosting the power efficiency of cores running background tasks. Sounds good on paper, but how has Arm done it?

Arm Cortex-X3 deep dive

Before getting into the micro-architecture changes, there are a few things worth noting about the X3. Arm is now firmly committed to its 64-bit-only roadmap, so the Cortex-X3 is an AArch64-only core, just like its predecessor. Arm says it has focused on optimizing the design now that legacy AArch32 support has been removed. Importantly, the Cortex-X3 remains on the same version of the Armv9 architecture as the Cortex-X2, making it ISA-compatible with the existing cores.

Achieving year-on-year double-digit performance gains for the Cortex-X3 is no mean feat, and this time Arm has accomplished it largely through work on the core’s front end. In other words, Arm has optimized how it keeps the core’s execution units fed with work, allowing them to better reach their potential. This is helped, in part, by the more predictable nature of AArch64 instructions.


Specifics on the front end include improved branch prediction accuracy and lower latency, thanks to a new dedicated structure for indirect branches (branches whose target address comes from a register or pointer rather than being encoded in the instruction). The Branch Target Buffer (BTB) has grown significantly to capitalize on the high accuracy of Arm’s branch prediction algorithms. There’s a 50% increase in L1 BTB capacity and a 10x larger L0 BTB. The latter lets the core realize performance gains in workloads where the BTB hits often. Because of the BTB’s overall size, Arm has also added a third level, an L2 BTB.

CPU branch predictors are built to anticipate the outcome of branches, such as those in loops and if statements, with the aim of keeping as many of the CPU’s execution units active as possible to realize high performance and efficiency. Loop branches are often taken repeatedly within a program; predicting them ahead of time and fetching the instructions that follow is faster than waiting to fetch them from memory on demand, particularly in out-of-order CPU cores.
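To make the loop case concrete, here is the classic textbook 2-bit saturating-counter predictor applied to a loop’s backward branch. It is a teaching sketch only: Arm’s actual predictors are far more sophisticated and not described at this level, and the class and helper names are hypothetical.

```python
class TwoBitPredictor:
    """Textbook 2-bit saturating counter: states 0-1 predict not-taken, 2-3 predict taken."""

    def __init__(self) -> None:
        self.counter = 2  # start "weakly taken" (an arbitrary choice for this sketch)

    def predict(self) -> bool:
        return self.counter >= 2

    def update(self, taken: bool) -> None:
        # Saturate at 0 and 3 so a single surprise doesn't flip a strong prediction.
        if taken:
            self.counter = min(self.counter + 1, 3)
        else:
            self.counter = max(self.counter - 1, 0)


def loop_branch_outcomes(iterations: int, runs: int) -> list[bool]:
    """A loop's backward branch is taken every iteration except the final exit."""
    return ([True] * (iterations - 1) + [False]) * runs


def accuracy(outcomes: list[bool]) -> float:
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        correct += predictor.predict() == taken
        predictor.update(taken)
    return correct / len(outcomes)


print(f"{accuracy(loop_branch_outcomes(iterations=10, runs=100)):.0%}")  # 90%
```

The only miss per run is the loop exit, so a 10-iteration loop is predicted correctly 90% of the time; longer loops push that figure higher.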

A Branch Target Buffer (BTB) is a cache-like table within the predictor that stores the target addresses (or target instructions) of previously seen branches. The bigger the BTB, the more branches it can track for future predictions, at the cost of silicon area.
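The size trade-off can be illustrated with a toy BTB modelled as a fully associative table with LRU replacement. That is a simplification (real BTBs are set-associative and multi-level, and Arm hasn’t published its replacement policy), and the branch addresses and helper names below are hypothetical. When a loop’s hot branches outnumber the entries, every lookup can miss; once the table is big enough to hold them, everything after the first pass hits.

```python
from collections import OrderedDict
from typing import Optional


class BranchTargetBuffer:
    """Toy fully associative BTB with LRU replacement, mapping branch PC -> target."""

    def __init__(self, entries: int) -> None:
        self.entries = entries
        self.table: "OrderedDict[int, int]" = OrderedDict()
        self.hits = 0
        self.lookups = 0

    def lookup(self, pc: int, actual_target: int) -> Optional[int]:
        """Return the cached target (None on a miss), then record the real target."""
        self.lookups += 1
        predicted = self.table.get(pc)
        if predicted is not None:
            self.hits += 1
            self.table.move_to_end(pc)      # mark as most recently used
        self.table[pc] = actual_target      # insert or refresh the entry
        if len(self.table) > self.entries:
            self.table.popitem(last=False)  # evict the least recently used entry
        return predicted

    def hit_rate(self) -> float:
        return self.hits / self.lookups if self.lookups else 0.0


def loop_over_branches(btb_entries: int, hot_branches: int, passes: int) -> float:
    """Repeatedly walk `hot_branches` distinct branches, like a large loop body."""
    btb = BranchTargetBuffer(btb_entries)
    for _ in range(passes):
        for i in range(hot_branches):
            pc = 0x1000 + 4 * i          # hypothetical branch addresses
            btb.lookup(pc, pc + 0x40)    # hypothetical branch targets
    return btb.hit_rate()


print(loop_over_branches(btb_entries=64, hot_branches=100, passes=10))   # 0.0
print(loop_over_branches(btb_entries=128, hot_branches=100, passes=10))  # 0.9
```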

To understand this change, note that Arm’s branch predictor operates as a decoupled instruction prefetcher, running ahead of the rest of the core to minimize pipeline stalls (bubbles). This can become a bottleneck in workloads with a large code footprint, and Arm wants to get the most performance out of the predictor’s silicon area. Increasing the size of the BTB, particularly at L0, keeps more correct instructions ready to fill the instruction queue, resulting in fewer taken-branch bubbles and higher CPU performance.
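One rough way to see why a bigger L0 BTB matters is to weight each BTB level by how often taken branches are found there and how many bubble cycles that costs. Every number below (hit fractions, per-level bubble counts, and the miss cost) is hypothetical and chosen only for illustration; Arm hasn’t published these figures in this article.

```python
def avg_taken_branch_bubbles(levels: list[tuple[float, int]], miss_bubbles: int) -> float:
    """Expected bubble cycles per taken branch.

    levels: (fraction of taken branches first found at this BTB level,
             bubble cycles when found there), ordered L0, L1, L2...
    Branches found in no level cost `miss_bubbles`.
    """
    found = sum(fraction for fraction, _ in levels)
    if found > 1 + 1e-9:
        raise ValueError("hit fractions must not sum to more than 1")
    expected = sum(fraction * bubbles for fraction, bubbles in levels)
    return expected + (1 - found) * miss_bubbles


# Hypothetical profiles: the same code with a small vs a much larger L0 BTB.
small_l0 = [(0.50, 0), (0.40, 1), (0.08, 2)]
large_l0 = [(0.80, 0), (0.15, 1), (0.04, 2)]
print(f"{avg_taken_branch_bubbles(small_l0, miss_bubbles=10):.2f}")  # 0.76
print(f"{avg_taken_branch_bubbles(large_l0, miss_bubbles=10):.2f}")  # 0.33
```

Shifting hits toward the zero-bubble level shrinks the average, which is the effect the larger L0 BTB is meant to have; the size of the real improvement depends entirely on the workload.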

The Cortex-X3 focuses on heavy front-end optimizations that pay dividends downstream in the execution core.

To that end, Arm has also extended the fetch depth, allowing the predictor to run further ahead and make use of the larger BTB. Again, this serves the goal of reducing stalls in the instruction pipeline, cycles in which the CPU does nothing useful. Arm claims the overall result is a 12.2% average latency reduction for predicted taken branches, a 3% reduction in front-end stalls, and a 6% reduction in mispredictions per thousand branches.

There’s now also a smaller, more efficient micro-op (decoded instruction) cache. At half the size of the X2’s, it’s back down to the same 1.5K entries as the X1, thanks to an improved fill algorithm that reduces thrashing. The smaller mop-cache has also allowed Arm to cut the total pipeline depth from 10 to nine cycles, reducing the penalty when a branch is mispredicted and the pipeline is flushed.

TL;DR: More accurate branch prediction, bigger branch target buffers, and a lower penalty for mispredicts result in higher performance and better efficiency by the time instructions reach the execution engine.

Instructions make their way through the CPU in a “pipeline,” from fetch and decode to execution and write-back. A stall, or bubble, occurs when a pipeline stage holds no valid instruction, leaving nothing to execute and wasting a CPU clock cycle.

This can be intentional, as with a NOP instruction, but is more often the result of flushing the pipeline after a branch mispredict. The incorrectly prefetched instructions must be removed from the pipeline, and the correct instructions fetched and fed in from the beginning. A long pipeline loses many cycles to each mispredict, while a shorter pipeline can be refilled with instructions more quickly.
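This cost can be put into numbers with a back-of-the-envelope model: cycles lost equal the number of mispredicts times the flush penalty. The sketch assumes the penalty is roughly the pipeline depth and that nothing overlaps with the refill (both simplifications), and the workload figures are hypothetical. Only the 10-to-nine-cycle depth and the 6% misprediction reduction come from this article.

```python
def flush_cycles_per_kilo_instr(branches_per_kilo_instr: float,
                                mispredicts_per_kilo_branch: float,
                                flush_penalty_cycles: int) -> float:
    """Cycles lost to pipeline flushes per 1,000 instructions.

    Simplification: each mispredict costs a fixed flush penalty roughly equal
    to the pipeline depth, with no useful work overlapping the refill.
    """
    mispredicts = branches_per_kilo_instr * mispredicts_per_kilo_branch / 1000
    return mispredicts * flush_penalty_cycles


# Hypothetical workload: 200 branches per 1,000 instructions,
# 50 mispredicts per 1,000 branches.
print(f"{flush_cycles_per_kilo_instr(200, 50, 10):.1f}")  # 10-cycle pipeline: 100.0
print(f"{flush_cycles_per_kilo_instr(200, 50, 9):.1f}")   # 9-cycle pipeline: 90.0
# Also applying a 6% cut in mispredictions (50 -> 47 per 1,000 branches):
print(f"{flush_cycles_per_kilo_instr(200, 47, 9):.1f}")   # 84.6
```

In this model, one fewer pipeline stage removes a tenth of the flush cost outright, and better prediction compounds on top of it.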

All that isn’t to say Arm hasn’t made any changes to the rest of the core, although these are more incremental.

Fetching from the instruction cache has been boosted from 5 to 6 wide, alleviating pressure in workloads where the mop-cache misses often. The execution engine now has six ALUs, up from four, with the two additions being single-cycle ALUs for basic math. The out-of-order window is larger too, allowing up to 640 instructions in flight at any one time, up from 576. Overall, the pipeline is slightly wider, helping to extract more instruction-level parallelism.
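Why a bigger out-of-order window helps can be sketched with Little’s law: to keep dispatching at full width while an old instruction (say, a load that missed in cache) blocks retirement for N cycles, the window must hold roughly width × N instructions. The 640-entry window and 6-wide dispatch come from this article; the stall lengths are hypothetical, and the model ignores every other limit (register files, load/store queues, and so on).

```python
X3_OOO_WINDOW = 640  # instructions in flight, from the article
X3_WIDTH = 6         # instructions dispatched per cycle, from the article


def entries_to_cover_stall(width: int, stall_cycles: int) -> int:
    """Little's law: occupancy = throughput (instructions/cycle) x latency (cycles)."""
    return width * stall_cycles


for stall in (50, 100, 120):  # hypothetical long-latency load, in cycles
    needed = entries_to_cover_stall(X3_WIDTH, stall)
    verdict = "fits" if needed <= X3_OOO_WINDOW else "window fills, dispatch stalls"
    print(f"{stall:>3}-cycle stall needs {needed} entries: {verdict}")
```

Widening the core raises the entries needed for the same stall, which is one reason the window has grown alongside dispatch width.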

Back-end improvements include 32 bytes of integer loads per cycle (up from 24 bytes), a 25% larger window for the load/store structures, and two additional data prefetch engines to handle spatial and pointer/indirect data access patterns. So again, it’s wider and faster in the back end too.

| Arm Cortex-X Evolution | Cortex-X3 | Cortex-X2 | Cortex-X1 |
|---|---|---|---|
| Expected mobile clock speed | ~3.3GHz | ~3.0GHz | ~3.0GHz |
| Instruction dispatch width | 6 | 5 | 5 |
| Instruction pipeline length (cycles) | 9 | 10 | 11 |
| OoO execution window | 640 (2x 320) | 576 (2x 288) | 448 (2x 224) |
| Execution units | 6x ALU (4 SX + 2 MX) | 4x ALU (2 SX + 2 MX) | 4x ALU (2 SX + 2 MX) |
| L1 cache | 64KB | 64KB | 64KB |
| L2 cache | 512KB / 1MB | 512KB / 1MB | 512KB / 1MB |

The table above helps put some of the general trends into perspective. Between the Cortex-X1 and X3, Arm has not only increased the instruction dispatch width, OoO window size, and number of execution units to expose more parallelism, but has also steadily shortened the pipeline to reduce the performance penalty for mispredictions. Together with this generation’s focus on front-end improvements, these changes show Arm pushing for CPU designs that are not only more powerful but also more efficient.
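The X1-to-X3 trends in the table can be quantified with a few lines of arithmetic. The values are transcribed from the table; the dictionary layout and helper name are just our choices for this sketch.

```python
# Values transcribed from the Cortex-X evolution table in this article.
SPECS = {
    "dispatch width": {"X1": 5, "X2": 5, "X3": 6},
    "pipeline length": {"X1": 11, "X2": 10, "X3": 9},
    "OoO window": {"X1": 448, "X2": 576, "X3": 640},
    "ALUs": {"X1": 4, "X2": 4, "X3": 6},
}


def pct_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new` (negative means it shrank)."""
    return (new - old) / old * 100


for metric, by_core in SPECS.items():
    vs_x2 = pct_change(by_core["X2"], by_core["X3"])
    vs_x1 = pct_change(by_core["X1"], by_core["X3"])
    print(f"{metric:<16} vs X2: {vs_x2:+6.1f}%   vs X1: {vs_x1:+6.1f}%")
```

Over two generations, the OoO window grows by about 43% while the pipeline gets about 18% shorter, which puts numbers on the “wider but shallower” direction described above.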

Arm Cortex-A715 deep dive

Arm’s Cortex-A715 supersedes the previous-generation Cortex-A710, continuing to offer a more balanced approach to performance and energy consumption than the X-series. It’s still a heavy-lifting core, though, with Arm stating that the A715 provides the same performance as the older Cortex-X1 core when equipped with the same clock and cache. Just like the Cortex-X3, the bulk of the A715’s improvements are found in the front end.

One of the more noteworthy changes compared to the A710 is that the new core is 64-bit only. Dropping AArch32 instructions has allowed Arm to shrink its instruction decoders to a quarter of their previous size, and all of the decoders now handle NEON, SVE2, and other instructions. Overall, they’re more efficient in terms of area, power, and execution.

The Cortex-A715 is Arm's first 64-bit-only middle core.

While Arm was revamping the decoders, it widened the path from the i-cache to five instructions per cycle, up from four, and moved instruction fusion from the mop-cache into the i-cache; both changes optimize for code with a large instruction footprint. The mop-cache is now gone entirely. Arm notes that it wasn’t hitting often enough in real workloads to be particularly energy-efficient, especially with the move to 5-wide decode. Removing the mop-cache lowers overall power consumption, contributing to the core’s 20% power efficiency improvement.
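Arm’s reasoning about the mop-cache can be framed as a break-even calculation. Every fetch pays for a mop-cache lookup, and only hits skip the i-cache and decode path, so the structure saves energy only above a certain hit rate. The energy units below are hypothetical, and the model ignores fill costs and the extra bandwidth a mop-cache can provide; it is not Arm’s analysis.

```python
def fetch_energy_with_mop_cache(hit_rate: float, lookup_cost: float,
                                decode_path_cost: float) -> float:
    """Every fetch pays the mop-cache lookup; misses also pay the i-cache + decode path."""
    return lookup_cost + (1 - hit_rate) * decode_path_cost


def break_even_hit_rate(lookup_cost: float, decode_path_cost: float) -> float:
    """Hit rate above which the mop-cache saves energy versus always decoding.

    lookup + (1 - h) * decode < decode  <=>  h > lookup / decode
    """
    return lookup_cost / decode_path_cost


LOOKUP, DECODE = 0.25, 1.0  # hypothetical energy units per fetch group
print(break_even_hit_rate(LOOKUP, DECODE))  # 0.25
for hit_rate in (0.10, 0.50):
    with_cache = fetch_energy_with_mop_cache(hit_rate, LOOKUP, DECODE)
    print(hit_rate, with_cache, "saves energy" if with_cache < DECODE else "costs energy")
```

If real workloads sit below the break-even point, as Arm suggests they often did, removing the structure is the more efficient choice.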

Branch prediction has seen accuracy tweaks as well, with double the direction prediction capacity and improved algorithms for branch history. The result is a 5% reduction in mispredictions, which helps improve the performance and efficiency of the execution core. Prediction bandwidth has also expanded, with support for two conditional branches per cycle, while a 3-stage prediction pipeline reduces latency.
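Branch history matters because some branches only become predictable once you look at what recent branches did. The sketch below is the textbook gshare scheme (a table of 2-bit counters indexed by branch address XOR recent global history), not Arm’s undisclosed design; the class and parameter names are hypothetical. With zero history bits it degenerates into a single per-branch counter, which gets an alternating taken/not-taken pattern only half right, while two bits of history learn it almost immediately.

```python
class GsharePredictor:
    """Textbook gshare: 2-bit counters indexed by (branch PC XOR global history)."""

    def __init__(self, history_bits: int) -> None:
        self.mask = (1 << history_bits) - 1
        self.history = 0                            # recent outcomes, newest in bit 0
        self.counters = [2] * (1 << history_bits)   # start "weakly taken"

    def _index(self, pc: int) -> int:
        return ((pc >> 2) ^ self.history) & self.mask

    def predict(self, pc: int) -> bool:
        return self.counters[self._index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = self._index(pc)
        if taken:
            self.counters[i] = min(self.counters[i] + 1, 3)
        else:
            self.counters[i] = max(self.counters[i] - 1, 0)
        self.history = ((self.history << 1) | int(taken)) & self.mask


def accuracy(predictor: GsharePredictor, pc: int, outcomes: list[bool]) -> float:
    correct = 0
    for taken in outcomes:
        correct += predictor.predict(pc) == taken
        predictor.update(pc, taken)
    return correct / len(outcomes)


alternating = [i % 2 == 0 for i in range(1000)]  # T, N, T, N, ...
print(accuracy(GsharePredictor(history_bits=0), 0x400, alternating))  # 0.5
print(accuracy(GsharePredictor(history_bits=2), 0x400, alternating))  # 0.999
```

In this sketch each extra history bit doubles the counter table, one simplistic way to picture what added direction-prediction capacity can buy.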

Dropping legacy 32-bit support has seen Arm revamp its front end, making it more energy efficient.

The execution core remains unchanged from the A710 (perhaps why Arm chose to increment the name by 5, not 10?), which partly explains this generation’s smaller performance gains. The rest of the changes are in the back end. There are twice as many L1 data cache banks, increasing the CPU’s capacity for parallel reads and writes and producing fewer bank conflicts for better power efficiency. The A715’s L2 Translation Lookaside Buffer (TLB) now has 3x the reach (the amount of memory it can map at once), thanks to more entries and special optimizations for contiguous pages, plus 2x as many translations per entry for a performance boost. Arm has also improved the accuracy of the existing data prefetch engines, reducing DRAM traffic and contributing to the overall power savings.
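TLB reach is simply the number of entries multiplied by how much memory each entry maps, so both more entries and more translations per entry extend it. The sketch below uses a hypothetical entry count and a simple aligned-grouping model for contiguous pages; the A715’s real TLB sizes and coalescing rules aren’t given in this article.

```python
KIB = 1024
MIB = 1024 * KIB


def tlb_reach(entries: int, page_size: int, pages_per_entry: int = 1) -> int:
    """Bytes of memory a TLB can translate without missing."""
    return entries * pages_per_entry * page_size


def entries_needed(pages: list[int], pages_per_entry: int) -> int:
    """Entries needed if one entry can cover an aligned group of contiguous pages."""
    return len({page // pages_per_entry for page in pages})


# Hypothetical 1,024-entry TLB with 4KiB pages (not Arm's published sizes).
print(tlb_reach(1024, 4 * KIB) // MIB)                     # 4 (MiB)
print(tlb_reach(1024, 4 * KIB, pages_per_entry=2) // MIB)  # 8 (MiB)

contiguous = list(range(8))                 # pages 0..7
scattered = [0, 5, 10, 15, 20, 25, 30, 35]  # no two share an aligned pair
print(entries_needed(contiguous, 2), entries_needed(scattered, 2))  # 4 8
```

The same eight pages cost half as many entries when they are contiguous, which is why optimizations for contiguous pages stretch reach without adding storage.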

All in all, Arm’s Cortex-A715 is a more streamlined version of the A710. Ditching legacy AArch32 baggage and optimizing the front and back ends yields a small performance uplift, but the bigger takeaway is power optimization. As the workhorse for most mobile scenarios, the Cortex-A715 is more efficient than ever, which is a boon for battery life. However, it’s perhaps also telling that the design may have run its course, and Arm will need a bigger overhaul to push middle-core performance up a gear next time around.

Cortex-A510 refreshed: What does it mean?

Although Arm didn’t announce a new little Armv9 core, it has refreshed the Cortex-A510 and its accompanying DSU-110.

The improved A510 brings up to a 5% reduction in power consumption, along with timing improvements that result in frequency optimizations. As a drop-in replacement, it should make next year’s smartphones a little more efficient in low-power tasks right off the bat. Interestingly, the revamped A510 can be configured with AArch32 support (the original was AArch64-only), bringing the core to legacy mobile, IoT, and other markets. That makes it a bit more flexible in terms of how Arm’s partners can use the core.

Arm’s latest DynamIQ Shared Unit (DSU) now supports up to 12 cores and 16MB of L3 cache in a single cluster, allowing the DSU to scale up to larger, more demanding use cases. Arm expects that we might see a 12-core setup in laptop/PC products, possibly with eight big cores and four medium cores. We might see more than eight cores in mobile too, but that’s down to Arm’s partners. The DSU-110 also improves communication between CPU cores and accelerators connected to the DSU by reducing software overhead. This is less applicable to mobile but will likely be a win for server markets.

Arm’s latest CPUs continue on a familiar cadence that is all too easy to take for granted. Double-digit IPC performance and power efficiency improvements are a boon for battery-hungry mobile chipsets and Arm SoCs looking to push higher performance into laptops and other form factors.

Of course, the flexible nature of Arm’s CPU cores and DSU fabric leaves a lot open to SoC vendors. Cache sizes, clock speeds, and core count could vary even more widely than in the past couple of years as Arm’s portfolio offers an increasing breadth of options in a bid to cater to ever-growing demands.
