Why Arm thinks the future of mobile is “digital immersion”

Published on October 9, 2019

During his keynote speech at Arm TechCon, VP Marketing for Client Ian Smythe laid bare the company’s vision for the future of mobile silicon. And that future is digital immersion, made possible through its “Total Compute” approach.

Here’s what that means for users and manufacturers.

What is digital immersion?

To Arm, digital immersion means content that engages all the senses and blurs the line between digital and physical content. That doesn’t only mean XR experiences – though that is certainly part of it – it’s also the way we will be “immersed” in technology of all kinds thanks to IoT. When your home changes the lighting and the temperature to respond to your presence (and perhaps your physical cues), that is an example of digital immersion.

Digital immersion is the merger of the physical and data worlds.

After the show, I was able to ask Smythe to elaborate on what the company meant by the term.

“We’re looking at a world where we’re going to see more and more interaction both virtually and augmented,” Smythe explained. “Some will be visual, some won’t be: some will be sensor based. This is the total engagement of a person in their environment. The merger of the physical and data worlds.”

This is the kind of experience made possible thanks to the growth of exciting new areas such as 5G, IoT, and AI. But what can users expect the result to be?

What OEMs are targeting

To help make this a reality, Arm spoke with partners to ask what kinds of specific applications they wanted to target. One answer was “real time video blending.”

Video blending is essentially another expression of the kind of AR tomfoolery we’ve seen apps like Snapchat pull off for years. The difference is it will involve cutting the user out of the image and transplanting them into different environments, all in real time with no need for a green screen or editing software.

This kind of effect is already technically feasible, but it is limited in scope and accuracy. The objective here (at least as far as Arm’s unnamed partner is concerned) is to provide an effect as believable as one delivered by a green screen and post-production editing, only in real time.
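To make the idea concrete, here is a minimal sketch of the final compositing step only, assuming a per-pixel mask that marks where the user is has already been produced by some segmentation model. The function names and the frame layout (nested lists of RGB tuples) are illustrative assumptions, not anything Arm or its partners described.

```python
def blend_pixel(fg, bg, alpha):
    """Alpha-composite one RGB pixel.

    alpha = 1.0 keeps the foreground (the user), 0.0 keeps the new background.
    """
    return tuple(round(alpha * f + (1.0 - alpha) * b) for f, b in zip(fg, bg))


def blend_frame(foreground, background, mask):
    """Composite a whole frame, pixel by pixel.

    All three arguments are row-major nested lists with the same dimensions.
    The mask holds one alpha value per pixel (hypothetical segmentation output).
    """
    return [
        [blend_pixel(f, b, a) for f, b, a in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(foreground, background, mask)
    ]


# A tiny 1x2 "frame": the left pixel belongs to the user, the right one does not.
camera = [[(255, 0, 0), (255, 0, 0)]]
new_scene = [[(0, 0, 255), (0, 0, 255)]]
user_mask = [[1.0, 0.0]]
result = blend_frame(camera, new_scene, user_mask)
```

The blend itself is cheap. The hard part, and the reason this demands so much compute, is producing an accurate mask for every frame fast enough to keep up with live video.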

Hardware manufacturers reportedly had many more specific requests along these lines, but Arm was unable to divulge further information at this time. The implication, though, was that in the future we could reach a point where IoT and XR are almost meaningless distinctions: the line between digital and physical becomes almost irrevocably blurred as devices receive and handle information from nearly all our interactions, then feed it back to us to augment our experience of the world around us.

How Arm plans to deliver digital immersion

So, when can we expect to see this kind of power in our handsets, and how does Arm expect to deliver it?

A seemingly simple effect like video blending in fact requires a large amount of processing power and the interplay of many different elements (from computer vision, to sensor tracking, to rendering). And that is just one of the less ambitious applications. The sheer scope of what’s possible is why a per-use-case approach is going to be needed to provide specific optimizations across IP, software, and tools. This approach will also help to tackle the new challenges of scaling, data privacy, and 5G. This is what Arm refers to as “Total Compute.”

“Total Compute is not trying to define a single set of products – not a single solution. Whether it’s going into a wearable or whether it goes anywhere else, the solution has to consist of multiple compute elements that will scale individually according to the workload,” Smythe explained.

“We have to find a way to make that both secure and programmable. But the view is that as you increase domain specificity, it gets harder to program. Trying to understand how to make performance analysis available to the programmer is key.”

Smythe was clear that this doesn’t include custom instructions for A-series CPUs (the ones found in your phone), and that it won’t any time soon.

Power, security, and collaboration

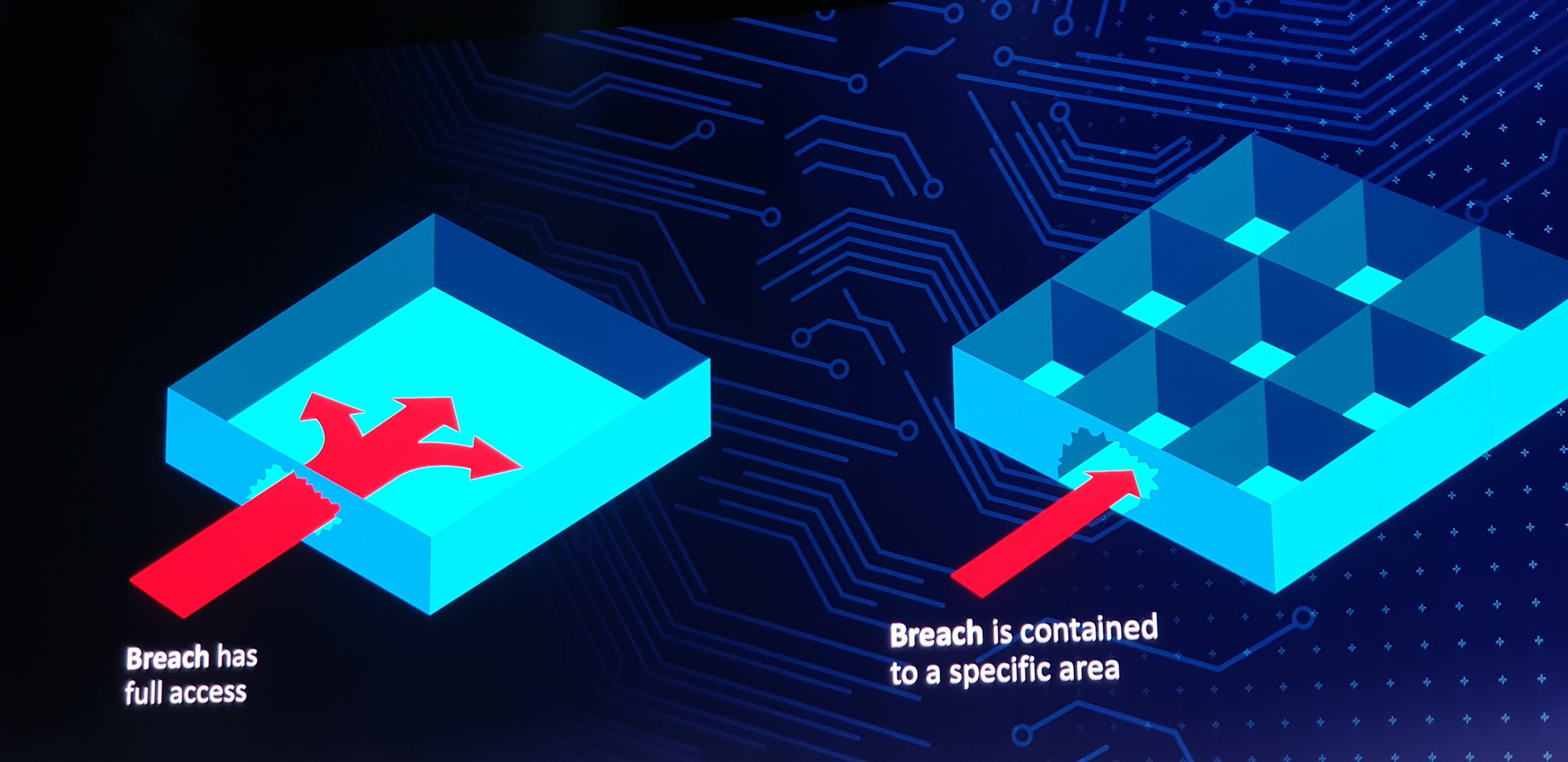

What this digitally immersed future does depend on, however, is security. As Smythe put it, “There is no privacy without security.” In other words, people aren’t going to be willing to integrate technology so thoroughly into their lifestyles unless they can trust the devices they use to keep their data secure. That is a responsibility Arm is tackling head-on with solutions such as memory tagging and a more modular design sensibility to help reduce the severity of data breaches (among other strategies).

Another aspect of all this is sheer power. Looking even beyond the upcoming Hercules chips, Arm is preparing the next wave of hardware currently codenamed “Matterhorn,” scheduled for 2020. These CPUs will support something called Matrix Multiply, or MatMul for short, which is expected to double performance over previous generations and offer particular benefits for ML applications.
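For readers wondering why matrix multiplication matters so much for ML, the sketch below shows the underlying math in plain Python. It is not Arm’s instruction or API, just the textbook operation, and the function name is an illustrative assumption. The point is the innermost loop: a long chain of multiply-and-accumulate steps, the kind of work dedicated hardware support is meant to speed up.

```python
def matmul(a, b):
    """Multiply two matrices given as row-major nested lists."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")

    out = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0
            # Each output value is a sum of products (multiply-accumulate).
            for k in range(inner):
                acc += a[i][k] * b[k][j]
            out[i][j] = acc
    return out


product = matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
```

A neural network layer boils down to this same pattern repeated at a much larger scale, so speeding up that inner loop benefits ML workloads in particular.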

Other announcements made at TechCon further emphasized a focus on custom solutions and collaboration. Arm will be working closely with Unity, for example, to provide better optimization for graphics-intensive games and virtual reality experiences. It will also be working with OEMs that use the M series of CPUs found in smaller devices, allowing them to add custom instructions (which might find their way into the sensor modules on our handsets).

The aim is to provide scalable and customizable solutions to OEMs working with Arm’s hardware, in order to support their individual visions for digital immersion. Total Compute is designed to scale to meet the hugely varied needs of OEMs in the coming years.

It’s an exciting time to be a tech enthusiast. Quite what shape this immersive future will take remains to be seen.