Arm's new chips will bring on-device AI to millions of smartphones

Published on February 13, 2018

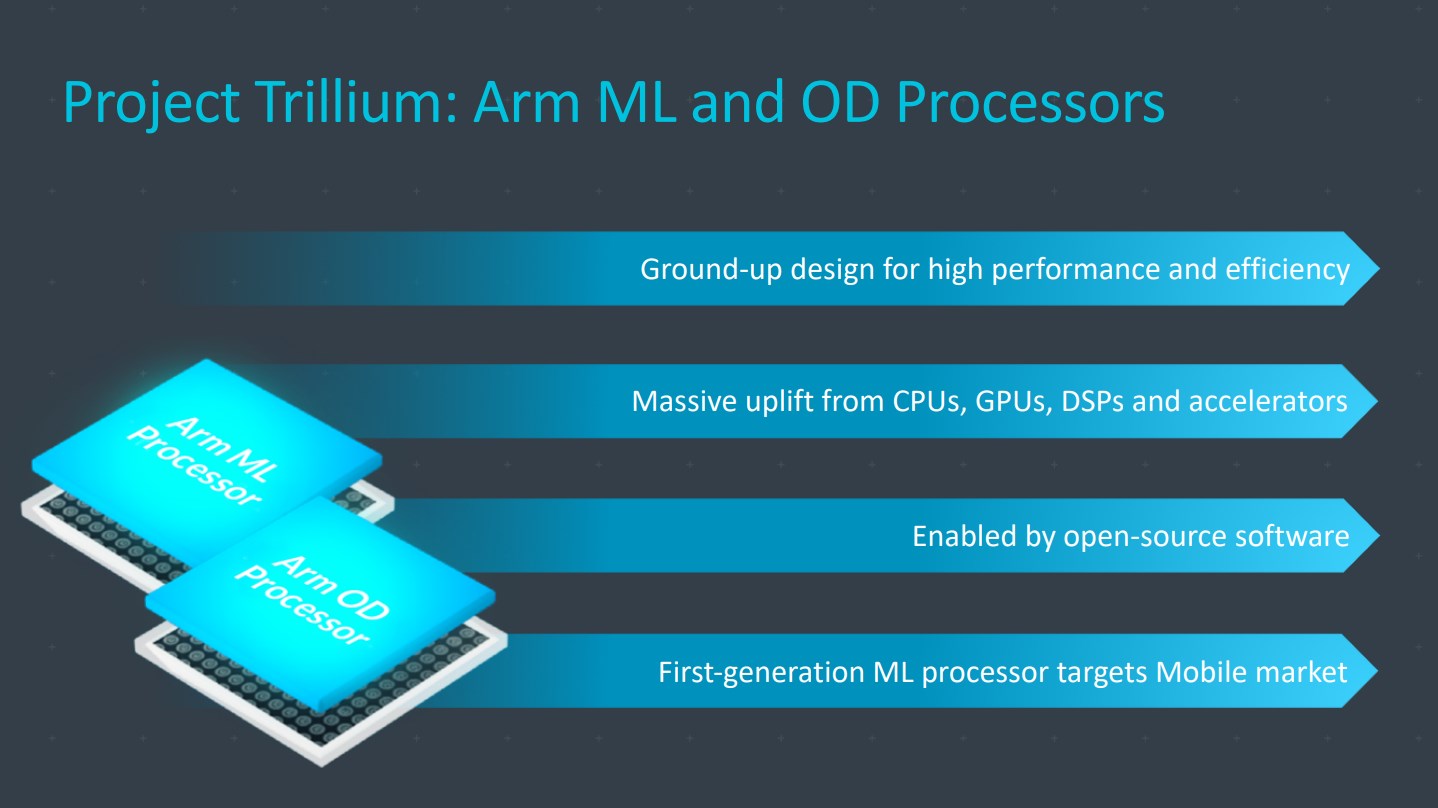

There has been quite a lot written about Neural Processing Units (NPUs) recently. An NPU enables machine learning inference on smartphones without having to use the cloud. HUAWEI made early advances in this area with the NPU in the Kirin 970. Now Arm, the company behind CPU core designs like the Cortex-A73 and the Cortex-A75, has announced a new Machine Learning platform called Project Trillium. As part of Trillium, Arm has announced a new Machine Learning (ML) processor along with a second generation Object Detection (OD) processor.

The ML processor is a new design that is not based on previous Arm components; it has been designed from the ground up for high performance and efficiency. It offers a huge performance increase (compared to CPUs, GPUs, and DSPs) for recognition (inference) using pre-trained neural networks. Arm is a huge supporter of open source software and Project Trillium is enabled by open source software.

The first generation of Arm’s ML processor will target mobile devices, and Arm is confident that it will provide the highest performance per square millimeter in the market. Typical estimated performance is in excess of 4.6 TOPS, that is, 4.6 trillion (4.6 million million) operations per second.
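To put the 4.6 TOPS figure in perspective, here is a small back-of-the-envelope sketch. The 60 fps frame rate is an illustrative assumption (Arm does not quote a frame rate for the ML processor), and the calculation assumes the full quoted throughput is usable, which real workloads rarely achieve.

```python
# 4.6 TOPS as quoted in the article: 4.6 trillion operations per second.
OPS_PER_SECOND = 4_600_000_000_000


def ops_budget_per_frame(frames_per_second):
    """Operations available per frame if the full quoted throughput were usable."""
    return OPS_PER_SECOND / frames_per_second


# 60 fps is an assumption chosen for illustration only.
budget = ops_budget_per_frame(60)
print(f"{budget:.3e} operations per frame")
```

Under those assumptions, the processor would have roughly 77 billion operations to spend on each frame.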

If you are not familiar with Machine Learning and Neural Networks, the latter is one of several different techniques used in the former to “teach” a computer to recognize objects in photos, spoken words, and so on. To be able to recognize things, a neural network (NN) needs to be trained. Example images or sounds are fed into the network, along with the correct classification. The network is then adjusted using a feedback technique. This is repeated for all inputs in the “training data.” Once trained, the network should yield the appropriate output even when the inputs have not been previously seen. It sounds simple, but it can be very complicated. Once training is complete, the NN becomes a static model, which can then be deployed across millions of devices and used for inference (i.e. for classification and recognition of previously unseen inputs). The inference stage is less demanding than the training stage, and this is where the new Arm ML processor will be used.
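The split between training and inference can be illustrated with a toy example. The sketch below uses a single-neuron perceptron, which is far simpler than the networks Arm's processor targets; the data points, function names, and parameters are all made up for illustration. Training repeatedly feeds labelled examples in and nudges the weights whenever the output is wrong. Inference just applies the frozen weights to an input the model has not seen.

```python
def predict(weights, bias, features):
    """Inference: apply the fixed weights to one input and return class 0 or 1."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(examples, epochs=10, learning_rate=1.0):
    """Training: adjust the weights using the error on each labelled example."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # Feedback step: the error is zero when the prediction is right.
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training data: (features, correct classification).
TRAINING_DATA = [
    ((2.0, 2.0), 1),
    ((3.0, 3.0), 1),
    ((-2.0, -2.0), 0),
    ((-3.0, -1.0), 0),
]

# Train once; the result is a static model that could be shipped to devices.
weights, bias = train(TRAINING_DATA)

# Inference on inputs that were never part of the training data.
print(predict(weights, bias, (4.0, 1.0)))
print(predict(weights, bias, (-1.0, -4.0)))
```

Note that `train` loops over the data many times and updates state, while `predict` is a single pass of multiply-and-add. That asymmetry is why inference is a good fit for a small, efficient processor on the phone.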

Project Trillium also includes a second processor, an Object Detection processor. Think of the face recognition tech that is in most cameras and many smartphones, but much more advanced. The new OD processor can do real-time detection (in Full HD at 60 fps) of people, including the direction the person is facing plus how much of their body is visible. For example: head facing right, upper body facing forward, full body heading left, etc.

When you combine the OD processor with the ML processor, what you get is a powerful system that can detect an object and then use ML to recognize the object. This means that the ML processor only needs to work on the portion of the image that contains the object of interest. Applied to a camera app, for example, this would allow the app to detect faces in the frame and then use ML to recognize those faces.
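The detect-then-recognize flow can be sketched in a few lines. This is a toy model only: `detect_objects` and `recognize` are hypothetical stand-ins for the OD and ML stages, not Arm APIs, and the "image" is just a small grid of numbers. The point it illustrates is that the recognition stage only ever touches the cropped region.

```python
def detect_objects(image):
    """Hypothetical OD stage: bounding box (top, left, height, width)
    around all non-zero pixels, or an empty list if there are none."""
    rows = [r for r, row in enumerate(image) if any(row)]
    cols = [c for c in range(len(image[0])) if any(row[c] for row in image)]
    if not rows:
        return []
    return [(rows[0], cols[0], rows[-1] - rows[0] + 1, cols[-1] - cols[0] + 1)]


def crop(image, box):
    """Cut the region described by the box out of the full frame."""
    top, left, height, width = box
    return [row[left:left + width] for row in image[top:top + height]]


def recognize(patch):
    """Hypothetical ML stage: a real model would run inference here.
    This stub only reports how many pixels it was asked to process."""
    return {"label": "placeholder", "pixels_processed": len(patch) * len(patch[0])}


# A made-up 6x8 frame containing one 2x3 "object".
FRAME = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 9, 9, 9, 0, 0],
    [0, 0, 0, 9, 9, 9, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]

boxes = detect_objects(FRAME)
results = [recognize(crop(FRAME, box)) for box in boxes]

total_pixels = len(FRAME) * len(FRAME[0])
fraction = results[0]["pixels_processed"] / total_pixels
print(boxes, fraction)
```

In this made-up frame the recognition stage processes 6 of 48 pixels, or 12.5% of the image. The saving in a real camera frame would depend entirely on how large the detected objects are.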

The argument for supporting inference (recognition) on a device, rather than in the cloud, is compelling. First of all, it saves bandwidth. As these technologies become more ubiquitous, there will be a sharp spike in data being sent back and forth to the cloud for recognition. Second, it saves power, both on the phone and in the server room, since the phone is no longer using its radios (Wi-Fi or LTE) to send and receive data, and a server isn’t being used to do the detection. There is also the issue of latency: if the inference is done locally, the results will be delivered more quickly. Plus, there are myriad security advantages to not having to send personal data up to the cloud.

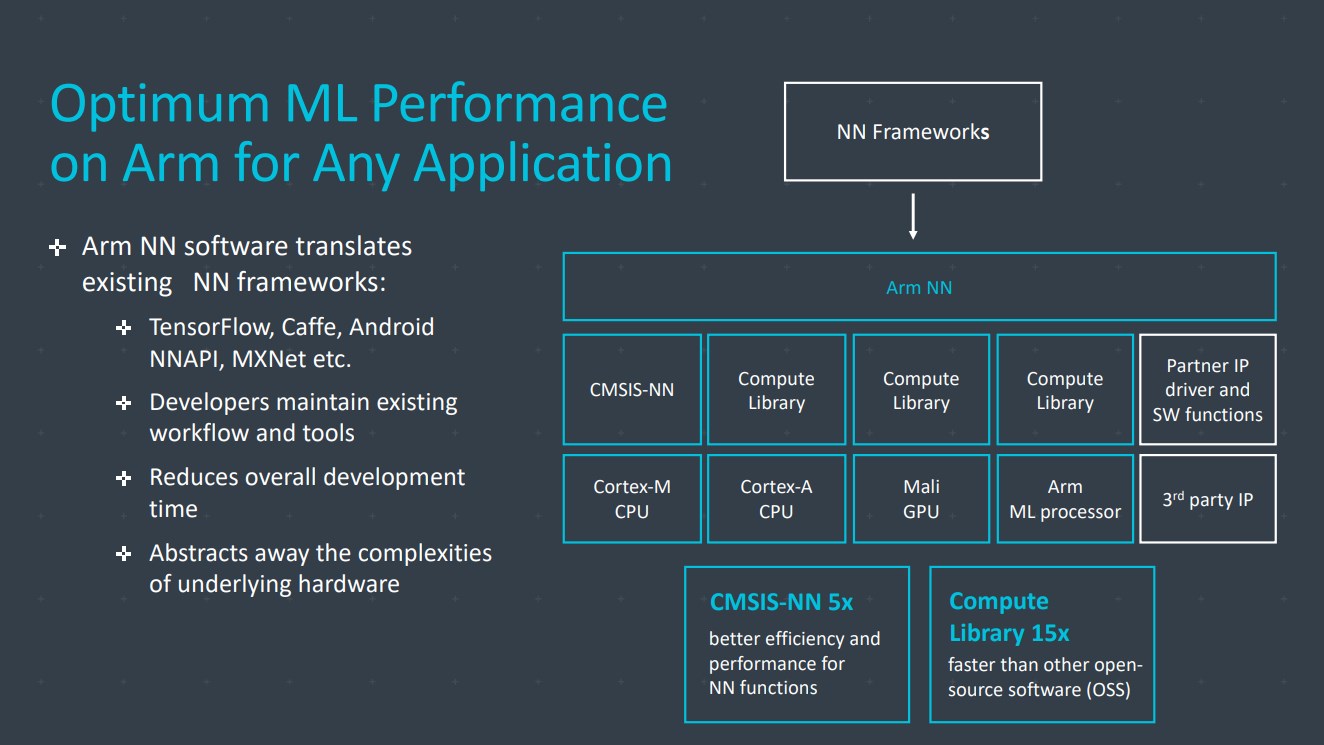

The third part of Project Trillium is made up of the software libraries and drivers that Arm supplies to its partners to get the most from these two processors. These libraries and drivers are optimized for the leading NN frameworks, including TensorFlow, Caffe, and the Android Neural Networks API.

The final design for the ML processor will be ready for Arm’s partners before the summer and we should start to see SoCs with it built-in sometime during 2019. What do you think, will Machine Learning processors (i.e. NPUs) eventually become a standard part of all SoCs? Please, let me know in the comments below.