Affiliate links on Android Authority may earn us a commission.

FreeSync vs G-Sync: Which one should you pick?

There have been many advancements in display technology in recent years. As resolutions and refresh rates have climbed, new problems have appeared alongside them. At higher refresh rates, it can be difficult to match the frame rate your graphics card puts out to the refresh rate of your monitor. This is why display synchronization technologies are needed, and why FreeSync vs G-Sync is a fight worth watching.

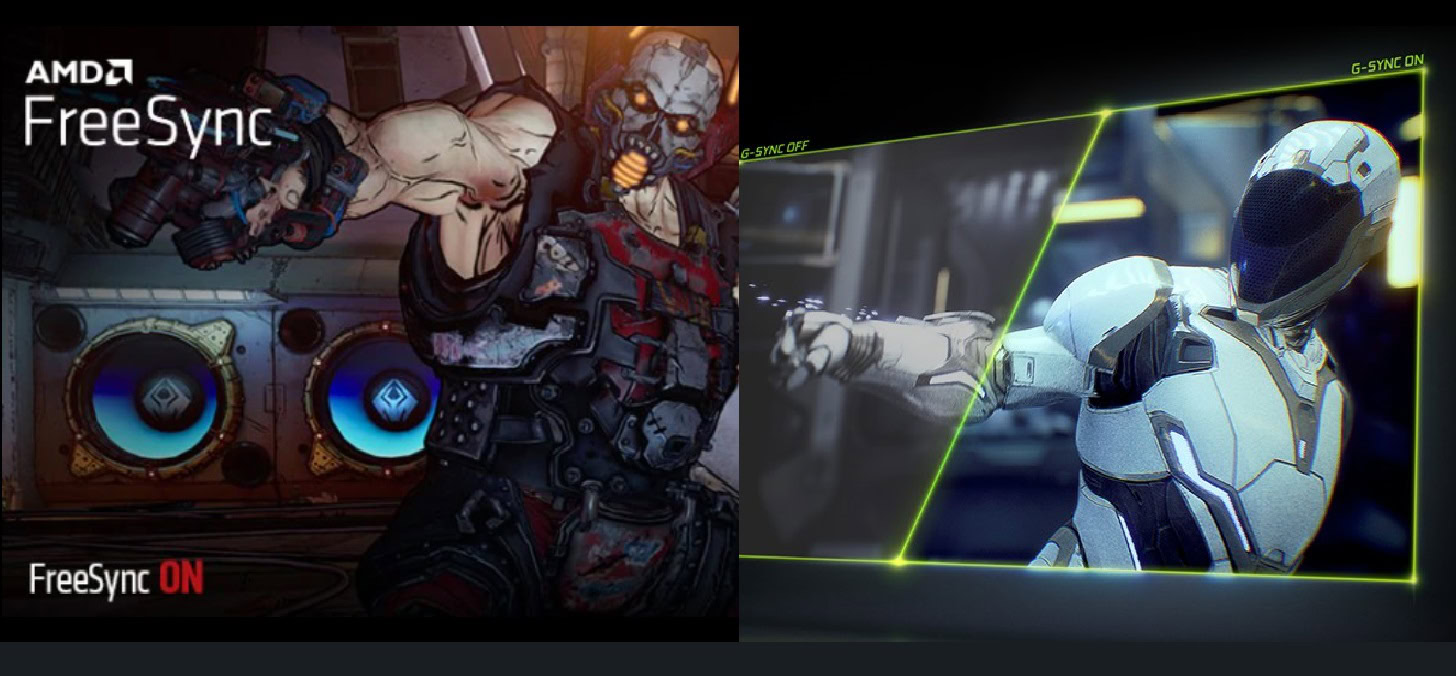

We need display synchronization technologies to keep display artifacts from appearing when the frame rate and refresh rate fall out of sync. After years of development and many different approaches, the competition now comes down to two technologies: AMD's FreeSync and NVIDIA's G-Sync. Since you're going to have to pick one of the two, here's our FreeSync vs G-Sync comparison.

See also: AMD vs NVIDIA: What’s the best add-in GPU for you?

The road to FreeSync and G-Sync

In the context of display synchronization, there are two key characteristics: frame rate and refresh rate. Frame rate is the number of frames the GPU renders per second. Refresh rate is the number of times your monitor refreshes every second. When the two fall out of sync, the display can show artifacts such as screen tearing, stuttering, and judder. Synchronization technologies are needed to keep these issues from happening.
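To see why a mismatch causes tearing, here is a minimal sketch under a deliberately idealized model (an assumption, not how any real driver behaves): the GPU finishes frames at a perfectly steady rate and swaps each one in immediately, the monitor refreshes at a fixed rate, and both start at time zero. A refresh counts as torn if a new frame arrives strictly in the middle of it. The function name `torn_refreshes` is hypothetical.

```python
from fractions import Fraction
import math


def torn_refreshes(fps: int, refresh_hz: int, seconds: int = 1) -> int:
    """Count refreshes in which a new frame lands mid-scanout (idealized model)."""
    torn = 0
    for i in range(refresh_hz * seconds):
        # Exact start and end of refresh i, in seconds (Fraction avoids float drift).
        start = Fraction(i, refresh_hz)
        end = Fraction(i + 1, refresh_hz)
        # Index of the first frame that completes strictly after this refresh starts.
        k = math.floor(start * fps) + 1
        # If that frame completes before the refresh ends, the screen shows
        # part of the old frame and part of the new one: a tear.
        if Fraction(k, fps) < end:
            torn += 1
    return torn


print(torn_refreshes(60, 60), torn_refreshes(90, 60), torn_refreshes(45, 60))
```

In this model a 60 fps game on a 60 Hz monitor never tears, because every frame lands exactly on a refresh boundary, while 90 fps on the same monitor tears on every refresh and 45 fps tears on half of them. Real frame times vary from frame to frame, so in practice tearing is even less predictable.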

Before looking at FreeSync and G-Sync, we need to look at V-Sync. V-Sync is a software-based solution that makes the GPU hold finished frames in its buffer until the monitor is ready to refresh. This worked fine on paper, and it does prevent tearing. However, because every finished frame has to wait for the monitor's next refresh, V-Sync adds input lag. And when the GPU can't keep up with the refresh rate, a frame that misses a refresh is held for an entire extra cycle, which shows up as stutter.
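The waiting described above can be sketched with a simplified double-buffered V-Sync model. The assumptions here are that the GPU renders a frame, waits for the next refresh boundary to swap buffers, and only starts the next frame after that swap. The function name and the frame times are hypothetical and chosen only for illustration.

```python
from fractions import Fraction
import math


def vsync_double_buffered(render_ms: list[int], refresh_hz: int) -> list[Fraction]:
    """Return the time (in ms) at which each frame reaches the screen.

    Simplified model (an assumption): the GPU is blocked until each buffer
    swap, which can only happen on a refresh boundary.
    """
    period = Fraction(1000, refresh_hz)  # exact refresh interval in ms
    now = Fraction(0)
    shown = []
    for render_time in render_ms:
        ready = now + render_time
        # Hold the finished frame until the next refresh boundary (vertical blank).
        flip = math.ceil(ready / period) * period
        shown.append(flip)
        now = flip  # the GPU resumes only after the swap
    return shown


shown = vsync_double_buffered([15, 15, 25], refresh_hz=50)
print([int(t) for t in shown])  # [20, 40, 80]
```

On a 50 Hz monitor (one refresh every 20 ms), the first two frames take 15 ms each and land on consecutive refreshes. The third takes 25 ms, just over one refresh interval, so it misses the 60 ms refresh and waits until 80 ms. The gap between frames doubles to 40 ms (stutter), and the finished frame sits idle for 15 ms before it is shown (added lag).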

NVIDIA then introduced Adaptive V-Sync, a driver-based display sync solution. It locked the frame rate to the display's refresh rate when performance was sufficient, and unlocked it when performance dipped. NVIDIA didn't stop there and went on to introduce G-Sync in 2013.
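The Adaptive V-Sync rule described above boils down to one comparison. This sketch assumes a steady, already-known frame rate. It ignores how the driver actually measures performance, and the function names are hypothetical rather than NVIDIA's implementation.

```python
def adaptive_vsync_enabled(fps: float, refresh_hz: float) -> bool:
    """V-Sync stays on while the GPU can keep up with the monitor."""
    return fps >= refresh_hz


def presented_fps(fps: float, refresh_hz: float) -> float:
    """Frame rate the player actually sees under this simplified rule."""
    if adaptive_vsync_enabled(fps, refresh_hz):
        # Locked: surplus frames are held back, so output matches the refresh rate.
        return refresh_hz
    # Unlocked: frames go out as soon as they're ready, so tearing is possible again.
    return fps


for fps in (90, 60, 45):
    print(fps, adaptive_vsync_enabled(fps, 60), presented_fps(fps, 60))
```

The trade-off is visible in the last branch. Unlocking avoids V-Sync's stutter when performance dips, but it brings tearing back. That is the gap G-Sync was designed to close.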

Another big breakthrough in this area came from the Video Electronics Standards Association (VESA), which introduced Adaptive-Sync in 2014 as an addition to the DisplayPort standard. In 2015, AMD released its own solution based on VESA Adaptive-Sync, called FreeSync.

How does G-Sync work?

G-Sync is NVIDIA's display synchronization technology, found in monitors, laptops, and some TVs. To use it, you need a supported monitor and a supported NVIDIA GPU. Like VESA Adaptive-Sync, G-Sync works by enabling a variable refresh rate on your monitor, although the original G-Sync predates Adaptive-Sync and relies on NVIDIA's own hardware. G-Sync takes the opposite approach to NVIDIA's previous effort: instead of adjusting the GPU's output to suit the monitor, it uses a variable refresh rate to make the monitor match its refresh rate to the frame rate the GPU is churning out.

The processing occurs on the monitor itself, close to the final display output, which minimizes input lag. However, this implementation requires dedicated hardware. NVIDIA developed a board that replaces the monitor's scaler board and carries out the processing on the monitor side. NVIDIA's board comes with 768MB of DDR3 memory, which serves as a buffer for frame comparison.

With this, the NVIDIA driver has greater control over the monitor. The board acts as an extension of the GPU, communicating with it to keep the frame rate and refresh rate in sync. It has full control over the vertical blanking interval (VBI), the time between the monitor finishing the current frame and starting the next one. The display works in sync with the GPU, adapting its refresh rate to the GPU's frame rate, with NVIDIA's drivers at the wheel.
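One way to picture control over the vertical blanking interval is that the monitor stretches its VBI to wait for the next frame. The sketch below uses a simplified model, which is an assumption: the panel's fastest refresh interval is treated as a fixed scan-out cost, and any extra time until the next frame arrives is spent in the VBI, clamped to the panel's supported refresh range. The 40-100 Hz panel and the function name are hypothetical.

```python
def vrr_blanking_ms(frame_time_ms: float, max_hz: float, min_hz: float) -> float:
    """Time the panel spends in the vertical blanking interval before the next frame."""
    min_interval = 1000.0 / max_hz  # fastest the panel can refresh
    max_interval = 1000.0 / min_hz  # longest the panel can wait before refreshing anyway
    # The refresh interval follows the GPU's frame time, clamped to the panel's limits.
    interval = min(max(frame_time_ms, min_interval), max_interval)
    # Simplification: min_interval is a fixed scan-out cost, so whatever time is
    # left until the next frame is spent waiting in a stretched VBI.
    return interval - min_interval


# Hypothetical 40-100 Hz panel
for frame_time in (8.0, 16.0, 30.0):
    print(frame_time, vrr_blanking_ms(frame_time, max_hz=100, min_hz=40))
```

A 16 ms frame gives a 6 ms stretched VBI, so the refresh lines up with the frame. An 8 ms frame is faster than the panel can refresh, and a 30 ms frame is slower than the panel can wait. In both cases the clamp shows where a variable VBI alone stops being able to match the GPU.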

Read more: What is G-Sync? NVIDIA’s display synchronization technology explained

How does FreeSync work?

FreeSync is based on VESA's Adaptive-Sync and uses dynamic refresh rates to make sure the monitor's refresh rate matches the frame rate the GPU is putting out. The graphics processor controls the monitor's refresh rate, adjusting it to follow the frame rate so the two never drift apart.

It relies on variable refresh rate (VRR), a technology that has become quite popular in recent years. With VRR, FreeSync can tell the monitor to lower or raise its refresh rate in line with GPU performance. This active switching ensures the monitor doesn't refresh in the middle of a frame being pushed out, which avoids screen artifacts.
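How far a VRR monitor can follow the GPU depends on its supported refresh range. This minimal sketch shows the refresh rate a monitor would run at for a steady frame rate. It assumes a hypothetical 48-144 Hz range and a hypothetical function name, and it ignores any frame-repeating tricks a real monitor or driver may use.

```python
from typing import Optional


def vrr_refresh_hz(fps: float, min_hz: float, max_hz: float) -> Optional[float]:
    """Refresh rate a VRR monitor would run at for a given GPU frame rate."""
    if fps > max_hz:
        # GPU is faster than the panel: the monitor tops out at its maximum.
        return max_hz
    if fps >= min_hz:
        # Inside the VRR window: the refresh rate simply follows the frame rate.
        return fps
    # Below the window: the panel can't refresh this slowly, so VRR alone can't match.
    return None


for fps in (200, 90, 30):
    print(fps, vrr_refresh_hz(fps, min_hz=48, max_hz=144))
```

Inside the window, the refresh rate tracks the frame rate exactly. Outside it, the monitor falls back to its limits, and that is where the extra certification requirements discussed below come in.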

FreeSync still needs to establish a link between the GPU and the monitor. It does this by communicating with the scaler board already present in the monitor, so no specialized dedicated hardware is needed.

Read more: What is FreeSync? AMD’s display synchronization technology explained

FreeSync vs G-Sync: Different tiers of certification

G-Sync certification comes in three tiers: G-Sync Compatible, G-Sync, and G-Sync Ultimate. G-Sync Compatible is the base tier, covering displays between 24 and 88 inches. These monitors don't include the NVIDIA board, but NVIDIA validates them to be free of display artifacts.

G-Sync is the middle tier, for displays between 24 and 38 inches. Along with the validation, these monitors include NVIDIA's hardware. Displays in this tier are also certified through more than 300 tests for display artifacts.

G-Sync Ultimate is the highest tier and covers displays between 27 and 65 inches. These monitors include the NVIDIA board, along with the validation and the 300+ test certification. They also get "lifelike" HDR, which simply means they support true HDR with over 1,000 nits of brightness.

AMD groups FreeSync compatibility criteria into three tiers — FreeSync, FreeSync Premium, and FreeSync Premium Pro. While AMD does not require dedicated hardware, it still has a certification process using these tiers.

FreeSync, the base tier, promises a tear-free experience and low latency. FreeSync Premium promises the same, but also requires a refresh rate of at least 120Hz at a minimum resolution of Full HD. It adds low framerate compensation (LFC), which duplicates frames to push the effective frame rate above the monitor's minimum refresh rate.
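Low framerate compensation can be pictured as showing each frame a whole number of times. The sketch below picks the smallest repeat count that brings the effective refresh rate inside a monitor's VRR range. The 48-144 Hz range and the function name are hypothetical, and this is an illustration of the idea, not AMD's actual LFC algorithm, which may choose multipliers differently.

```python
from typing import Optional


def lfc_multiplier(fps: float, min_hz: float, max_hz: float) -> Optional[int]:
    """Smallest number of times to show each frame so the refresh rate fits the VRR range.

    Returns None if no whole-number multiple fits (including when fps already
    exceeds max_hz).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    multiplier = 1
    while fps * multiplier <= max_hz:
        if fps * multiplier >= min_hz:
            return multiplier
        multiplier += 1
    return None


for fps in (100, 30, 20):
    print(fps, lfc_multiplier(fps, min_hz=48, max_hz=144))
```

At 30 fps on a 48-144 Hz monitor, each frame is shown twice, so the panel runs at 60 Hz, inside its range. A narrow range causes trouble: with a hypothetical 48-60 Hz monitor, 35 fps has no fitting multiple (35 Hz is too low and 70 Hz is too high). That illustrates why LFC needs a wide enough gap between a monitor's minimum and maximum refresh rates.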

Last comes the FreeSync Premium Pro tier, formerly known as FreeSync 2 HDR. This tier adds an HDR requirement: the monitor needs certification for its color and brightness standards, which include the HDR 400 spec requiring at least 400 nits of brightness. This tier also requires low latency in both SDR and HDR.

See also: NVIDIA GPU guide: All NVIDIA GPUs explained, and the best NVIDIA GPU for you

FreeSync vs G-Sync: System requirements

G-Sync needs a supported display and a supported NVIDIA GPU with DisplayPort 1.2 support. Supported operating systems are Windows 7, 8.1, and 10. Here are the other requirements:

- Desktop PC connected to G-Sync monitor: NVIDIA GeForce GTX 650 Ti BOOST GPU or higher, NVIDIA driver version R340.52 or higher

- Laptop connected to G-Sync monitor: NVIDIA GeForce® GTX 980M, GTX 970M or GTX 965M GPU or higher, NVIDIA driver version R340.52 or higher

- Laptop with G-Sync-supported laptop displays: NVIDIA GeForce® GTX 980M, GTX 970M or GTX 965M GPU or higher (SLI supported), NVIDIA driver version R352.06 or higher

G-Sync HDR (i.e. G-Sync Ultimate) only works on Windows 10 and needs DisplayPort 1.4 support directly from the GPU. It also requires an NVIDIA GeForce GTX 1050 GPU or higher and NVIDIA driver R396 GA2 or higher.

AMD FreeSync requires a compatible laptop, monitor, or TV, plus an AMD APU or GPU that supports it running the latest graphics driver. The Xbox Series X/S and Xbox One X/S consoles also support it. Compatible GPUs include AMD Radeon GPUs starting with the Radeon R7/R9 200 Series released in 2013 (specifically, Radeon GPUs with GCN 2.0 architecture and later), as well as all Ryzen APUs. FreeSync runs over a DisplayPort or HDMI connection.

AMD says other GPUs that support DisplayPort Adaptive-Sync, like the NVIDIA GeForce 10 series and later, should also work fine with FreeSync. NVIDIA GPUs officially support FreeSync monitors under the G-Sync Compatible tier.

- Full list of G-Sync supported displays

- List of FreeSync supported monitors

- List of FreeSync supported TVs

See also: AMD GPU guide: All AMD GPUs explained, and the best AMD GPU for you

FreeSync vs G-Sync: Which one is for you?

The major difference between the two is that FreeSync doesn't use proprietary hardware. It uses the regular scaler board found in monitors. This cuts the requirements for using FreeSync down to a compatible monitor and a supported GPU, such as an AMD one.

With G-Sync, the dedicated hardware adds a price premium to supported monitors. FreeSync is the more affordable option in that sense, and it is easier for monitor makers to support.

As for picking the right one for you in this FreeSync vs G-Sync fight, it depends on your choice of GPU. If you want to cover your bases, you could also go for a monitor that supports both G-Sync and FreeSync. NVIDIA offers FreeSync support under the G-Sync Compatible branding, so you should be good to go with any monitor in that tier.

In the meantime, check out our other articles about monitors and other PC tech.