Galaxy S24 generative edit tested: Is it better than Google's Magic Editor?

Published on February 8, 2024

If you watched Samsung’s Galaxy S24 launch event or any of its ad spots, you’d be forgiven for thinking that Samsung is basically copying Google’s Magic Editor for its Generative Edit feature. As a matter of fact, Samsung is using Google’s AI engines to power it, so it must be the same thing, right?

The truth is far from that. Samsung and Google both offer generative AI-powered photo editors that look identical on the face of it, but differ in many minor and major ways, both in the editing possibilities at hand and in the results you get.

I was curious to see how both of them perform, how they compare, and which one does better with object and person removal, resizing, and any other funky edits I’d want to apply to my photos. Here are the results.

Many big and small differences that add up

Both Google’s Magic Editor and Samsung’s Generative Edit, which is part of its larger suite of Galaxy AI features, can be accessed the same way. You open a photo in Google Photos on your Pixel 8 or in the Gallery app on your Galaxy S24 and you tap the Edit button, then you look for the colorful starry icon on the bottom right. And that’s it.

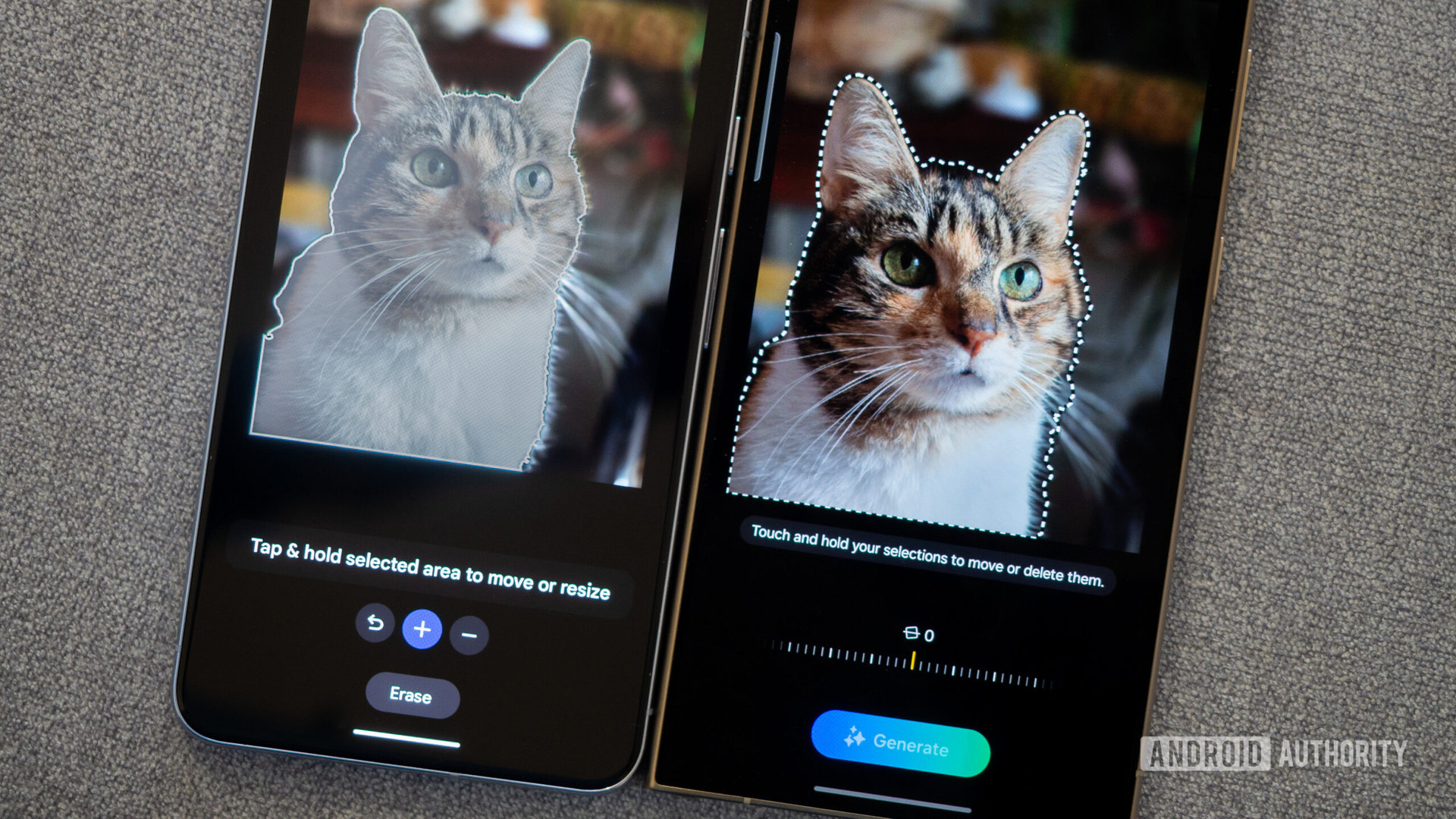

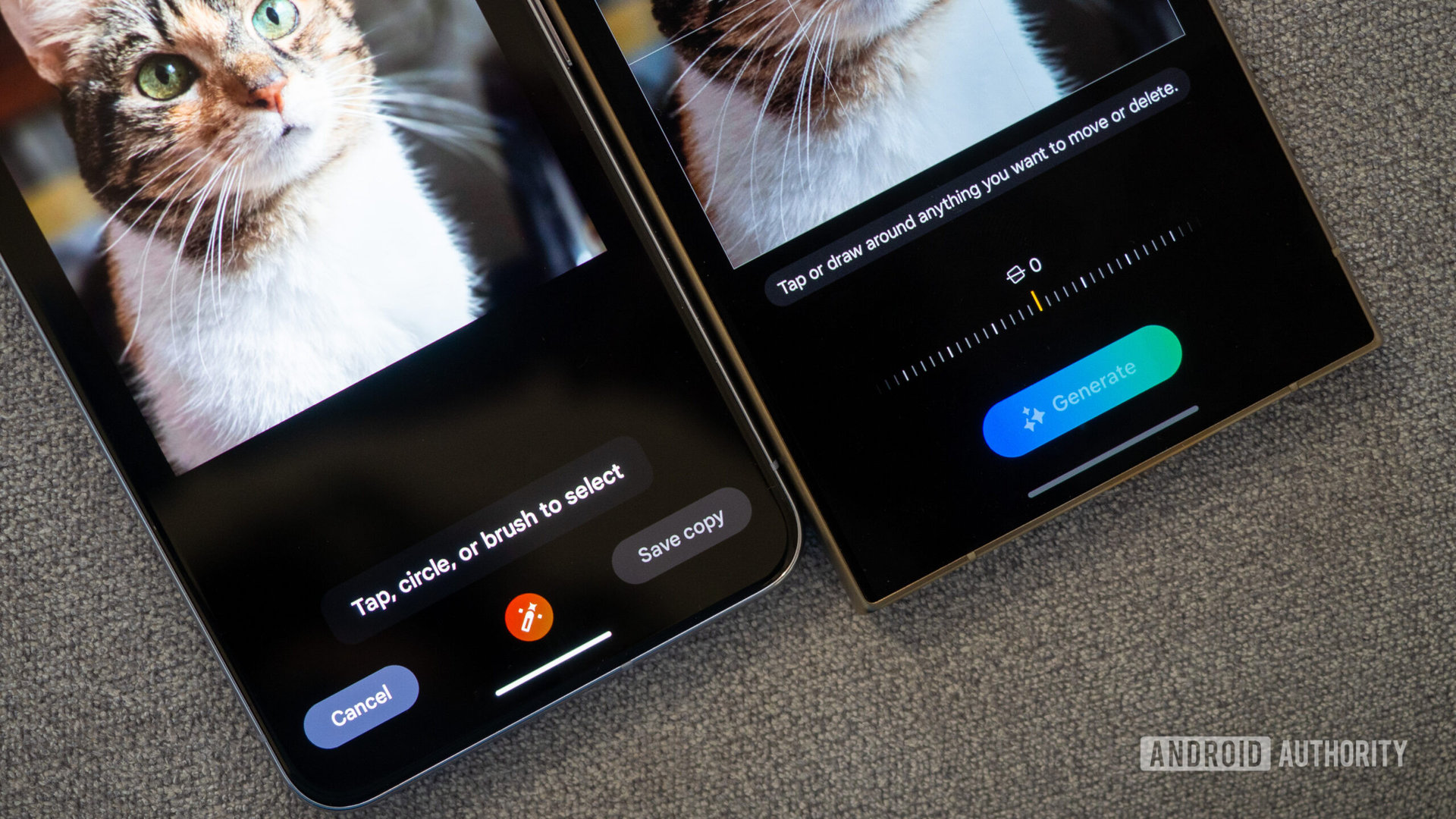

You tap to select a distinct object or person, or you draw around a shape, and the AI will do its best to select it even if you didn’t hit the exact borders. Now you can do all of your fun edits. And this is where I noticed three major differences between these two, plus a few minor ones.

Step-by-step on Pixel, all-in-one on Samsung

The first difference is in the way these two operate. On the Pixel 8, I open Magic Editor, mark an edit, generate a set of images, and pick between them; I can then undo or make further edits until I’m satisfied with the result and want to save it. In comparison, Samsung allows multiple edits in one go, but once you hit Generate on the Galaxy S24, that’s it. You can’t undo one step at a time and the result kicks you out of the Generative Edit interface. You can only add more or try again by re-opening the editor.

Samsung’s approach worked well for me when I knew exactly what I wanted to do, but Google’s was better when I was experimenting and seeing what worked. The combination of multiple steps, undo, and multiple results is perfect for messing things up and changing your mind.

Samsung generates one image, Google makes four

Magic Editor and Samsung’s Generative Edit both take almost the same time to turn in a result, but the S24 only makes one image, whereas Google provides me with four options. With Samsung, I have to go back and retry the entire edit to attempt another result; with Google, I can just swipe between the four options and pick the most realistic one.

In everyday use, I found that to be a huge advantage for Magic Editor because I could often find an edit that worked better, no matter what I asked it to do.

Watermark on Samsung, but no watermark on Google

The final distinction you’ll see in all of the images below is the watermark that Samsung applies on the bottom left of all of its Generative Edit photos. Google doesn’t watermark its images in any visible way, and the only way to tell whether a photo was edited by Magic Editor is to dig deep into its EXIF data — something not many people will do.

I appreciate Samsung taking the lead here and deciding to properly mark photos as manipulated. The internet is full of millions of fake photos; we don’t need to add more to that tally from the comfort of our phones.

Other smaller differences

Aside from the three points above, Samsung and Google’s generative AI photo editors also differ in the way you select things. Google gives me more control and lets me remove parts of a selection, but Samsung just lumps things together and doesn’t let me select something if it doesn’t have a clear contrasted border with its surroundings.

Samsung also offers alignment guides when I move or resize things, but Google doesn’t.

But the bigger differences between these two appear in the results generated, so let’s get to those.

Object and person removal

Both Samsung’s Generative Edit on the Galaxy S24 and Magic Editor on the Pixel 8 can delete objects and people from your photos, but in general, I found the Pixel’s results much more realistic.

There are some exceptions, though. The two photo sets below are the only ones where I think Samsung did a better job than Google. In the first set, Samsung removed all of the crane’s artifacts and bits from the sky, whereas you can see some ghosting left in Google’s result. In the second set, it also tried to continue drawing the full hand after I erased the glove.

In situations where the AI editor had to get creative to replace a large object, the results were a bit mixed, but Google did better in most situations.

In general, Magic Editor was better at removing objects and people from photos. Samsung's Generative Edit isn't as precise or as smart.

In the first photo set, Magic Editor replaced the white van with a food stand, while Samsung fabricated a weird produce truck that doesn’t look half realistic. Google also attempted to properly delete the entire building reflection in the second set (although it didn’t nail the glass pattern all that well), while Samsung threw in a smaller brick building instead. And in the third photo, I definitely don’t like Samsung’s choice to go with red traffic light trails, making the photo a million times busier than it should be. Google’s subtle fixes are miles better there.

Google’s Magic Editor also did better with crowd removal. Because its selection is more precise and I can add/remove from it, I was able to get more people out of the first fancy mall photo and the second spider structure photo. In the third pic, both editors removed the crowd inside the Paris Opera, but Magic Editor kept the tile patterns while Samsung smudged everything into a blurry mush.

Plus, Google’s selection tools allow for much more precise edits. In this pic, I was able to select the glove my husband was holding in his left hand, without selecting his gloved left hand, and thus delete only the extra glove. Samsung wouldn’t allow me to only select the dangling glove, so it decided to replace his whole hand instead.

In this set of photos, I had a lot of trouble getting the S24 to let me select the entire crowd of passers-by, and it just wouldn’t let me select the beige dog on the grey ground. If you zoom in, you’ll see that Magic Editor deleted everything on the Pixel, whereas the Galaxy’s Generative Edit left a lot of bits and pieces in place.

And finally, in this photo, things look fine until you notice that Samsung left the person’s shadow on the ground, but Google didn’t. That’s because I was able to select the shadow in Magic Editor on my Pixel 8, but not on my Galaxy S24 Ultra.

Making things smaller or larger, and moving them around

Resizing and moving objects is a fun experiment and I felt that both Google’s Magic Editor and Samsung’s Generative Edit did a good job here. You’ll notice a loss of detail when making things larger (mostly in the duck photo) and Samsung’s generative fill looks bad in the photo of my husband and the San Juan de Gaztelugatxe sunset (see the halo around his silhouette), but overall, this is a cool edit and no one will notice a lot of manipulation if you share it on social media.

Google-only: Sky, water, and other effects

Moving on to the generative edits that are unique to either Google or Samsung, the Pixel’s Magic Editor offers a set of photo effects and styles that the Galaxy S24 doesn’t. In photos with sky, water, nature, landscapes, and more, you can apply sky effects and water effects, stylize the image, or fake a golden hour. So you can make a sunny day gloomier (or vice versa), for example. You’ll notice that Google’s edits don’t just affect the sky, but everything around it too. In the golden hour edit, for example, both the skyscraper and the water become warmer.

Samsung-only: Rotating, adding from other photos, shadow and moire removal

Samsung, on the other hand, offers a bunch of unique edits that are interspersed across the Generative Edit and Gallery interface.

Rotating objects and people inside an image

First, when you select any object or person, you can not only move, remove, and resize them, but you can also rotate them on the Galaxy S24. Google doesn’t offer that. Here’s the photo of my husband jumping in the snow, but rotated sideways and upside down. I could get into a lot of shenanigans because of this editing option. I love it.

Rotating the whole image

Samsung’s most impressive demo during the Galaxy S24 launch was the option to rotate a photo and have its AI fill up around it. That’s cool and all, but in my experience, in most cases, a basic rotate and crop do a similar job and consume way fewer resources. Plus, they look less edited. Check the photo set below and tell me: Is there any benefit from filling up the crop with AI-generated blur (look at the water on the right side of the photo) versus just cropping to align and removing the borders?

The only time I can realistically say this is a good option is when you want to align something without losing anything from the borders. For example, I was able to better align the park kiosk and the sushi platter below, without cropping more of them out.

As a matter of fact, the Galaxy S24 filled in and generated the bottom side and bottom left corner of my platter.

Personally, I just think this would be a million times more useful if it corrected skewed perspectives instead of just rotating photos, and filled in the missing bits with generative AI.

Adding things and people from other photos

Samsung also lets you add a selection from another photo into the photo you’re editing. Another fun edit, sure, but the blending isn’t perfect. This will be a cool feature when you find the perfect situation to use it, but in most cases, a regular Photoshop job is practically the same. At least you can do it quickly on your phone now, I guess.

Shadow, reflection, and moire removal

And finally, Samsung has more generative edits, but it hides those under the strip of suggestions that pop up when you view a photo in the Galaxy S24’s Gallery. For example, you’ll see shadow removal, moire removal, and reflection removal among these suggestions.

It’s nice to have a one-click button to remove shadows from photos, but you can basically do the same thing if you manually select the shadow in Magic Editor on the Pixel.

In most cases, the Galaxy S24 does a good job of it, but sometimes it’ll fail to detect all shadows (shadow of the metal bars below), in which case the Pixel does a better job because you can manually select them… Except that sometimes the Pixel gets stubborn and decides not to remove a shadow, no matter how hard you try (see the legs’ shadow).

For moire, Samsung does a decent job of reducing the display and glass pattern effect. And it’s not something I can easily replicate on Magic Editor at all.

For reflections, the Galaxy S24 did a pretty bad job for me most of the time; it even darkened the cat fur’s color here! I think this happens because most of my photos have complex reflections, so you might be able to get a better result if you have pics with more straightforward and clearly delimited reflections.

In the end, I came away pretty torn between the two. I don’t think either one is inherently better, but I feel like Google’s results are better and more realistic in general. The fact that there are four of them definitely helps.

Samsung, on the other hand, focuses on quantity more than quality, and it lets me manipulate my photos in more ways, but the one-and-done approach and the single result end up eating away at the general versatility of Generative Edit. If I don’t like an edited image, it takes much more time and many more taps to attempt to fix it.
