
Google I/O showed that the future of Android is less Android, more Assistant

Published on May 10, 2019

Ever since the advent of Google Now (currently called Discover), the Silicon Valley giant has been trying to bring information to users before they need it.

At Google I/O 2019, Google showed how the Assistant would soon have more control over the Android experience. By giving on-device AI the ability to manage features within apps and the OS itself, Google is giving users less reason to tap aimlessly through their phones.

As we have reached a point in time where people are worried about the health effects of screen time, this shift in focus is an important one. By giving Assistant more power to do things on its own for the user, people don’t have to spend so much time on their handsets. This provides convenience across the board.

To accomplish this, Google is putting less focus on Android. When the Assistant can achieve 90 percent of a task with minimal input, Google no longer has to worry as much about building an experience for people to use on smartphones.

Here’s what Google is doing now to make this future a reality.

Assistant and Duplex make tasks easy

Since its inception, the Google Assistant’s primary focus has been to help the user with everyday tasks. But for the most part, this has mainly involved checking the weather, turning on or off a light, and answering basic queries.

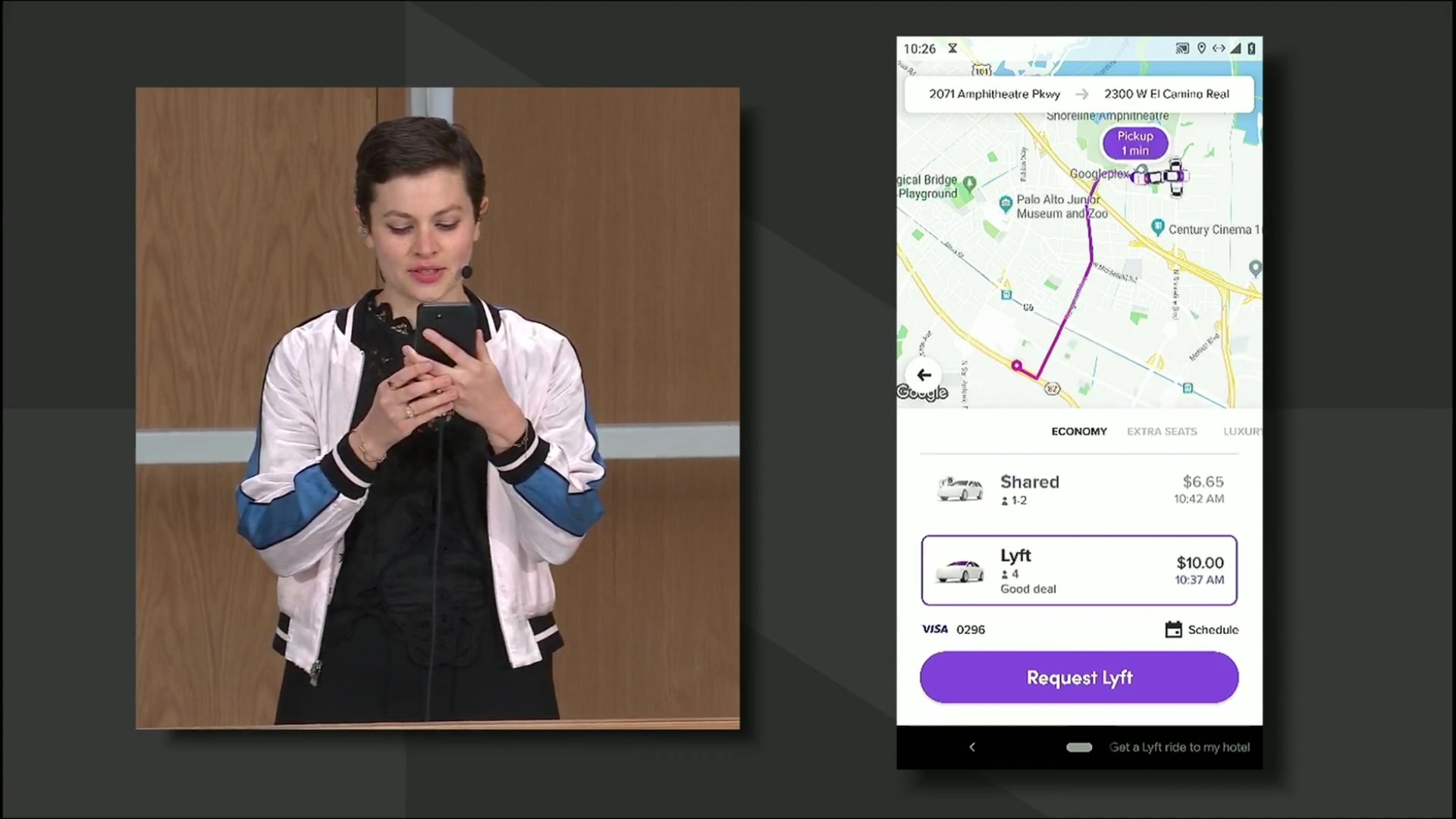

As Google discussed at its developer conference, Assistant is meant for so much more. During the festival’s “Build Your First Action” session, developers were shown just how easy it is to add hooks into their apps for the Assistant to use.

The example used on stage was a s’mores-making app. If users were to pick up the app and dig through it, they would have to complete close to a dozen steps to finalize their custom order. But with the Google Assistant involved, users just had to trigger the voice assistant, tell it what they wanted, and then confirm their order.

As you can imagine, taking the latter option shortens the amount of time spent on the phone. Instead of wasting many minutes tapping through the fictional interface, users could complete their order in a matter of seconds by vocalizing a short command to Assistant.

The Assistant can complete tasks in seconds that normally take users multiple minutes.

Duplex takes the real-world time saving to the next level. Unfortunately, its announcement at I/O ’18 was met with mixed reactions. While the underlying premise was intriguing to many, some worried that having a human-like voice call people to book appointments was taking it a step too far.

Despite these worries, Google has slowly rolled out Duplex to handsets in 43 U.S. states.

Now, Google is bringing the Assistant’s Duplex power to the web. Instead of just setting up a haircut or reserving a table at a restaurant, the Assistant will be able to fill in online forms and complete more complex tasks. The example shown at the conference was the multi-step process of setting up a car rental for a future trip.


Duplex’s new features are possible because the Assistant has access to vital pieces of information from a user’s Google account. This data includes trip information that might have been sent to Gmail, previous car rental selections, and more. Combined, these pieces let the virtual assistant locate and use information that the user might not even know without looking through their records.

Although Duplex will still require confirmation before it uses Google Pay to finalize the rental, this automation mostly removes humans from the equation. Again, this job would have likely taken a person close to ten minutes to complete. With the Assistant, it was done within moments.

Google stops giving you excuses to look at your phone

Look no further than Android Q and Digital Wellbeing to find evidence that Google is trying to get people off of their phones.

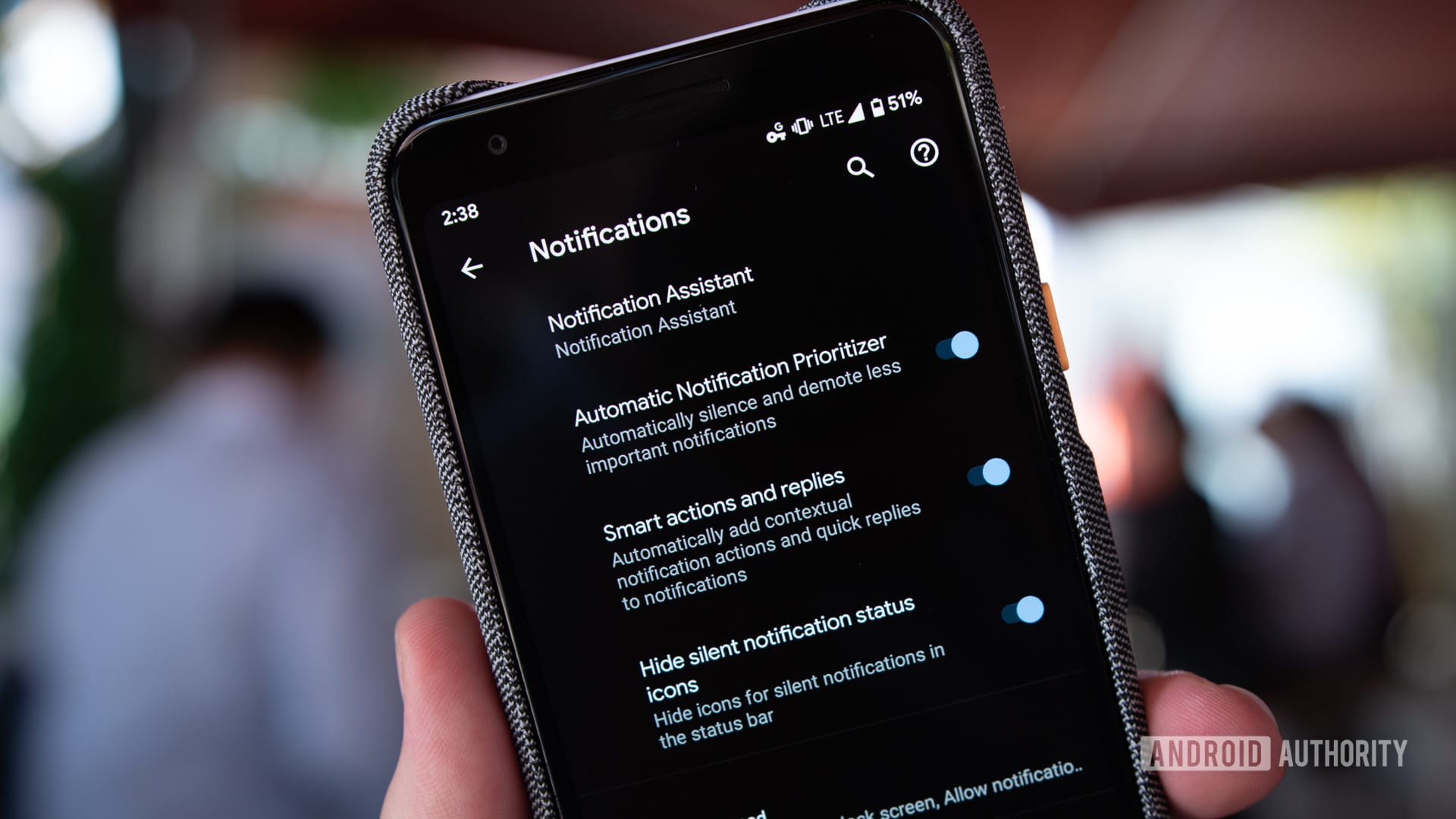

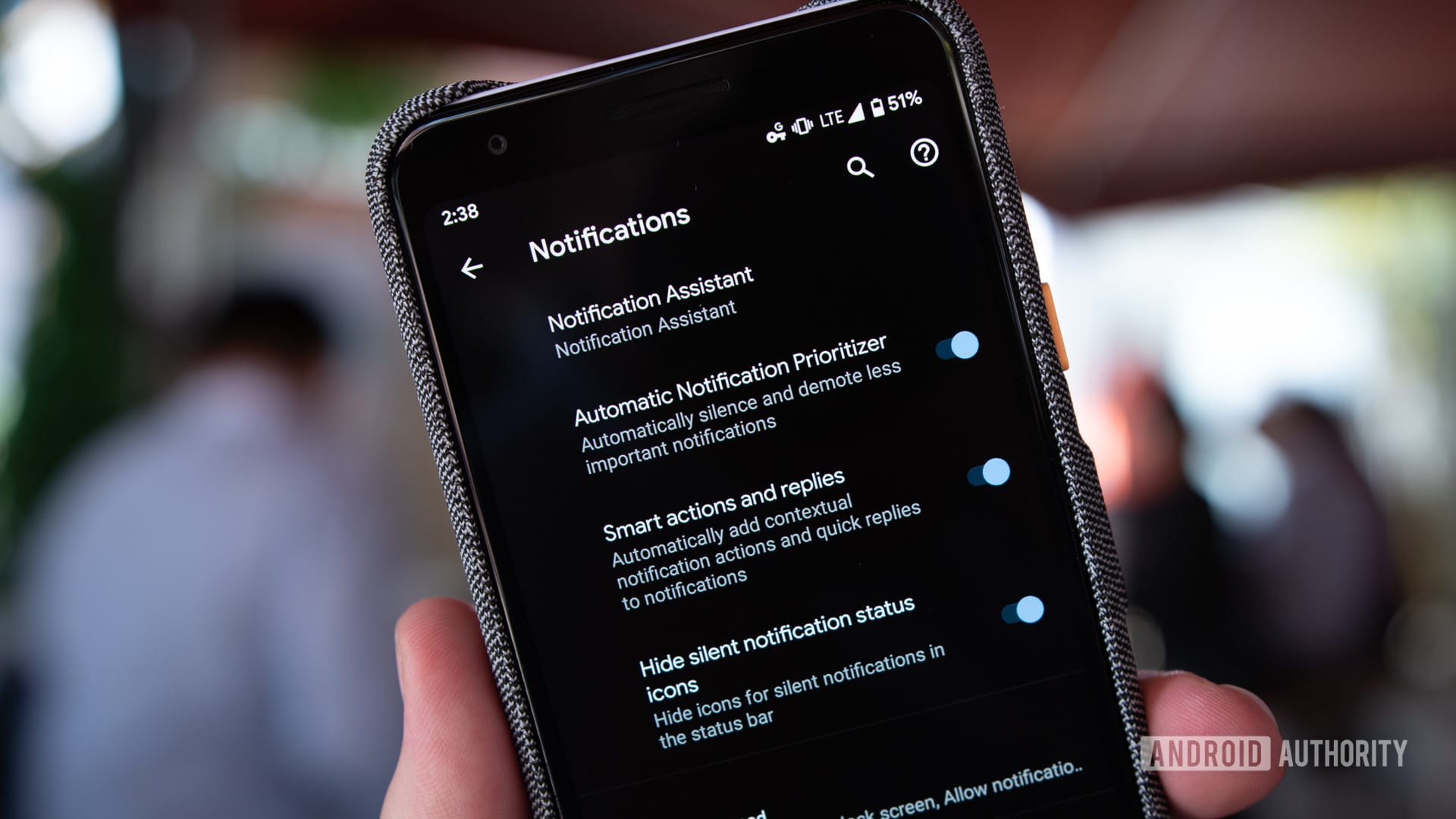

With the third Android Q beta, Google is now automatically prioritizing notifications. This automated service “smartly” decides the importance of incoming alerts and hides the less important ones. If the phone never vibrates or rings, the user has less of a reason to unlock the device and look at it.

Digital Wellbeing, on the other hand, is more focused on the user’s health. While it isn’t a core Android feature just yet, it is a tool that Google provides to some users to help cut back on app usage.

The difference between Android Q’s built-in features and Digital Wellbeing is that one is opt-out and the other is opt-in. By default, Android Q starts hiding notifications that it deems less crucial without even alerting the user to what’s happening. Digital Wellbeing, by contrast, has to be manually configured by the user.

This dynamic is extremely telling of what Google wants the future of Android to look like. Fewer alerts and distractions will cut back on the number of times the phone is used. Add the Assistant and its upcoming functionality to this equation and screen time should dramatically drop.

Smartphone usage is always going to vary between users. Google’s work on the Assistant won’t get people to stop scrolling through social media or playing games, but it will give them the power to spend less time completing tasks.

This focus on Assistant appears to be Google’s plan for the future of Android. Sure, the company will continue adding to and tweaking the OS’s user interface, but those are primarily visual changes. Building new functionality into Assistant gets people in and out of apps with as little work and time as possible.

This year’s Google I/O seemed to reflect that shift in thinking as well. Instead of offering an abundance of entertainment and other hands-on options between sessions, the company focused on productivity and education.

Google won’t be replacing Android with Assistant anytime soon, but we have seen the company put more of its energy towards building automation into the mobile OS. The shift is happening slowly, but the focus is clearly on making the Assistant more capable. When AI can do most of the everyday tasks without help, there’s less reason for users to spend all day digging through Android.