Google testing new way to learn from your data while protecting privacy

Published on April 10, 2017

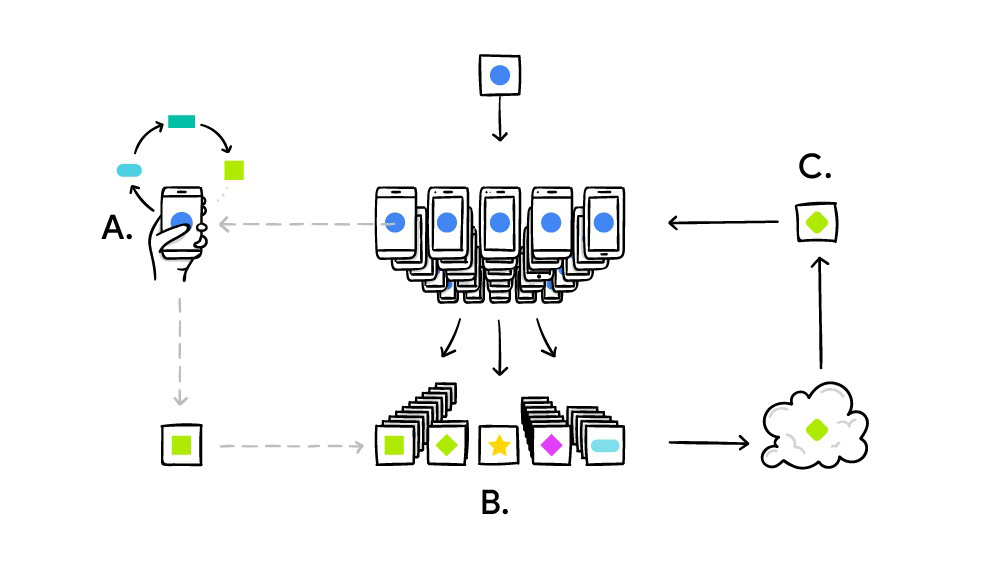

Google has outlined a new machine learning approach that it is testing in an attempt to increase user privacy. The company discussed the new process, which it is calling Federated Learning, in a post on its research blog late last week.

Typically, training algorithms requires storing user data on servers (or “the cloud”) to process it. However, this poses a potential security concern, as cloud-based data can be targeted by hackers.

With Federated Learning, Google claims that it can achieve this data collection and training on an individual’s device — and the learning can still be shared. This process does mean that some data is transferred to Google’s servers, but it’s essentially an encrypted summary which is mixed with other user data to anonymize it. The original content never leaves a person’s phone.
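To make the idea more concrete, here is a rough sketch of the general "train locally, share only a summary" pattern, using simple averaging of model updates. It is not Google's implementation: the function names (`local_update`, `federated_round`), the tiny linear model, and the learning rate are all assumptions made for illustration, and the encryption and anonymization steps Google describes are left out entirely.

```python
from typing import List, Tuple

Example = Tuple[List[float], float]  # (features, target), hypothetical data format


def local_update(weights: List[float], examples: List[Example], lr: float = 0.1) -> List[float]:
    """Train on one device's private examples and return only the weight change."""
    w = list(weights)
    for x, y in examples:
        pred = sum(wi * xi for wi, xi in zip(w, x))
        err = pred - y
        # Gradient step for squared error on a linear model
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    # The raw examples stay on the device; only this delta is shared
    return [new - old for new, old in zip(w, weights)]


def federated_round(global_weights: List[float], devices: List[List[Example]]) -> List[float]:
    """Average the deltas from all devices and apply them to the shared model."""
    deltas = [local_update(global_weights, data) for data in devices]
    n = len(deltas)
    avg = [sum(d[i] for d in deltas) / n for i in range(len(global_weights))]
    return [g + a for g, a in zip(global_weights, avg)]
```

In this toy version, the server only ever sees the averaged weight changes, never the examples stored on each phone. A real system would also need the encryption and mixing described above so that no single user's update can be read on its own.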

Google says that Federated Learning is currently being tested with its Gboard keyboard on Android. “When Gboard shows a suggested query, your phone locally stores information about the current context and whether you clicked the suggestion,” wrote Google in the blog post, adding, “Federated Learning processes that history on-device to suggest improvements to the next iteration of Gboard’s query suggestion model.”

This means Gboard can learn which suggestions are most relevant in a given context, and apply them, without the usual data transfer taking place. The benefits, then, are not just related to security but also to speed: Gboard can apply what it has learned without having to wait for an update to roll out from Google.

Crucially, Google also says that this won’t impact device performance: “Careful scheduling ensures training happens only when the device is idle, plugged in, and on a free wireless connection, so there is no impact on the phone’s performance.”
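The scheduling rule in that quote boils down to a simple three-way check. The snippet below is only an illustration of that logic, assuming a hypothetical `DeviceState` record; it does not reflect any actual Android or Gboard API.

```python
from dataclasses import dataclass


@dataclass
class DeviceState:
    """Hypothetical snapshot of the phone's current condition."""
    is_idle: bool
    is_charging: bool
    on_free_wifi: bool


def eligible_for_training(state: DeviceState) -> bool:
    # Train only when all three conditions from Google's description hold
    return state.is_idle and state.is_charging and state.on_free_wifi
```

If any one of the conditions fails, for example the phone is in use or running on battery, training would simply be skipped until later.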

If this technology (or something similar) could be applied to other apps, it would be a huge advance for user privacy. More and more companies are implementing machine learning techniques to increase the effectiveness of their products, and those techniques rely on our data. If these services could be improved while also keeping our data safe, it would benefit everybody.

Federated Learning is far more complicated and clever than I’ve made it seem here, so if you want to take a better look at the science behind it, you can head to the Google Research blog now.

Let us know in the comments what you think of Google’s developments.