Affiliate links on Android Authority may earn us a commission.

Features

After using the Galaxy S25 Edge, I have one word on the brain: ‘Frankenstein’

Features

There’s a dark side to 7 years of Android updates, and we’re already starting to see it

Features

I tried Google’s secret Gallery app, and it has one big advantage over Google Photos

Latest poll

Do you care about smartphone thinness?

1579 votes

In case you missed it

More news

Nick Fernandez, May 6, 2025

Amazon Luna: Everything you need to know about Amazon's cloud gaming service

With its new plans, is Google Fi finally competitive again?

Andrew Grush, May 4, 2025

The best new Android apps and games for May 2025

Andy Walker, April 30, 2025

These are my 10 favorite Android games to play with a controller

Nick Fernandez, April 30, 2025

What happens to your data if you stop paying for Google One?

Mitja Rutnik, April 28, 2025

The iPhone 17 could get pricier, and that's bad news for Android fans too

Aamir Siddiqui, 15 hours ago

The Retroid Pocket Mini is back, minus the unfixable screen issues

Nick Fernandez, 15 hours ago

Manufacturers think you want thinner phones, but survey shows otherwise

Christine Romero-Chan, 15 hours ago

One UI 8 leaked firmware corroborates Galaxy Z Flip 7's big design change (APK teardown)

Aamir Siddiqui, 16 hours ago

Goodbye, emulators: Native GameCube fan ports are now a reality, starting with Mario Party 4

Nick Fernandez, 17 hours ago

US lowers tariffs, making retro gaming handhelds affordable again (for now)

Nick Fernandez, 18 hours ago

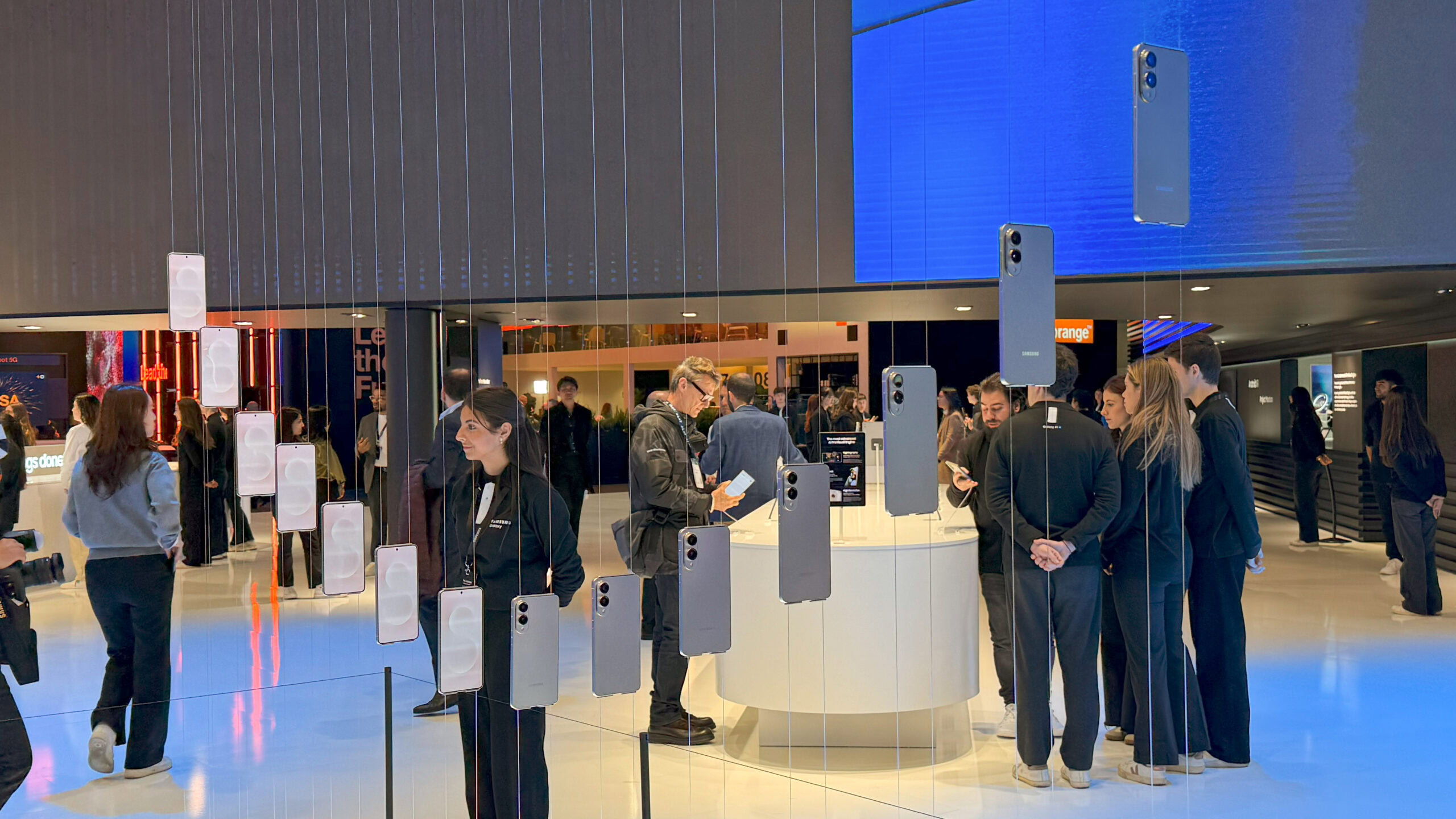

Samsung's 'backup plan' for the Galaxy S25 FE should be its main plan

Hadlee Simons, 20 hours ago

Forget Audio Overviews — NotebookLM's next big feat could be generating Video Overviews

Stephen Schenck, May 9, 2025

Nintendo warns it may brick your Switch if you engage in unauthorized use

Ryan McNeal, May 9, 2025

Bluetooth has a new trick to protect your privacy, with a battery-saving bonus

Stephen Schenck, May 9, 2025
