
Get down with Google’s new pose-matching machine learning experiment

Published on July 20, 2018

- Google has created a machine learning experiment that matches users’ poses to a similar picture.

- It can identify the location of 17 body parts and uses this information to find a match from over 80,000 photos.

- You can test out the experiment on its website.

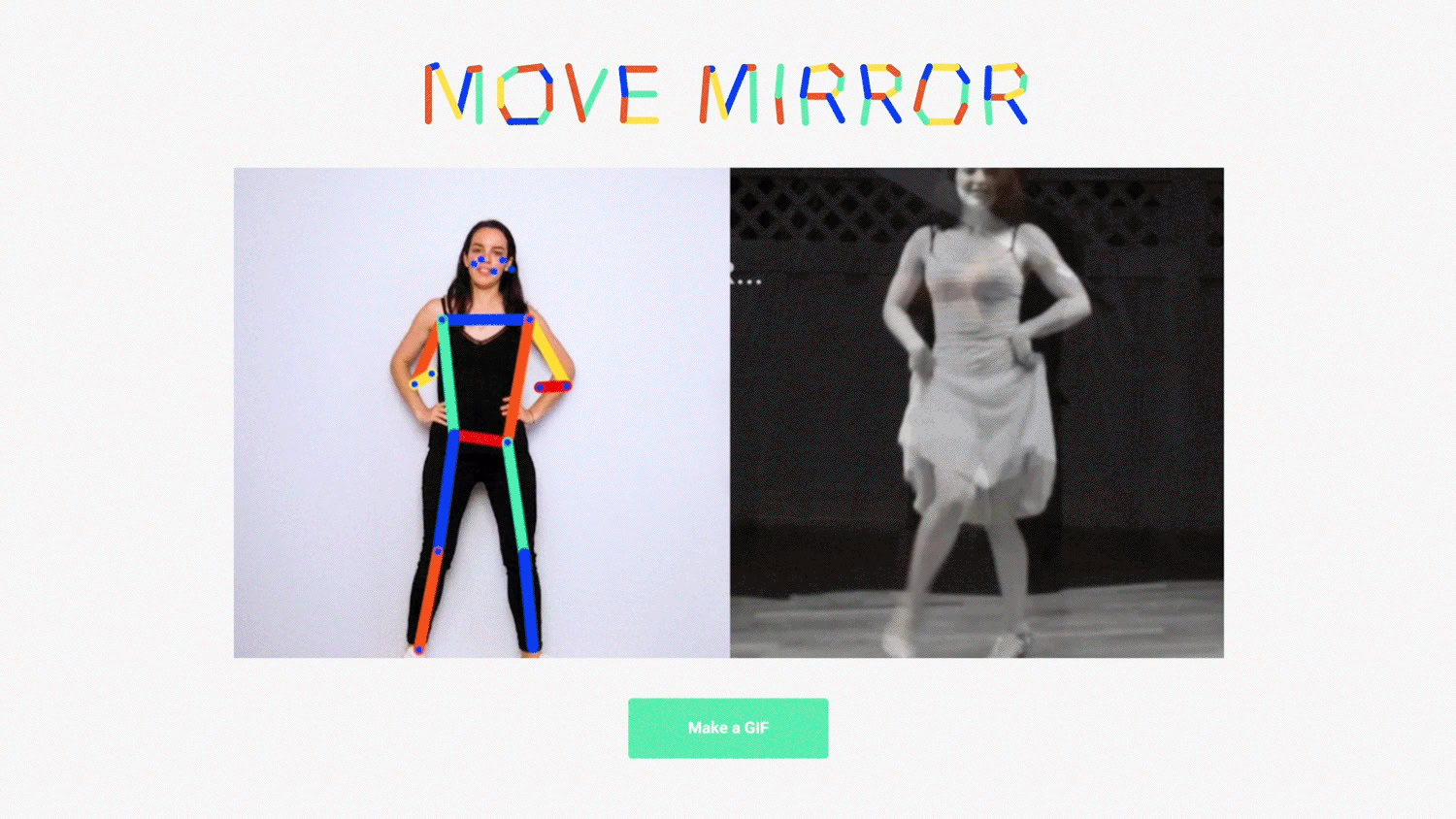

Google has released a new machine learning experiment that can match a pose you make in real time to an image of a person making the same pose. Announced on the Google Blog, the experiment is called Move Mirror and it works using just a web browser and a webcam.

Google built the experiment using PoseNet, a machine learning model that detects poses by identifying the relative location of 17 body parts. The experiment compares the pose detected from your webcam feed against a database of over 80,000 images to find the image that best matches it. Google stresses that all the matching happens in your browser and none of your images are sent to a server.
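For the curious, here is a rough idea of how matching a pose against a set of images could work. This is only a minimal sketch, not Google's code: it assumes each pose is a list of 17 (x, y) keypoints, scales every pose to its own bounding box so that body size and position in the frame don't matter, and compares poses with cosine similarity. The function names `normalize_pose`, `cosine_similarity`, and `best_match` are made up for illustration, and the brute-force search is a stand-in for whatever faster lookup a real system would need.

```python
import math

NUM_KEYPOINTS = 17  # the number of body parts the article says PoseNet locates


def normalize_pose(keypoints):
    """Turn 17 (x, y) keypoints into a unit-length vector of 34 numbers.

    Assumption: shifting to the bounding-box corner and dividing by the
    longer side makes the pose independent of position and size.
    """
    if len(keypoints) != NUM_KEYPOINTS:
        raise ValueError("expected 17 keypoints")
    xs = [x for x, _ in keypoints]
    ys = [y for _, y in keypoints]
    min_x, min_y = min(xs), min(ys)
    scale = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    flat = []
    for x, y in keypoints:
        flat.extend(((x - min_x) / scale, (y - min_y) / scale))
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0
    return [v / norm for v in flat]


def cosine_similarity(a, b):
    """Dot product of two unit vectors; values near 1.0 mean similar poses."""
    return sum(x * y for x, y in zip(a, b))


def best_match(query, database):
    """Return the key of the stored pose most similar to the query.

    `database` is a hypothetical dict mapping image names to keypoint lists.
    A linear scan like this is fine for a demo, not for 80,000 images per frame.
    """
    q = normalize_pose(query)
    return max(
        database,
        key=lambda name: cosine_similarity(q, normalize_pose(database[name])),
    )
```

Calling `best_match(webcam_pose, image_poses)` would then return the name of the closest stored image, where `webcam_pose` and `image_poses` are placeholders for keypoints you have already extracted.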

To test out the experiment, you just need to head to the Move Mirror website. Once you allow the webpage access to your webcam, you can try out different poses and watch as the program matches what you do to images in its database. If you like what you see, you can create a GIF to download and share the match.

Google hopes the experiment will help make machine learning more accessible to coders and makers in the future. In a post on Medium, the makers of PoseNet suggest that pose estimation can be used in fields such as augmented reality, animation, and fitness.

Google has a history of releasing quirky experiments. You can check out ones that use augmented reality, artificial intelligence, voice, and more at the Google experiments website. If you don’t know where to start, MixLab is well worth trying out.
