Features

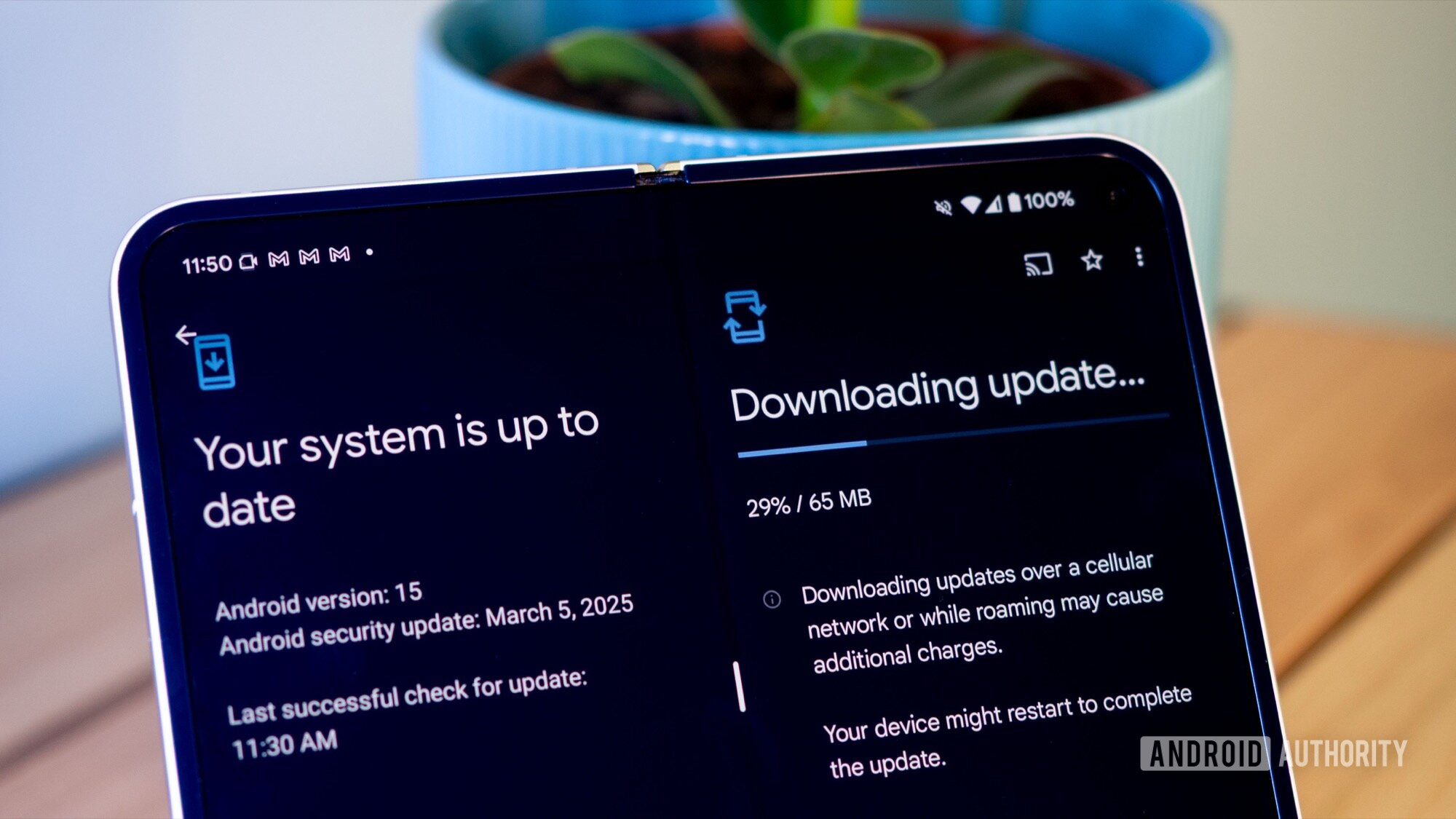

Your Android phone is always lying to you: It’s not really up to date

Features

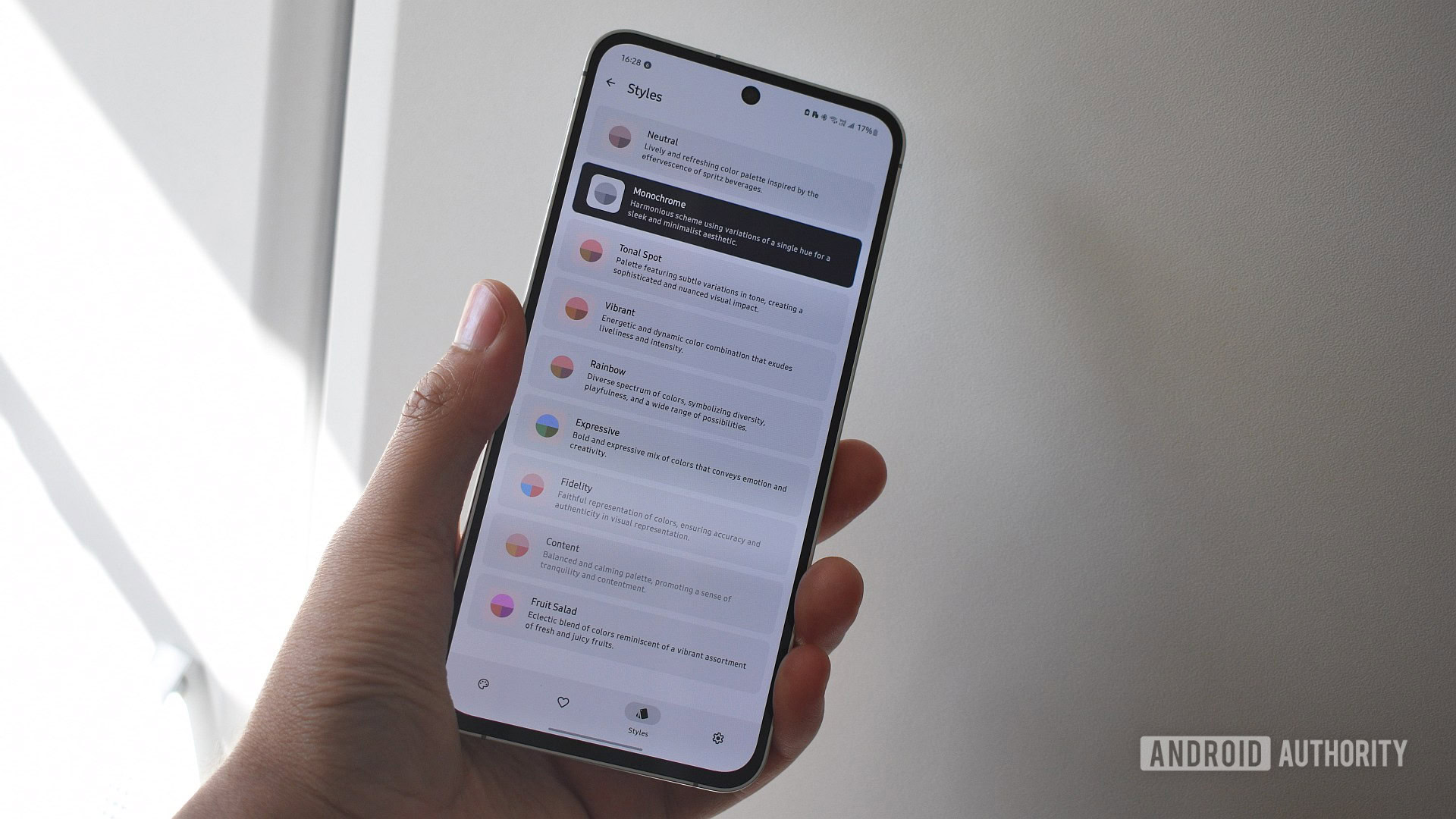

Is iOS’s final form just… Android?

Reviews

If the Nothing Phone 3a and 3a Pro fixed this one thing I would tell everyone to buy them

Top stories

Latest poll

Would you feel comfortable giving an AI chatbot your search history?

4,313 votes

In case you missed it

More news

Megan Ellis, April 2, 2025

These 4 free smartphone apps are all I need to stay organized

The best new Android apps and games for April 2025

Andy Walker, March 31, 2025

5 best SNES emulators for Android

Joe Hindy, March 31, 2025

The best Game Boy Advance emulators for iOS

Ben Price, March 26, 2025

Does the Google Pixel 9a support eSIM and dual-SIM?

Edgar Cervantes, March 26, 2025

You haven't even pre-ordered the Pixel 9a yet, and there's already a teardown video

Stephen Schenck, April 4, 2025

Nintendo delays Switch 2 pre-orders in the US over tariff uncertainty

Ryan McNeal, April 4, 2025

RCS setup in Google Messages keeps failing? Here’s how to (maybe) fix it (Update: Google response)

Aamir Siddiqui, April 4, 2025

Cricket finally adds one of its most-requested features — and it's better than the competition

Andrew Grush, April 4, 2025

Google Assistant is wreaking havoc on DND settings and making people miss alarms and calls

Ryan McNeal, April 4, 2025

Google wants to make Android 16 even better at streaming music and video

Mishaal Rahman, April 4, 2025

Nothing's CMF Phone 2 teaser hints at notable camera improvements

Pranob Mehrotra, April 4, 2025

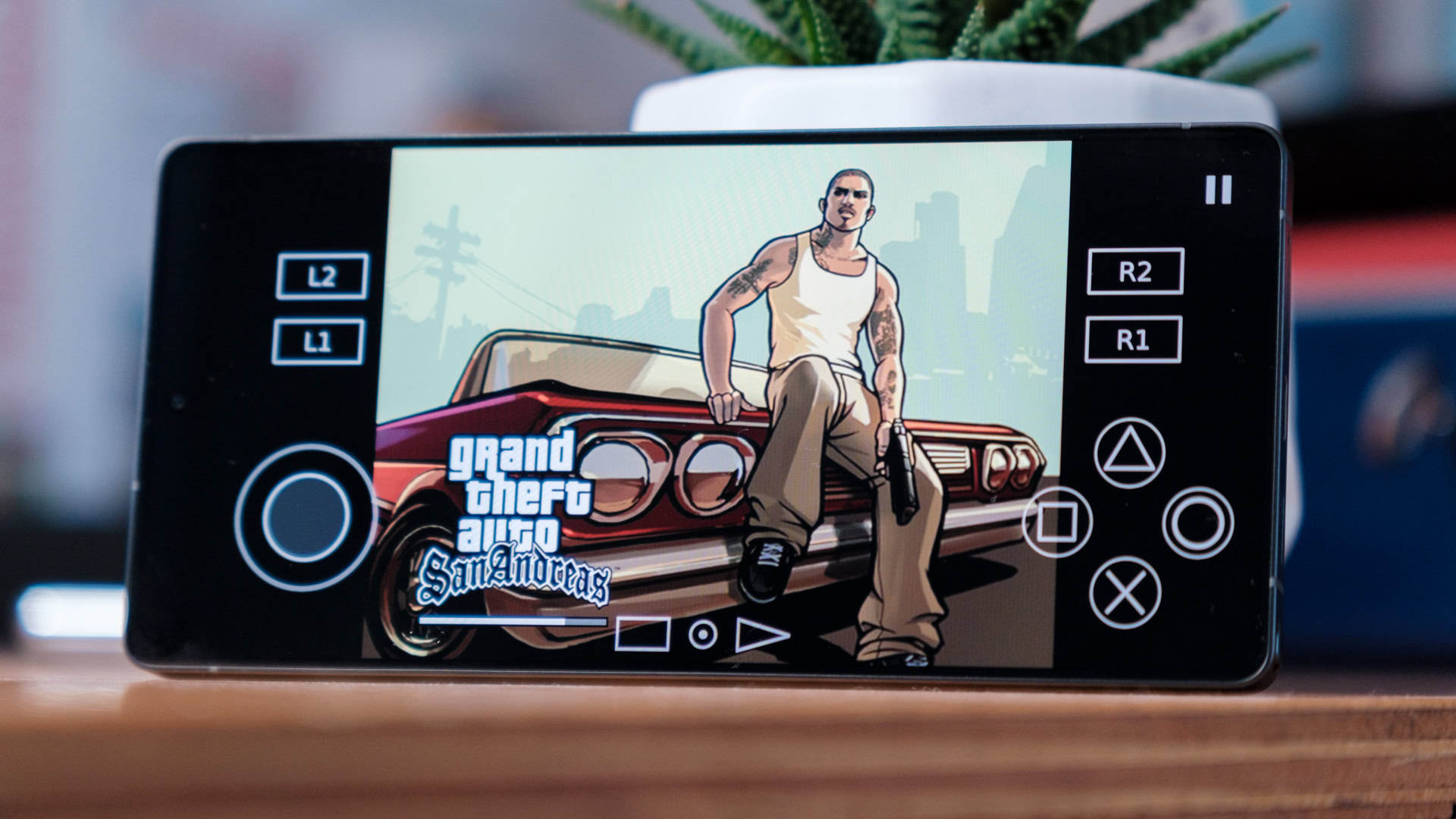

Serious about your retro gaming? This PS2 emulator now tracks true retro trophies

Nick Fernandez, April 4, 2025

Google Calendar and Keep widgets are getting some much-needed spring cleaning (APK teardown)

Stephen Schenck, April 4, 2025

Driver ran away with your package? Uber is working on a solution (APK teardown)

Hadlee Simons, April 4, 2025