How does a CPU work?

Published on October 21, 2024

If you’ve read any of Android Authority’s CPU deep dives, you’re hopefully a bona fide expert on the ins and outs of how processors work. But don’t worry if not. These topics are dense and require a lot of background knowledge to get to grips with fully. It’s taken me over a decade in the industry to learn all this, after all (and almost as long to write it down!).

With that in mind, an article like this is well overdue, especially with recent shifts in the mobile and laptop landscapes, like Copilot Plus PCs and Qualcomm’s next-gen Snapdragon with custom CPUs. So, let’s go through everything you need to know about how CPUs work and how that influences the design decisions we see in today’s mobile processors. We’ll also talk about a few design concerns that are more applicable to Arm processors than to those designed by AMD and Intel. But much of what we’ll discuss is universal to all application processors.

Before we dive in, let’s get a key term out of the way — clock speed. Measured in GHz, clock speed indicates how fast the processor’s internal clock is running and is the most common metric you’ll see talked about across Arm, AMD, Intel, and other processors. While more GHz is faster on a like-for-like basis, it tells you very little about how many operations a processor actually performs per second. For that, you’ll need to look much deeper into the CPU core itself. Let’s get in there.

We’ll start at the end

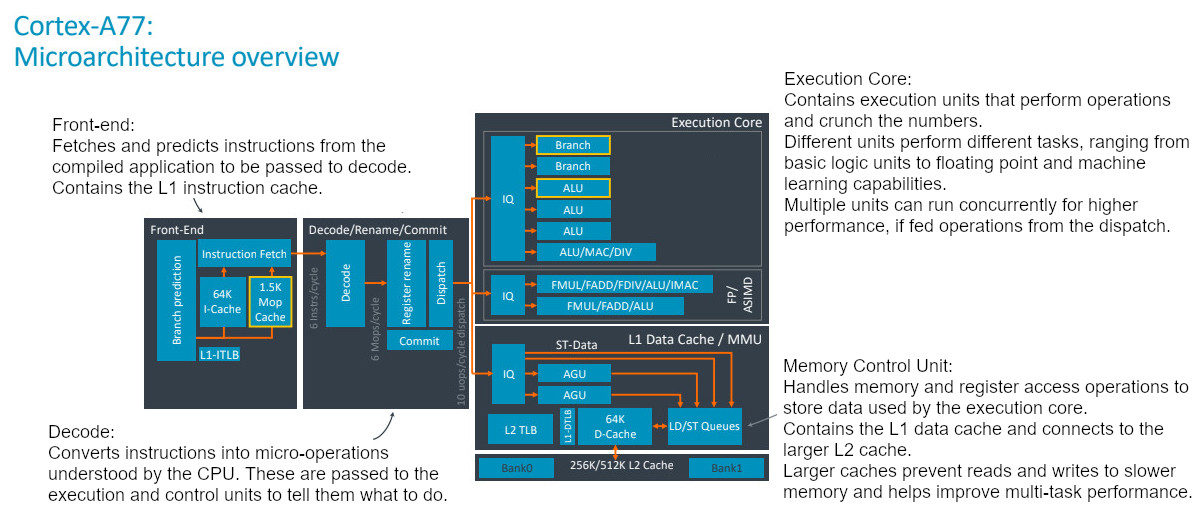

I’ve always found it more intuitive to understand what CPUs do and how they work by leaping right to the back end of the processor — the execution core. The execution core is full of execution units, the parts of the CPU that actually perform calculations and operations.

CPUs are general-purpose processors in the sense that they need to be able to handle a wide variety of sporadic workloads. By contrast, a DSP, AI processor, or GPU is built to run a smaller set of workloads repeatedly. As such, CPUs comprise several different execution units, each capable of different operations.

'Wider' processors do more with each clock, making them faster.

Common units include an arithmetic logic unit (ALU) for basic math functions, a floating point unit (FPU) to speed up complex math, single-instruction multiple-data (SIMD) units for vector math (supporting extensions like Arm’s SVE2), load-store units (LSU) to transfer data in and out of memory, and a branch unit to jump to a new point in a program. With the growing popularity of machine learning workloads, you might also find a dedicated multiply-accumulate unit or similar capabilities added to an ALU/FPU.

There are some architectural intricacies here, though. Not every ALU is necessarily the same. For instance, they don’t always support division or multiplication. FPUs can be very different too, with register sizes (in bits) and support for simultaneous operations varying greatly between architectures. The number of clock cycles it takes to complete an operation often varies too. Some operations are single-cycle while more complex ones, such as division, may take multiple cycles to complete.

The TL;DR here is that CPU capabilities can vary widely, despite them appearing somewhat similar. What companies stuff into their CPUs (how wide they are) determines how much they can compute with each clock and how much power they consume. This is one of the key differences between an Arm Cortex-X925, Apple’s M cores, and Qualcomm’s Oryon CPU, for example.
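To put rough numbers on why width matters as much as clock speed, here is a minimal sketch. It assumes throughput can be approximated as clock frequency multiplied by the average number of instructions completed per cycle (IPC). The two cores and their figures are hypothetical, not measurements of any real chip.

```python
def instructions_per_second(clock_ghz: float, ipc: float) -> float:
    """Rough throughput estimate: cycles per second times instructions per cycle."""
    return clock_ghz * 1e9 * ipc


# Hypothetical cores for illustration only, not benchmarks of real products.
narrow_fast = instructions_per_second(clock_ghz=3.4, ipc=2.0)
wide_slow = instructions_per_second(clock_ghz=3.2, ipc=4.0)

print(f"narrow core at 3.4GHz: {narrow_fast / 1e9:.1f} billion instructions/s")
print(f"wide core at 3.2GHz:   {wide_slow / 1e9:.1f} billion instructions/s")
```

Under these assumptions, the core with 0.2GHz less clock speed still gets through nearly twice as many instructions per second (12.8 billion versus 6.8 billion). Real IPC varies with the workload, so treat this as a back-of-the-envelope model only.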

How to execute faster

A CPU can be built to handle a wide range of tasks, making it very flexible and useful. However, all of these execution units take up valuable silicon space and power. That would be pretty inefficient and slow if just one of these units were running at a time, especially when an operation takes multiple cycles to complete.

This is the ultimate CPU conundrum: How do you make a blazing-fast CPU that doesn’t eat through battery life? Fortunately, it is possible to run multiple execution units simultaneously. This exploits what’s known as instruction-level parallelism (ILP), and a processor built to do it is called superscalar.

Let’s start out simple. To get any execution unit to do something, you have to pass it an instruction and some data. Instructions are operations such as ADD, storing data in a register, or pulling data from RAM. These instructions arrive from the CPU’s dispatch engine (more on this later), which itself fetches instructions from the running program. A very basic scalar processor will fetch and dispatch one instruction, execute on one unit, and repeat.
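The fetch, dispatch, execute loop of a basic scalar processor can be sketched in a few lines. This is a toy model, not how any real core is built: the instruction names (LOAD, ADD, MUL, STORE), the tuple layout, and the one-cycle-per-instruction cost are all assumptions made for illustration.

```python
def run_scalar(program, memory):
    """Run a toy program one instruction at a time; return (registers, cycles)."""
    registers = {}
    cycles = 0
    for op, dest, a, b in program:      # fetch the next instruction in program order
        if op == "LOAD":                # load-store unit: memory -> register
            registers[dest] = memory[a]
        elif op == "ADD":               # ALU: add two registers
            registers[dest] = registers[a] + registers[b]
        elif op == "MUL":               # ALU: multiply two registers
            registers[dest] = registers[a] * registers[b]
        elif op == "STORE":             # load-store unit: register -> memory
            memory[dest] = registers[a]
        else:
            raise ValueError(f"unknown instruction: {op}")
        cycles += 1                     # assumption: every instruction takes one cycle
    return registers, cycles


memory = {"a": 5, "b": 10}
program = [
    ("LOAD", "r1", "a", None),
    ("LOAD", "r2", "b", None),
    ("ADD", "r3", "r1", "r2"),
    ("STORE", "out", "r3", None),
]
registers, cycles = run_scalar(program, memory)
print(memory["out"], cycles)
```

Only one unit does any work in each cycle here, which is exactly the inefficiency that wider designs try to remove.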

In-order CPUs go carefully step by step, while out-of-order is faster but hard to keep on top of.

A superscalar processor can dispatch multiple instructions to multiple execution units at once (often quoted as instructions per cycle or IPC), improving its performance and optimizing power efficiency by keeping units as active as possible. In theory, superscalar processors can crunch through a program faster if there are more execution units to use. This is often what’s referred to as processor “width.” However, there are caveats to this idea.

For starters, there’s a limit on realistic processor width based on the workload you’re running. You could stuff five FPUs into a CPU core, but they’d just sit there wasting power (and silicon area) when opening a web browser. A good CPU is optimized for purpose; there’s a reason GPUs are built differently from CPUs, after all. Furthermore, there’s a limit on the number of instructions that it’s feasible to dispatch at once based on the program’s code. Consider the basic code example below:

x = 5 * 10

y = 23 + 89

z = x * y

While we could calculate x and y simultaneously on two ALUs, we can’t compute z until after x and y, regardless of how many execution units we have. A third execution unit would be wasted here (aka stalled) unless there’s another operation somewhere else in our code we could run while we’re waiting. This is where out-of-order (OoO) execution comes into play.
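To see the dependency limit in action, here is a minimal sketch that counts how many cycles the three-line example needs on cores with different numbers of execution units. The function name and the assumption that every operation takes exactly one cycle are ours, purely for illustration.

```python
def cycles_needed(deps, units):
    """Count cycles to run every instruction, issuing at most `units` per cycle.

    `deps` maps each instruction name to the names it must wait for.
    Assumption: every instruction completes in a single cycle.
    """
    done = set()
    remaining = list(deps)
    cycles = 0
    while remaining:
        # An instruction is ready once everything it depends on has finished.
        ready = [n for n in remaining if all(d in done for d in deps[n])]
        if not ready:
            raise ValueError("circular dependency")
        issued = ready[:units]
        done.update(issued)
        remaining = [n for n in remaining if n not in issued]
        cycles += 1
    return cycles


example = {"x": [], "y": [], "z": ["x", "y"]}
for units in (1, 2, 3):
    print(units, "unit(s):", cycles_needed(example, units), "cycles")
```

With one unit, the example takes three cycles; with two, it takes two. Adding a third unit changes nothing, because z still has to wait for both x and y.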

Although a program is essentially a long list of instructions, it doesn’t always have to be executed in the order it’s written. Take our previous example; it makes no difference when we calculate x or y, as long as they’re done before z. The core concept of OoO is to fetch and buffer a chunk of upcoming instructions in memory, then execute them as soon as an execution unit is free to avoid stalling the processor.

Modern superscalar processors run fast but consume silicon space and power.

There’s a complication, though: the CPU must track the status of every buffered instruction. This is the job of a re-order buffer, and its size determines the maximum number of instructions in flight. Once again, though, the looming specter of a power and silicon budget reins in what’s feasible. Storing instructions and data for OoO requires a fast local cache of memory, which doesn’t come cheap. Still, we’ve seen big CPU cores use increasingly large re-order buffers in recent years (likely helped by smaller manufacturing nodes), allowing CPUs to scale up their execution cores and run at full tilt.
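The effect of re-order buffer size can be sketched with a toy simulation. This is a heavily simplified model under our own assumptions: instructions enter the buffer in program order, any ready instruction in the buffer may issue, and a slot is only freed when the oldest instruction has finished. The program, latencies, and function name are hypothetical.

```python
def cycles_to_finish(program, window_size, units):
    """Count cycles to retire `program`, a list of (name, deps, latency) tuples.

    Assumes every dependency names an earlier instruction in the list.
    """
    if window_size < 1 or units < 1:
        raise ValueError("window_size and units must be at least 1")
    finish = {}      # name -> cycle when its result becomes available
    window = []      # in-flight instructions in program order (the re-order buffer)
    next_fetch = 0
    retired = 0
    cycle = 0
    while True:
        # Retire finished instructions from the head, strictly in program order.
        while window and finish.get(window[0][0], float("inf")) <= cycle:
            window.pop(0)
            retired += 1
        if retired == len(program):
            return cycle
        # Fetch upcoming instructions into any free buffer slots.
        while next_fetch < len(program) and len(window) < window_size:
            window.append(program[next_fetch])
            next_fetch += 1
        # Issue up to `units` ready instructions, oldest first.
        issued = 0
        for name, deps, latency in window:
            if issued == units:
                break
            if name in finish:
                continue  # already issued on an earlier cycle
            if all(finish.get(d, float("inf")) <= cycle for d in deps):
                finish[name] = cycle + latency
                issued += 1
        cycle += 1


# A slow load followed by independent work, then an instruction that needs the load.
program = [
    ("load", [], 4),
    ("a", [], 1),
    ("b", [], 1),
    ("c", [], 1),
    ("use", ["load"], 1),
]
for size in (1, 2, 4, 8):
    print("buffer of", size, "->", cycles_to_finish(program, size, units=2), "cycles")
```

With two execution units, this toy program takes 8 cycles with a one-entry buffer, 6 with two entries, and 5 with four. Going to eight entries gains nothing more, because the program simply runs out of independent work to hide the slow load behind.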

What was that about instruction dispatch?

OK, so we have a beefy execution core and a nice out-of-order buffer to keep it busy, but how do the necessary instructions make their way into the buffer? This is where the dispatch (front) end of the CPU comes in, which can get a little complicated. But don’t worry; we’ve got the foundations behind us now, and they will tie this all together nicely.

The basics are simple enough. A fetch engine grabs instructions from RAM, instruction caches hold these instructions for processing, a decoder breaks complex instructions down, and a dispatch engine fires these instructions out to the execution engines for processing. Likewise, data is grabbed in a similar manner and held in a data cache.

The implementation is more complex. First, dispatch width can vary, which ideally should be paired with the capabilities of the execution units and the CPU’s OoO nature. There’s also a very complex relationship between instruction fetching, branch prediction, and latency. A lot of modern CPU secret sauce boils down to optimal prefetch patterns to quickly scan through RAM, optimizing for looping code, and branch prediction accuracy. That’s all well beyond the scope of this article, as it’s the “dark art” of modern CPU design.

A final word on branch prediction, though. With modern OoO CPUs, speculatively executing the predicted side of a branch (IF this, ELSE that) ahead of time leads to big performance gains, but there’s always the risk that the CPU will pick the wrong branch. In this case, the instructions already dispatched down the wrong path have to be flushed from the pipeline and the process restarted, wasting CPU performance and power. This is why branch prediction is such an important aspect of modern CPU design, especially with today’s huge re-order buffer sizes. As such, smaller execution windows and even in-order CPU designs still have a place, particularly where lower power is concerned.
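To give a feel for why prediction accuracy matters, here is a sketch of a two-bit saturating counter, a classic textbook predictor. It is not the scheme used by any of the CPUs mentioned in this article, whose predictors are far more sophisticated and largely undisclosed. The 12-cycle flush penalty is a made-up figure for illustration.

```python
def mispredictions(outcomes, counter=2):
    """Count wrong guesses from a two-bit saturating counter.

    Counter states 0 and 1 predict "not taken"; states 2 and 3 predict "taken".
    """
    misses = 0
    for taken in outcomes:
        predicted_taken = counter >= 2
        if predicted_taken != taken:
            misses += 1
        # Nudge the counter toward the actual outcome, saturating at 0 and 3.
        counter = min(counter + 1, 3) if taken else max(counter - 1, 0)
    return misses


FLUSH_PENALTY_CYCLES = 12  # hypothetical cost of one pipeline flush

# A loop branch: taken nine times, then falls through, repeated ten times.
loop_branch = ([True] * 9 + [False]) * 10
# A branch that alternates every time, which this simple predictor handles badly.
alternating = [True, False] * 50

for label, pattern in (("loop", loop_branch), ("alternating", alternating)):
    misses = mispredictions(pattern)
    print(label, "misses:", misses, "wasted cycles:", misses * FLUSH_PENALTY_CYCLES)
```

Across 100 branches each, the loop pattern is mispredicted 10 times while the alternating pattern is mispredicted 50 times. Under the assumed penalty, that is the difference between 120 and 600 wasted cycles, before counting the power spent on work that gets thrown away.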

Why do we have multi-cluster CPUs?

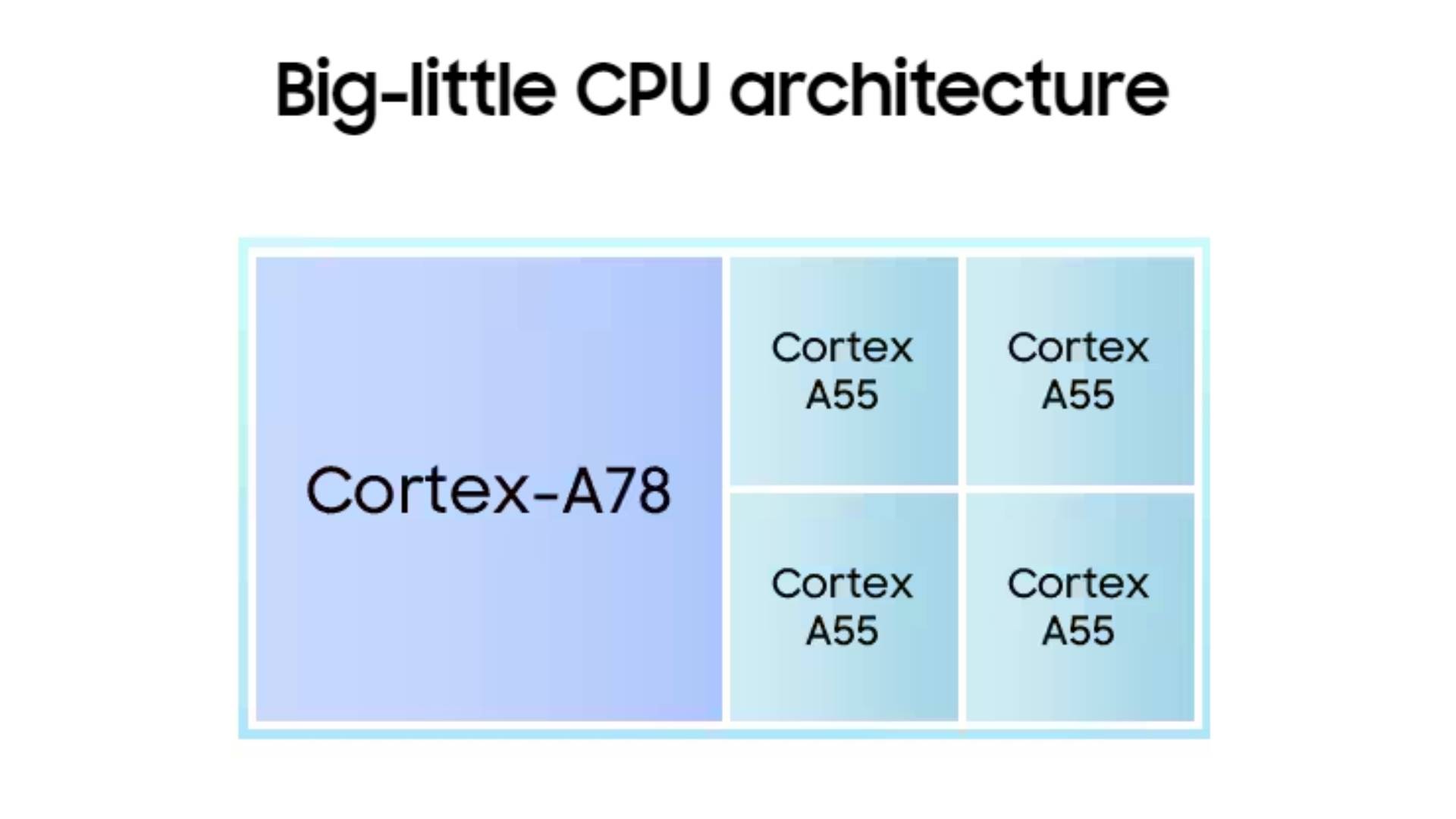

If you look at current mobile, laptop, and even desktop chipsets, you’ll see tiered clusters featuring different CPU cores within the same chip. Arm’s big.LITTLE (now DynamIQ) popularized the idea, but Apple, AMD, and Intel have their own takes on scalable performance and efficiency across CPU cores. This idea takes all the principles we outlined above and mixes and matches the benefits of bigger, wider, and more powerful cores with smaller, less powerful cores featuring less memory but more efficient designs. The reason for this is simple: to have both maximum performance and best-in-class power efficiency in the same system on a chip.

Broadly speaking, modern mobile applications want at least one or perhaps two powerhouse cores to ensure responsive app launch performance and to burst through those occasional, more complex tasks. However, those big powerhouse cores are overkill for the majority of workloads your apps require on a daily basis. You can browse the web, send Facebook messages, and even play the latest mobile games comfortably on lower-power CPU cores, with the added benefit of longer battery life and a cheaper chip that doesn’t require as much silicon area.

This is why we typically see just one or two large CPU cores in modern mobile designs, accompanied by lower-power middle and/or efficiency cores. However, the appeal of the latter appears to be diminishing as mid-tier cores become increasingly power efficient, aided by cutting-edge manufacturing processes. A low-power, in-order CPU core for background tasks isn’t as necessary as it once was, at least in flagship phones. It’s still a popular setup for more affordable chipsets that don’t benefit from the latest and most expensive manufacturing nodes.

Apple vs Arm vs Qualcomm… and more

This wide range of CPU development options has given rise to many of the industry’s most hotly debated rivalries. Why are Apple’s mobile processors faster than Google’s? Is Exynos as good as Snapdragon? And, more recently, should you use an Arm or x64 PC?

Historically, Apple has led in raw mobile CPU performance, owing to its custom Arm-based designs that have opted for bigger, wider performance cores paired with just four efficiency cores. Meanwhile, chipsets used by Android smartphones have often had eight or ten cores split into three clusters. Their biggest cores haven’t quite rivaled Apple’s behemoths, but the sheer core count has kept the gap relatively small when it comes to modern multi-threaded applications. Likewise, Android has benefited from solid power efficiency, thanks to those small cores, while iPhones have had a less reliable reputation.

CPU performance in the Android space has typically been a closer-run affair, with silicon manufacturers tweaking off-the-shelf Arm CPU components, meaning much smaller differences between models. However, that has changed in recent years, with Google’s Tensor opting for older CPU parts and MediaTek’s Dimensity flagships moving to bigger core designs. Things are set to change once again with the arrival of the next Snapdragon chip, which sees Qualcomm return to custom mobile CPU design after many years away, tracking a course that’s much closer to Apple than to other chipsets you’ll find in the Android sphere.

If you found this article interesting, there’s even more intrigue to come as we approach 2025 and a whole new raft of flagship Android smartphones. Plus, next time someone says, “Ah, but this chip has 0.2GHz more, so it must be faster,” you’ll be well-placed to tell them exactly why they’re wrong.