Recognize text, faces and landmarks: Adding Machine Learning to your Android apps

Published on April 7, 2019

Machine learning (ML) can help you create innovative, compelling and unique experiences for your mobile users.

Once you’ve mastered ML, you can use it to create a wide range of applications, including apps that automatically organize photos based on their subject matter, identify and track a person’s face across a livestream, extract text from an image, and much more.

But ML isn’t exactly beginner-friendly! If you want to enhance your Android apps with powerful machine learning capabilities, where exactly do you start?

In this article, I’ll provide an overview of an SDK (Software Development Kit) that promises to put the power of ML at your fingertips, even if you have zero ML experience. By the end of this article, you’ll have the foundation you need to start creating intelligent, ML-powered apps that are capable of labelling images, scanning barcodes, recognizing faces and famous landmarks, and performing many other powerful ML tasks.

Meet Google’s Machine Learning Kit

With the release of technologies such as TensorFlow and Cloud Vision, ML is becoming more widely used, but these technologies aren’t for the faint of heart! You’ll typically need a deep understanding of neural networks and data analysis just to get started with a technology such as TensorFlow.

Even if you do have some experience with ML, creating a machine learning-powered mobile app can be a time-consuming, complex and expensive process, requiring you to source enough data to train your own ML models, and then optimize those ML models to run efficiently in the mobile environment. If you’re an individual developer, or have limited resources, then it may not be possible to put your ML knowledge into practice.

ML Kit is Google’s attempt to bring machine learning to the masses.

Under the hood, ML Kit bundles together several powerful ML technologies that would typically require extensive ML knowledge, including Cloud Vision, TensorFlow, and the Android Neural Networks API. ML Kit combines these specialist ML technologies with pre-trained models for common mobile use cases, including extracting text from an image, scanning a barcode, and identifying the contents of a photo.

Regardless of whether you have any previous knowledge of ML, you can use ML Kit to add powerful machine learning capabilities to your Android and iOS apps – just pass some data to the correct part of ML Kit, such as the Text Recognition or Language Identification API, and this API will use machine learning to return a response.

How do I use the ML Kit APIs?

ML Kit is divided into several APIs that are distributed as part of the Firebase platform. To use any of the ML Kit APIs, you’ll need to create a connection between your Android Studio project and a corresponding Firebase project, and then communicate with Firebase.

Most of the ML Kit models are available as on-device models that you can download and use locally, but some models are also available in the cloud, which allows your app to perform ML-powered tasks over the device’s internet connection.

Each approach has its own unique set of strengths and weaknesses, so you’ll need to decide whether local or remote processing makes the most sense for your particular app. You could even add support for both models, and then allow your users to decide which model to use at runtime. Alternatively, you might configure your app to select the best model for the current conditions, for example only using the cloud-based model when the device is connected to Wi-Fi.
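To make the "select the best model for the current conditions" idea more concrete, here’s a minimal sketch in plain Java. It doesn’t call the ML Kit SDK at all: `ModelSource`, `ModelSelector` and the three boolean inputs are hypothetical names I’ve made up for illustration, and in a real app you’d need to read the connectivity state and the user’s preference yourself.

```java
// Hypothetical helper: none of these names come from the ML Kit SDK.
enum ModelSource { ON_DEVICE, CLOUD }

final class ModelSelector {
    private ModelSelector() {}

    /**
     * Picks a model for the current conditions.
     *
     * @param hasInternet     whether the device currently has any connection
     * @param onWifi          whether that connection is Wi-Fi
     * @param userAllowsCloud a user preference, for example from a settings screen
     */
    static ModelSource choose(boolean hasInternet, boolean onWifi, boolean userAllowsCloud) {
        // Only use the cloud model when the user has opted in and Wi-Fi is available.
        if (userAllowsCloud && hasInternet && onWifi) {
            return ModelSource.CLOUD;
        }
        // In every other case, fall back to the on-device model, which always works.
        return ModelSource.ON_DEVICE;
    }
}
```

Defaulting to the on-device model means the feature keeps working offline, and the cloud model is treated as an optional upgrade rather than a requirement.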

If you opt for the local model, then your app’s machine learning features will always be available, regardless of whether the user has an active Internet connection. Since all the work is performed locally, on-device models are ideal when your app needs to process large amounts of data quickly, for example if you’re using ML Kit to manipulate a live video stream.

Meanwhile, cloud-based models typically provide greater accuracy than their on-device counterparts, as the cloud models leverage the power of Google Cloud Platform’s machine learning technology. For example, the Image Labeling API’s on-device model includes 400 labels, but the cloud model features over 10,000 labels.

Depending on the API, some functionality may only be available in the cloud. For example, the Text Recognition API can only identify non-Latin characters if you use its cloud-based model.

The cloud-based APIs are only available for Blaze-level Firebase projects, so you’ll need to upgrade to a pay-as-you-go Blaze plan before you can use any of ML Kit’s cloud models.

If you do decide to explore the cloud models, then at the time of writing, there was a free quota available for all ML Kit APIs. If you just wanted to experiment with cloud-based Image Labeling, then you could upgrade your Firebase project to the Blaze plan, test the API on fewer than 1,000 images, and then switch back to the free Spark plan, without being charged. However, terms and conditions have a nasty habit of changing over time, so be sure to read the small print before upgrading to Blaze, just to make sure you don’t get hit by any unexpected bills!

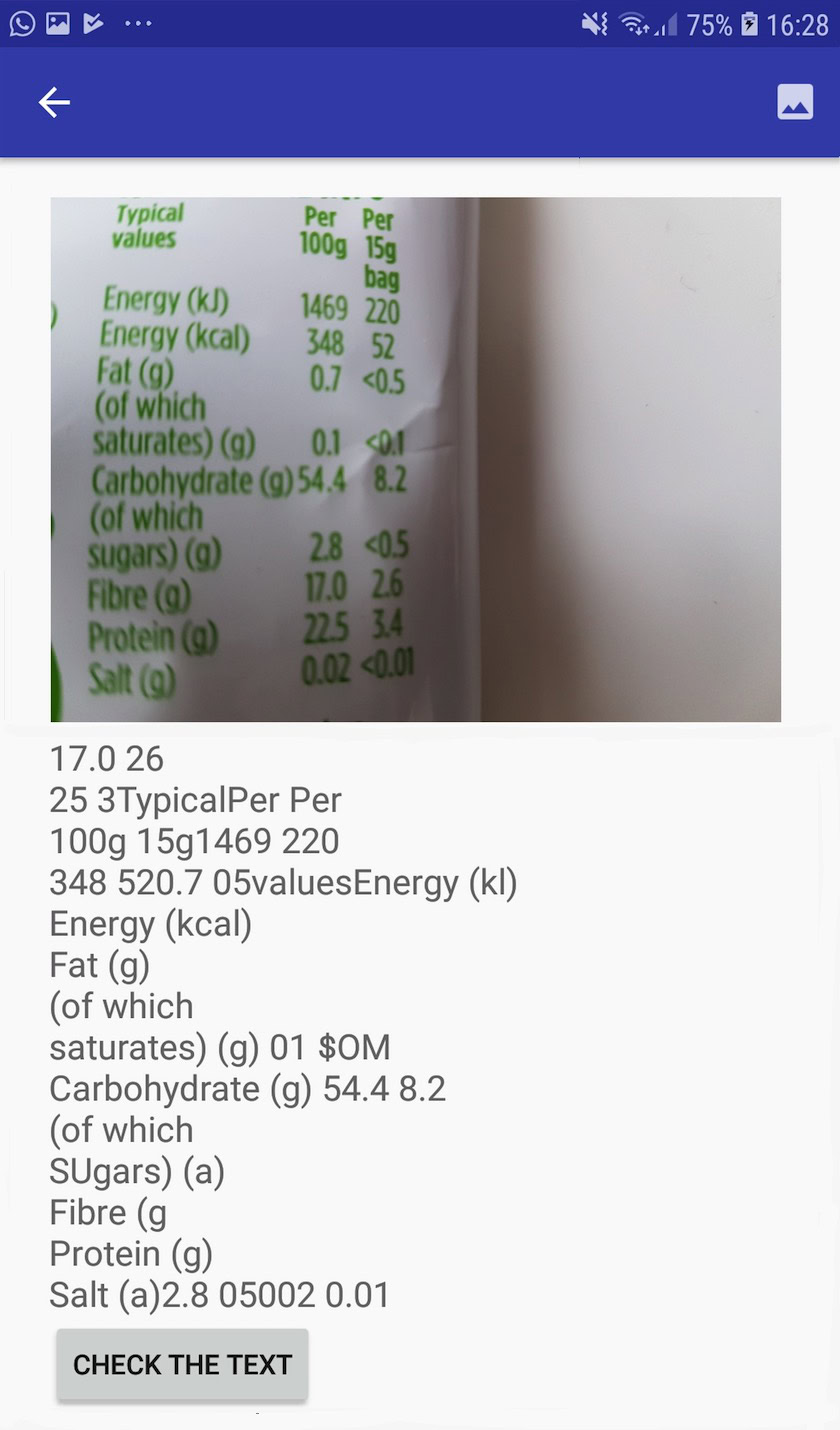

Identify text in any image, with the Text Recognition API

The Text Recognition API can intelligently identify, analyze and process text.

You can use this API to create applications that extract text from an image, so your users don’t have to waste time on tedious manual data entry. For example, you might use the Text Recognition API to help your users extract and record the information from receipts, invoices, business cards, or even nutritional labels, simply by taking a photo of the item in question.

You could even use the Text Recognition API as the first step in a translation app, where the user takes a photo of some unfamiliar text and the API extracts all the text from the image, ready to be passed to a translation service.

ML Kit’s on-device Text Recognition API can identify text in any Latin-based language, while its cloud-based counterpart can recognize a greater variety of languages and characters, including Chinese, Japanese, and Korean characters. The cloud-based model is also optimized to extract sparse text from images and text from densely-packed documents, which you should take into account when deciding which model to use in your app.

Want some hands-on experience with this API? Then check out our step-by-step guide to creating an application that can extract the text from any image, using the Text Recognition API.

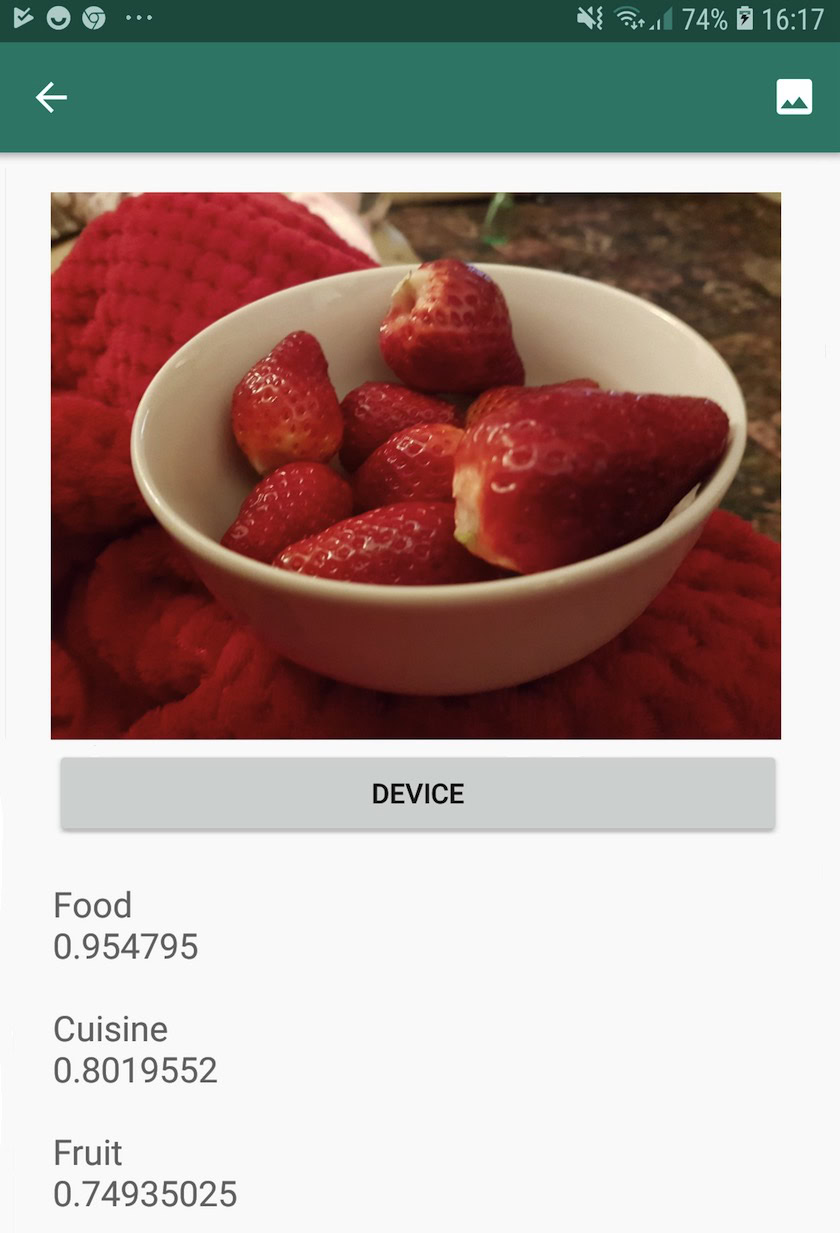

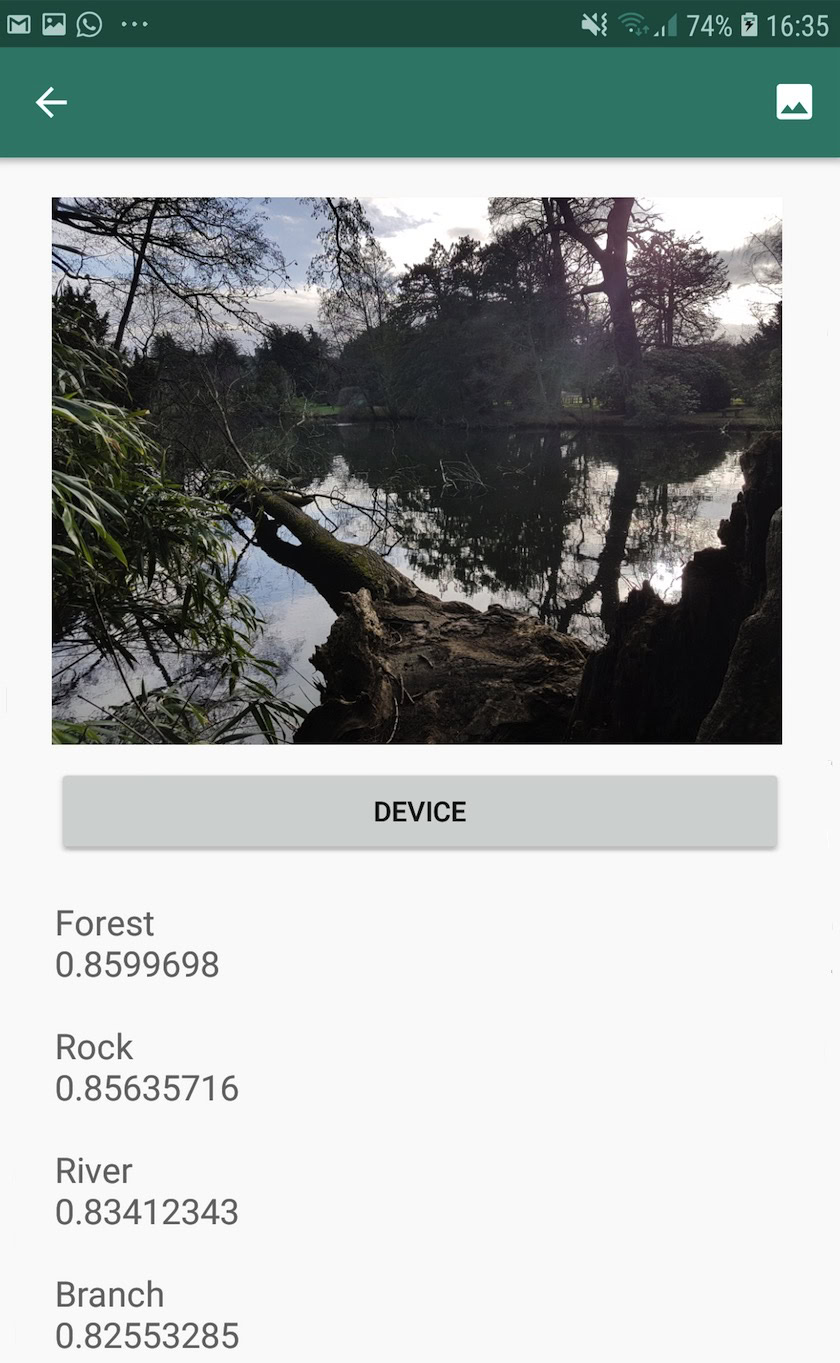

Understanding an image’s content: the Image Labeling API

The Image Labeling API can recognize entities in an image, including locations, people, products and animals, without the need for any additional contextual metadata. The Image Labeling API will return information about the detected entities in the form of labels. For example, in the following screenshot I’ve provided the API with a nature photo, and it’s responded with labels such as “Forest” and “River.”

This ability to recognize an image’s contents can help you create apps that tag photos based on their subject matter, build filters that automatically identify inappropriate user-submitted content and remove it from your app, or provide the basis for advanced search functionality.

Many of the ML Kit APIs return multiple possible results, complete with accompanying confidence scores – including the Image Labeling API. If you pass Image Labeling a photo of a poodle, then it might return labels such as “poodle,” “dog,” “pet” and “small animal,” all with varying scores indicating the API’s confidence in each label. Hopefully, in this scenario “poodle” will have the highest confidence score!

You can use this confidence score to create a threshold that must be met before your application acts on a particular label, for example by displaying it to the user or tagging a photo with this label.
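As a rough illustration of this kind of threshold, the following plain-Java sketch filters a list of labels by confidence. `ScoredLabel` and `LabelFilter` are hypothetical stand-ins rather than ML Kit classes, and I’m assuming that confidence is reported as a value between 0.0 and 1.0.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a label returned by an image labeler.
final class ScoredLabel {
    final String text;
    final float confidence; // assumed to range from 0.0 to 1.0

    ScoredLabel(String text, float confidence) {
        this.text = text;
        this.confidence = confidence;
    }
}

final class LabelFilter {
    private LabelFilter() {}

    /** Returns the text of every label at or above the threshold, in input order. */
    static List<String> confidentLabels(List<ScoredLabel> labels, float threshold) {
        List<String> accepted = new ArrayList<>();
        for (ScoredLabel label : labels) {
            if (label.confidence >= threshold) {
                accepted.add(label.text);
            }
        }
        return accepted;
    }
}
```

The right threshold depends on your app: a photo-tagging feature can probably tolerate a lower value than a filter that removes user content.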

Image Labeling is available both on-device and in the cloud, although if you opt for the cloud model then you’ll get access to over 10,000 labels, compared to the 400 labels that are included in the on-device model.

For a more in-depth look at the Image Labeling API, check out Determine an image’s content with machine learning. In this article, we build an application that processes an image, and then returns the labels and confidence scores for each entity detected within that image. We also implement on-device and cloud models in this app, so you can see exactly how the results differ, depending on which model you opt for.

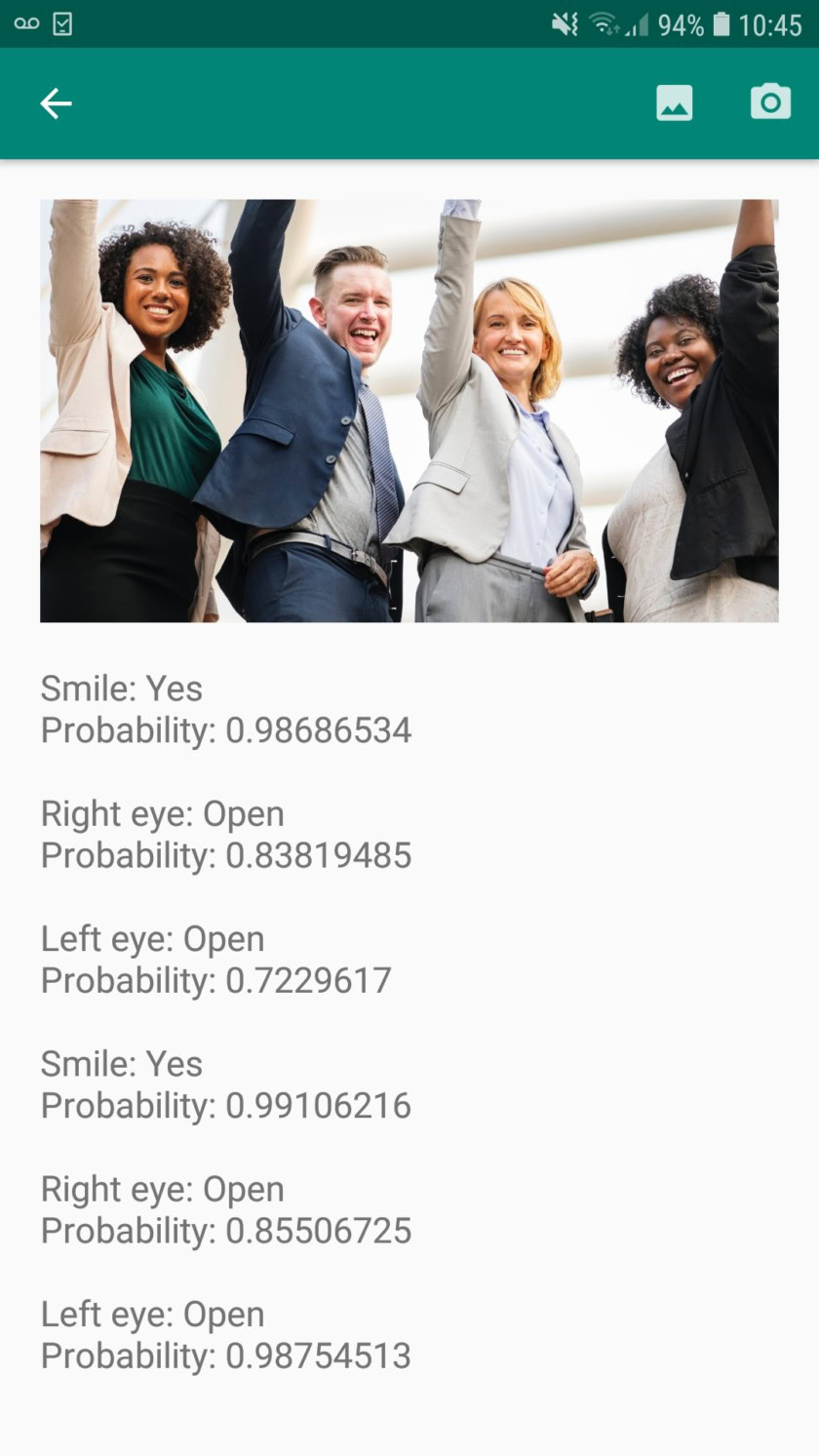

Understanding expressions and tracking faces: the Face Detection API

The Face Detection API can locate human faces in photos, videos and live streams, and then extract information about each detected face, including its position, size and orientation.

You could use this API to help users edit their photos, for example by automatically cropping all the empty space around their latest headshot.

The Face Detection API isn’t limited to images – you can also apply this API to videos. For example, you might create an app that identifies all the faces in a video feed and then blurs everything except those faces, similar to Skype’s background blur feature.

Face detection is always performed on-device, where it’s fast enough to be used in real-time, so unlike the majority of ML Kit’s APIs, Face Detection does not include a cloud model.

In addition to detecting faces, this API has a few extra features that are worth exploring. Firstly, the Face Detection API can identify facial landmarks, such as eyes, lips, and ears, and then retrieve the exact coordinates for each of these landmarks. This landmark recognition provides you with an accurate map of each detected face – perfect for creating augmented reality (AR) apps that add Snapchat-style masks and filters to the user’s camera feed.

The Face Detection API also offers facial classification. Currently, ML Kit supports two facial classifications: eyes open, and smiling.

You could use this classification as the basis for accessibility services, such as hands-free controls, or to create games that respond to the player’s facial expression. The ability to detect whether someone is smiling or has their eyes open can also come in handy if you’re creating a camera app – after all, there’s nothing worse than taking a bunch of photos, only to later discover that someone had their eyes closed in every single shot.
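For the camera app scenario, a minimal sketch might look something like the following. It assumes you’ve already copied each face’s classification results into a simple holder class: `FaceScores` and `ShotPicker` are hypothetical names, and the probabilities are assumed to range from 0.0 to 1.0.

```java
import java.util.List;

// Hypothetical holder for one face's classification results.
final class FaceScores {
    final float leftEyeOpen;
    final float rightEyeOpen;
    final float smiling;

    FaceScores(float leftEyeOpen, float rightEyeOpen, float smiling) {
        this.leftEyeOpen = leftEyeOpen;
        this.rightEyeOpen = rightEyeOpen;
        this.smiling = smiling;
    }
}

final class ShotPicker {
    private ShotPicker() {}

    /** True if the frame has at least one face and every face has both eyes open and is smiling. */
    static boolean everyoneLooksGood(List<FaceScores> faces, float minProbability) {
        if (faces.isEmpty()) {
            return false; // a frame without faces is not a usable portrait
        }
        for (FaceScores face : faces) {
            if (face.leftEyeOpen < minProbability
                    || face.rightEyeOpen < minProbability
                    || face.smiling < minProbability) {
                return false;
            }
        }
        return true;
    }

    /** Returns the index of the first frame where everyone looks good, or -1 if there is none. */
    static int firstGoodShot(List<List<FaceScores>> frames, float minProbability) {
        for (int i = 0; i < frames.size(); i++) {
            if (everyoneLooksGood(frames.get(i), minProbability)) {
                return i;
            }
        }
        return -1;
    }
}
```

You could run a check like this over a burst of photos and only keep the first frame that passes.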

Finally, the Face Detection API includes a face-tracking component, which assigns an ID to a face and then tracks that face across multiple consecutive images or video frames. Note that this is face tracking and not true facial recognition. Behind the scenes, the Face Detection API is tracking the position and motion of the face and then inferring that this face likely belongs to the same person, but it’s ultimately unaware of the person’s identity.

Try the Face Detection API for yourself! Find out how to build a face-detecting app with machine learning and Firebase ML Kit.

Barcode Scanning with Firebase and ML

Barcode Scanning may not sound as exciting as some of the other machine learning APIs, but it’s one of the most accessible parts of ML Kit.

Scanning a barcode doesn’t require any specialist hardware or software, so you can use the Barcode Scanning API while ensuring your app remains accessible to as many people as possible, including users on older or budget devices. As long as a device has a functioning camera, it should have no problems scanning a barcode.

ML Kit’s Barcode Scanning API can extract a wide range of information from printed and digital barcodes, which makes it a quick, easy and accessible way to pass information from the real world to your application without users having to perform any tedious manual data entry.

There are nine different data types that the Barcode Scanning API can recognize and parse from a barcode:

- TYPE_CALENDAR_EVENT. This contains information such as the event’s location, organizer, and its start and end time. If you’re promoting an event, then you might include a printed barcode on your posters or flyers, or feature a digital barcode on your website. Potential attendees can then extract all the information about your event, simply by scanning its barcode.

- TYPE_CONTACT_INFO. This data type covers information such as the contact’s email address, name, phone number, and title.

- TYPE_DRIVER_LICENSE. This contains information such as the street, city, state, name, and date of birth associated with the driver’s license.

- TYPE_EMAIL. This data type includes an email address, plus the email’s subject line, and body text.

- TYPE_GEO. This contains the latitude and longitude for a specific geo point, which is an easy way to share a location with your users, or for them to share their location with others. You could even potentially use geo barcodes to trigger location-based events, such as displaying some useful information about the user’s current location, or as the basis for location-based mobile games.

- TYPE_PHONE. This contains the telephone number and the number’s type, for example whether it’s a work or a home telephone number.

- TYPE_SMS. This contains some SMS body text and the phone number associated with the SMS.

- TYPE_URL. This data type contains a URL and the URL’s title. Scanning a TYPE_URL barcode is much easier than relying on your users to manually type a long, complex URL, without making any typos or spelling mistakes.

- TYPE_WIFI. This contains a Wi-Fi network’s SSID and password, plus its encryption type such as OPEN, WEP or WPA. A Wi-Fi barcode is one of the easiest ways to share Wi-Fi credentials, while also completely removing the risk of your users entering this information incorrectly.
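Once your app knows which of these data types it’s dealing with, it can decide what to offer the user. The following sketch shows one way you might route each type to an action. Note that `BarcodeValueType` is a hypothetical enum that simply mirrors the nine types listed above, not the SDK’s own constants, and the action strings are placeholders.

```java
// Hypothetical enum mirroring the nine data types listed above.
enum BarcodeValueType {
    CALENDAR_EVENT, CONTACT_INFO, DRIVER_LICENSE, EMAIL, GEO, PHONE, SMS, URL, WIFI
}

final class BarcodeRouter {
    private BarcodeRouter() {}

    /** Maps a barcode's data type to the action the app could offer the user. */
    static String suggestedAction(BarcodeValueType type) {
        switch (type) {
            case CALENDAR_EVENT: return "Add event to calendar";
            case CONTACT_INFO:   return "Save contact";
            case DRIVER_LICENSE: return "Fill in ID form";
            case EMAIL:          return "Compose email";
            case GEO:            return "Open location in maps";
            case PHONE:          return "Dial number";
            case SMS:            return "Compose SMS";
            case URL:            return "Open link";
            case WIFI:           return "Join Wi-Fi network";
            default:
                throw new IllegalArgumentException("Unhandled type: " + type);
        }
    }
}
```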

The Barcode Scanning API can parse data from a range of different barcodes, including linear formats such as Codabar, Code 39, EAN-8, ITF, and UPC-A, and 2D formats like Aztec, Data Matrix, and QR Codes.

To make things easier for your end-users, this API scans for all supported barcodes simultaneously, and can also extract data regardless of the barcode’s orientation – so it doesn’t matter if the barcode is completely upside-down when the user scans it!

Machine Learning in the Cloud: the Landmark Recognition API

You can use ML Kit’s Landmark Recognition API to identify well-known natural and constructed landmarks within an image.

If you pass this API an image containing a famous landmark, then it’ll return the name of that landmark, the landmark’s latitude and longitude values, and a bounding box indicating where the landmark was discovered within the image.

You can use the Landmark Recognition API to create applications that automatically tag the user’s photos, or for providing a more customized experience, for example if your app recognizes that a user is taking photos of the Eiffel Tower, then it might offer some interesting facts about this landmark, or suggest similar, nearby tourist attractions that the user might want to visit next.
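To sketch the "nearby attractions" idea: once you have a landmark’s latitude and longitude, you could compare them against your own list of attractions. The example below uses the haversine formula, which is my choice for this illustration rather than anything ML Kit provides. `Place` and `NearbyAttractions` are hypothetical names, and the list of candidate attractions would have to come from your own data.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical holder for a named location.
final class Place {
    final String name;
    final double latitude;
    final double longitude;

    Place(String name, double latitude, double longitude) {
        this.name = name;
        this.latitude = latitude;
        this.longitude = longitude;
    }
}

final class NearbyAttractions {
    private static final double EARTH_RADIUS_KM = 6371.0; // mean Earth radius

    private NearbyAttractions() {}

    /** Great-circle distance between two places, using the haversine formula. */
    static double distanceKm(Place a, Place b) {
        double lat1 = Math.toRadians(a.latitude);
        double lat2 = Math.toRadians(b.latitude);
        double dLat = lat2 - lat1;
        double dLon = Math.toRadians(b.longitude - a.longitude);
        double h = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(lat1) * Math.cos(lat2) * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(h));
    }

    /** Names of all candidates within radiusKm of the recognized landmark. */
    static List<String> within(Place landmark, List<Place> candidates, double radiusKm) {
        List<String> nearby = new ArrayList<>();
        for (Place candidate : candidates) {
            if (distanceKm(landmark, candidate) <= radiusKm) {
                nearby.add(candidate.name);
            }
        }
        return nearby;
    }
}
```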

Unusually for ML Kit, the Landmark Recognition API is only available as a cloud-based API, so your application will only be able to perform landmark recognition when the device has an active Internet connection.

The Language Identification API: Developing for an international audience

Today, Android apps are used in every part of the world, by users who speak many different languages.

ML Kit’s Language Identification API can help your Android app appeal to an international audience, by taking a string of text and determining the language it’s written in. The Language Identification API can identify over a hundred different languages, including romanized text for Arabic, Bulgarian, Chinese, Greek, Hindi, Japanese, and Russian.

This API can be a valuable addition to any application that processes user-provided text, as this text rarely includes any language information. You might also use the Language Identification API in translation apps, as the first step to translating anything is knowing what language you’re working with! For example, if the user points their device’s camera at a menu, then your app might use the Language Identification API to determine that the menu is written in French, and then offer to translate this menu using a service such as the Cloud Translation API (perhaps after extracting its text using the Text Recognition API).

Depending on the string in question, the Language Identification API might return multiple potential languages, accompanied by confidence scores so that you can determine which detected language is most likely to be correct. Note that at the time of writing ML Kit couldn’t identify multiple different languages within the same string.
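Here’s a minimal sketch of how you might pick a winner from several candidate languages. `LanguageGuess` and `LanguagePicker` are hypothetical names rather than ML Kit classes, and I’m assuming confidence values between 0.0 and 1.0. When no candidate is confident enough, the sketch falls back to "und", the BCP 47 code for an undetermined language.

```java
import java.util.List;

// Hypothetical stand-in for one candidate language and its confidence score.
final class LanguageGuess {
    final String code;      // language code, for example "fr"
    final float confidence; // assumed to range from 0.0 to 1.0

    LanguageGuess(String code, float confidence) {
        this.code = code;
        this.confidence = confidence;
    }
}

final class LanguagePicker {
    static final String UNDETERMINED = "und"; // BCP 47 code for "undetermined"

    private LanguagePicker() {}

    /** Returns the code of the most confident guess, or "und" if none meets minConfidence. */
    static String mostLikely(List<LanguageGuess> guesses, float minConfidence) {
        LanguageGuess best = null;
        for (LanguageGuess guess : guesses) {
            if (best == null || guess.confidence > best.confidence) {
                best = guess;
            }
        }
        if (best == null || best.confidence < minConfidence) {
            return UNDETERMINED;
        }
        return best.code;
    }
}
```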

To ensure this API provides language identification in real time, the Language Identification API is only available as an on-device model.

Coming Soon: Smart Reply

Google plans to add more APIs to ML Kit in the future, but we already know about one up-and-coming API.

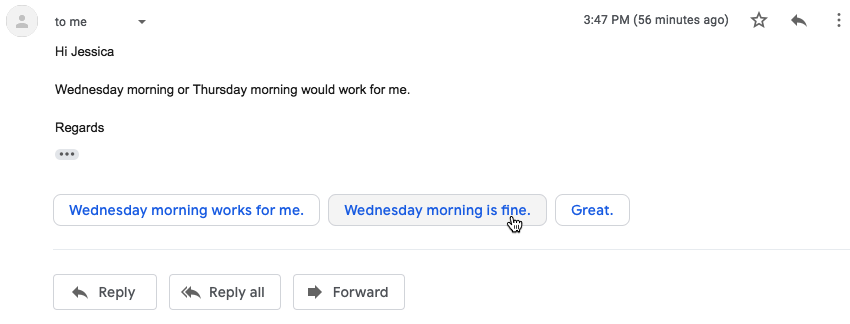

According to the ML Kit website, the upcoming Smart Reply API will allow you to offer contextual messaging replies in your applications, by suggesting snippets of text that fit the current context. Based on what we already know about this API, it seems that Smart Reply will be similar to the suggested-response feature already available in the Android Messages app, Wear OS, and Gmail.

The following screenshot shows how the suggested response feature currently looks in Gmail.

What’s next? Using TensorFlow Lite with ML Kit

ML Kit provides pre-built models for common mobile use cases, but at some point you may want to move beyond these ready-made models.

It’s possible to create your own ML models using TensorFlow Lite and then distribute them using ML Kit. However, just be aware that unlike ML Kit’s ready-made APIs, working with your own ML models does require a significant amount of ML expertise.

Once you’ve created your TensorFlow Lite models, you can upload them to Firebase and Google will then manage hosting and serving those models to your end-users. In this scenario, ML Kit acts as an API layer over your custom model, which simplifies some of the heavy lifting involved in using custom models. Most notably, ML Kit will automatically push the latest version of your model to your users, so you won’t have to update your app every single time you want to tweak your model.

To provide the best possible user experience, you can specify the conditions that must be met before your application will download new versions of your TensorFlow Lite model, for example only updating the model when the device is idle, charging, or connected to Wi-Fi. You can even use ML Kit and TensorFlow Lite alongside other Firebase services, for example using Firebase Remote Config and Firebase A/B Testing to serve different models to different sets of users.
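To show the logic behind these download conditions, here’s a small plain-Java sketch. It doesn’t use the Firebase SDK: `UpdateConditions` is a hypothetical class, and the device state is passed in as simple booleans that your app would need to supply.

```java
// Hypothetical description of when a model update is allowed to download.
final class UpdateConditions {
    final boolean requireWifi;
    final boolean requireCharging;
    final boolean requireIdle;

    UpdateConditions(boolean requireWifi, boolean requireCharging, boolean requireIdle) {
        this.requireWifi = requireWifi;
        this.requireCharging = requireCharging;
        this.requireIdle = requireIdle;
    }

    /** True if every required condition holds for the given device state. */
    boolean isSatisfiedBy(boolean onWifi, boolean charging, boolean idle) {
        return (!requireWifi || onWifi)
                && (!requireCharging || charging)
                && (!requireIdle || idle);
    }
}
```

A condition that isn’t required never blocks the download, so `new UpdateConditions(false, false, false)` would allow an update in any device state.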

If you want to move beyond pre-built models, or ML Kit’s existing models don’t quite meet your needs, then you can learn more about creating your own machine learning models over at the official Firebase docs.

Wrapping up

In this article, we looked at each component of Google’s ML Kit, and covered some common scenarios where you might want to use each of the ML Kit APIs.

Google is planning to add more APIs in the future, so which machine learning APIs would you like to see added to ML Kit next? Let us know in the comments below!