PC/Windows

Best lists, buying guides, and explainers on Windows and Windows-powered machines from ASUS, Dell, Lenovo, and more.

Reviews

Guides

How-to's

Features

All the latest

PC/Windows news

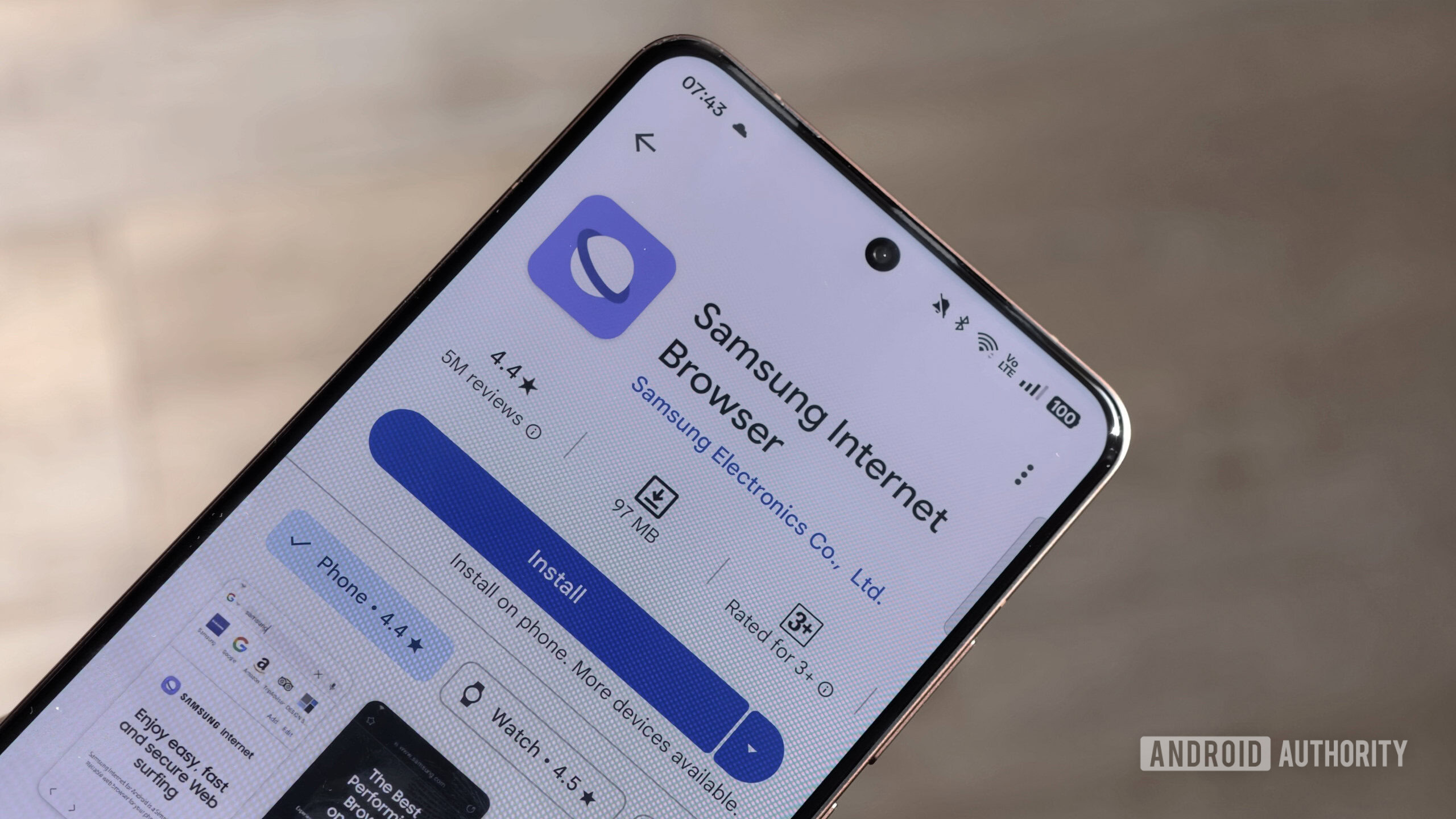

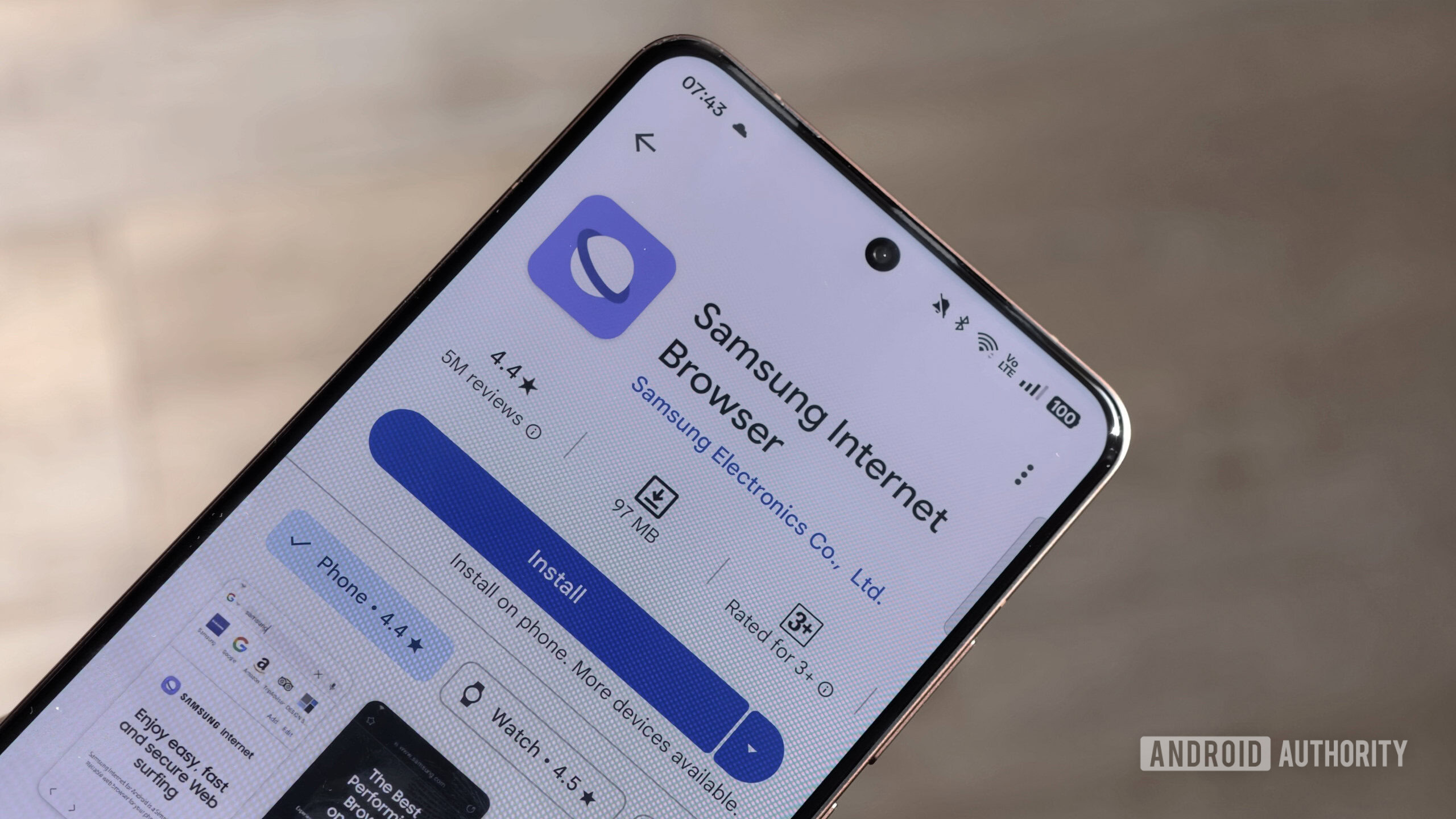

These 3 features made me drop Chrome for Samsung’s new PC browser

Andy Walker, November 10, 2025

How to run Android apps on Windows 11: Official and APK methods

Palash Volvoikar, November 9, 2025

I almost ditched Chrome for Samsung’s new PC browser, but one missing feature is stopping me

Zac Kew-Denniss, November 9, 2025

How to use Android apps on Windows 11 without the Play Store

Andy Walker, November 6, 2025

Why Apple should be terrified if Gemini comes to macOS

Karandeep Singh, October 16, 2025

There's already a big concern about Google's Android-powered PCs

Joe Maring, September 25, 2025

Is Bluestacks safe for PC? Here's what you need to know

Calvin Wankhede, September 8, 2025

The best Nintendo 3DS emulators for PC and Mac

Joe Hindy, August 12, 2025

7 features I want Chrome to steal from its rivals, and why

Dhruv Bhutani, August 9, 2025

One of the hottest new browsers is also the best thing that's happened to my YouTube experience

Adamya Sharma, July 27, 2025

This ultrawide Samsung gaming monitor just got a huge price drop!

Matt Horne, November 25, 2025

Google Chat is borrowing this handy shortcut from YouTube

Stephen Schenck, November 21, 2025

Android PCs may be coming, but not so soon

Tushar Mehta, November 11, 2025

Is uBlock Origin dead on Chrome? New update says yes, but here's how to get the ad blocker back

Aamir Siddiqui, November 5, 2025

First Android, now Quick Share gets a redesign on Windows

Hadlee Simons, November 4, 2025

Chrome's latest update will save you even more time when filling out tedious forms

Ryan McNeal, November 3, 2025

Samsung is finally making a PC version of its Android browser

Brady Snyder, October 29, 2025

Microsoft Surface Laptop 2025 drops to $799.99 in Prime Big Deal

Team AA, October 8, 2025

Google's brand-new Windows app has already hit a snag

Ryan McNeal, September 29, 2025

Qualcomm's new Snapdragon X2 Elite and X2 Elite Extreme could be the fastest chips for Windows

Aamir Siddiqui, September 24, 2025
