Affiliate links on Android Authority may earn us a commission.

Lytro's demise and the future of light field cameras

Published on June 16, 2018

The sudden but not completely unexpected demise of Lytro at the end of March, and Google’s acquisition of many of its employees (but apparently not its IP), have focused new attention on “light field” cameras, in particular on their future in the consumer tech market and how the technology might affect mobile devices.

Lytro was founded in 2006 and brought its first consumer camera to market six years later. Despite its impressive tech, the company had struggled in recent years as it shifted its attention toward virtual reality and 360-degree image capture.

The details of Google’s involvement are not yet clear, but the tech giant was already known to be involved in light-field R&D of its own. Apparently it’s adding a good deal of Lytro’s talent to that effort. But what exactly is a light field camera?


What can it do that ordinary cameras can’t, and how might this technology benefit mobile devices and their users in the future?

Lytro's original camera came with a price tag of $399, which explains at least in part why it never took off.

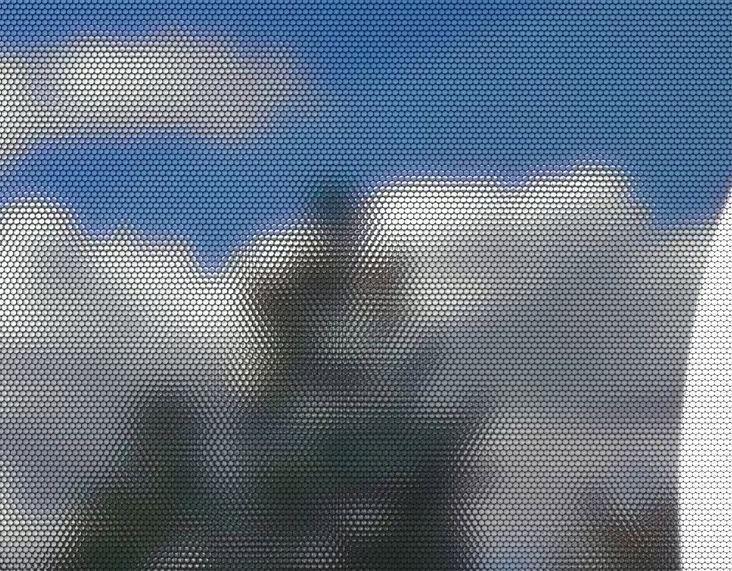

When Lytro’s first product, known simply as the “first generation camera,” was released, the main advantage claimed for the tech was the ability to refocus an image after it was taken. The images also contained some 3D information and could give an impression of depth as you changed the apparent point of view, even on a 2D screen. Lytro referred to these images as “living pictures,” and there was at least some novelty in their capabilities. The camera, a square tube about an inch and a half on each side and slightly under four and a half inches long, came with a price tag of $399.

This put it at about the same price as a smartphone, which was already becoming the preferred tool for casual photography. Of course, the Lytro just took pictures. Sure, it was a new kind of picture, but you couldn’t use it to play Candy Crush, watch YouTube, or even make calls. Its price also put it in competition with some fairly decent (albeit traditional) digital cameras with wider ranges of features — just not the 3D effects. Perhaps unsurprisingly, it never took off.

Lytro’s follow-up was the nearly $1,600 Illum. It offered higher resolution and a few more features, but it was also larger and didn’t deliver overall image quality on par with the professional or prosumer cameras its price and bulk now put it up against. As a result, it fared no better than the original. Today, both products can be found for a fraction of their original price.

So is the light field approach interesting, but ultimately a dead end? Just what is this light field stuff, anyway?


The basic idea isn’t new at all; light field capture was first proposed in 1908 by Nobel laureate physicist Gabriel Lippmann (who also contributed to early color photography). Lippmann called the technique “integral photography” and used an array of lenses to capture images of an object from several perspectives in a single exposure, on one sheet of film. When viewed through a similar lens array, Lippmann’s photographs provided a sense of depth similar to Lytro’s “living pictures” over a century later. However, the equipment both to take the photos and to view them was cumbersome, and the “integral photographs” weren’t much good for anything without the special viewing lenses. There was certainly no way to produce a 2D version with the refocusing capability Lytro later developed.

The fundamental technique behind these images really isn’t that complicated. What distinguishes a light field camera, also known as a plenoptic camera, is its ability to capture both the intensity and the direction of light rays crossing a given plane; together, these make up the “light field” at that plane. As we’ve discussed previously, a hologram also achieves this, though it does so by recording an interference pattern created by combining the image light field with a reference light beam, something that generally requires a laser and somewhat complex optics to pull off.
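A common way to write this down, borrowed from the light field research literature rather than from any Lytro documentation, is the two-plane parameterization: each ray is labeled by where it crosses the main lens plane (u, v) and the sensor plane (s, t). In the simplified form below, which drops constant and optical falloff factors, a conventional camera records only the integral of that four-dimensional function over the aperture, discarding exactly the directional information a plenoptic camera keeps.

```latex
% Light field: radiance along the ray through (u, v) on the lens and (s, t) on the sensor
L = L(u, v, s, t)

% Simplified conventional photograph: directions are summed away at each sensor point
E(s, t) = \iint_{\text{aperture}} L(u, v, s, t) \, du \, dv
```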

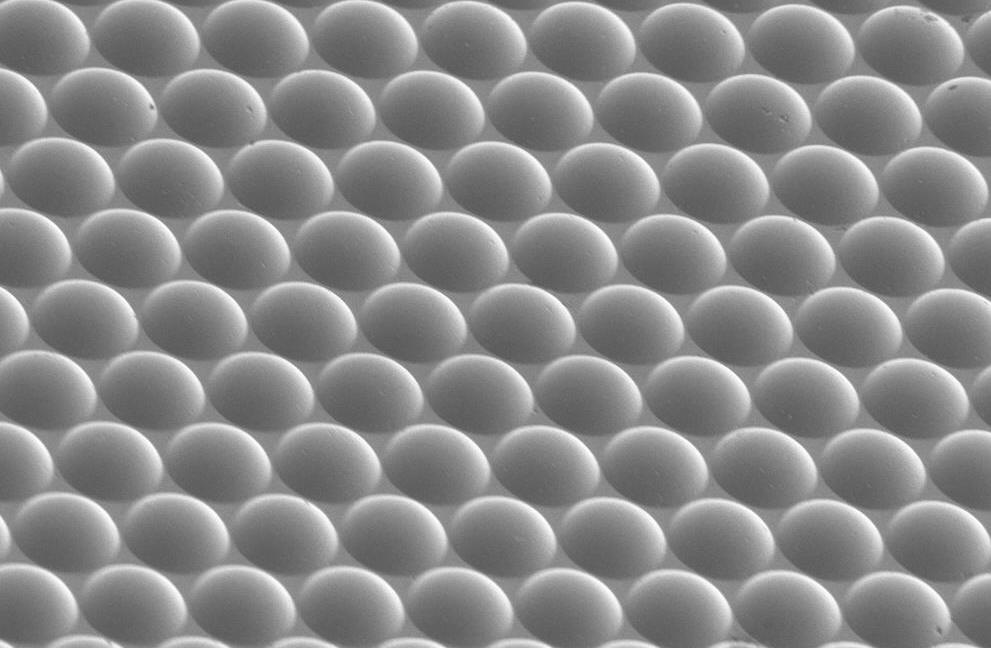

The light field camera uses an array of tiny “microlenses,” typically placed (as in Lytro’s design) between the main lens and the film or image sensor. As a result, many two-dimensional images are captured, each from a slightly different perspective. It’s almost as though you’d taken a number of conventional pictures while moving the camera up and down and side to side, except that the light field camera captures them all at the same time.
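To make the “many images at once” idea concrete, here is a minimal Python/NumPy sketch under a loudly idealized assumption: a square microlens grid perfectly aligned with the sensor, with each microlens covering exactly n x n pixels. The function name `lenslet_to_views` is hypothetical. Each pixel position under a microlens sees light from the same part of the main lens, so collecting that position from every microlens yields one perspective view (often called a sub-aperture image). Real cameras, Lytro’s included, first need calibration, resampling of non-square microlens grids, and demosaicing, none of which is shown here.

```python
import numpy as np


def lenslet_to_views(raw: np.ndarray, n: int) -> np.ndarray:
    """Rearrange an idealized lenslet image into sub-aperture views.

    Hypothetical model: square microlenses aligned with the pixel grid,
    each covering exactly n x n sensor pixels. Returns an array shaped
    (n, n, H // n, W // n), i.e. one (H // n) x (W // n) image per (u, v).
    """
    h, w = raw.shape
    if h % n or w % n:
        raise ValueError("sensor size must be a multiple of the microlens size")
    # blocks[s, u, t, v] == raw[s * n + u, t * n + v]
    blocks = raw.reshape(h // n, n, w // n, n)
    # Reorder to (u, v, s, t): fixing (u, v) picks the same pixel under
    # every microlens, which is one view through one part of the main lens.
    return blocks.transpose(1, 3, 0, 2)


# Toy 6 x 6 "sensor" with 3 x 3 pixels behind each microlens.
raw = np.arange(36).reshape(6, 6)
views = lenslet_to_views(raw, 3)
print(views.shape)  # (3, 3, 2, 2): nine views, each only 2 x 2 pixels
```

The reshape/transpose form is equivalent to the strided slice `raw[u::n, v::n]` for each view, but produces all views in one array.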

However, as the saying goes, there’s no such thing as a free lunch. The cost of capturing this additional data, which essentially adds depth information to the image, is a significant reduction in spatial (horizontal and vertical) resolution. The original Lytro camera used what was essentially an 11MP image sensor to deliver images with a final pixel count of 1,080 x 1,080. You could refocus them to different depths and add some perspective and parallax effects, but current processing can only go so far to improve that basic 2D resolution. Lytro’s later Illum camera offered greatly improved resolution, at four times the price, by using a 40MP sensor.
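The arithmetic behind that trade-off can be sketched in a few lines. The first calculation uses only the article’s own figures (treating “essentially 11MP” as 11,000,000 pixels). The second uses a hypothetical, idealized model in which each microlens becomes one output pixel and its n x n pixels become direction samples; the helper `spatial_resolution` and the 4,000 x 3,000 sensor are illustrative assumptions, and real pipelines interpolate, so their final sizes differ.

```python
def spatial_resolution(sensor_w: int, sensor_h: int, n: int) -> tuple[int, int]:
    """Idealized output size when each microlens covers n x n sensor pixels.

    Hypothetical model: one output pixel per microlens, with the n * n pixels
    under it spent on angular (direction) samples instead of spatial detail.
    """
    return sensor_w // n, sensor_h // n


# The article's numbers: an ~11MP sensor delivering 1,080 x 1,080 images.
sensor_pixels = 11_000_000
output_pixels = 1_080 * 1_080
ratio = sensor_pixels / output_pixels
print(f"{ratio:.1f} sensor pixels per output pixel")  # 9.4

# Hypothetical 12MP (4,000 x 3,000) sensor with 10 x 10 pixels per microlens.
print(spatial_resolution(4_000, 3_000, 10))  # (400, 300)
```

In this simplified model, every extra direction sample per microlens is paid for directly in output pixels, which is why a much larger sensor was needed for the Illum’s improvement.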


This technique sat on the shelf for over a century in part because of its cost. In the original film-based light field cameras, special lenses were needed not only to capture the picture but also to view it. In the modern digital incarnation of this technology, you never even see the raw image from the sensor.

Instead, the method needs fairly sophisticated software and image processing hardware to extract depth information from the multiple perspectives and present the result as a “refocusable” 2D image. The hardware and algorithms that make this possible didn’t even exist until the last decade, which is part of why the cameras cost so much.
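One classic way to do the refocusing step, described in the light field research literature, is “shift-and-add”: shift each perspective view in proportion to its offset from the central view, then average them. Objects whose parallax matches the chosen shift line up and come out sharp, while everything else blurs. The sketch below is that general technique, not Lytro’s actual pipeline; the function name, the integer-pixel shifts, and the synthetic test scene are all assumptions made to keep it short (`np.roll` also wraps around at the image edges, which a real implementation would avoid).

```python
import numpy as np


def refocus(views: np.ndarray, slope: int) -> np.ndarray:
    """Shift-and-add refocus over sub-aperture views shaped (n, n, H, W).

    `slope` is the assumed parallax, in whole pixels per view step, of the
    depth plane to bring into focus. Hypothetical helper for illustration.
    """
    n = views.shape[0]
    c = n // 2  # index of the central view
    acc = np.zeros(views.shape[2:], dtype=float)
    for u in range(n):
        for v in range(n):
            dy, dx = (u - c) * slope, (v - c) * slope
            # Undo this view's parallax for the chosen depth, then accumulate.
            acc += np.roll(views[u, v], shift=(-dy, -dx), axis=(0, 1))
    return acc / (n * n)


# Synthetic light field: a flat random scene seen from 5 x 5 viewpoints,
# with a hypothetical parallax of 2 pixels per view step.
rng = np.random.default_rng(0)
scene = rng.random((32, 32))
n, d = 5, 2
views = np.stack([
    np.stack([np.roll(scene, ((u - n // 2) * d, (v - n // 2) * d), axis=(0, 1))
              for v in range(n)])
    for u in range(n)
])

sharp = refocus(views, slope=d)   # parallax undone: reproduces the scene
blurry = refocus(views, slope=0)  # plain average: the scene is out of focus
print(np.allclose(sharp, scene), np.allclose(blurry, scene))
```

Sweeping `slope` over a range of values moves the plane of focus through the scene, which is the “refocus after the fact” effect in its simplest form.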

Lytro has apparently failed to make a commercial success of light field technology, but we shouldn’t count this approach out for good just yet. As evidenced by Google’s interest in Lytro’s talent, there are still a number of heavy hitters seriously looking at light field image capture, especially with the rapidly growing interest in the fields of VR and AR.

Germany-based Raytrix makes its own line of light field cameras, although its products are aimed primarily at commercial and industrial use rather than consumer devices. Two years ago, Tessera Technologies acquired the technology of light field startup Pelican Imaging in a deal apparently aimed at lower-cost applications such as smartphone cameras. Adobe, Sony, and Mitsubishi Electric have all been working in this field as well. Light field methods are also garnering considerable interest from the motion picture industry. Radiant Images, a leader in the development of digital cinema technology, recently demonstrated a light field image capture system based on a large array of Sony cameras.

But what about smartphones? Image sensors and graphics processing hardware both continue to gain capability and drop in price, and these trends could bring such technology within a commercially viable cost range.

Can we expect to see smartphones benefit from light field methods and advantages, without the high price tag or other negatives?

The biggest problem is the sheer physical size of the components needed. You need an image sensor with lots of pixels to get decent results, and you can only make a sensor pixel so small before running into problems with sensitivity and noise. In addition, the size of the optics involved, both the main lens and the array of smaller lenses, has a significant impact on the camera’s overall sensitivity and on the usable depth of field of the resulting light field data. These components can’t easily be shoehorned into a smartphone-sized package.
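Why can’t pixels just keep shrinking? A rough sketch, under an idealized and explicitly assumed model: photon arrivals follow Poisson statistics, so the best-case signal-to-noise ratio of a pixel is the square root of the photons it collects, and the photon count scales with pixel area (pitch squared). Read noise, dark current, and fill-factor losses are ignored, and the exposure figure and the helper name `shot_noise_snr` are hypothetical.

```python
import math


def shot_noise_snr(photons_per_um2: float, pitch_um: float) -> float:
    """Best-case (shot-noise-limited) SNR of one square pixel.

    Idealized model: signal = photons collected, noise = sqrt(signal),
    so SNR = sqrt(photons). Photons scale with pixel area (pitch squared).
    """
    photons = photons_per_um2 * pitch_um ** 2
    return math.sqrt(photons)


# Hypothetical exposure delivering 1,000 photons per square micron.
big = shot_noise_snr(1_000, 2.0)    # 4,000 photons -> SNR ~63.2
small = shot_noise_snr(1_000, 1.0)  # 1,000 photons -> SNR ~31.6
print(round(big / small, 2))        # 2.0: halving the pitch halves the SNR
```

Under this model, packing in four times as many pixels at half the pitch, as a light field sensor would like to do, leaves each pixel with only half the signal-to-noise ratio.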

Still, stranger things have happened, and smartphone makers are nothing if not innovative. Perhaps the optical end of the system could be produced as a separate, detachable module, so you wouldn’t have to carry it around as part of the phone. Maybe clever optical design will greatly reduce the depth of the optical path, so the added bulk wouldn’t be quite as objectionable. In any case, this is certainly still an area to watch closely, even if some of its pioneers fall by the wayside. Don’t be too surprised if, in the not-too-distant future, your smartphone photos literally take on added depth.