ML Kit: Extracting text from images with Google’s Machine Learning SDK

Published on January 16, 2019

Machine learning (ML) is quickly becoming an important part of mobile development, but it isn’t the easiest thing to add to your apps!

To benefit from ML, you typically need a deep understanding of neural networks and data analysis, plus the time and resources required to source enough data, train your ML models, and then optimize those models to run efficiently on mobile.

Increasingly, we’re seeing tools that aim to make ML more accessible, including Google’s new ML Kit. Announced at Google I/O 2018, ML Kit gives you a way to add powerful ML capabilities to your applications without having to understand how the underlying algorithm works: just pass some data to the appropriate API, and ML Kit will return a response.

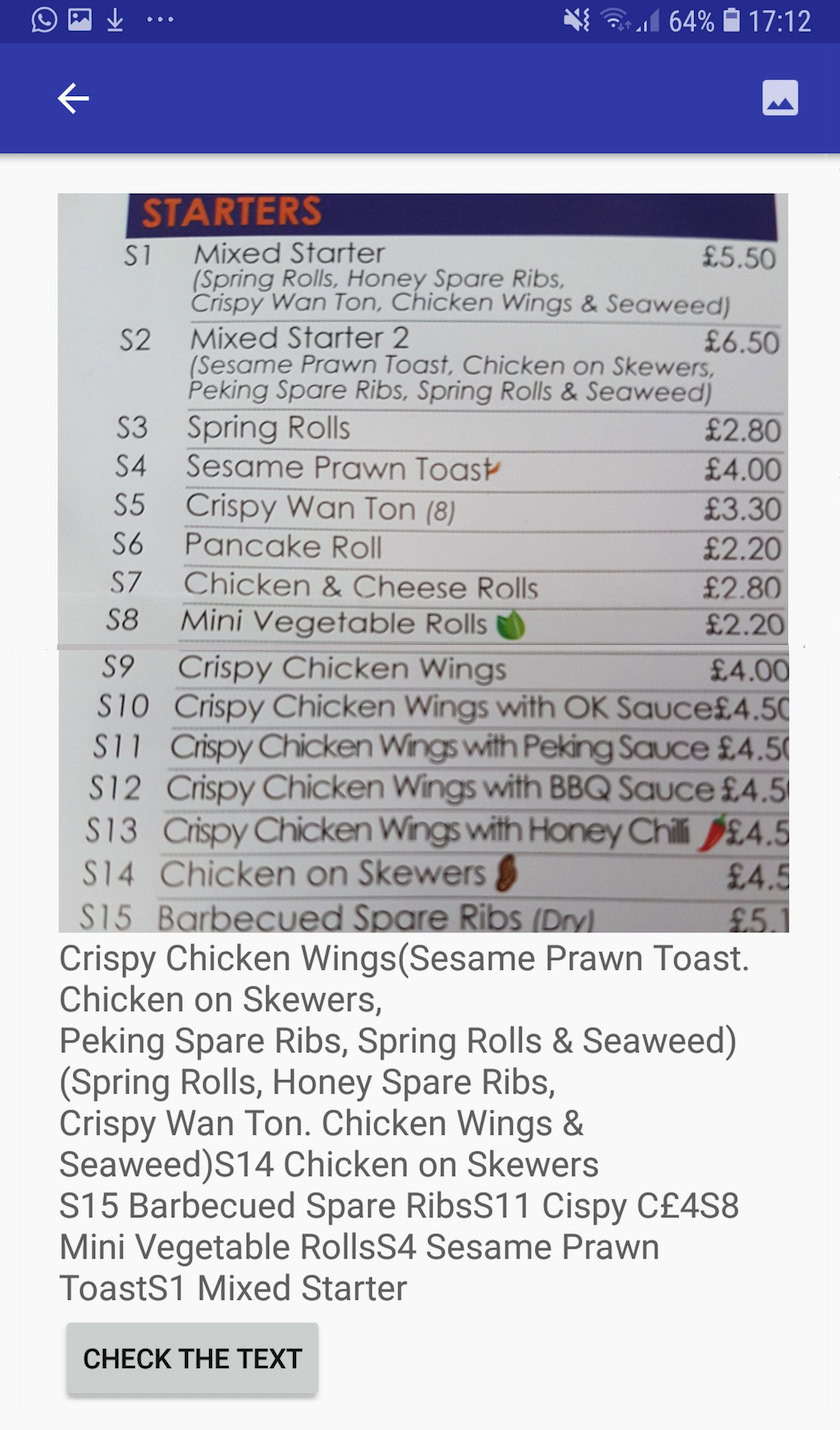

In this tutorial I’ll show you how to use ML Kit’s Text Recognition API to create an Android app that can intelligently gather, process and analyze the information it’s been given. By the end of this article, you’ll have created an app that can take any image, and then extract all the Latin-based text from that image, ready for you to use in your app.

Google’s new machine learning SDK

ML Kit is Google’s attempt to bring machine learning to Android and iOS, in an easy-to-use format that doesn’t require any previous knowledge of machine learning.

Under the hood, the ML Kit SDK bundles together a number of Google’s machine learning technologies, such as Cloud Vision and TensorFlow, plus APIs and pre-trained models for common mobile use cases, including text recognition, face detection, and barcode scanning.

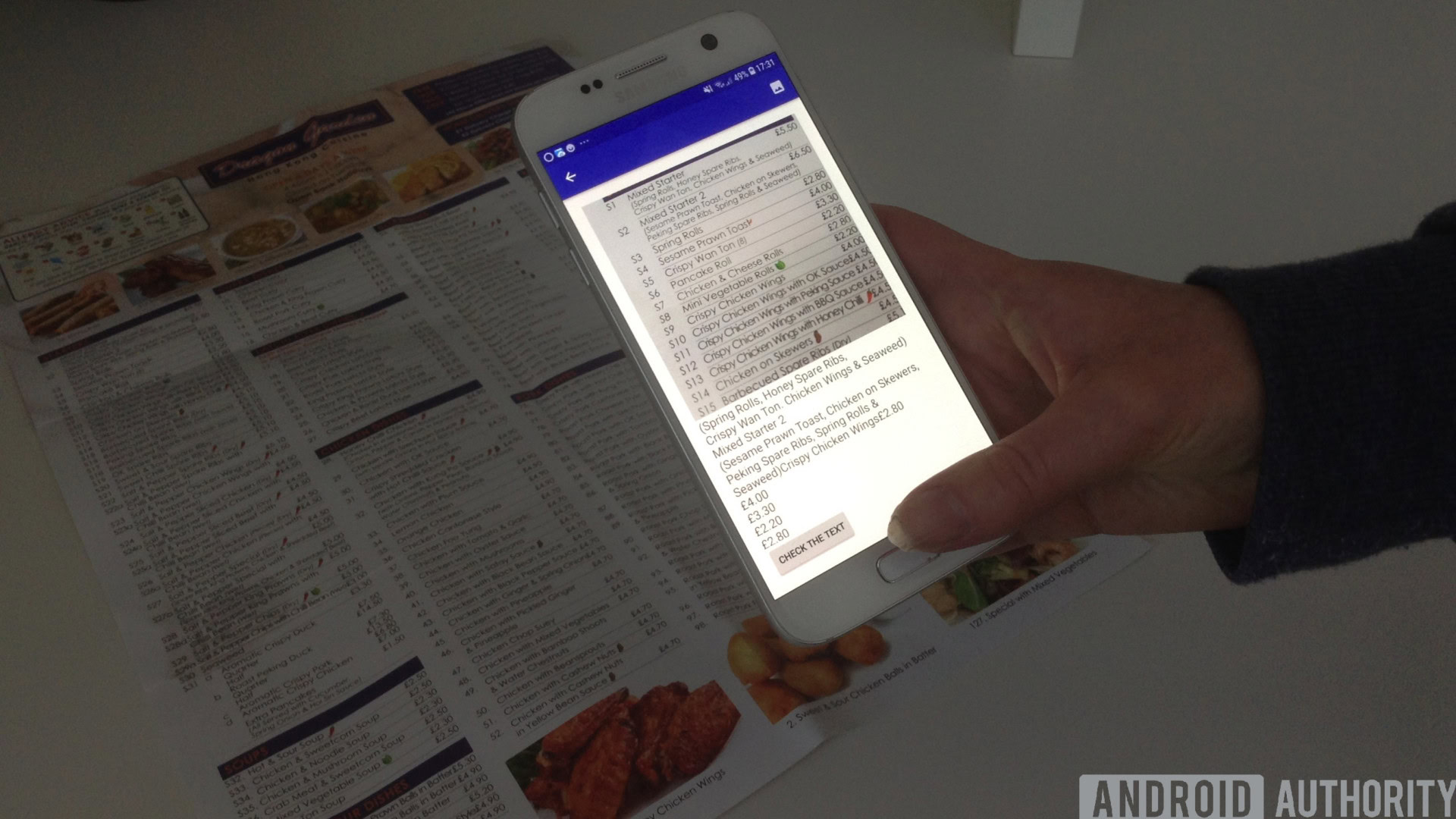

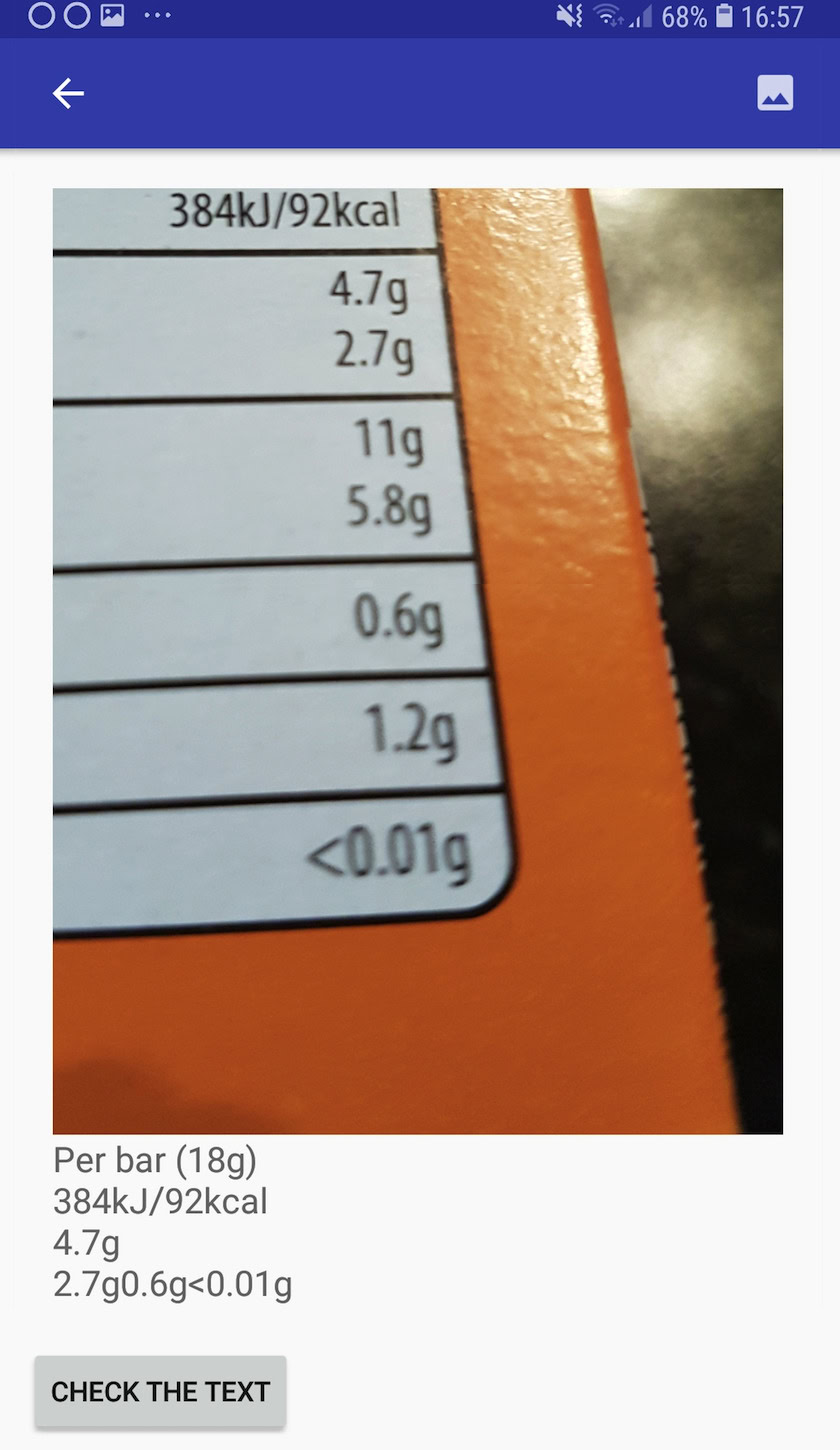

In this article we’ll be exploring the Text Recognition API, which you can use in a wide range of apps. For example, you could create a calorie-counting app where users can take a photo of nutritional labels, and have all the relevant information extracted and logged for them automatically.

You could also use the Text Recognition API as the basis for translation apps, or accessibility services where the user can point their camera at any text they’re struggling with, and have it read aloud to them.

In this tutorial, we’ll lay the foundation for a wide range of innovative features, by creating an app that can extract text from any image in the user’s gallery. Although we won’t be covering it in this tutorial, you could also capture text from the user’s surroundings in real time, by connecting this application to the device’s camera.

On device or in the cloud?

Some of the ML Kit APIs are only available on-device, but a few are available on-device and in the cloud, including the Text Recognition API.

The cloud-based Text API can identify a wider range of languages and characters, and promises greater accuracy than its on-device counterpart. However, it does require an active Internet connection, and is only available for Blaze-level projects.

In this article, we’ll be running the Text Recognition API locally, so you can follow along regardless of whether you’ve upgraded to Blaze, or you’re on the free Firebase Spark plan.

Creating a text recognition app with ML Kit

Create an application with the settings of your choice, but when prompted select the “Empty Activity” template.

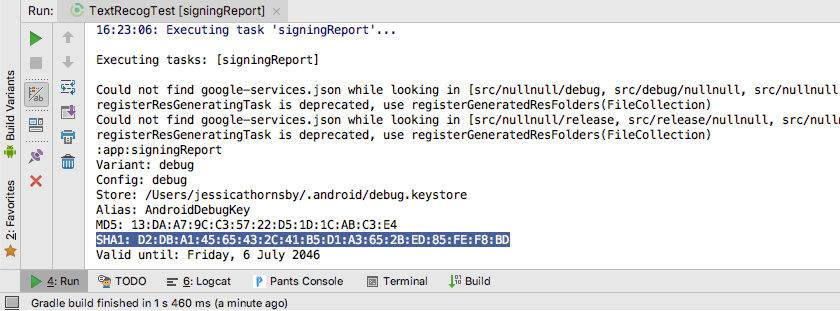

The ML Kit SDK is part of Firebase, so you’ll need to connect your project to Firebase, using its SHA-1 signing certificate. To get your project’s SHA-1:

- Select Android Studio’s “Gradle” tab.

- In the “Gradle projects” panel, double-click to expand your project’s “root,” and then select “Tasks > Android > Signing Report.”

- The panel along the bottom of the Android Studio window should update to display some information about this project – including its SHA-1 signing certificate.
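If you’re curious what that SHA-1 value actually is: a certificate fingerprint is the SHA-1 digest of the encoded certificate, printed as colon-separated hex. Here’s a minimal Java sketch of that formatting step. The FingerprintSketch class and its sha1Fingerprint method are hypothetical names of my own, and a short string stands in for the real certificate bytes, so the output won’t match your project’s fingerprint.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper for illustration; not part of the tutorial project.
final class FingerprintSketch {

    // Hashes the given bytes with SHA-1 and formats the digest as
    // colon-separated uppercase hex, the layout shown in the signing report.
    static String sha1Fingerprint(byte[] input) {
        byte[] digest;
        try {
            digest = MessageDigest.getInstance("SHA-1").digest(input);
        } catch (NoSuchAlgorithmException e) {
            // SHA-1 is a standard algorithm on the JVM, so this is not expected.
            throw new IllegalStateException(e);
        }
        StringBuilder out = new StringBuilder();
        for (int i = 0; i < digest.length; i++) {
            if (i > 0) {
                out.append(':');
            }
            out.append(String.format("%02X", digest[i]));
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // Assumption: "abc" stands in for the encoded signing certificate.
        byte[] standIn = "abc".getBytes(StandardCharsets.UTF_8);
        // prints A9:99:3E:36:47:06:81:6A:BA:3E:25:71:78:50:C2:6C:9C:D0:D8:9D
        System.out.println(sha1Fingerprint(standIn));
    }
}
```

In practice you don’t need to compute this yourself; the signing report gives you the value to paste into the Firebase Console.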

To connect your project to Firebase:

- In your web browser, launch the Firebase Console.

- Select “Add project.”

- Give your project a name; I’m using “ML Test.”

- Read the terms and conditions, and if you’re happy to proceed then select “I accept…” followed by “Create project.”

- Select “Add Firebase to your Android app.”

- Enter your project’s package name, which you’ll find at the top of the MainActivity file, and inside the Manifest.

- Enter your project’s SHA-1 signing certificate.

- Click “Register app.”

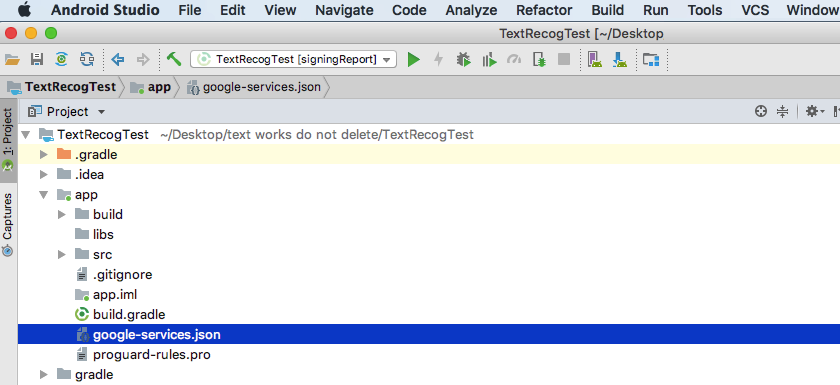

- Select “Download google-services.json.” This file contains all the necessary Firebase metadata for your project, including the API key.

- In Android Studio, drag and drop the google-services.json file into your project’s “app” directory.

- Open your project-level build.gradle file and add the Google services classpath:

classpath 'com.google.gms:google-services:4.0.1'

- Open your app-level build.gradle file, and add dependencies for Firebase Core, Firebase ML Vision and the model interpreter, plus the Google services plugin:

apply plugin: 'com.google.gms.google-services'

...

...

...

dependencies {

implementation fileTree(dir: 'libs', include: ['*.jar'])

implementation 'com.google.firebase:firebase-core:16.0.1'

implementation 'com.google.firebase:firebase-ml-vision:16.0.0'

implementation 'com.google.firebase:firebase-ml-model-interpreter:16.0.0'

}

At this point, you’ll need to run your project so that it can connect to the Firebase servers:

- Install your app on either a physical Android smartphone or tablet, or an Android Virtual Device (AVD).

- In the Firebase Console, select “Run app to verify installation.”

- After a few moments, you should see a “Congratulations” message; select “Continue to the console.”

Download Google’s pre-trained machine learning models

By default, ML Kit only downloads models as and when they’re needed, so our app will download the OCR model when the user attempts to extract text for the first time.

This could potentially have a negative impact on the user experience – imagine trying to access a feature, only to discover that the app has to download more resources before it can actually deliver this feature. In the worst case scenario, your app may not even be able to download the resources it needs, when it needs them, for example if the device has no Internet connection.

To make sure this doesn’t happen with our app, I’m going to download the necessary OCR model at install-time, which requires some changes to the Manifest.

While we have the Manifest open, I’m also going to add the WRITE_EXTERNAL_STORAGE permission, which we’ll be using later in this tutorial.

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

package="com.jessicathornsby.textrecogtest">

<!-- Add the WRITE_EXTERNAL_STORAGE permission -->

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

<application

android:allowBackup="true"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:roundIcon="@mipmap/ic_launcher_round"

android:supportsRtl="true"

android:theme="@style/AppTheme">

<activity android:name=".MainActivity">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

<!-- Add the following -->

<meta-data

android:name="com.google.firebase.ml.vision.DEPENDENCIES"

android:value="ocr" />

</application>

</manifest>

Building the layout

Let’s get the easy stuff out of the way, and create a layout consisting of:

- An ImageView. Initially, this will display a placeholder, but it’ll update once the user selects an image from their gallery.

- A Button, which triggers the text extraction.

- A TextView, where we’ll display the extracted text.

- A ScrollView. Since there’s no guarantee the extracted text will fit neatly onscreen, I’m going to place the TextView inside a ScrollView.

Here’s the finished activity_main.xml file:

<?xml version="1.0" encoding="utf-8"?>

<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

android:padding="20dp"

tools:context=".MainActivity">

<ScrollView

android:layout_width="match_parent"

android:layout_height="match_parent"

android:layout_above="@+id/extracted_text">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<ImageView

android:id="@+id/imageView"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:adjustViewBounds="true"

android:src="@drawable/ic_placeholder" />

<TextView

android:id="@+id/textView"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:textAppearance="@style/TextAppearance.AppCompat.Medium" />

</LinearLayout>

</ScrollView>

<LinearLayout

android:id="@+id/extracted_text"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_alignParentBottom="true">

<Button

android:id="@+id/checkText"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Check the text" />

</LinearLayout>

</RelativeLayout>

This layout references an “ic_placeholder” drawable, so let’s create this now:

- Select “File > New > Image Asset” from the Android Studio toolbar.

- Open the “Icon Type” dropdown and select “Action Bar and Tab Icons.”

- Make sure the “Clip Art” radio button is selected.

- Give the “Clip Art” button a click.

- Select the image you want to use as your placeholder; I’m using “Add to photos.”

- Click “OK.”

- Open the “Theme” dropdown, and select “HOLO_LIGHT.”

- In the “Name” field, enter “ic_placeholder.”

- Click “Next.” Read the information, and if you’re happy to proceed then click “Finish.”

Action bar icons: Launching the Gallery app

Next, I’m going to create an action bar item that’ll launch the user’s gallery, ready for them to select an image.

You define action bar icons inside a menu resource file, which lives inside the “res/menu” directory. If your project doesn’t contain this directory, then you’ll need to create it:

- Control-click your project’s “res” directory and select “New > Android Resource Directory.”

- Open the “Resource type” dropdown and select “menu.”

- The “Directory name” should update to “menu” automatically, but if it doesn’t then you’ll need to rename it manually.

- Click “OK.”

You’re now ready to create the menu resource file:

- Control-click your project’s “menu” directory and select “New > Menu resource file.”

- Name this file “my_menu.”

- Click “OK.”

- Open the “my_menu.xml” file, and add the following:

<menu xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools">

<!-- Create an <item> element for every action -->

<item

android:id="@+id/gallery_action"

android:orderInCategory="101"

android:title="@string/action_gallery"

android:icon="@drawable/ic_gallery"

app:showAsAction="ifRoom"/>

</menu>

The menu file references an “action_gallery” string, so open your project’s res/values/strings.xml file and create this resource. While I’m here, I’m also defining the other strings we’ll be using throughout this project.

<string name="action_gallery">Gallery</string>

<string name="permission_request">This app needs to access files on your device.</string>

<string name="no_text">No text found</string>

Next, use the Image Asset Studio to create the action bar’s “ic_gallery” icon:

- Select “File > New > Image Asset.”

- Set the “Icon Type” dropdown to “Action Bar and Tab Icons.”

- Click the “Clip Art” button.

- Choose a drawable; I’m using “image.”

- Click “OK.”

- To make sure this icon is clearly visible in the action bar, open the “Theme” dropdown and select “HOLO_DARK.”

- Name this icon “ic_gallery.”

- Click “Next,” followed by “Finish.”

Handling permission requests and click events

I’m going to perform all the tasks that aren’t directly related to the Text Recognition API in a separate BaseActivity class, including instantiating the menu, handling action bar click events, and requesting access to the device’s storage.
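The part of this class that’s easiest to get wrong is deciding whether a permission request actually succeeded. Here’s a rough, Android-free sketch of that decision. The PermissionResultSketch class and its isGranted method are hypothetical names, and the two constants simply mirror the values of PackageManager.PERMISSION_GRANTED and PackageManager.PERMISSION_DENIED so the example can run on a plain JVM.

```java
// Hypothetical stand-in for the check made in onRequestPermissionsResult().
final class PermissionResultSketch {

    // Assumption: these mirror the PackageManager constants of the same name.
    static final int PERMISSION_GRANTED = 0;
    static final int PERMISSION_DENIED = -1;

    // Returns true only when at least one result was delivered
    // and the first result is a grant.
    static boolean isGranted(int[] grantResults) {
        return grantResults != null
                && grantResults.length > 0
                && grantResults[0] == PERMISSION_GRANTED;
    }

    public static void main(String[] args) {
        System.out.println(isGranted(new int[] {PERMISSION_GRANTED})); // prints true
        System.out.println(isGranted(new int[] {PERMISSION_DENIED}));  // prints false
        System.out.println(isGranted(new int[0]));                     // prints false
    }
}
```

The length check matters because Android can deliver an empty results array if the permission dialog is interrupted, and that case should be treated as a denial rather than causing a crash.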

- Select “File > New > Java class” from Android Studio’s toolbar.

- Name this class “BaseActivity.”

- Click “OK.”

- Open BaseActivity, and add the following:

import android.app.Activity;

import android.support.v4.app.ActivityCompat;

import android.support.v7.app.ActionBar;

import android.support.v7.app.AlertDialog;

import android.support.v7.app.AppCompatActivity;

import android.os.Bundle;

import android.content.DialogInterface;

import android.content.Intent;

import android.Manifest;

import android.provider.MediaStore;

import android.view.Menu;

import android.view.MenuItem;

import android.content.pm.PackageManager;

import android.net.Uri;

import android.provider.Settings;

import android.support.annotation.NonNull;

import android.support.annotation.Nullable;

import java.io.File;

public class BaseActivity extends AppCompatActivity {

public static final int WRITE_STORAGE = 100;

public static final int SELECT_PHOTO = 102;

public static final String ACTION_BAR_TITLE = "action_bar_title";

public File photo;

@Override

protected void onCreate(@Nullable Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

ActionBar actionBar = getSupportActionBar();

if (actionBar != null) {

actionBar.setDisplayHomeAsUpEnabled(true);

actionBar.setTitle(getIntent().getStringExtra(ACTION_BAR_TITLE));

}

}

@Override

public boolean onCreateOptionsMenu(Menu menu) {

getMenuInflater().inflate(R.menu.my_menu, menu);

return true;

}

@Override

public boolean onOptionsItemSelected(MenuItem item) {

switch (item.getItemId()) {

//If “gallery_action” is selected, then...//

case R.id.gallery_action:

//...check we have the WRITE_STORAGE permission//

checkPermission(WRITE_STORAGE);

break;

}

return super.onOptionsItemSelected(item);

}

@Override

public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {

super.onRequestPermissionsResult(requestCode, permissions, grantResults);

switch (requestCode) {

case WRITE_STORAGE:

//If the permission request is granted, then...//

if (grantResults.length > 0 && grantResults[0] == PackageManager.PERMISSION_GRANTED) {

//...call selectPicture//

selectPicture();

//If the permission request is denied, then...//

} else {

//...display the “permission_request” string//

requestPermission(this, requestCode, R.string.permission_request);

}

break;

}

}

//Display the permission request dialog//

public static void requestPermission(final Activity activity, final int requestCode, int msg) {

AlertDialog.Builder alert = new AlertDialog.Builder(activity);

alert.setMessage(msg);

alert.setPositiveButton(android.R.string.ok, new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialogInterface, int i) {

dialogInterface.dismiss();

Intent permissionIntent = new Intent(Settings.ACTION_APPLICATION_DETAILS_SETTINGS);

permissionIntent.setData(Uri.parse("package:" + activity.getPackageName()));

activity.startActivityForResult(permissionIntent, requestCode);

}

});

alert.setNegativeButton(android.R.string.cancel, new DialogInterface.OnClickListener() {

@Override

public void onClick(DialogInterface dialogInterface, int i) {

dialogInterface.dismiss();

}

});

alert.setCancelable(false);

alert.show();

}

//Check whether the user has granted the WRITE_STORAGE permission//

public void checkPermission(int requestCode) {

switch (requestCode) {

case WRITE_STORAGE:

int hasWriteExternalStoragePermission = ActivityCompat.checkSelfPermission(this, Manifest.permission.WRITE_EXTERNAL_STORAGE);

//If we have access to external storage...//

if (hasWriteExternalStoragePermission == PackageManager.PERMISSION_GRANTED) {

//...call selectPicture, which launches an Activity where the user can select an image//

selectPicture();

//If permission hasn’t been granted, then...//

} else {

//...request the permission//

ActivityCompat.requestPermissions(this, new String[]{Manifest.permission.WRITE_EXTERNAL_STORAGE}, requestCode);

}

break;

}

}

private void selectPicture() {

photo = MyHelper.createTempFile(photo);

Intent intent = new Intent(Intent.ACTION_PICK, MediaStore.Images.Media.EXTERNAL_CONTENT_URI);

//Start an Activity where the user can choose an image//

startActivityForResult(intent, SELECT_PHOTO);

}

}

At this point, your project should be complaining that it can’t resolve MyHelper.createTempFile. Let’s implement this now!

Resizing images with createTempFile

Create a new “MyHelper” class. In this class, we’re going to resize the user’s chosen image, ready to be processed by the Text Recognition API.
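The resizing boils down to one piece of arithmetic: divide the photo’s dimensions by the view’s dimensions, and use the smaller ratio as the sampling factor. Here’s a minimal sketch of just that calculation, with no Android classes involved. The SampleSizeSketch class and sampleSize method are hypothetical names, and the guard against a zero-sized view plus the clamp to 1 are my own additions rather than something the MyHelper class does.

```java
// Hypothetical helper illustrating the sampling arithmetic only.
final class SampleSizeSketch {

    // Returns the subsampling factor for a photo displayed in a view of the
    // given size: the smaller of the two integer ratios, never less than 1.
    static int sampleSize(int photoWidth, int photoHeight, int viewWidth, int viewHeight) {
        if (viewWidth <= 0 || viewHeight <= 0) {
            // A view that hasn't been laid out reports 0; avoid dividing by zero
            // and keep the photo at full resolution instead.
            return 1;
        }
        int ratio = Math.min(photoWidth / viewWidth, photoHeight / viewHeight);
        return Math.max(1, ratio);
    }

    public static void main(String[] args) {
        System.out.println(sampleSize(4000, 3000, 1000, 1000)); // prints 3
        System.out.println(sampleSize(800, 600, 1000, 1000));   // prints 1
        System.out.println(sampleSize(4000, 3000, 0, 0));       // prints 1
    }
}
```

Bear in mind that BitmapFactory treats any inSampleSize of 1 or less as 1, and rounds other values down to the nearest power of 2, so a computed factor of 3 is decoded as if it were 2.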

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.content.Context;

import android.database.Cursor;

import android.os.Environment;

import android.widget.ImageView;

import android.provider.MediaStore;

import android.net.Uri;

import static android.graphics.BitmapFactory.decodeFile;

import static android.graphics.BitmapFactory.decodeStream;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

public class MyHelper {

public static String getPath(Context context, Uri uri) {

//Start with null, so the caller’s “path == null” check can fall back to the Uri//

String path = null;

String[] projection = {MediaStore.Images.Media.DATA};

Cursor cursor = context.getContentResolver().query(uri, projection, null, null, null);

int column_index;

if (cursor != null) {

column_index = cursor.getColumnIndexOrThrow(MediaStore.Images.Media.DATA);

cursor.moveToFirst();

path = cursor.getString(column_index);

cursor.close();

}

return path;

}

public static File createTempFile(File file) {

File directory = new File(Environment.getExternalStorageDirectory().getPath() + "/com.jessicathornsby.myapplication");

if (!directory.exists() || !directory.isDirectory()) {

directory.mkdirs();

}

if (file == null) {

file = new File(directory, "orig.jpg");

}

return file;

}

public static Bitmap resizePhoto(File imageFile, Context context, Uri uri, ImageView view) {

BitmapFactory.Options newOptions = new BitmapFactory.Options();

try {

decodeStream(context.getContentResolver().openInputStream(uri), null, newOptions);

int photoHeight = newOptions.outHeight;

int photoWidth = newOptions.outWidth;

newOptions.inSampleSize = Math.min(photoWidth / view.getWidth(), photoHeight / view.getHeight());

return compressPhoto(imageFile, BitmapFactory.decodeStream(context.getContentResolver().openInputStream(uri), null, newOptions));

} catch (FileNotFoundException exception) {

exception.printStackTrace();

return null;

}

}

public static Bitmap resizePhoto(File imageFile, String path, ImageView view) {

BitmapFactory.Options options = new BitmapFactory.Options();

decodeFile(path, options);

int photoHeight = options.outHeight;

int photoWidth = options.outWidth;

options.inSampleSize = Math.min(photoWidth / view.getWidth(), photoHeight / view.getHeight());

return compressPhoto(imageFile, BitmapFactory.decodeFile(path, options));

}

private static Bitmap compressPhoto(File photoFile, Bitmap bitmap) {

try {

FileOutputStream fOutput = new FileOutputStream(photoFile);

bitmap.compress(Bitmap.CompressFormat.JPEG, 70, fOutput);

fOutput.close();

} catch (IOException exception) {

exception.printStackTrace();

}

return bitmap;

}

}

Set the image to an ImageView

Next, we need to implement onActivityResult() in our MainActivity class, and set the user’s chosen image to our ImageView.

import android.graphics.Bitmap;

import android.os.Bundle;

import android.widget.ImageView;

import android.content.Intent;

import android.widget.TextView;

import android.net.Uri;

public class MainActivity extends BaseActivity {

private Bitmap myBitmap;

private ImageView myImageView;

private TextView myTextView;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

myTextView = findViewById(R.id.textView);

myImageView = findViewById(R.id.imageView);

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (resultCode == RESULT_OK) {

switch (requestCode) {

case WRITE_STORAGE:

checkPermission(requestCode);

break;

case SELECT_PHOTO:

Uri dataUri = data.getData();

String path = MyHelper.getPath(this, dataUri);

if (path == null) {

myBitmap = MyHelper.resizePhoto(photo, this, dataUri, myImageView);

} else {

myBitmap = MyHelper.resizePhoto(photo, path, myImageView);

}

if (myBitmap != null) {

myTextView.setText(null);

myImageView.setImageBitmap(myBitmap);

}

break;

}

}

}

}

Run this project on a physical Android device or AVD, and give the action bar icon a click. When prompted, grant the WRITE_EXTERNAL_STORAGE permission and choose an image from the gallery; this image should now be displayed in your app’s UI.

Now we’ve laid the groundwork, we’re ready to start extracting some text!

Teaching an app to recognize text

I want to trigger text recognition in response to a click event, so we need to implement an OnClickListener:

import android.graphics.Bitmap;

import android.os.Bundle;

import android.widget.ImageView;

import android.content.Intent;

import android.widget.TextView;

import android.view.View;

import android.net.Uri;

public class MainActivity extends BaseActivity implements View.OnClickListener {

private Bitmap myBitmap;

private ImageView myImageView;

private TextView myTextView;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

myTextView = findViewById(R.id.textView);

myImageView = findViewById(R.id.imageView);

findViewById(R.id.checkText).setOnClickListener(this);

}

@Override

public void onClick(View view) {

switch (view.getId()) {

case R.id.checkText:

if (myBitmap != null) {

//We’ll be implementing runTextRecog in the next step//

runTextRecog();

}

break;

}

}

ML Kit can only process images when they’re in the FirebaseVisionImage format, so we need to convert our image into a FirebaseVisionImage object. You can create a FirebaseVisionImage from a Bitmap, media.Image, ByteBuffer, or a byte array. Since we’re working with Bitmaps, we need to call the fromBitmap() utility method of the FirebaseVisionImage class, and pass it our Bitmap.

private void runTextRecog() {

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(myBitmap);

ML Kit has different detector classes for each of its image recognition operations. For text, we need to use the FirebaseVisionTextDetector class, which performs optical character recognition (OCR) on an image.

We create an instance of FirebaseVisionTextDetector, using getVisionTextDetector:

FirebaseVisionTextDetector detector = FirebaseVision.getInstance().getVisionTextDetector();

Next, we need to check the FirebaseVisionImage for text, by calling the detectInImage() method and passing it the FirebaseVisionImage object. We also need to implement onSuccess and onFailure callbacks, plus corresponding listeners so our app gets notified whenever results become available.

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<FirebaseVisionText>() {

@Override

public void onSuccess(FirebaseVisionText texts) {

//To do//

}

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure

(@NonNull Exception exception) {

//Task failed with an exception//

}

});

}

If this operation fails, then I’m going to display a toast, but if the operation is a success then I’ll call processExtractedText with the response.

At this point, my text detection code looks like this:

//Create a FirebaseVisionImage//

private void runTextRecog() {

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(myBitmap);

//Create an instance of FirebaseVisionTextDetector//

FirebaseVisionTextDetector detector = FirebaseVision.getInstance().getVisionTextDetector();

//Register an OnSuccessListener//

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<FirebaseVisionText>() {

@Override

//Implement the onSuccess callback//

public void onSuccess(FirebaseVisionText texts) {

//Call processExtractedText with the response//

processExtractedText(texts);

}

}).addOnFailureListener(new OnFailureListener() {

@Override

//Implement the onFailure callback//

public void onFailure

(@NonNull Exception exception) {

Toast.makeText(MainActivity.this,

"Exception", Toast.LENGTH_LONG).show();

}

});

}

Whenever our app receives an onSuccess notification, we need to parse the results.

A FirebaseVisionText object can contain elements, lines and blocks, where each block typically equates to a single paragraph of text. If FirebaseVisionText returns 0 blocks, then we’ll display the “no_text” string, but if it contains one or more blocks then we’ll display the retrieved text as part of our TextView.
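One thing to watch when displaying multiple blocks is that appending each block’s text straight to a TextView leaves no separator between paragraphs. As a minimal sketch of one way to handle this, the following plain-Java example joins the block texts with a blank line between them. The BlockJoinSketch class and joinBlocks method are hypothetical, and plain strings stand in for the text you’d get from each block’s getText() call.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper; plain strings stand in for ML Kit's text blocks.
final class BlockJoinSketch {

    // Joins the text of each block with a blank line between paragraphs,
    // or returns the fallback message when no blocks were found.
    static String joinBlocks(List<String> blockTexts, String fallback) {
        if (blockTexts.isEmpty()) {
            return fallback;
        }
        return String.join("\n\n", blockTexts);
    }

    public static void main(String[] args) {
        List<String> blocks = Arrays.asList("First paragraph", "Second paragraph");
        // Prints the two paragraphs separated by an empty line.
        System.out.println(joinBlocks(blocks, "No text found"));
        // prints No text found
        System.out.println(joinBlocks(Collections.<String>emptyList(), "No text found"));
    }
}
```

In the app itself, you could get a similar effect by appending a line break after each block, which keeps the paragraph structure that ML Kit detected.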

private void processExtractedText(FirebaseVisionText firebaseVisionText) {

myTextView.setText(null);

if (firebaseVisionText.getBlocks().size() == 0) {

myTextView.setText(R.string.no_text);

return;

}

for (FirebaseVisionText.Block block : firebaseVisionText.getBlocks()) {

myTextView.append(block.getText());

}

}

}

Here’s the completed MainActivity code:

import android.graphics.Bitmap;

import android.os.Bundle;

import android.widget.ImageView;

import android.content.Intent;

import android.widget.TextView;

import android.widget.Toast;

import android.view.View;

import android.net.Uri;

import android.support.annotation.NonNull;

import com.google.firebase.ml.vision.common.FirebaseVisionImage;

import com.google.firebase.ml.vision.text.FirebaseVisionText;

import com.google.firebase.ml.vision.text.FirebaseVisionTextDetector;

import com.google.firebase.ml.vision.FirebaseVision;

import com.google.android.gms.tasks.OnSuccessListener;

import com.google.android.gms.tasks.OnFailureListener;

public class MainActivity extends BaseActivity implements View.OnClickListener {

private Bitmap myBitmap;

private ImageView myImageView;

private TextView myTextView;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

myTextView = findViewById(R.id.textView);

myImageView = findViewById(R.id.imageView);

findViewById(R.id.checkText).setOnClickListener(this);

}

@Override

public void onClick(View view) {

switch (view.getId()) {

case R.id.checkText:

if (myBitmap != null) {

runTextRecog();

}

break;

}

}

@Override

protected void onActivityResult(int requestCode, int resultCode, Intent data) {

super.onActivityResult(requestCode, resultCode, data);

if (resultCode == RESULT_OK) {

switch (requestCode) {

case WRITE_STORAGE:

checkPermission(requestCode);

break;

case SELECT_PHOTO:

Uri dataUri = data.getData();

String path = MyHelper.getPath(this, dataUri);

if (path == null) {

myBitmap = MyHelper.resizePhoto(photo, this, dataUri, myImageView);

} else {

myBitmap = MyHelper.resizePhoto(photo, path, myImageView);

}

if (myBitmap != null) {

myTextView.setText(null);

myImageView.setImageBitmap(myBitmap);

}

break;

}

}

}

private void runTextRecog() {

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(myBitmap);

FirebaseVisionTextDetector detector = FirebaseVision.getInstance().getVisionTextDetector();

detector.detectInImage(image).addOnSuccessListener(new OnSuccessListener<FirebaseVisionText>() {

@Override

public void onSuccess(FirebaseVisionText texts) {

processExtractedText(texts);

}

}).addOnFailureListener(new OnFailureListener() {

@Override

public void onFailure

(@NonNull Exception exception) {

Toast.makeText(MainActivity.this,

"Exception", Toast.LENGTH_LONG).show();

}

});

}

private void processExtractedText(FirebaseVisionText firebaseVisionText) {

myTextView.setText(null);

if (firebaseVisionText.getBlocks().size() == 0) {

myTextView.setText(R.string.no_text);

return;

}

for (FirebaseVisionText.Block block : firebaseVisionText.getBlocks()) {

myTextView.append(block.getText());

}

}

}

Testing the project

Now it’s time to see ML Kit’s Text Recognition in action! Install this project on an Android device or AVD, choose an image from the gallery, and then give the “Check the text” button a tap. The app should respond by extracting all the text from the image, and then displaying it in a TextView.

Note that depending on the size of your image, and the amount of text it contains, you may need to scroll to see all of the extracted text.

You can also download the completed project from GitHub.

Wrapping up

You now know how to detect and extract text from an image, using ML Kit.

The Text Recognition API is just one part of the ML Kit. This SDK also offers barcode scanning, face detection, image labelling and landmark recognition, with plans to add more APIs for common mobile use cases, including Smart Reply and a high-density face contour API.

Which ML Kit API are you the most interested to try? Let us know in the comments below!