Phones don't need an NPU to benefit from machine learning

Published on November 3, 2017

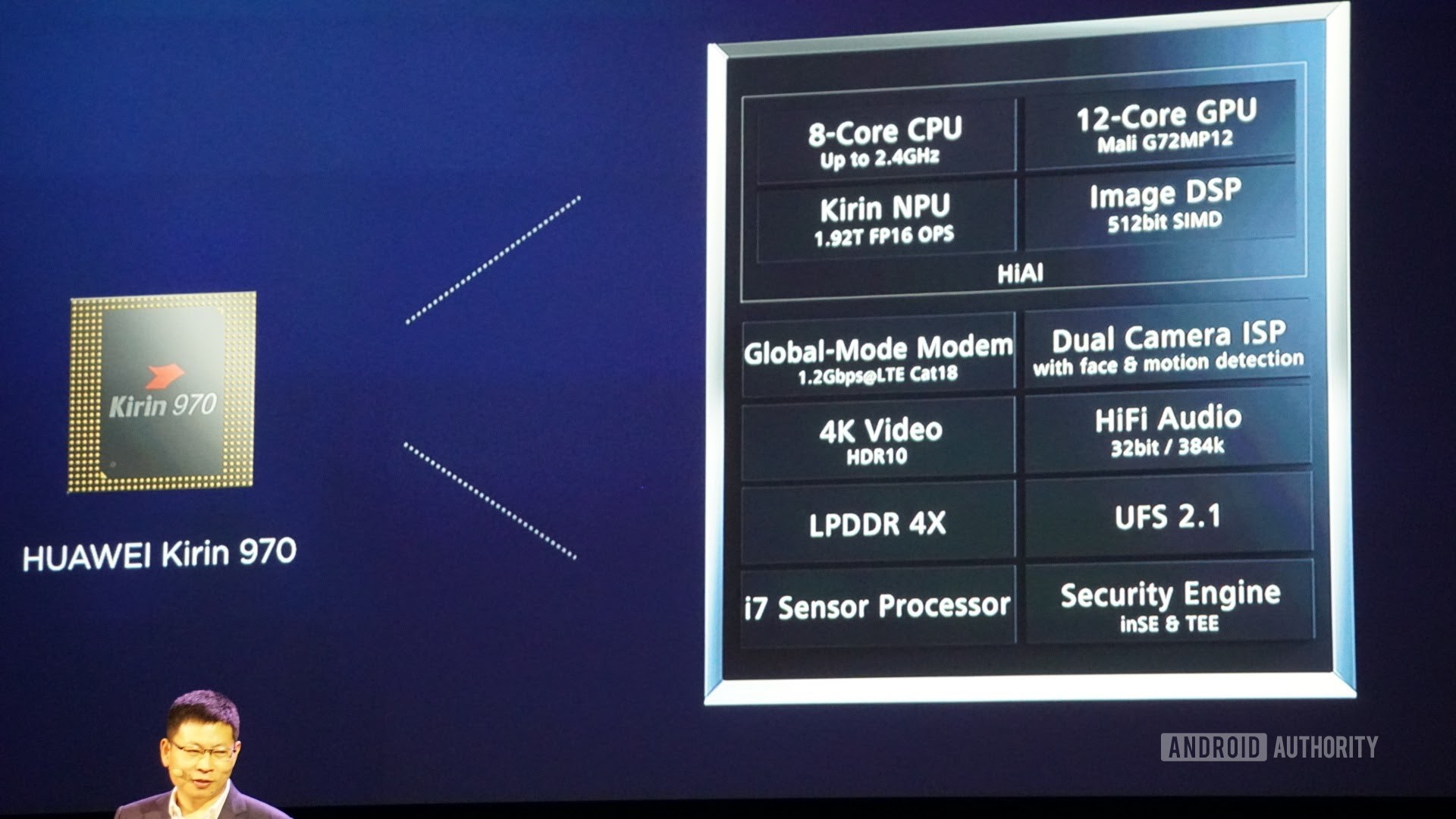

Neural networks and machine learning are some of this year’s biggest buzzwords in the world of smartphone processors. HUAWEI’s HiSilicon Kirin 970, Apple’s A11 Bionic, and the image processing unit (IPU) inside the Google Pixel 2 all boast dedicated hardware support for this emerging technology.

The trend so far has suggested that machine learning requires a dedicated piece of hardware, like a Neural Processing Unit (NPU), IPU, or “Neural Engine”, as Apple would call it. However, the reality is that these are all just fancy names for custom digital signal processors (DSPs), that is, hardware specialized in performing complex mathematical functions quickly. Today’s latest custom silicon has been specifically optimized around machine learning and neural network operations, the most common of which are dot products and matrix multiplication.

Despite what OEMs will tell you, there is a downside to this approach. Neural networking is still an emerging field and it’s possible that the types of operations best suited to certain use cases will change as research continues. Rather than future-proofing the device, these early designs could quickly become outdated. Investing now in early silicon is an expensive process, and one that will likely require revisions as the best mobile use cases become apparent.

Silicon designers and OEMs aren’t going to invest in these complex circuits for mid- or low-tier products at this stage, which is why these dedicated processors are currently reserved for only the most expensive of smartphones. New processor components from ARM, which are expected to debut in SoCs next year, will help accommodate more efficient machine learning algorithms without a dedicated processor, though.

2018 is promising for Machine Learning

ARM announced its Cortex-A75 and A55 CPUs and Mali-G72 GPU designs earlier in the year. While much of the launch focus was on the company’s new DynamIQ technology, all three of these new products are also capable of supporting more efficient machine learning algorithms.

Neural networks often don’t require high-precision data, especially after training, meaning that math can usually be performed on 16-bit or even 8-bit data, rather than on larger 32-bit or 64-bit values. This reduces memory and cache requirements and makes much better use of memory bandwidth, all of which are already limited resources in smartphone SoCs.
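To make the saving concrete, here is a minimal Python sketch that stores the same eight weights as FP32, FP16, and INT8 and measures how much an 8-bit round trip distorts them. The weight values, the helper names, and the single shared scale factor (a simple symmetric quantization scheme) are assumptions chosen for illustration, not something taken from ARM's documentation.

```python
import struct

def quantize_int8(values):
    """Map floats to signed 8-bit integers using one shared scale factor."""
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit representation."""
    return [q * scale for q in quantized]

# Hypothetical trained weights, used only for illustration.
weights = [0.82, -0.41, 0.07, -0.93, 0.55, 0.0, 0.31, -0.68]
count = len(weights)

# Pack the same values at three precisions and compare the byte counts.
fp32_bytes = len(struct.pack(f"<{count}f", *weights))
fp16_bytes = len(struct.pack(f"<{count}e", *weights))
quantized, scale = quantize_int8(weights)
int8_bytes = len(struct.pack(f"<{count}b", *quantized))

# Worst-case difference between an original weight and its 8-bit round trip.
max_error = max(abs(w - r) for w, r in zip(weights, dequantize(quantized, scale)))

print(fp32_bytes, fp16_bytes, int8_bytes)  # prints: 32 16 8
print(f"largest round-trip error: {max_error:.4f}")
```

The 8-bit copy takes a quarter of the space of the FP32 one, and each weight moves by at most half a quantization step, which is the kind of error many trained networks can tolerate.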

As part of the ARMv8.2-A architecture for the Cortex-A75 and A55, ARM introduced support for half-precision floating point (FP16) and integer dot products (INT8) with NEON, ARM’s advanced single instruction, multiple data (SIMD) architecture extension. Native FP16 support removes the conversion to FP32 that the previous architecture required, reducing overhead and speeding up processing.

ARM’s new INT8 operation combines multiple instructions into a single instruction to improve latency. When including the optional NEON pipeline on the A55, INT8 performance can improve up to 4x over the A53, making the core a very power-efficient way to compute low-precision machine learning math.
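As a rough illustration of what a fused 8-bit dot product computes, the Python sketch below models a single accumulator lane that multiplies four pairs of 8-bit values and adds the products to a running total in one step. The four-at-a-time grouping, the function names, and the sample data are assumptions for illustration; the code models the arithmetic only, not actual NEON instructions or their timing.

```python
def to_int8(x):
    """Wrap an integer into the signed 8-bit range, as an 8-bit lane would hold it."""
    x &= 0xFF
    return x - 256 if x >= 128 else x

def dot4_accumulate(acc, a, b):
    """Modelled fused step: add four int8 products to a wide accumulator."""
    assert len(a) == len(b) == 4
    return acc + sum(to_int8(x) * to_int8(y) for x, y in zip(a, b))

def int8_dot(a, b):
    """Dot product of two int8 vectors, consumed four elements per step."""
    assert len(a) == len(b) and len(a) % 4 == 0
    acc = 0  # Python ints are unbounded; real hardware would use a 32-bit lane
    for i in range(0, len(a), 4):
        acc = dot4_accumulate(acc, a[i:i + 4], b[i:i + 4])
    return acc

# Hypothetical quantized activations and weights.
activations = [12, -7, 100, 3, -128, 45, 0, 9]
weights = [-3, 25, 2, -60, 1, -4, 127, 8]

result = int8_dot(activations, weights)
reference = sum(x * y for x, y in zip(activations, weights))
print(result, result == reference)
```

Without a fused operation, each group of four would need separate widening multiplies and adds, which is where the latency saving described above comes from.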

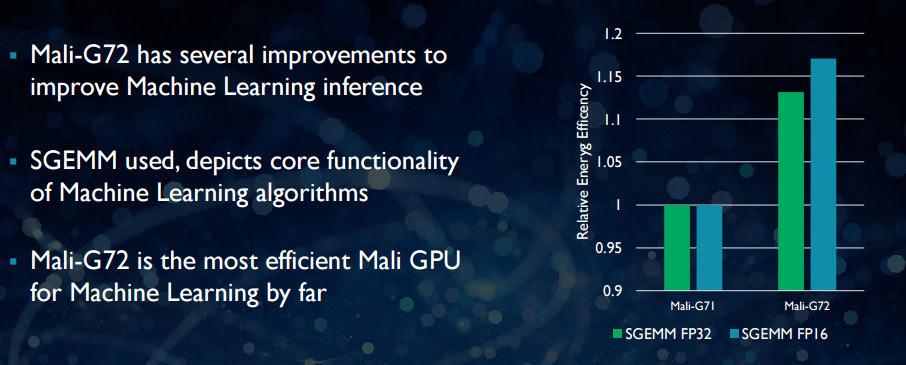

On the GPU side, ARM’s Bifrost architecture was specifically designed to facilitate system coherency. This means the Mali-G71 and G72 are able to share cache memory directly with the CPU, speeding up compute workloads by allowing the CPU and GPU to work more closely together. Given that GPUs are designed for processing huge amounts of parallel math, a close marriage with the CPU makes for an ideal arrangement for processing machine learning algorithms.

With the newer Mali-G72, ARM made a number of optimizations to improve math performance, including fused multiply-add (FMA), which is used to speed up dot products, convolutions, and matrix multiplication, all of which are essential for machine learning algorithms. The G72 also sees up to 17 percent energy efficiency savings for FP32 and FP16 instructions, which is an important gain in mobile applications.
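To see why a faster multiply-add matters so much, consider this small Python sketch in which a dot product, a matrix multiplication, and a 1D convolution are all built from the same `a * b + c` step. The function names and inputs are hypothetical, and the plain Python expression only stands in for the structure of the operation: a hardware FMA performs it as one instruction with a single rounding.

```python
def multiply_add(a, b, c):
    """Stand-in for a fused multiply-add: returns a * b + c."""
    return a * b + c

def dot(u, v):
    """Dot product expressed as a chain of multiply-adds."""
    acc = 0.0
    for x, y in zip(u, v):
        acc = multiply_add(x, y, acc)
    return acc

def matmul(A, B):
    """Matrix multiplication: one dot product per output element."""
    columns = list(zip(*B))
    return [[dot(row, col) for col in columns] for row in A]

def conv1d(signal, kernel):
    """Sliding dot product over the signal (no padding, no kernel flip)."""
    k = len(kernel)
    return [dot(signal[i:i + k], kernel) for i in range(len(signal) - k + 1)]

A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]
product = matmul(A, B)
smoothed = conv1d([1.0, 2.0, 3.0, 4.0], [0.5, 0.5])

print(product)   # prints: [[19.0, 22.0], [43.0, 50.0]]
print(smoothed)  # prints: [1.5, 2.5, 3.5]
```

Every output value here is produced by nothing but repeated multiply-adds, so any speed-up to that one operation carries over to all three workloads.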

In summary, 2018’s mobile SoCs built around ARM’s Cortex-A75, A55, and Mali-G72, including those in the mid-tier, will have a number of efficiency improvements for machine learning algorithms straight out of the box. Although no products have been announced yet, these improvements will almost certainly make their way to some Qualcomm, MediaTek, HiSilicon, and Samsung SoCs next year.

Compute libraries available today

While next-generation technologies have been designed with machine learning in mind, today’s mobile CPUs and GPUs can already be used to run machine learning applications. Tying ARM’s efforts together is its Compute Library. The library includes a comprehensive set of functions for imaging and vision projects, as well as for machine learning frameworks like Google’s TensorFlow. The purpose of the library is to allow for portable code that can be run across various ARM hardware configurations.

CPU functions are implemented using NEON, which enables developers to re-compile them for their target architecture. The GPU version of the library consists of kernel programs written using the OpenCL standard API and optimized for Mali. The key takeaway is that machine learning doesn’t have to be reserved for closed platforms with their own dedicated hardware. The technology is already here for widely used components.

ARM isn’t the only company enabling developers to produce portable code for its hardware. Qualcomm also has its own Hexagon SDK to help developers make use of the DSP capabilities found in its Snapdragon mobile platforms. The Hexagon SDK 3.1 includes general matrix-matrix multiplication (GEMM) libraries for the convolutional networks used in machine learning, which run more efficiently on its DSP than on a CPU.

Qualcomm also has its Symphony System Manager SDK, which offers a set of APIs designed specifically around empowering heterogeneous compute for computer vision, image/data processing, and low-level algorithm development. Qualcomm may be making use of a dedicated unit, but it’s also using its DSP for audio, imaging, video, and other common smartphone tasks.

So why use a dedicated processor?

If you’re wondering why any OEM would want to bother with a custom piece of hardware for neural networks after reading all of this, there’s still one big benefit to custom hardware: performance and efficiency. For example, HUAWEI boasts that the NPU inside the Kirin 970 is rated at 1.92 TFLOPs of FP16 throughput. That’s more than 3x what the Kirin 970’s Mali-G72 GPU can achieve (~0.6 TFLOPs of FP16).

Although ARM’s latest CPU and GPU boast a number of machine learning energy and performance improvements, dedicated hardware optimized for very specific tasks and a limited set of operations will always be more efficient.

In that sense, ARM lacks the efficiency offered by HUAWEI and other companies implementing their own custom NPUs. Then again, covering cost-effective implementations first and waiting to see how the machine learning industry settles before making a move could be the wiser approach. ARM hasn’t ruled out offering its own dedicated machine learning hardware for chip designers in the future if there’s enough demand. Jem Davies, formerly head of ARM’s GPU division, is now heading up the company’s new machine learning division. It’s not clear exactly what they’re working on at this stage, though.

Importantly for consumers, improvements coming down the pipeline to next year’s CPU and GPU designs mean that even lower-cost smartphones that forgo the expense of a dedicated neural networking processor will see some notable performance benefits for machine learning. This will in turn encourage investment in and development of more interesting use cases, which is a win-win for consumers. 2018 is going to be an exciting time for mobile and machine learning.