
Why and how do OEMs cheat on benchmarking? - Gary explains

Published on February 9, 2017

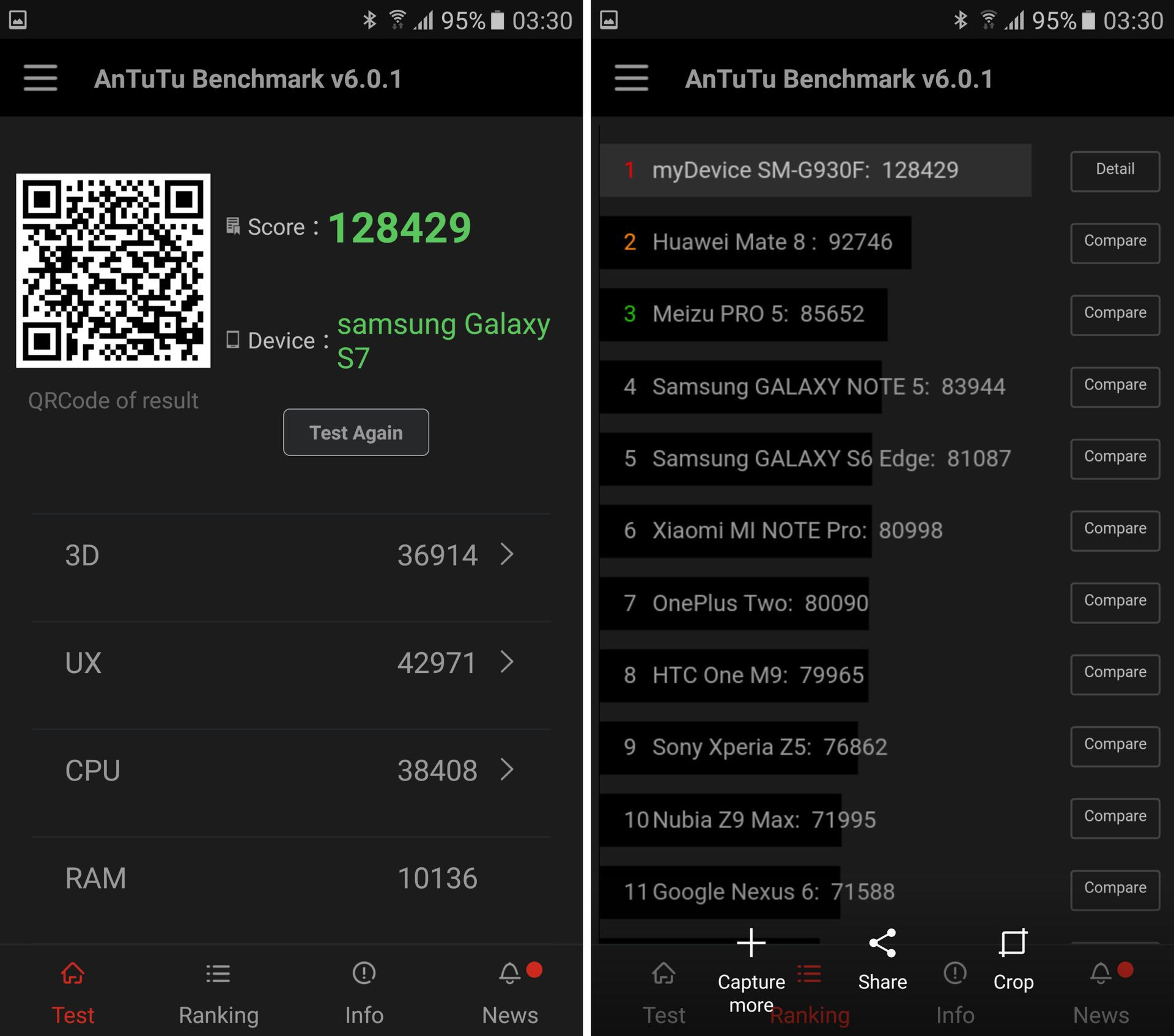

There is the saying that history repeats itself and it is certainly true when it comes to smartphone makers cheating on benchmarks. In the past Samsung has been accused of cheating on the Galaxy S4, then more accusations followed about other devices including the LG G2. This was all back in 2013. Things seemed to quieten down for a while until recently OnePlus and Meizu got busted for benchmark cheating. So why and how do OEMs cheat on benchmarking scores? Let me explain.

The smartphone market is highly competitive and it is all too easy for an OEM to lose market share, fail to make money or even go bankrupt because of a poorly received handset. To call it cutthroat is probably a slight exaggeration, but not by much! Therefore OEMs do everything they can to sell their devices. Of course the starting point is to build good handsets but after that there is the whole area of marketing.

Why

When it comes to smartphone reviews, the role of benchmarks is (perhaps unfortunately) highly important. Having the highest score on the popular benchmarks is seen as important by some marketing executives and as a result they will do whatever it takes to get that top ranking!

Broadly there are three types of smartphone buyers. First there is the person who doesn’t care about specs or benchmarks. They probably get their smartphone as part of a contract with their carrier and if the salesperson says the phone is “good” then that is all the endorsement they need. We are all like this in one way or another whenever we buy something outside of our domain of expertise or interest. The second type of buyer is the one who knows something about the tech. They understand what GB stands for, they know what a microSD card is, they understand screen resolutions and a bit about processor specifications. Such a buyer will be able to make an educated purchase and if presented with benchmark scores, especially in a comparison, will likely be able to appreciate the results. The third type of buyer is the geek, the person who has a passion for the tech and reads all the latest news, reviews and features.

For the second and third types of buyer, benchmarks are an important statistic to help bring some clarity to the ever-shifting smartphone landscape. The importance given to those benchmark scores will differ from person to person; however, the scores will have an impact on the prevailing opinion about one device or another.

There is also the trickle-down effect. Many people will consult friends or family before making a smartphone purchase and while the buyer might not fully appreciate the nuances of a device’s specifications, the person giving the advice probably does. What this means is that metrics like benchmarks can ultimately influence every buying decision.

How

The sworn enemy of battery life is performance. You might think that sounds a bit drastic, but it is true. There is a formula to approximate the dynamic power consumed by a CPU, and it shows that the higher the clock speed or the higher the voltage, the more power is used. If you are interested, it is P=CV^2f, where C is the switched capacitance, V is the CPU voltage and f is the clock frequency. In other words, the power is approximately proportional to the CPU frequency, and to the square of the CPU voltage.
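To make the formula concrete, here is a minimal sketch that plugs two operating points into P=CV^2f. The capacitance, voltages and clock speeds are made-up values chosen only for illustration, not measurements from any real SoC.

```python
def dynamic_power(capacitance_f, voltage_v, frequency_hz):
    """Approximate dynamic CPU power in watts using P = C * V^2 * f."""
    return capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical values, assumed purely for illustration.
C = 1e-9  # effective switched capacitance in farads

normal = dynamic_power(C, voltage_v=0.9, frequency_hz=1.5e9)   # everyday setting
boosted = dynamic_power(C, voltage_v=1.1, frequency_hz=2.2e9)  # full-throttle setting

ratio = boosted / normal
print(f"normal:  {normal:.3f} W")
print(f"boosted: {boosted:.3f} W")
print(f"power ratio: {ratio:.2f}x for a {2.2 / 1.5:.2f}x clock increase")
```

With these assumed numbers the clock speed rises by about 1.47 times, but the power rises by roughly 2.2 times, because a higher clock speed usually needs a higher voltage and the voltage term is squared.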

But think about it this way. On a desktop PC you have a mains power supply, a big heat sink and cooling fans. A typical Intel desktop CPU might dissipate somewhere between 50 and 100W of heat. This isn’t the case on mobile. Smartphones don’t have fans and they are powered by batteries. So, the constant struggle for SoC makers and smartphone builders is to make devices which perform as well as possible, without using much energy.

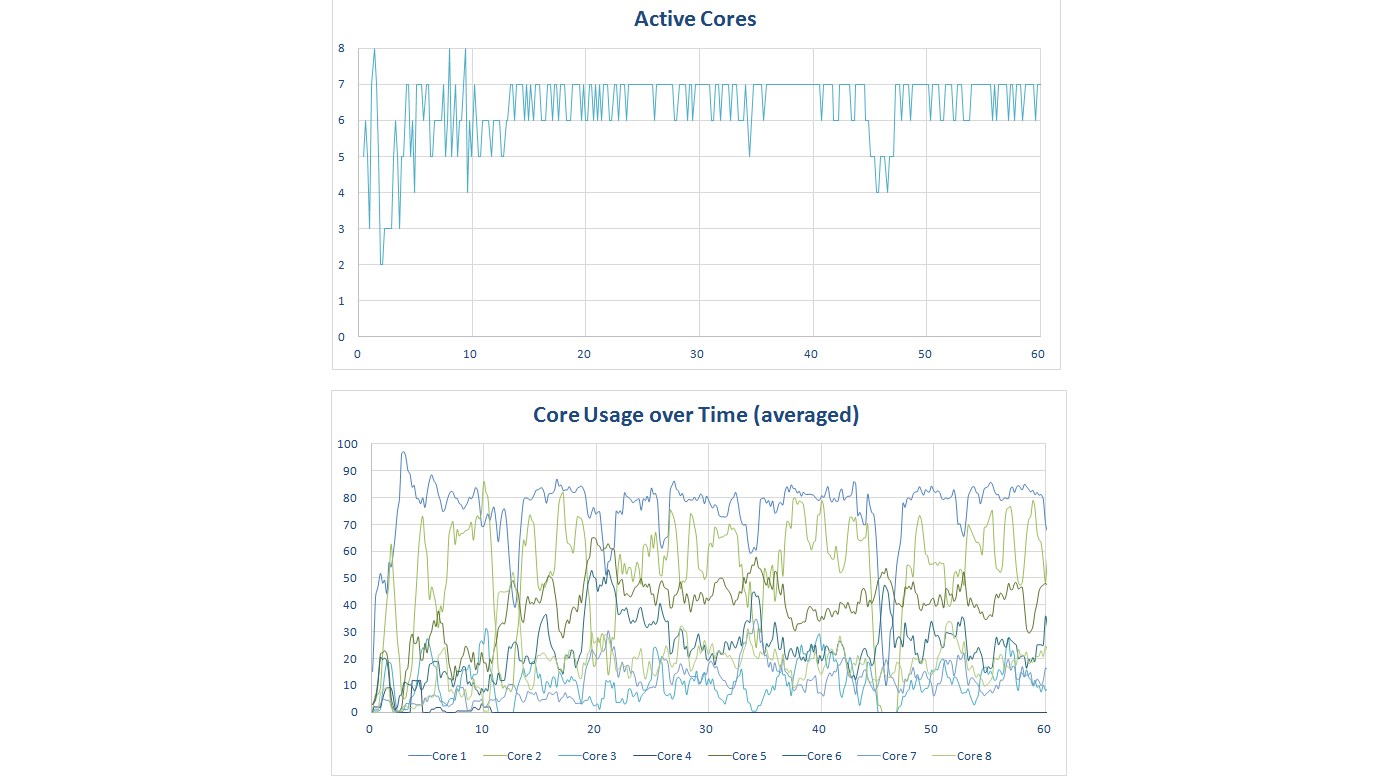

Of course every generation of processor pushes the boundaries and tries to get more performance for the same or less power; however, the balancing act remains. Therefore when a smartphone maker builds a device there are various parameters that are set in the software and the hardware to maintain this balance between performance and battery life. The hardware parameters are pretty much fixed, but the software parameters can be tweaked dynamically.

And this is what is happening when OEMs are being accused of cheating. It works like this: When the phone sees a well known benchmark running it can tweak the software so that the benchmark gets the maximum performance. This performance boost is only active while the benchmark is running and then things return to normal. If the phone remained in its full-throttle, maximum performance mode then the battery would soon be depleted and the device would get quite hot. But for just a minute or two, it isn’t a problem.

Every app has a unique name, something like uk.co.garysims.brightness.brightnessspark and you can search Google Play for apps according to their IDs. The ID for AnTuTu is com.antutu.ABenchMark and it is a relatively simple task for an OEM to add code to a device’s firmware that detects the popular benchmarks by looking for the IDs of the running apps. Companies like Primate Labs (makers of the popular benchmark suite Geekbench) can check for cheats by making a special version of their apps with a different ID, one that won’t be recognized by the firmware.
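The detection logic described above can be sketched in a few lines. This is not code from any OEM’s firmware; the profile names, the clock speeds and the `select_profile` function are all hypothetical, and the only real identifier is the AnTuTu ID quoted above.

```python
# Package IDs the (hypothetical) firmware watches for.
KNOWN_BENCHMARK_IDS = {"com.antutu.ABenchMark"}

# Assumed performance profiles; the numbers are illustrative only.
NORMAL_PROFILE = {"name": "normal", "max_freq_mhz": 1500}
BOOST_PROFILE = {"name": "boost", "max_freq_mhz": 2200}


def select_profile(foreground_app_id):
    """Pick a performance profile based on the ID of the running app."""
    if foreground_app_id in KNOWN_BENCHMARK_IDS:
        return BOOST_PROFILE  # special case: a recognized benchmark
    return NORMAL_PROFILE     # every other app gets the usual settings


print(select_profile("com.antutu.ABenchMark")["name"])
print(select_profile("uk.co.garysims.brightness.brightnessspark")["name"])
# A renamed benchmark build is not on the list, so it is treated as a normal app.
print(select_profile("com.example.renamedbenchmark")["name"])
```

Because the check is a simple lookup by ID, a benchmark published under a different ID falls straight through to the normal profile, which is what makes the renamed-app test possible.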

When the renamed app runs, it will run with the normal operating parameters and not the tweaked performance settings. If the results are significantly different then it shows that the device was treating the benchmark as a special case and not running it as it would other apps.
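As a rough sketch of that comparison, the function below flags a device when the publicly named build scores noticeably higher than the renamed build. The 5% tolerance and the example scores are assumptions made for illustration; a real test would need repeated runs to account for normal run-to-run variation.

```python
def looks_special_cased(original_score, renamed_score, tolerance=0.05):
    """Return True if the original build beats the renamed build by more than `tolerance`.

    `tolerance` is a relative margin (0.05 means 5%) and is an assumed value.
    """
    if renamed_score <= 0:
        raise ValueError("renamed_score must be positive")
    relative_gain = (original_score - renamed_score) / renamed_score
    return relative_gain > tolerance


# Hypothetical scores, not taken from any real device.
print(looks_special_cased(original_score=1150, renamed_score=1000))
print(looks_special_cased(original_score=1020, renamed_score=1000))
```

In the first call the original build is 15% ahead, which is flagged. In the second it is only 2% ahead, which falls inside the assumed margin and is not flagged.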

Is it actually cheating?

From the way that consumers react when an OEM is caught running benchmarks in special modes, it is clear that most people consider this cheating. I guess I do as well, but there is one important thing to remember: the scores from the boosted benchmarks are not fake or false in any way. They report the real performance of the device. The firmware can’t inject a fake score into the app or something like that. The numbers are what the device actually achieved. But while they might not be fake, they are certainly artificial, as the device can’t sustain such performance levels without overheating or rapidly draining the battery, which is why it is cheating.

It would be interesting if there was an agreed way to run benchmarks, maybe in a recognized “peak performance” mode available in Android that would allow OEMs to run benchmarks in one of several different settings. This could give us more transparency.

Wrap-up

For those of you that follow my system-on-a-chip showdown articles or other technical articles that I do, you will know that I often use my own benchmarks when testing device performance. It is precisely because of the cheating that I do this and it helps me ensure that my conclusions are just and fair.

The smartphone industry isn’t the only one which has been affected by software cheating. The motor industry has also been embroiled in scandals in recent times, and I am sure this won’t be the last time we see headlines about cheating in our industry or in others.

What do you think, are these dirty tricks or acceptable practices? Please let me know in the comments below.