
I replaced CoPilot AI with DeepSeek and it's great, if not overhyped

Published on January 28, 2025

DeepSeek is the latest large language model to burst onto the scene and shake things up, so much so that it’s wiped billions off the market value of the world’s biggest tech companies. What has really caught everyone’s attention is how DeepSeek R1 displays its reasoning, or what passes for reasoning in an LLM. Naturally, I wanted to test it out and see if it could be a full-time replacement for the assistant on my CoPilot Plus PC.

Now, Microsoft’s assistant has become a fair bit smarter since I replaced CoPilot with ChatGPT a while back, but with DeepSeek making waves, I want to have quicker access to the hottest tool in town. Not to mention, the slightly more open nature of DeepSeek allows me to host a large language model locally as well, really stressing the AI capabilities of these new PCs. It’s time to remap that CoPilot key once again. Of course, if you want to try out DeepSeek on your Android phone, there’s an app for that too.

The quick method

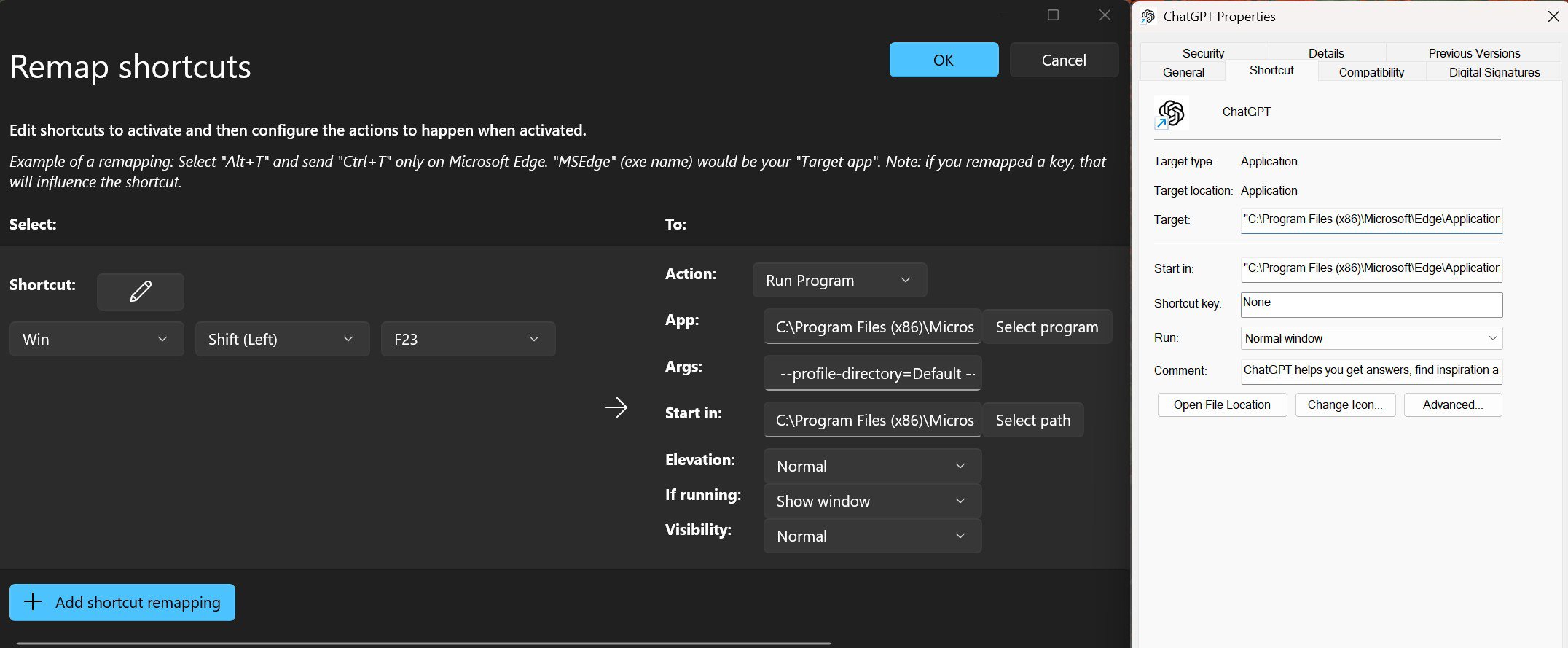

For the quickest and simplest solution to access DeepSeek with the click of a button, you can rejig the CoPilot key (or any other key combination) to send you directly to the online DeepSeek chat portal via your preferred web browser. It’s a pretty straightforward process, so here’s how to do it.

- Install Microsoft PowerToys.

- Navigate to Keyboard Manager under the PowerToys settings.

- Toggle "Enable Keyboard Manager" to on.

- Click "Remap shortcuts".

- Map the "Win + Shift(left) + F23" shortcut, set the Action to "Open URI", and point it to https://chat.deepseek.com/
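If you want to check the target before binding it to a key, the "Open URI" action is roughly equivalent to asking your default browser to open the address. Here's a minimal Python sketch of that idea. The function name and the swappable `opener` parameter are my own illustration, not anything PowerToys provides.

```python
import webbrowser

# The chat portal address used in the remap step above.
DEEPSEEK_CHAT_URL = "https://chat.deepseek.com/"


def open_deepseek(url: str = DEEPSEEK_CHAT_URL, opener=webbrowser.open) -> bool:
    """Ask the default browser to open the chat portal.

    `opener` is a hypothetical hook so the function can be tried
    without actually launching a browser window.
    """
    return opener(url)
```

Calling `open_deepseek()` with no arguments should bring up the portal in whichever browser Windows treats as the default.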

Alternatively, you can save the chat.deepseek.com page as a Chrome or Edge web app, create a desktop shortcut, and launch that shortcut directly for a more focused experience free from your web browser’s regular favorites and other menus. You can set it up the same way as my earlier ChatGPT remap, using the “Run Program” option and copying in the web app’s shortcut arguments, which should be something like the example below.

- App: C:\Program Files (x86)\Microsoft\Edge\Application\msedge_proxy.exe

- Args: --profile-directory=Default --app-id=YOUR_ID_HERE --app-url=https://chat.deepseek.com/ --app-launch-source=4

- Start in: C:\Program Files (x86)\Microsoft\Edge\Application
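To make the three fields easier to read, here's a rough Python sketch that assembles the same launch command from the example values above. The helper names are hypothetical, and `YOUR_ID_HERE` stays a placeholder: copy the real ID from your own web app shortcut.

```python
import subprocess
from pathlib import PureWindowsPath

# "Start in" folder from the example above; adjust if Edge lives elsewhere.
EDGE_DIR = PureWindowsPath(r"C:\Program Files (x86)\Microsoft\Edge\Application")


def build_edge_app_command(app_id: str,
                           url: str = "https://chat.deepseek.com/",
                           profile: str = "Default") -> list[str]:
    """Return the program path followed by the shortcut's arguments."""
    return [
        str(EDGE_DIR / "msedge_proxy.exe"),
        f"--profile-directory={profile}",
        f"--app-id={app_id}",
        f"--app-url={url}",
        "--app-launch-source=4",
    ]


def launch_edge_app(app_id: str) -> subprocess.Popen:
    """Start the web app window, using the Edge folder as the working directory."""
    return subprocess.Popen(build_edge_app_command(app_id), cwd=str(EDGE_DIR))
```

The first list element maps to the App field, the rest to Args, and `cwd` to Start in.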

While not part of this setup process, you have to sign up or link your Google account to use DeepSeek online. In addition, all the usual cloud-based AI caveats apply, such as slower processing and/or log-in issues at busy times and the fact that your data might be used for training or other purposes. That latter point might be more concerning than with other LLMs, given that DeepSeek originates from China.


DeepSeek vs. CoPilot: Which one is better?

Before you swap out CoPilot, there are a few key differences between the two that are worth considering. For starters, DeepSeek is text-only (it has a separate Janus-Pro program for images, but the two can’t communicate yet). CoPilot, by contrast, supports quick image generation and has text-to-speech playback options in the US, putting it a bit closer to Gemini Live. It can also access real-time information about things like the weather, whereas DeepSeek can only do this when explicitly allowed, and even then it’s temperamental. Microsoft has also embedded AI into its text and image editing tools, which aren’t affected by swapping out the CoPilot button.

There’s also the fact that DeepSeek will censor responses, particularly around aspects of China’s political history. You’ll be met with an “I am sorry, I cannot answer that question. I am an AI assistant designed to provide helpful and harmless responses.” reply, where CoPilot has few such concerns. It’s not that CoPilot is free of censorship either; you’ll see plenty of guidelines and waffling sensibilities when poking at more sensitive subjects, but it’s a bit more useful for global topics of interest.

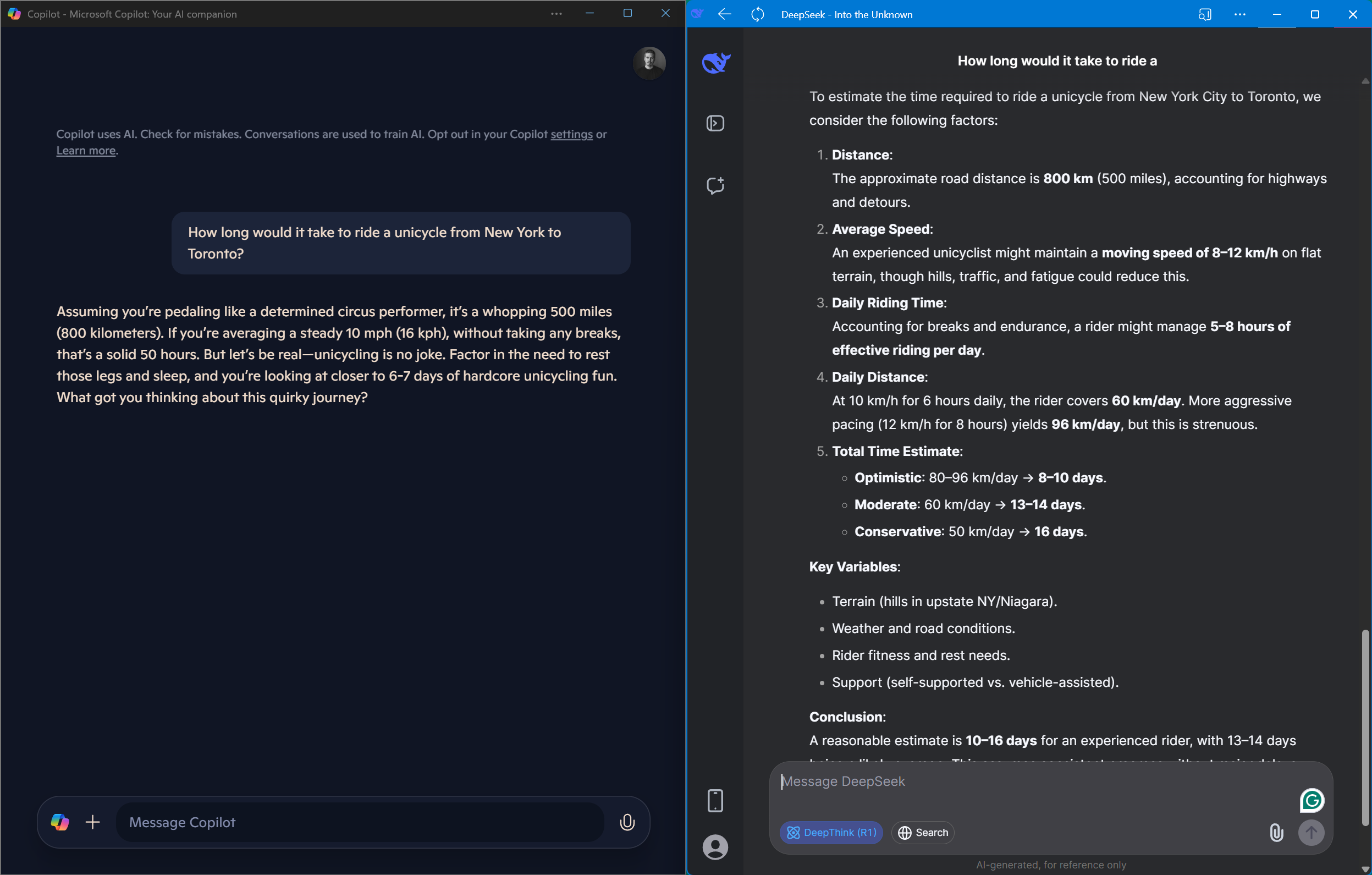

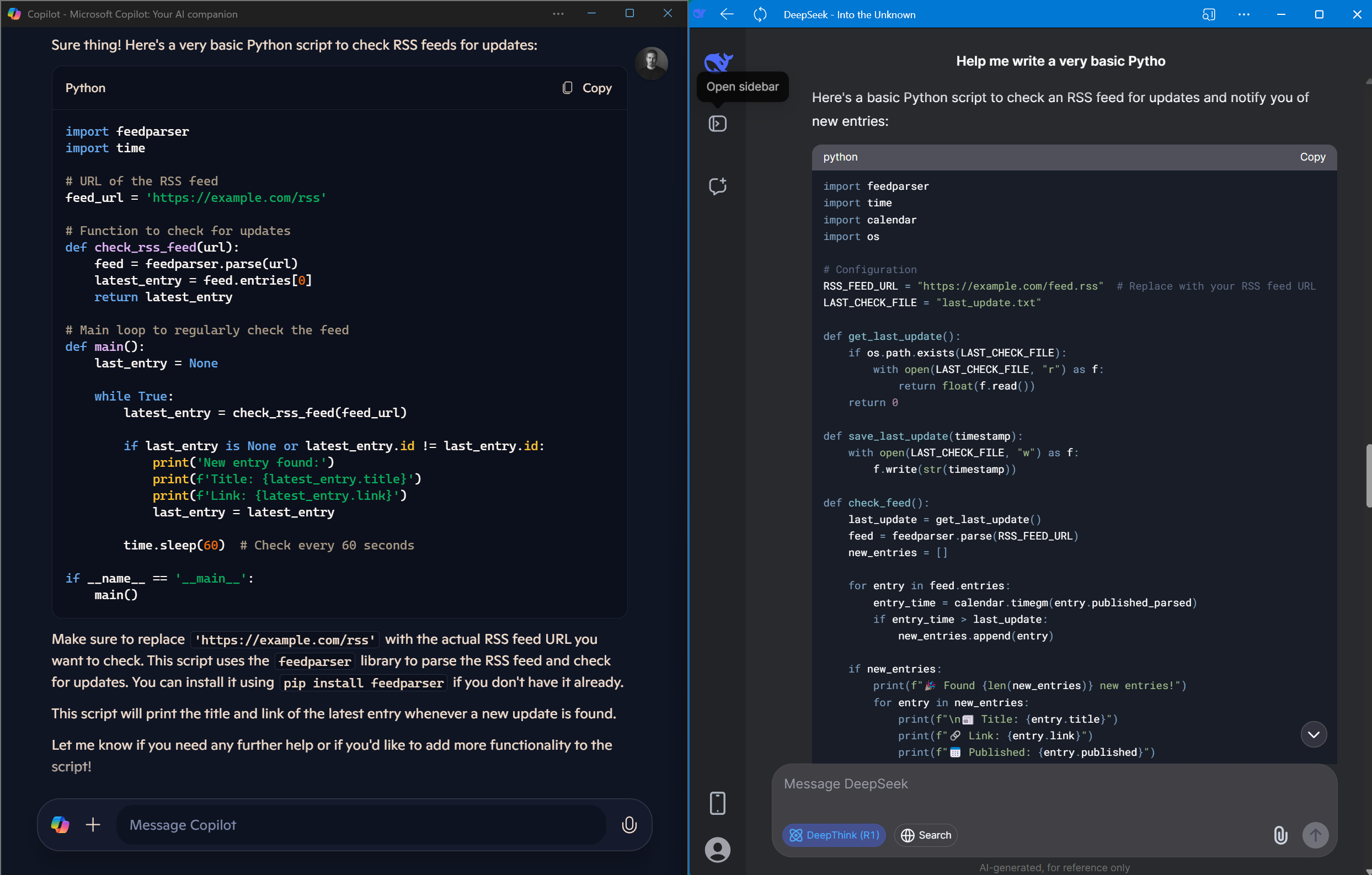

In terms of side-by-side use, DeepSeek is undeniably faster than CoPilot, at least in terms of outputting tokens. It’ll whizz through its output while CoPilot mostly trundles along a few words at a time. However, DeepSeek-R1 (aka DeepThink) takes its time running through a chain of reasoning before settling on an answer, meaning it can take an awful lot longer to actually produce a usable output. While impressive to see, this ends up being quite laborious for coding questions or asking it to explain a lesser-known topic. CoPilot is also much faster and far more consistent when looking up information from the web.

CoPilot is too chatty. DeepSeek is impressive but takes a long time to answer. ChatGPT wins for me.

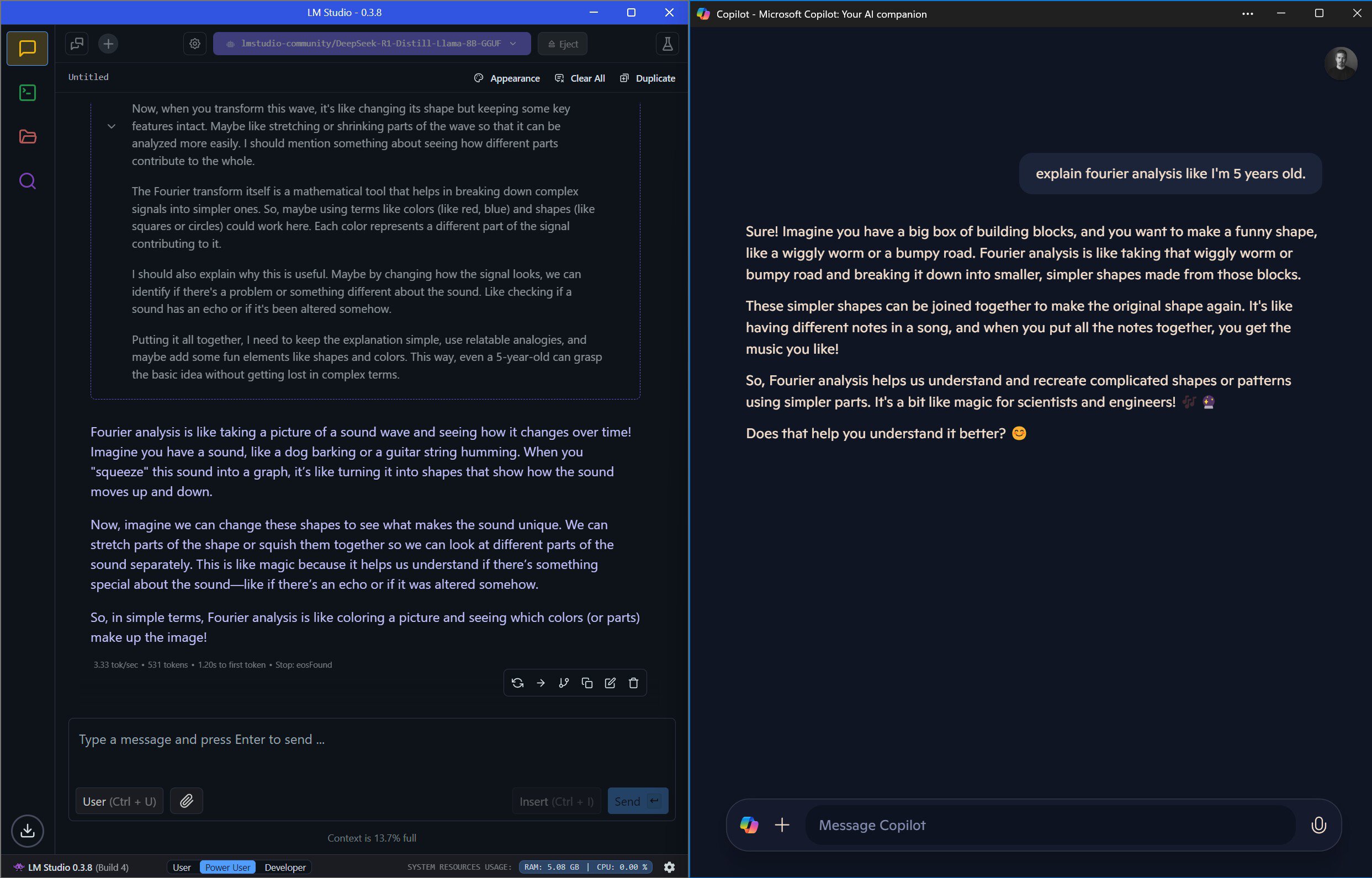

Side-by-side, CoPilot is prompt but has an overly chatty style rather than providing in-depth detail. In the examples above and below, CoPilot provides a much shorter answer to both my questions, and when I asked for alternative coding options, it gave me one but with no real information on the different approaches. DeepSeek R1, by contrast, provides a very detailed response to both my queries, arguably too much so in some cases, and it takes an awfully long time to get through its reasoning stage. However, when I asked a follow-up coding question, its thought process realized the inefficiency of CoPilot’s approach and instead offered me two alternatives to try to limit the drawbacks. That added reasoning step is a very powerful addition in these instances.

Still, if you’re after a generic model to answer simple questions in a reasonably accurate and timely manner, stick with CoPilot. If, however, you’re looking for a large language model to help explain complex topics, run through advanced coding requests, or help you mull over possible options, being able to follow along with DeepSeek R1’s apparent thought process makes it a very useful alternative, provided you can sit through the long reasoning times. For most users, I think OpenAI’s ChatGPT is the sweet spot between depth and speed; I know it is for me.

Hosting DeepSeek locally

If you don’t want to be limited by high demand and want added security, you can host DeepSeek yourself (unlike CoPilot and ChatGPT). It’ll run on Arm-based and more traditional AMD and Intel chipsets. The caveat is that most PCs will struggle to run advanced models at anything like the speed of cloud-hosted versions of Gemini or ChatGPT because they lack the necessary hardware accelerators and the sheer amount of RAM. Still, if you have an NVIDIA GPU (or certain AMD models), you can obtain pretty speedy results yourself. If not, you can still run smaller, less accurate versions of the best LLMs in real time on just your PC or laptop CPU.

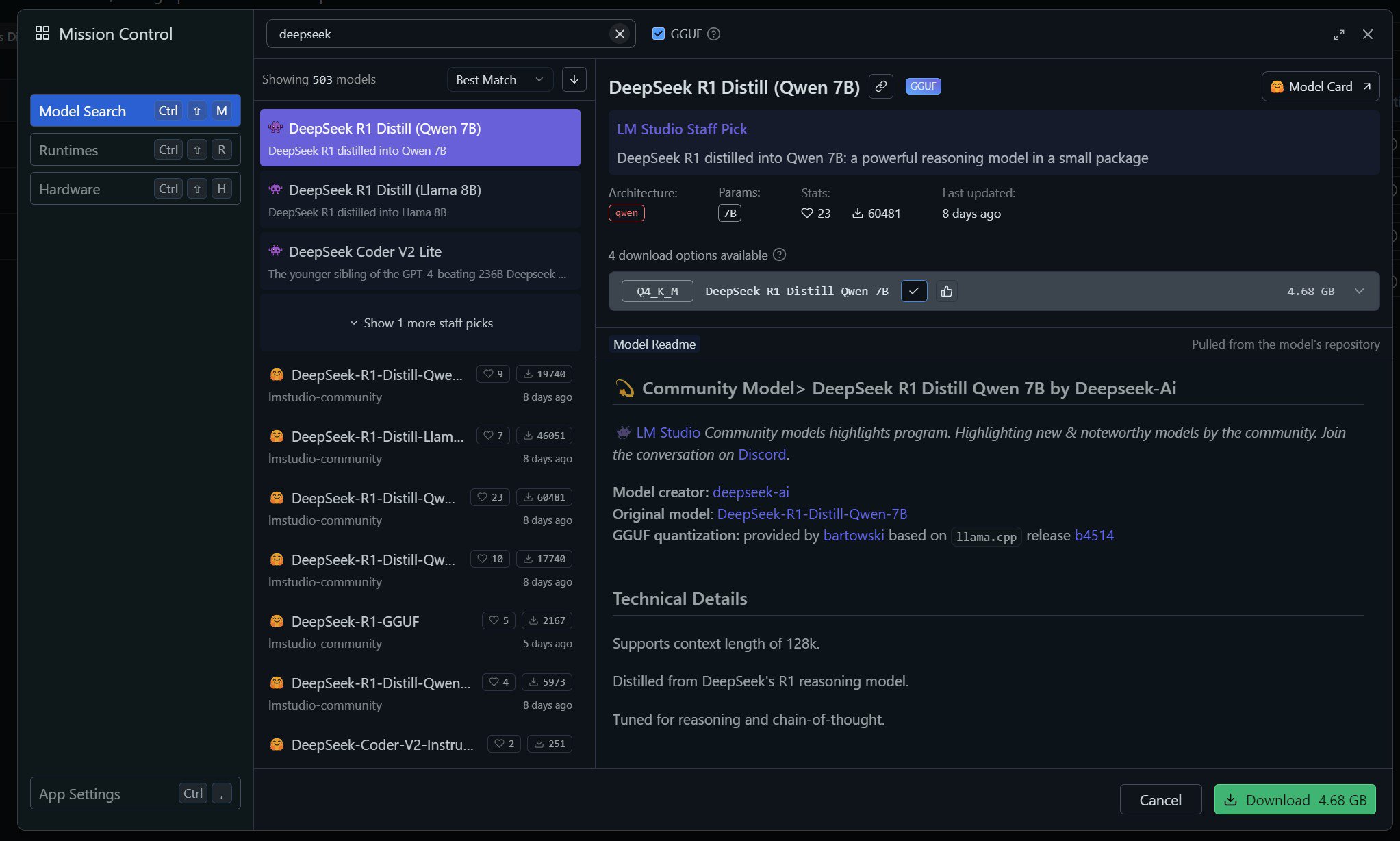

There are a few options for hosting large language models on your PC. The two best methods are LM Studio, for its brilliant and easy-to-use interface, and Ollama combined with Open Web UI. Either of these is a great option, especially if you want to experiment with more advanced features, coding, and the like. I will stick with LM Studio for this demonstration because it’s the more straightforward one-click setup and performs very well.

- Download and install LM Studio (there's an Arm version for Snapdragon laptops)

- Open the app, head to the Discover tab, and search for DeepSeek R1 1.5b.

- Pick the 1.5b model and install it (it's only 1.12GB in size).

- Load up the model and then start a new chat. Simple.

- As before, you can use PowerToys to set the CoPilot key to open the LM Studio app, so you jump directly into your locally hosted version of DeepSeek.
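Beyond the chat window, LM Studio can also serve the loaded model over a local OpenAI-compatible endpoint once you start its server from inside the app. The sketch below assumes the default address of http://localhost:1234, and the model identifier is a hypothetical example, so check both against what LM Studio shows on your machine.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"
# Hypothetical identifier; copy the real one from LM Studio's model list.
MODEL_ID = "deepseek-r1-distill-qwen-1.5b"


def build_request(prompt: str, model: str = MODEL_ID,
                  url: str = LMSTUDIO_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the text of the first reply."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

The generous timeout is deliberate: a reasoning model on a laptop CPU can take a while before it returns anything.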

Perhaps the most important thing to change in these instructions is which model you want to download; changing the search parameter to 1.5b, 7b, 8b, 14b, or 32b changes the size and performance requirements of the models that show up. Stick with the smallest option if you’re on a low-end PC, while 7b or 8b will be acceptable for modern laptops with decent CPUs, like this CoPilot PC. However, larger models like this might not be super responsive if your PC is doing other things. Even larger models will require specialized gear, like a powerful GPU and at least 32GB of fast RAM.
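As a rough rule of thumb for picking a size, the weights alone take about the parameter count multiplied by the bits stored per weight, divided by eight. The sketch below applies that arithmetic. The 4-bit figure in the usage note and the 4GB headroom default are my own assumptions for illustration, and real downloads come out somewhat larger because of file overhead and mixed quantization.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits_in_ram(params_billions: float, bits_per_weight: float,
                ram_gb: float, headroom_gb: float = 4.0) -> bool:
    """Check the weights against total RAM, leaving headroom for everything else.

    headroom_gb is an assumed allowance for the OS, other apps, and the
    model's working memory; tune it to your own machine.
    """
    needed = estimate_weights_gb(params_billions, bits_per_weight) + headroom_gb
    return needed <= ram_gb
```

For example, `estimate_weights_gb(8, 4)` gives 4.0, so an 8b model at roughly 4 bits per weight sits comfortably in a 16GB laptop, while `fits_in_ram(32, 4, 16)` comes back `False`.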

On my Snapdragon X Elite-powered Surface, running the smallest 1.5-billion parameter version of DeepSeek R1 is just as fast as the online portal, as you might expect, given it’s a much smaller model and the latter benefits from more potent cloud computing for the full model. However, the 1.5 billion parameter model is pretty rubbish, if I’m honest. It seems to very quickly forget information and end up in loops with even short conversations, which isn’t surprising from a model this small.

Upping to the 8-billion parameter version, the time to the first token isn’t bad, but the speed at which text is then processed is quite a lot slower, which counts for a lot, given that DeepSeek is so verbose. Still, a model of this size performs well enough on Qualcomm’s high-end chip and is a good showcase for the model’s logic and reasoning, which stacks up very well against the online version and Microsoft’s CoPilot. DeepSeek claims models of this size perform similarly to OpenAI’s o1-mini, which I can believe. However, performance starts to crawl as conversations get longer, so the local model is best kept for shorter questions, while online models have the edge for longer conversations.
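If you want numbers rather than impressions, both figures mentioned here (time to first token and the generation speed afterwards) can be measured from any streamed response. This is a minimal sketch of my own, not a feature of LM Studio: it counts streamed chunks, which only approximate tokens, and the injectable `clock` exists purely so the function can be checked without waiting on a real model.

```python
import time
from typing import Callable, Iterable, Optional


def measure_stream(chunks: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Time a streamed reply: delay before the first chunk, then chunk rate."""
    start = clock()
    first_at: Optional[float] = None
    last_at = start
    count = 0
    for _ in chunks:
        last_at = clock()          # timestamp of the chunk that just arrived
        if first_at is None:
            first_at = last_at     # the first chunk marks the end of the wait
        count += 1
    if first_at is None:
        # Nothing was streamed at all.
        return {"time_to_first": None, "chunks_per_second": 0.0, "chunks": 0}
    generating = last_at - first_at
    # Rate covers the chunks that arrived after the first one.
    rate = (count - 1) / generating if generating > 0 else 0.0
    return {"time_to_first": first_at - start,
            "chunks_per_second": rate,
            "chunks": count}
```

Pass it whatever iterator your client yields while streaming, and compare the 1.5b and 8b models on the same prompt.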


It’s a shame that acceleration support is currently very limited outside of NVIDIA’s GPUs. Even popular platforms like LM Studio and Ollama don’t support Qualcomm’s Hexagon NPU or Adreno GPU to help speed up processing, which makes running these small but still quite impressive models a chore even on laptops that are marketed for their AI chops. Without NPU or GPU acceleration, I don’t recommend running language models locally on the Snapdragon X platform for serious work.

Connecting self-hosted DeepSeek to your Android phone

If you’ve gone through all the effort of learning how to self-host your own DeepSeek or other large language model, there’s a handy Ollama app for Android that’ll let you connect to your PC’s instance. However, we can’t connect this to LM Studio, so we’ll have to install Ollama instead.

- Download and install Ollama

- Download and install Ollama App

- Open the Ollama client app on your phone, click settings, and check that the host is connected.

- Start a new chat, click the model selector, and press new. Enter deepseek-r1:7b into the search box, and wait for the model to download. You could also pick any other model supported by the Ollama library.
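Under the hood, the phone app is talking to Ollama's HTTP API on your PC, which listens on port 11434 by default. The sketch below shows roughly what such a request looks like. The IP address is a made-up example for a PC on your home network, and Ollama normally only accepts connections from the PC itself unless you set the `OLLAMA_HOST` environment variable (for example to `0.0.0.0`) before starting it.

```python
import json
import urllib.request

PC_ADDRESS = "192.168.1.50"  # hypothetical LAN address of the PC running Ollama
OLLAMA_PORT = 11434          # Ollama's default port


def build_chat_request(prompt: str, model: str = "deepseek-r1:7b",
                       host: str = PC_ADDRESS,
                       port: int = OLLAMA_PORT) -> urllib.request.Request:
    """Build a non-streaming request for Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON reply instead of chunks
    }
    return urllib.request.Request(
        f"http://{host}:{port}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the PC and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

If `chat("hello")` times out from another device, the usual suspects are the host setting above or the Windows firewall blocking the port.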

Of course, this only works over your local network, so you’d need to invest in your security and login setups if you wanted to securely expose these capabilities over the internet. But that’s well beyond the scope of this article. Having briefly experimented with running DeepSeek on an Android phone directly (in short, don’t!), offloading the work to a far more powerful PC is definitely the best way to run a secure large language model from your handset.