Affiliate links on Android Authority may earn us a commission.

Squeeze play: compression in video interfaces

Published on December 18, 2017

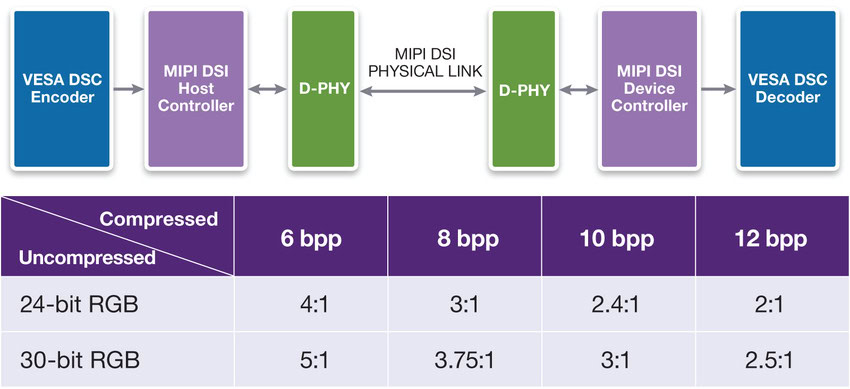

In 2014, the Video Electronics Standards Association (VESA) introduced version 1.0 of its Display Stream Compression (DSC) specification, the first standard system for compressing video specifically intended for use over hardwired display interfaces. The DSC standard was also endorsed by the MIPI Alliance, paving the way for widespread use in mobile devices and other applications beyond VESA’s original PC-centric focus.

Last year, version 1.2 was published, extending the feature set to include the 4:2:0 and 4:2:2 YCbCr formats commonly seen in digital television, and the group continues to develop and extend DSC’s capabilities and features.

But why the need for compression in the first place? Is it a good thing overall? Simply put, DSC’s adoption is driven by the seemingly insatiable appetite for more pixels, greater bit depth, and ever-higher refresh rates. While the real need for some of these is debatable, there’s no argument that devices, especially mobile ones, must deliver high-quality, high-definition images while consuming the bare minimum of power. That is what leads to the need for compression.

A 1920 x 1080 image (considered just a moderate resolution these days) at a 60 Hz refresh rate, using 24-bit-per-pixel RGB encoding, requires transmitting almost 3 gigabits of information every second between source and display, and that’s not even counting the inevitable overhead. Move up to “8K” (7680 x 4320) video, which is now coming to market, and there are 16 times as many pixels to move: nearly 48 billion bits of information every second. That’s fast enough to fill a 1 TB drive in under three minutes.
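
To check the arithmetic above, here is a small Python sketch. The helper name `raw_bit_rate` is hypothetical, and the figures assume “8K” means 7680 x 4320, 24 bits per pixel, a decimal terabyte (10^12 bytes), and active pixels only, with no blanking or protocol overhead.

```python
# Hypothetical helper: active-video bit rate only (no blanking, no protocol overhead).
def raw_bit_rate(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> int:
    """Uncompressed bits per second for the visible pixels of a video stream."""
    return width * height * refresh_hz * bits_per_pixel

full_hd = raw_bit_rate(1920, 1080, 60, 24)   # 1080p60, 24-bit RGB
uhd_8k = raw_bit_rate(7680, 4320, 60, 24)    # "8K" assumed to mean 7680 x 4320

print(f"1080p60: {full_hd / 1e9:.2f} Gbit/s")        # 2.99 Gbit/s
print(f"8K60:    {uhd_8k / 1e9:.2f} Gbit/s")         # 47.78 Gbit/s
print(f"8K is {uhd_8k // full_hd}x the 1080p rate")  # 16x

# Assumes a decimal terabyte: 10**12 bytes = 8 * 10**12 bits.
seconds_to_fill_1tb = 8 * 10**12 / uhd_8k
print(f"1 TB fills in {seconds_to_fill_1tb / 60:.1f} minutes at 8K60")  # 2.8
```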

Digital interface standards like DisplayPort and HDMI have done an admirable job of keeping up with this growing appetite for data capacity. DisplayPort 1.4 offers a raw link rate of just over 32 Gbit/s (32.4 Gbit/s across four lanes), and future versions are expected to push that to 40 Gbit/s and higher. But these increases come at a price: all else being equal, faster transmission rates always take more power, on top of the generally higher power requirements of higher-resolution displays. Something has to give.

Compression is actually a pretty old idea, and it’s based on the fact that data (and especially image data) generally contains a lot of unnecessary information; there’s a high degree of redundancy.

Let’s say I point an HDTV camera at a uniformly white wall. It’s still sending out those three gigabits of data every second, even though after the first frame it might as well be sending a simple “this frame is the same as the last one” message. Even within that first frame, if the picture is truly a uniform white, you should be able to get away with sending a single white pixel and then indicating, somehow, “don’t worry about the rest; they all look like that!” The overwhelming majority of that 3 Gbit/s data torrent is wasted.
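
A minimal sketch of the redundancy idea, using plain run-length encoding and a frame-repeat check. These are generic textbook techniques chosen for illustration, not what DSC itself does, and the function names are hypothetical.

```python
from itertools import groupby

Pixel = tuple[int, int, int]  # (R, G, B), 8 bits per channel

def rle_encode(pixels: list[Pixel]) -> list[tuple[Pixel, int]]:
    """Collapse each run of identical pixels into a (pixel, run_length) pair."""
    return [(value, sum(1 for _ in run)) for value, run in groupby(pixels)]

def rle_decode(runs: list[tuple[Pixel, int]]) -> list[Pixel]:
    """Expand (pixel, run_length) pairs back into the full list of pixels."""
    return [value for value, count in runs for _ in range(count)]

def unchanged(current: list[Pixel], previous: list[Pixel]) -> bool:
    """True when a frame could be replaced by a 'same as the last one' message."""
    return current == previous

WHITE: Pixel = (255, 255, 255)
frame = [WHITE] * (1920 * 1080)        # one uniformly white 1080p frame, flattened

encoded = rle_encode(frame)
print(encoded)                         # [((255, 255, 255), 2073600)]
print(rle_decode(encoded) == frame)    # True: nothing was lost
print(unchanged(frame, list(frame)))   # True: the next frame need not be resent
```

Over two million pixels collapse into a single (pixel, count) pair, which is exactly the “send one white pixel and say they all look like that” idea.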

In a perfect situation we could eliminate everything but that single pixel of information and still wind up with a picture identical to the original: a perfectly uniform white screen. This would be completely lossless compression, provided we can assume that “perfect” situation. But eliminating redundancy does more than reduce the amount of data you need to transmit; it also makes it far more important that the data you do send gets through unchanged. In other words, you’ve made your video stream much more sensitive to noise. Imagine what happens if, while that one pixel’s worth of “white” that sets the color for the whole screen is being sent, a burst of noise knocks out all the blue information. You wind up with red and green but no blue, which turns the white screen yellow. And since we’ve stopped sending all those redundant frames, it stays that way until a change in the source image causes something new to be sent.
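
The noise problem can be shown in a few lines, assuming the idealized “one pixel plus a count” encoding described above and a hypothetical noise burst (`knock_out_blue`) that zeroes the blue channel of whatever it hits. This is a toy model, not a simulation of any real link.

```python
WIDTH, HEIGHT = 1920, 1080
WHITE = (255, 255, 255)

def knock_out_blue(pixel: tuple[int, int, int]) -> tuple[int, int, int]:
    """Hypothetical noise burst: the blue channel is lost, red and green survive."""
    red, green, _ = pixel
    return (red, green, 0)

# Uncompressed: every pixel is sent, so the burst damages only the pixel it hits.
raw_frame = [WHITE] * (WIDTH * HEIGHT)
raw_frame[0] = knock_out_blue(raw_frame[0])
damaged_raw = sum(p != WHITE for p in raw_frame)

# Idealized compression: one pixel value plus a count stands for the whole frame.
value, count = (WHITE, WIDTH * HEIGHT)
decoded = [knock_out_blue(value)] * count   # the same burst hits the only pixel sent
damaged_compressed = sum(p != WHITE for p in decoded)

print(damaged_raw)          # 1
print(damaged_compressed)   # 2073600: every pixel on screen is wrong
print(decoded[0])           # (255, 255, 0): red + green, i.e. yellow
```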

So compression, even “mathematically lossless” compression, can still have an impact on the image quality at the receiving end. The goal is to come up with a compression system that is visually lossless, meaning it results in images indistinguishable from the uncompressed video signal by any human viewer. Careful design of the compression system can enable this while still allowing a significant reduction in the amount of data sent.

Imagine that instead of a plain white image, we’re sending typical video: coverage of a baseball game, for instance. But instead of sending each pixel of every frame, we send every other pixel: odd pixels on one frame, and even pixels on the next. I’ve just cut the data rate in half, but thanks to the redundancy of information across frames, and the fact that I’m still maintaining a 60 Hz rate, the viewer would hardly see the difference. Each “missing” pixel is filled in on the very next frame, too rapidly to be noticed. That’s not something that’s actually used in any compression standard, as far as I know, but it shows how a simple “visually lossless” compression scheme might work.
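
A sketch of the every-other-pixel scheme, which, as noted, isn’t used by any standard. The sender and receiver functions are hypothetical, a scan line is modeled as a list of made-up brightness values, and the receiver simply holds each pixel until its next update arrives.

```python
def send_half(frame: list[int], frame_number: int) -> dict[int, int]:
    """Hypothetical sender: even-indexed pixels on even frames, odd-indexed on odd frames."""
    start = frame_number % 2
    return {i: frame[i] for i in range(start, len(frame), 2)}

def receive(screen: list[int], packet: dict[int, int]) -> None:
    """Hypothetical receiver: update the pixels that arrived, hold the rest from last frame."""
    for i, value in packet.items():
        screen[i] = value

scene = [10, 20, 30, 40, 50, 60, 70, 80]   # one static scan line (made-up brightness values)
screen = [0] * len(scene)                   # the display starts out blank

pixels_sent = 0
for frame_number in range(2):              # two consecutive 60 Hz frames
    packet = send_half(scene, frame_number)
    pixels_sent += len(packet)
    receive(screen, packet)

print(screen == scene)                      # True: the static image is fully rebuilt
print(pixels_sent / (2 * len(scene)))       # 0.5: half the data of two full frames
```

If the scene changes between the two frames, half of the pixels on screen are always one frame out of date, which is where visible artifacts creep in.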

If you’re familiar with the history of video, that example may have sounded awfully familiar. It’s very close to interlaced transmission, which was used in the original analog TV systems and alternates odd and even scan lines rather than individual pixels. Interlacing can be understood as a crude form of data compression. It isn’t completely visually lossless; some visible artifacts are to be expected, especially when objects move within the image. But even such a simple system gives surprisingly good results while saving a lot of bandwidth.
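
Interlacing splits each picture into two fields, one holding the even-numbered scan lines and one the odd. This toy Python model (hypothetical helper names, and a 6 x 4 “picture” of ones and zeros) shows why a static scene survives the round trip while motion between the two fields produces the familiar combing artifact.

```python
Frame = list[list[int]]

def split_fields(frame: Frame) -> tuple[Frame, Frame]:
    """Split a frame into its even-numbered and odd-numbered scan lines (two fields)."""
    return frame[0::2], frame[1::2]

def weave(even_field: Frame, odd_field: Frame) -> Frame:
    """Interleave two fields back into one full-height frame."""
    frame: Frame = []
    for even_line, odd_line in zip(even_field, odd_field):
        frame.extend([even_line, odd_line])
    return frame

def bar_frame(x: int, width: int = 6, height: int = 4) -> Frame:
    """Toy picture: a 1-pixel-wide vertical bar (1) at column x on black (0)."""
    return [[1 if col == x else 0 for col in range(width)] for _ in range(height)]

# Static scene: both fields describe the same picture, so weaving them is exact.
still = bar_frame(1)
print(weave(*split_fields(still)) == still)   # True

# Moving scene: the odd field is captured after the bar has moved two columns.
even_t0, _ = split_fields(bar_frame(1))
_, odd_t1 = split_fields(bar_frame(3))
combed = weave(even_t0, odd_t1)
for line in combed:
    print("".join("#" if p else "." for p in line))
# .#....
# ...#..
# .#....
# ...#..
```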

VESA’s DSC specification is a good deal more sophisticated, and produces truly visually lossless results in a large number of tests. The system can provide compression on the order of 3:1, allowing even “8K” video streams to be carried over earlier versions of DisplayPort or HDMI. It does this via a relatively simple yet elegant algorithm that can be implemented with a minimum of additional circuitry, keeping the power load down to something easily handled in a mobile product, and possibly even providing a net savings over running the interface at the full, uncompressed rate.
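
As a rough link-budget check of the 3:1 figure, the sketch below compares 8K60 stream sizes against four-lane DisplayPort capacity after 8b/10b coding. It assumes a 24-bit source compressed to 8 bits per pixel and ignores blanking intervals. It only checks raw bandwidth; it says nothing about which DisplayPort or HDMI versions actually support DSC signaling.

```python
LANES = 4
CODING_EFFICIENCY = 8 / 10   # 8b/10b: every 8 data bits travel as 10 bits on the wire

# Per-lane raw link rates in Gbit/s.
LINK_RATES = {
    "HBR2 (DisplayPort 1.2)": 5.4,
    "HBR3 (DisplayPort 1.3/1.4)": 8.1,
}

def payload_gbps(lane_rate: float) -> float:
    """Usable data rate of a four-lane link after 8b/10b coding."""
    return lane_rate * LANES * CODING_EFFICIENCY

def video_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: float) -> float:
    """Active-pixel bit rate in Gbit/s; blanking is ignored (a simplifying assumption)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9

raw_8k = video_gbps(7680, 4320, 60, 24)       # uncompressed 24-bit RGB
dsc_8k = video_gbps(7680, 4320, 60, 24 / 3)   # roughly 3:1, i.e. 8 bits per pixel

for name, lane_rate in LINK_RATES.items():
    capacity = payload_gbps(lane_rate)
    print(f"{name}: {capacity:.2f} Gbit/s available; "
          f"raw 8K60 ({raw_8k:.2f}) fits: {raw_8k <= capacity}; "
          f"3:1 8K60 ({dsc_8k:.2f}) fits: {dsc_8k <= capacity}")
```

Under these assumptions the uncompressed stream (about 47.8 Gbit/s) overruns both links, while the 3:1 stream (about 15.9 Gbit/s) fits within even the HBR2 payload of 17.28 Gbit/s.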

If you’re worried about any sort of compression still having a visible effect on your screen, consider this. Over-the-air HDTV broadcasts are possible only because of the very high degree of compression that was built into the digital TV standard. Squeezing a full-HD broadcast, even one whose source is an interlaced format like “1080i,” requires compression ratios on the order of 50:1 or more. The 1.5 Gbit/s of a 1080i, 60 Hz video stream had to be shoehorned into a 6 MHz channel, which provides at best a little more than 19 Mbit/s of capacity. HDTV broadcasts typically work with less than a single bit per pixel in the final compressed data stream as it’s sent over the air, yet still produce a clear, sharp HD image on your screen. When unusually high noise levels come up, the now-familiar blocky “compression artifacts” of digital TV appear, but this really doesn’t happen all that often. Proprietary systems such as broadcast satellite or cable TV can use even heavier compression, and as a result show these sorts of problems much more frequently.
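
The broadcast figures above can be checked with a little arithmetic. This sketch assumes about 19.39 Mbit/s of payload in a 6 MHz ATSC channel and 24-bit pixels, and treats 1080i as 30 full frames per second. Since audio and other data share the channel, the bits-per-pixel result is an upper bound.

```python
# Assumed figures: roughly 19.39 Mbit/s of payload in a 6 MHz ATSC channel, and
# 1080i at 60 fields per second treated as 30 full 1920 x 1080 frames per second.
CHANNEL_BPS = 19.39e6
WIDTH, HEIGHT, FRAMES_PER_SECOND, SOURCE_BPP = 1920, 1080, 30, 24

pixels_per_second = WIDTH * HEIGHT * FRAMES_PER_SECOND
source_bps = pixels_per_second * SOURCE_BPP
compression_ratio = source_bps / CHANNEL_BPS
on_air_bpp = CHANNEL_BPS / pixels_per_second   # upper bound: audio and data share the channel

print(f"source stream: {source_bps / 1e9:.2f} Gbit/s")          # 1.49
print(f"required compression: about {compression_ratio:.0f}:1")   # about 77:1
print(f"bits per pixel on air: at most {on_air_bpp:.2f}")          # 0.31
```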

In the better-controlled environment of a wired digital interface, and with the much milder compression ratios of DSC, images transmitted using this system will probably be visually perfect. In mobile devices, compression standards such as these will give us the means for connecting high-res external displays, like VR headsets, without chewing through the battery or needing a huge connector.

You’ll very likely never even know it’s there.