
Phones we caught cheating benchmarks in 2018

Published on December 31, 2018

Smartphone companies cheating benchmarks is a story as old as smartphones themselves. Ever since phones started crunching through Geekbench, AnTuTu, or any other test, manufacturers have been trying to win by any method possible.

We had Gary Sims from Gary Explains walk through why and how OEMs cheat back in February last year, and it appears the process described then is the same today, generously called “benchmark optimization.”

So what’s happening? Certain companies appear to hardcode their devices to offer maximum possible performance when a benchmark app test is detected.

How is a benchmark identified? Android Authority understands that both app names and detection of performance demands are important — so an app called “Geekbench” that is demanding maximum performance is enough for the smartphone to put aside normal battery life conservation and heat dissipation techniques. It’s a complicated area, but what’s clear is that there’s a difference that can be tested.
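To make the idea concrete, here is a minimal Python sketch of detection based on an app's name plus its performance demand. It is purely illustrative: the watch list `KNOWN_BENCHMARKS`, the `LOAD_THRESHOLD` value, and the function `pick_power_profile` are assumptions made up for this example, not any manufacturer's actual code.

```python
# Hypothetical model of name-plus-load benchmark detection.
# Nothing here is taken from real OEM firmware.

KNOWN_BENCHMARKS = {"geekbench", "antutu", "3dmark"}  # assumed watch list
LOAD_THRESHOLD = 0.9  # assumed CPU-demand cutoff, on a 0.0 to 1.0 scale


def pick_power_profile(app_name: str, cpu_demand: float) -> str:
    """Return 'unrestricted' only for a recognized, demanding benchmark."""
    name = app_name.lower()
    recognized = any(bench in name for bench in KNOWN_BENCHMARKS)
    if recognized and cpu_demand >= LOAD_THRESHOLD:
        return "unrestricted"  # set aside thermal and battery limits
    return "default"  # normal power management


print(pick_power_profile("Geekbench 4", 0.95))       # recognized name
print(pick_power_profile("Stealth Test App", 0.95))  # same load, unknown name
```

Under these assumptions, the same workload gets a different power profile depending only on what the app is called, which is the kind of gap a renamed benchmark can expose.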


Everything running flat out and pushing past normal limitations isn’t the real life behavior that you get day-in, day-out. What’s real, and what’s not? We worked hard to find out.

What we did to find the number benders

In our Best of Android 2018 testing, we worked with our friends at Geekbench to configure a stealth Geekbench app. We don’t know the exact details as to what changed, but we trust Geekbench when they say they cloaked the app. And the results shown in our performance testing prove it.

It might surprise you to know this method caught out at least six different phones, including devices made by HUAWEI, HONOR, OPPO, HTC, and Xiaomi. Not all devices on the list showed cheating behavior during both single-core and multi-core tests; the HTC U12 Plus and Xiaomi Mi 8 only showed significant decreases during the multi-core test.


The lowest result identified beyond signal noise was a three percent jump in scores, but we found up to a 21 percent leap in two devices: the HUAWEI P20 Pro and HONOR Play. Hmm!

Here are graphs of the results, showing regular Geekbench scores versus the stealth Geekbench scores from the phones that detected the app and modified their behavior. For reference, we included in the chart below a phone that doesn’t appear to be cheating, to give you an idea of what the difference between runs should look like. We picked the Mate 20 from HUAWEI.

These results are the averages of five benchmark runs, all of which had slight percentage differences, as you see in the Mate 20 detail. Cheaters do best in the regular score (in yellow), and drop back when they don’t recognize benchmarking (blue is the stealth result).
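As a rough sketch of the arithmetic involved, the Python below averages five runs per app and reports how much higher the regular score is than the stealth score. The scores are made-up placeholders, not our measured results, and `NOISE_FLOOR_PERCENT` is an assumed cutoff for run-to-run variation rather than the threshold used in our testing.

```python
from statistics import mean

NOISE_FLOOR_PERCENT = 2.0  # assumed cutoff for normal run-to-run variation


def inflation_percent(regular_runs, stealth_runs):
    """Percent by which the average regular score exceeds the stealth average."""
    regular = mean(regular_runs)
    stealth = mean(stealth_runs)
    return (regular - stealth) / stealth * 100


def looks_inflated(regular_runs, stealth_runs):
    """True when the gap between the averages is larger than the assumed noise floor."""
    return inflation_percent(regular_runs, stealth_runs) > NOISE_FLOOR_PERCENT


# Placeholder scores for illustration only (five runs each).
regular = [1900, 1910, 1890, 1905, 1895]
stealth = [1600, 1590, 1610, 1605, 1595]

print(f"{inflation_percent(regular, stealth):.1f}% higher in regular Geekbench")
print("Flagged:", looks_inflated(regular, stealth))
```

Averaging first keeps a single unusually fast or slow run from deciding whether a phone gets flagged.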

First, the single-core results:

Then the multi-core results:

Look at those drops! Remember, you want the same performance when running any graphics-intensive game or any performance-demanding app, not just the benchmark app with the trademarked name.

HUAWEI shows significant discrepancies on the list, but not with the latest Mate 20.

There are some big opportunists on display, along with some smaller discrepancies by the likes of the HTC U12 Plus and the Xiaomi Mi 8.

We also see that the results for the HUAWEI Mate 20 (our reference device) are fine, despite HUAWEI/HONOR’s obvious push to show the best possible benchmark performance on the P20, P20 Pro, and HONOR Play. That’s likely because HUAWEI added a setting called Performance Mode on the Mate 20 and Mate 20 Pro. When this setting is toggled on, the phone runs at its full capacity, without any constraints to keep the device cool or save battery life. In other words, the phone treats all apps as benchmark apps. By default, Performance Mode is disabled on the Mate 20 and Mate 20 Pro, and most users will want to keep it disabled in order to get the best experience. HUAWEI added the option after some of its devices were delisted from the 3DMark benchmark database, following a report from AnandTech.

Moving on, let’s take a look at a chart showing which benchmark results were more heavily inflated, percentage-wise:

As you can see, HTC and Xiaomi played around with small boosts of less than five percent. The P20 range, the HONOR Play, and the notably ambitious OPPO R17 Pro (packing the Qualcomm Snapdragon 710) put their thumb on the scale much more heavily. OPPO really went for it with the single-core scores.

Cheating is as old as time

These sorts of tests have caught out most manufacturers over the years, or at least brought accusations of cheating, from the Samsung Galaxy S4 to the LG G2 back in 2013, to more recent naughtiness from OnePlus and Meizu. OPPO even spoke with us about why its benchmark results were so artificial in November:

When we detect the user is running applications like games or running 3DMark benchmarks that require high performance, we allow the SoC to run at full speed for the smoothest experience. For unknown applications, the system will adopt the default power optimization strategy.

OPPO’s explanation suggests it can detect apps that “require high performance,” but when the app isn’t given a benchmark-related name and is given some stealth updates, those same apps no longer appear to receive the same special treatment. That means you’d better hope OPPO can detect the game you want to play at maximum performance, or you’ll get a drop in grunt of up to 25 percent, at least on the OPPO R17 Pro.

But not everyone cheats

During Best of Android 2018, we tested 30 of the most powerful and modern Android devices. The devices we talked about above cheated, but that still leaves 24 devices that fought fair and square. Besides our reference device, the Mate 20 (and the Mate 20 Pro), the list includes the Samsung Galaxy Note 9, Sony Xperia XZ2, vivo X21, LG G7 ThinQ, Google Pixel 3 XL, OnePlus 6T, and the Xiaomi Mi A2, to name a few.

The inclusion of the OnePlus 6T on the “nice list” is worth highlighting — last year, the company was caught gaming Geekbench and other benchmark apps. Fortunately, OnePlus seems to have abandoned the practice. Along with HUAWEI’s addition of Performance Mode as a user-accessible toggle, this makes us hopeful that fewer and fewer OEMs will resort to shady tactics when it comes to benchmarks.

Benchmarks are getting smarter: Speed Test G

We’ve known for some time that benchmarks don’t tell us the full story, and that’s where “real-world” tests come in. These followed the idea you could start smartphones, run through the same apps, load in and load out, and test which ones would do best over a given set of app runs and loops through a controlled process. The problem with these kinds of tests is that they are fundamentally flawed, as Gary Sims has pointed out in great detail.

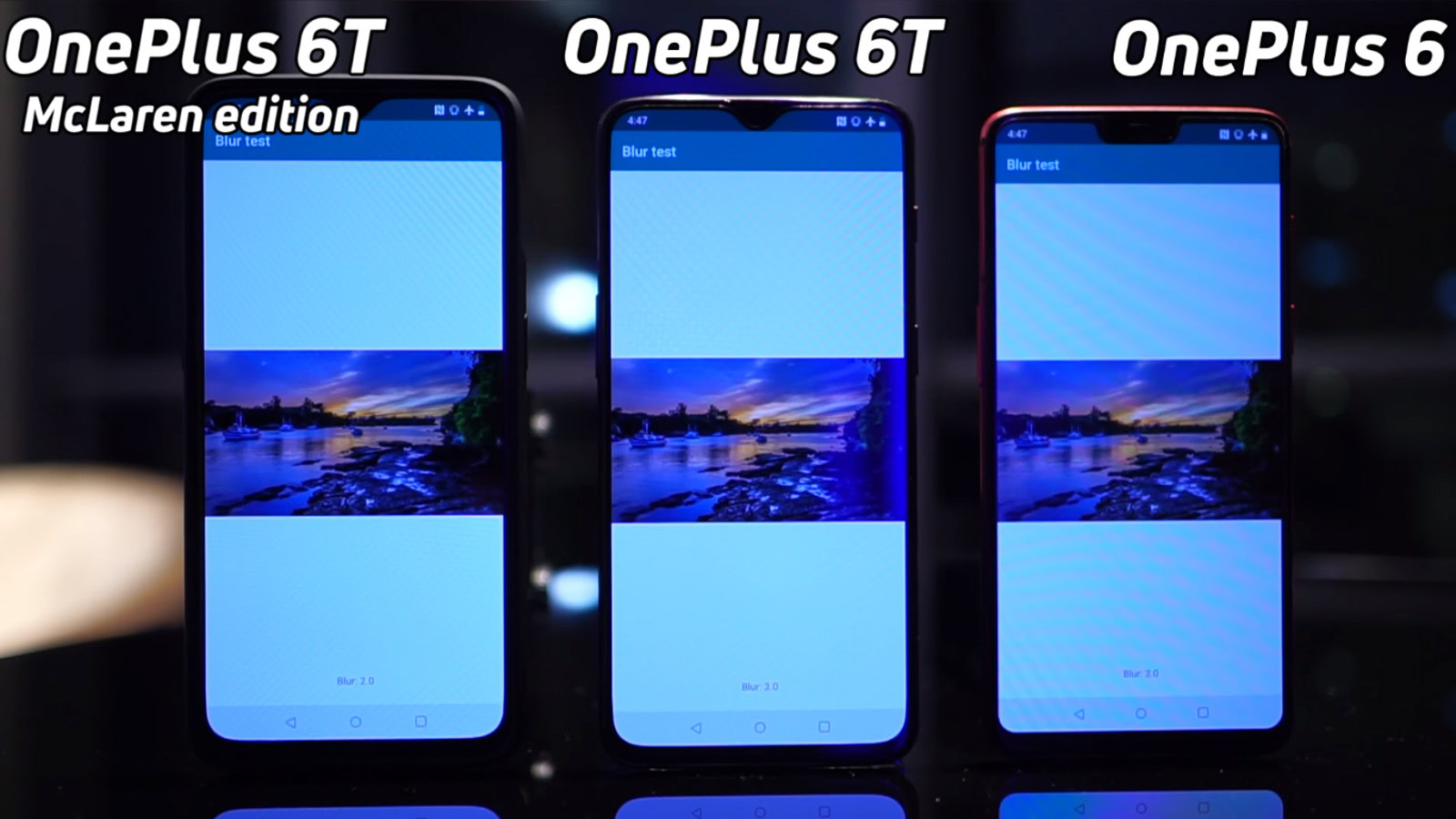

That’s why Gary Sims created Speed Test G, a specially crafted Android app that offers a more genuine and realistic real-world set of problems and tests that importantly cannot be gamed. It’s already showing amazing results and clearing up lots of confusion about what makes a phone “fast” or “powerful” — for example, the OnePlus 6, 6T and 6T McLaren Edition (with more RAM than the rest) all returned the exact same Speed Test G result.

That’s because all three devices fundamentally have the same internals, except for the additional RAM. While extra RAM might sound nice, it doesn’t actually solve many performance problems. Gary’s test doesn’t perform the traditional app reload cycle (where more RAM typically shows its value) because the Linux kernel’s RAM management algorithm is complex, which makes it hard to measure reliably.

You have to wonder: how many apps does the average user need to keep in RAM, and for how long? Of course, that won’t stop Lenovo from bringing out a phone in less than a month with 12GB of RAM. Save some for the rest of us!

In any case, we’re greatly appreciative of our friends at Geekbench for helping us with a stealth benchmark app to ensure we found the truest results possible.