Affiliate links on Android Authority may earn us a commission.

What is a GPU and how does it work? - Gary explains

Besides the CPU, one of the most important components in a System-on-a-Chip (SoC) is the Graphics Processing Unit, otherwise known as the GPU. However, for many people the GPU is shrouded in mystery. You might know it has something to do with 3D gaming, but beyond that you may not really understand what is going on. With that in mind, let’s take a peek behind the curtain.

The GPU is a special piece of hardware that is really fast at certain types of math calculations, especially floating point, vector, and matrix operations. It can convert 3D model information into a 2D representation while applying different textures, lighting effects, and so on.

3D models are made up of small triangles. Each corner of a triangle is called a vertex, and it is defined by its X, Y, and Z coordinates. To make a triangle you need three vertices. When building complex models, vertices can be shared between neighboring triangles, meaning that if your model has 500 triangles, it probably won’t have 1,500 vertices.
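Here is a minimal Python sketch of how that sharing usually works, assuming an indexed mesh layout: one list of vertices, plus a list of triangles that refer to those vertices by index. The `grid_mesh` helper is hypothetical; it builds a flat grid of squares and splits each square into two triangles.

```python
def grid_mesh(cols, rows):
    """Build a flat grid of squares, each split into two triangles.

    Returns (vertices, triangles): vertices is a list of (x, y, z) tuples,
    triangles is a list of 3-tuples of indices into the vertex list.
    """
    # One vertex per grid intersection; neighboring triangles share them.
    vertices = [(float(x), float(y), 0.0)
                for y in range(rows + 1)
                for x in range(cols + 1)]
    triangles = []
    for y in range(rows):
        for x in range(cols):
            bottom_left = y * (cols + 1) + x
            bottom_right = bottom_left + 1
            top_left = bottom_left + cols + 1
            top_right = top_left + 1
            # Two counter-clockwise triangles per square, sharing a diagonal.
            triangles.append((bottom_left, bottom_right, top_right))
            triangles.append((bottom_left, top_right, top_left))
    return vertices, triangles


vertices, triangles = grid_mesh(10, 25)
print(len(triangles), "triangles")          # 500 triangles
print(len(vertices), "shared vertices")     # 286 shared vertices
print(3 * len(triangles), "if unshared")    # 1500 if unshared
```

Each interior vertex is used by six triangles, which is why this 500-triangle grid needs only 286 vertices rather than 1,500.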

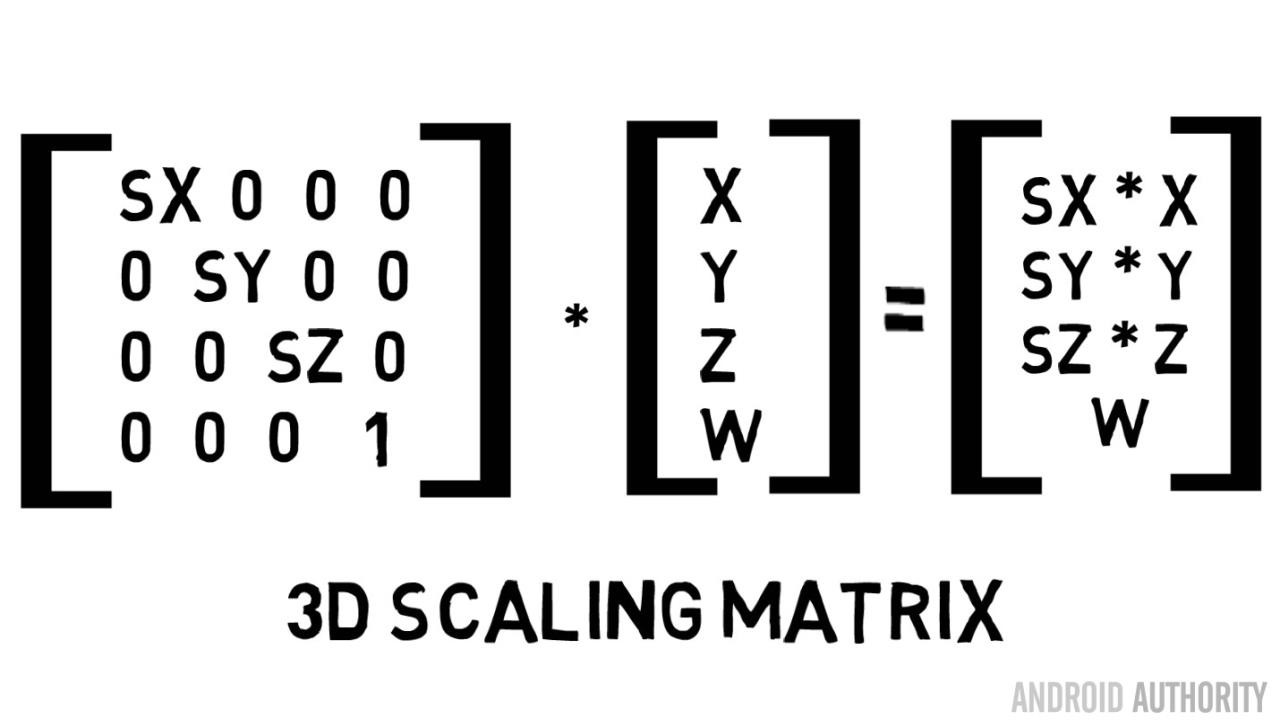

To place a 3D model, defined in its own abstract coordinates, at a position inside your 3D world, three things need to happen to it. It needs to be moved, which is called a translation; it can be rotated about any of the three axes; and it can be scaled. Together these actions are known as a transformation. Without getting into lots of complicated maths, the best way to process transformations is by using 4 by 4 matrices: a 4 by 4 matrix can express a translation as well as a rotation or a scale, and several transformations can be combined into a single matrix by multiplying them together.
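Here is a small Python sketch of those 4 by 4 matrices, written with plain lists so no maths library is needed. It assumes the column-vector convention used by OpenGL (the vertex is multiplied on the right of the matrix, so the rightmost matrix is applied first) and, to keep it short, only shows rotation about the Z axis. The function names are illustrative, not a real library API.

```python
import math


def translation(tx, ty, tz):
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]


def scaling(sx, sy, sz):
    return [[sx, 0, 0, 0],
            [0, sy, 0, 0],
            [0, 0, sz, 0],
            [0, 0, 0, 1]]


def rotation_z(degrees):
    c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
    return [[c, -s, 0, 0],
            [s, c, 0, 0],
            [0, 0, 1, 0],
            [0, 0, 0, 1]]


def matmul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform(m, vertex):
    """Apply a 4x4 matrix to an (x, y, z) vertex."""
    x, y, z = vertex
    v = (x, y, z, 1.0)  # w = 1 is what lets the translation column take effect
    out = [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
    return tuple(out[:3])


# Scale, then rotate, then translate, combined into one "model" matrix.
model = matmul(translation(5, 0, 0), matmul(rotation_z(90), scaling(2, 2, 2)))
print(transform(model, (1.0, 0.0, 0.0)))
```

The point (1, 0, 0) is scaled to (2, 0, 0), rotated to (0, 2, 0) and moved to approximately (5, 2, 0). Because the three matrices were multiplied into one `model` matrix first, every vertex of a model can then be transformed with a single matrix multiply.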

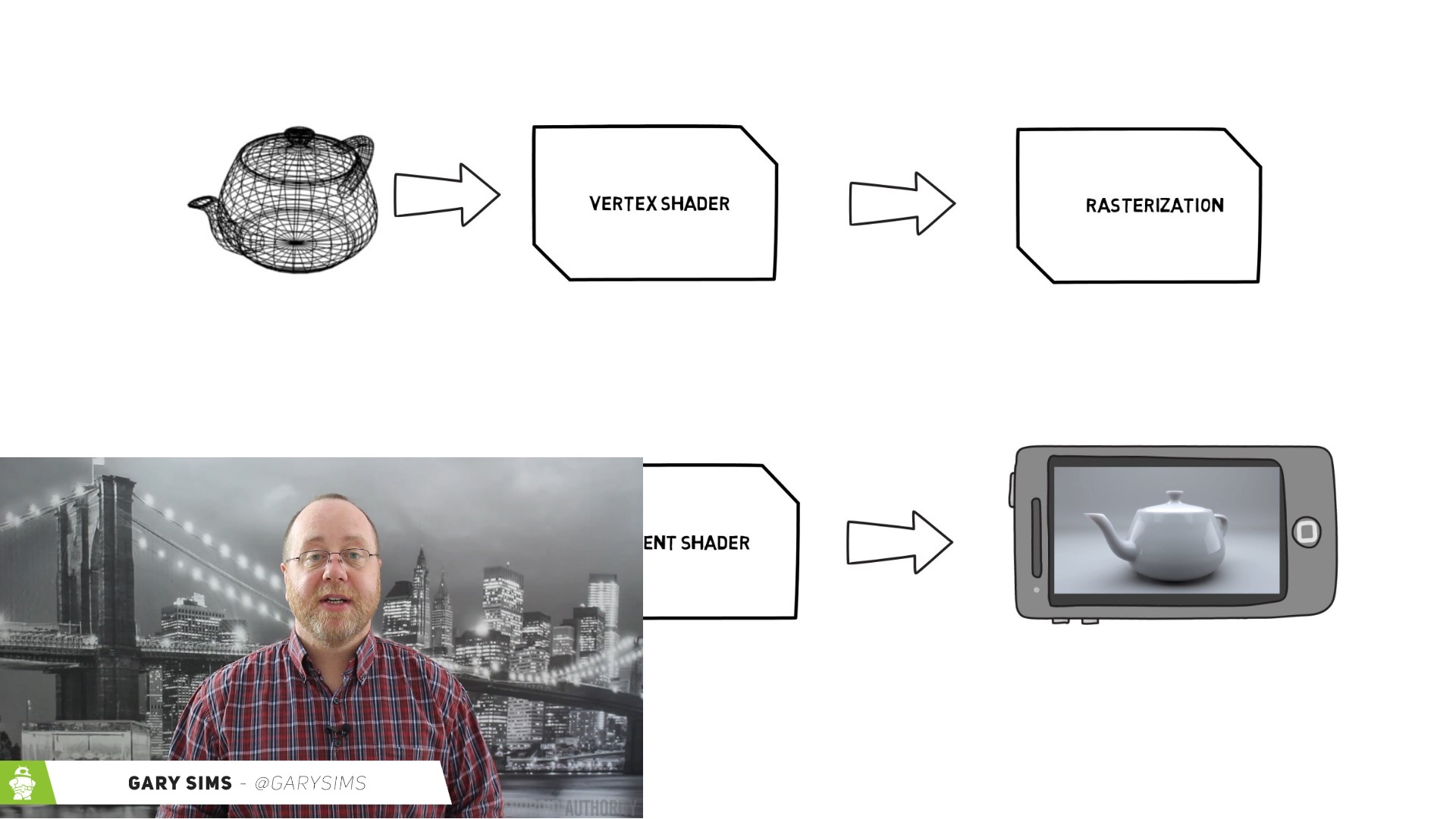

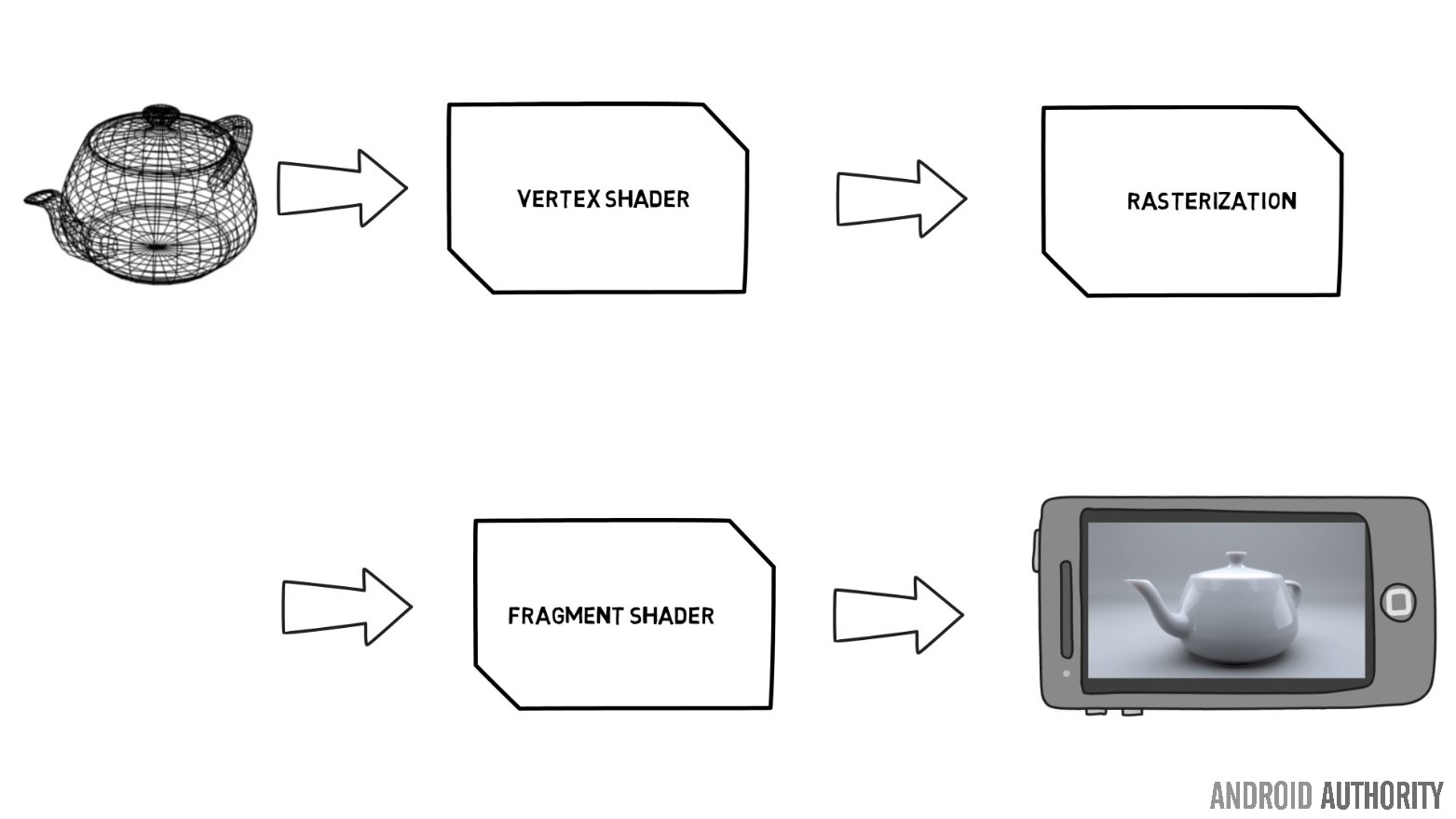

The journey from 3D modeling information to a screen full of pixels begins and ends in a pipeline. Known as the rendering pipeline, it is the sequence of steps that the GPU takes to render the scene. In the old days the rendering pipeline was fixed and couldn’t be changed. Vertex data was fed into the start of the pipeline, processed by the GPU, and a frame buffer dropped out of the other end, ready for sending to the display. The GPU could apply certain effects to the scene, but these were fixed by the GPU designers and offered a limited number of options.

Programmable shaders

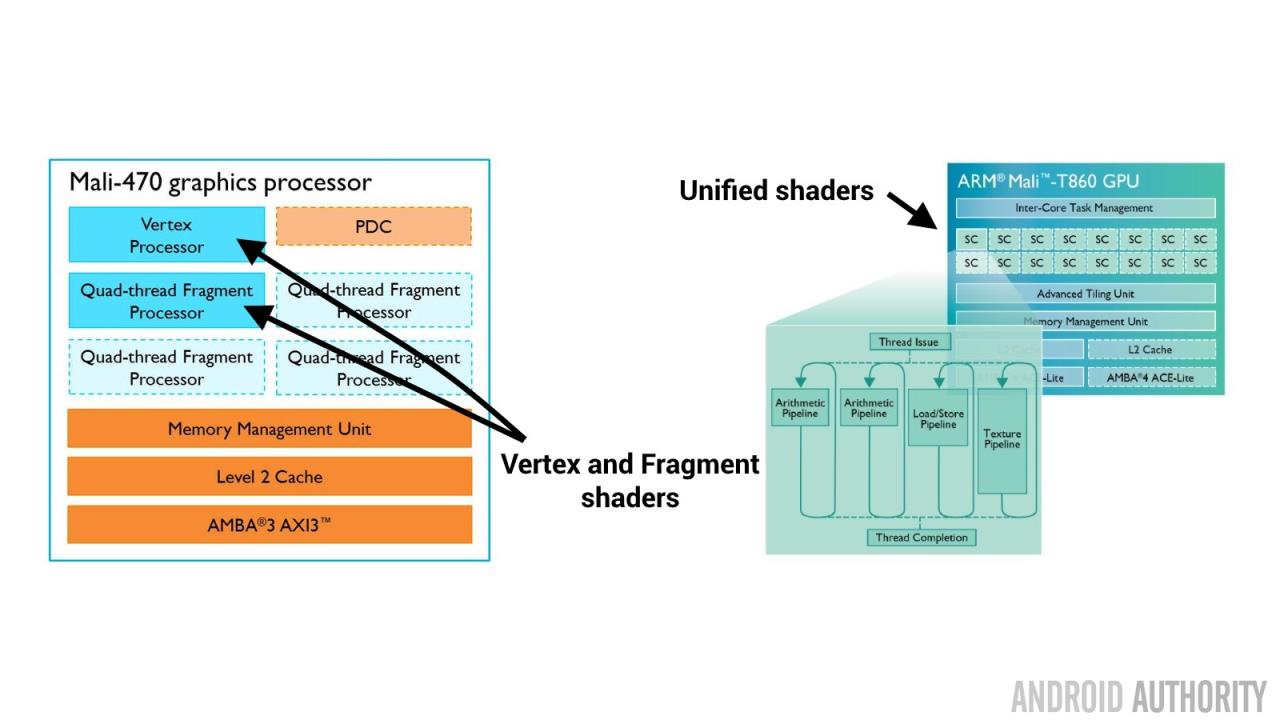

However, around the time Android was conceived, desktop GPUs had evolved to allow parts of the rendering pipeline to be programmed. This eventually came to mobile with the publication of the OpenGL ES 2.0 standard. These programmable parts of the pipeline are known as shaders, and the two most important shaders are the vertex shader and the fragment shader.

The vertex shader is called once per vertex, so if you have a triangle to render, the vertex shader is called three times, once for each corner. For simplicity we can imagine that a fragment is a pixel on the screen, and therefore the fragment shader is called once for every resulting pixel.

The two shaders have different roles. The vertex shader is primarily used to transform the 3D model data into a position in the 3D world, as well as to map the textures or the light sources, again using transformations. The fragment shader is used to set the color of the pixel, for example by looking up the pixel’s color in a texture map.
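To see how the two shaders divide up the work, here is a toy software renderer in Python. It is only a sketch under simplifying assumptions, not how a real GPU or OpenGL ES implements the pipeline: it draws one counter-clockwise triangle, treats each fragment as a pixel, decides coverage by testing pixel centers with “edge functions”, and skips depth testing, clipping, perspective, and the tie-breaking rules real rasterizers use. `render`, `vertex_shader` and `fragment_shader` are illustrative names, not an API.

```python
def edge(ax, ay, bx, by, px, py):
    # Positive when (px, py) is on the inside of edge a -> b for a
    # counter-clockwise triangle, zero when it lies exactly on the edge.
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def render(vertices, vertex_shader, fragment_shader, width, height):
    calls = {"vertex": 0, "fragment": 0}

    # 1. Vertex stage: the vertex shader runs once per vertex.
    screen = []
    for v in vertices:
        calls["vertex"] += 1
        screen.append(vertex_shader(v))

    # 2. Rasterization: find the pixels whose centers the triangle covers.
    (ax, ay), (bx, by), (cx, cy) = screen
    framebuffer = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5
            if (edge(ax, ay, bx, by, px, py) >= 0
                    and edge(bx, by, cx, cy, px, py) >= 0
                    and edge(cx, cy, ax, ay, px, py) >= 0):
                # 3. Fragment stage: the fragment shader runs once per covered pixel.
                calls["fragment"] += 1
                framebuffer[y][x] = fragment_shader(x, y)
    return framebuffer, calls


def vertex_shader(v):
    x, y, z = v
    return (x + 1.0, y + 1.0)  # move the triangle, then simply drop z


def fragment_shader(x, y):
    return (255, 0, 0)  # paint every covered pixel red


fb, calls = render([(0, 0, 0), (6, 0, 0), (0, 6, 0)],
                   vertex_shader, fragment_shader, width=8, height=8)
print(calls)  # {'vertex': 3, 'fragment': 21}
```

The vertex shader runs three times, once per corner, while the fragment shader runs 21 times, once per covered pixel. Make the triangle bigger or the resolution higher and the fragment count grows quickly, while the vertex count stays at three.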

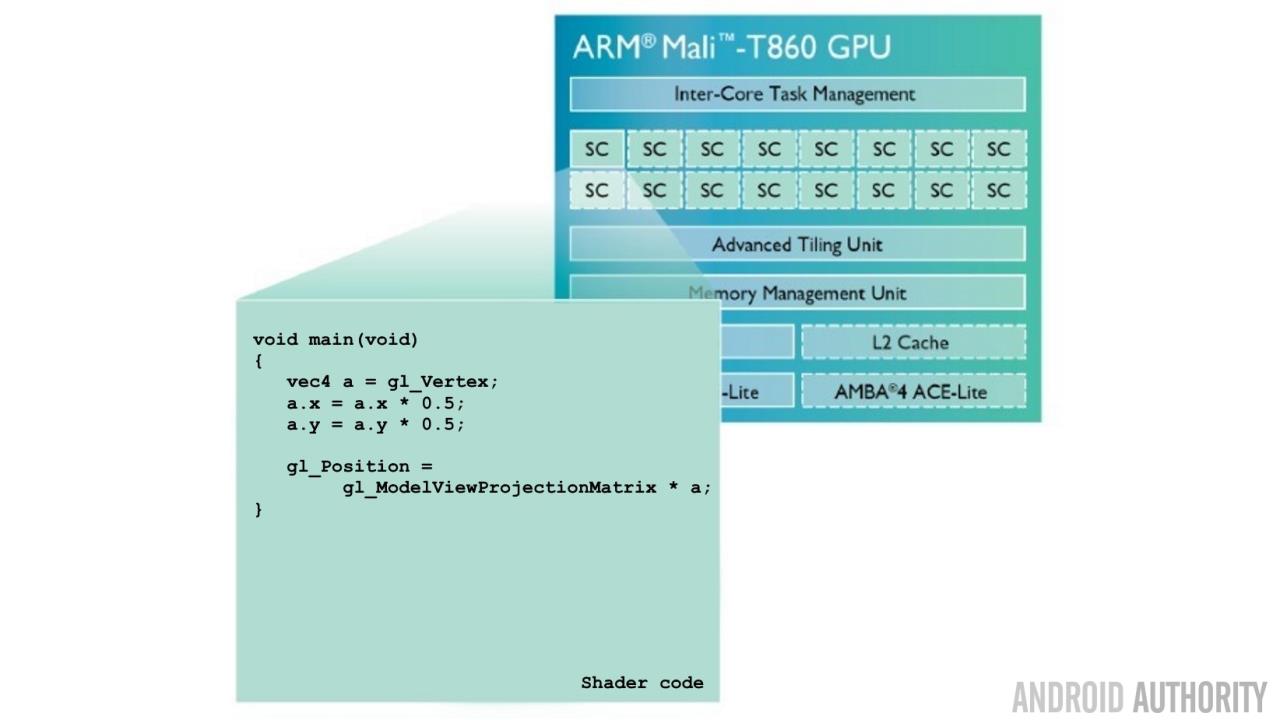

You may have noticed that each vertex is handled independently of the other vertices. The same is also true for the fragments. This means that the GPU can run the shaders in parallel, and in fact, that is what it does. The vast majority of mobile GPUs have more than one shader core. By shader core we mean a self-contained unit that can be programmed to perform shader functions. Be aware, though, that GPU companies don’t all mean the same thing when they talk about a shader, so core counts from different vendors can’t always be compared directly.
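That independence is easy to demonstrate. In this Python sketch, four worker threads stand in for four shader cores. This is an analogy only: Python threads will not actually speed up this kind of arithmetic. Because the hypothetical `vertex_shader` reads nothing but its own vertex, splitting the work between workers gives exactly the same answer as processing one vertex at a time.

```python
from concurrent.futures import ThreadPoolExecutor


def vertex_shader(v):
    # Depends only on its own input vertex, never on its neighbors.
    x, y, z = v
    return (2.0 * x, 2.0 * y, 2.0 * z)


vertices = [(float(i), float(i % 3), 0.0) for i in range(1000)]

# Sequential: one vertex after another.
sequential = [vertex_shader(v) for v in vertices]

# Parallel: the same work handed to four workers ("shader cores").
# Executor.map still returns the results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(vertex_shader, vertices))

print(sequential == parallel)  # True
```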

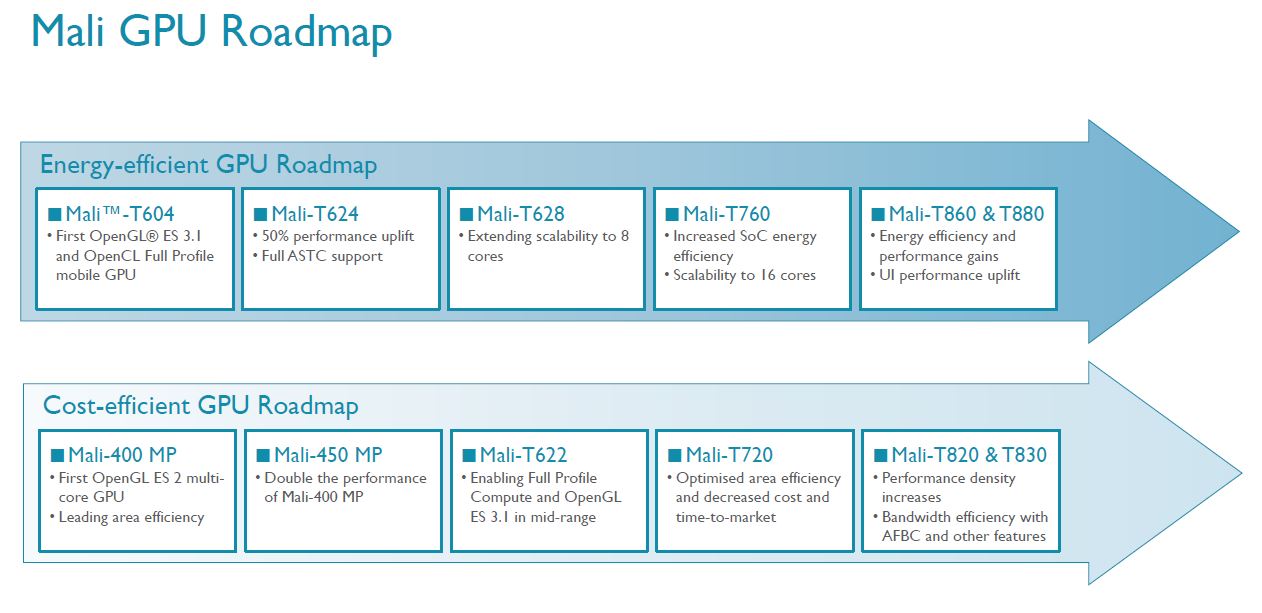

For ARM Mali GPUs, the number of shader cores is denoted by the “MPn” suffix at the end of the GPU name; for example, Mali T880MP12 means 12 shader cores. Inside each core there is a complex pipeline, which means that new shader operations are being issued while others are being completed. There may also be more than one arithmetic engine inside each core, meaning the core can perform more than one operation at a time. ARM’s Midgard Mali GPU range (which includes the Mali T600, T700 and T800 series) can issue one instruction per pipe per clock, so a typical shader core can issue up to four instructions in parallel. That is per shader core, and the Midgard GPUs can scale up to 16 shader cores.
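As a worked example of that arithmetic, this Python sketch reads the core count from the “MPn” suffix and multiplies it by the number of instructions each core can issue per clock. The default of four pipes per core simply follows the “typical shader core” described above and is an assumption; the result is a theoretical peak, and real shaders will rarely keep every pipe busy on every clock.

```python
import re


def shader_cores(gpu_name):
    """Read the core count from a Mali 'MPn' suffix, e.g. 'Mali T880MP12' -> 12."""
    match = re.search(r"MP(\d+)\s*$", gpu_name)
    if match is None:
        raise ValueError(f"no MPn suffix in {gpu_name!r}")
    return int(match.group(1))


def peak_issue_per_clock(cores, pipes_per_core=4):
    # One instruction per pipe per clock; four pipes per core is an assumption.
    return cores * pipes_per_core


cores = shader_cores("Mali T880MP12")
print(cores, peak_issue_per_clock(cores))  # 12 48
print(peak_issue_per_clock(16))            # 64
```

So a Mali T880MP12 can, at best, issue 12 × 4 = 48 instructions per clock, and a 16-core Midgard GPU 64.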

This all means that the GPU works in a highly parallel manner, which is very different from a CPU, which is largely sequential by nature. However, there is a small problem. The shader cores are programmable, which means that the functions performed by each shader are determined by the app developer and not by the GPU designers. As a result, a badly written shader can slow the GPU down. Thankfully, most 3D game developers understand this and do their very best to optimize the code running on the shaders.

The advantages of programmable shaders for 3D game designers are enormous; however, they present some interesting problems for GPU designers, as the GPU now needs to act in a similar way to a CPU. It has instructions to run, which need to be decoded and executed. There are also flow control issues, as shader code can perform ‘IF’ statements, iterate loops, and so on. This means that the shader core becomes a small compute engine able to perform any task programmed into it. It might not be as flexible as a CPU, but it is advanced enough to perform useful, non-graphics tasks.
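Here is roughly what that control flow looks like in a shader-style function, sketched in Python rather than a real shading language such as GLSL ES. The lights, the distance cut-off and the brightness fall-off are all made up for illustration; the point is that each pixel runs its own loop and makes its own IF decisions, which the shader core has to decode and execute much as a CPU would.

```python
import math

# Hypothetical point lights: (x, y, intensity).
LIGHTS = [(0.0, 0.0, 1.0), (10.0, 0.0, 0.5), (100.0, 100.0, 2.0)]


def fragment_shader(x, y, max_distance=20.0):
    """Return the brightness (0.0 to 1.0) of the pixel at (x, y)."""
    brightness = 0.0
    for lx, ly, intensity in LIGHTS:          # a loop over the lights
        d = math.hypot(x - lx, y - ly)
        if d > max_distance:                  # an IF: ignore distant lights
            continue
        brightness += intensity / (1.0 + d)   # simple made-up fall-off
    return min(brightness, 1.0)               # clamp to the displayable range


print(fragment_shader(5.0, 0.0))
```

A pixel between the first two lights runs the loop body for both of them, while a pixel far from every light skips all three; each pixel makes that decision on its own.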

GPU Computing

Which brings us to GPU computing, where the highly parallel nature of the GPU is used to perform lots of small mathematical tasks simultaneously. The current growth areas for GPU computing are machine learning and computer vision. As the uses of GPU computing grow, the role of the GPU is expanding, and it is being elevated from a servant of the CPU to a full partner.
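GPU compute work is commonly written as a small “kernel” function that is launched once per data element, much like a fragment shader is run once per pixel. The Python sketch below applies that idea to a simple computer-vision step, converting an RGB image to grayscale. The names `grayscale_kernel` and `dispatch` are hypothetical, the BT.601 luma weights are just one common choice, and the plain loop in `dispatch` stands in for the GPU spreading the invocations across its shader cores.

```python
def grayscale_kernel(image, x, y):
    """A compute 'kernel': runs once per pixel and touches only that pixel."""
    r, g, b = image[y][x]
    # ITU-R BT.601 luma weights, a common choice for RGB-to-gray conversion.
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def dispatch(kernel, image):
    # A GPU would run these invocations in parallel on its shader cores;
    # here they simply run one after another.
    height, width = len(image), len(image[0])
    return [[kernel(image, x, y) for x in range(width)] for y in range(height)]


image = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]
print(dispatch(grayscale_kernel, image))  # [[76, 150], [29, 255]]
```

Each invocation reads one pixel and writes one result, with no dependence on its neighbors, which is exactly the kind of work the GPU’s parallel shader cores are built for.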

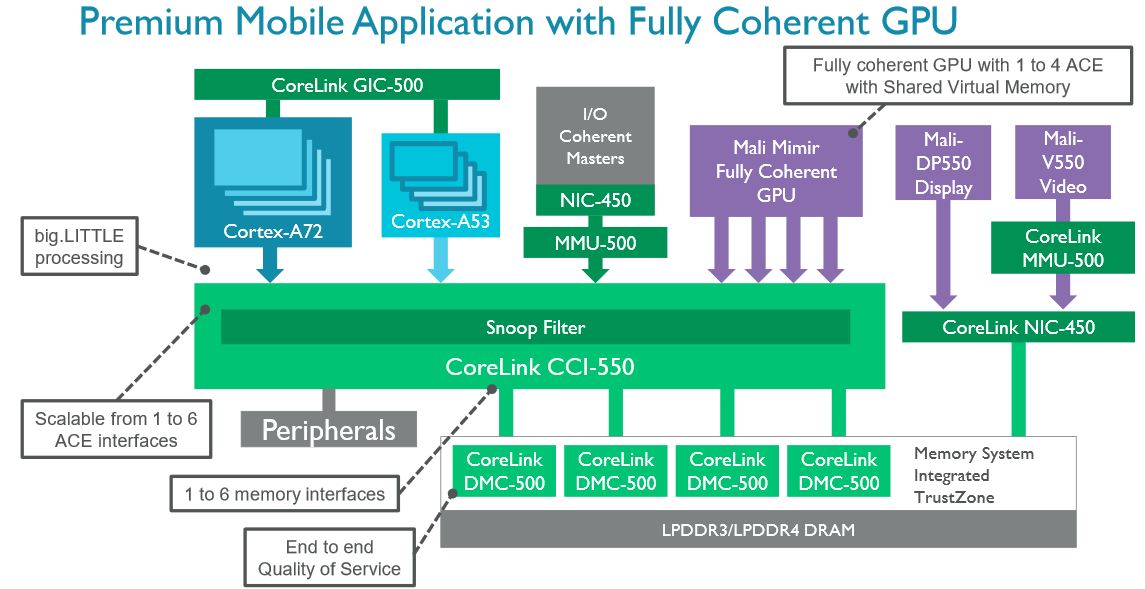

In October 2015 ARM released details of its latest SoC interconnect product called the CoreLink CCI-550. The role of the interconnect is to tie the CPU, the GPU, the main memory and the various memory caches together. As part of that announcement ARM mentioned a new GPU code-named Mimir that is fully coherent. In this context fully coherent means that if the GPU needs something from the cache memory, even something that the CPU has recently changed, the GPU gets the same data as the CPU, without having to go to main memory. The CCI-550 also allows the CPU and GPU to share the same memory, which removes the need to copy data between CPU and GPU buffers.

Unified shaders and Vulkan

One of the biggest changes between OpenGL ES 2.0 and OpenGL ES 3.0 (and the equivalent DirectX versions) was the introduction of the Unified Shader Model. If you look at this model diagram of the Mali-470, you will see that this OpenGL ES 2.0-compatible GPU has two types of shaders, called a “Vertex Processor” and a “Fragment Processor”; these are the vertex and fragment shaders we mentioned previously.

The Mali-470 has one vertex shader and up to 4 fragment shaders. But if you look at the diagram for the Mali-T860, you can see that it supports up to 16 unified shaders, which can act as either vertex shaders or fragment shaders. This eliminates the problem of shaders sitting idle because they are of the wrong type for the work at hand.
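A back-of-the-envelope Python model shows why unified shaders help. It assumes, purely for illustration, that every vertex or fragment job takes one cycle on any unit, and it ignores the fact that a triangle’s fragments can only be shaded after its vertices. It compares the Mali-470 layout described above (one vertex unit, four fragment units) with a hypothetical unified GPU that has the same five units; the job counts are made up.

```python
import math


def cycles_dedicated(vertex_jobs, fragment_jobs, vertex_units, fragment_units):
    # Each pool only accepts its own kind of job, so the busier pool sets the
    # pace while the other pool sits idle once it runs out of work.
    return max(math.ceil(vertex_jobs / vertex_units),
               math.ceil(fragment_jobs / fragment_units))


def cycles_unified(vertex_jobs, fragment_jobs, units):
    # Any unit can take any job, so all the work is shared by all the units.
    return math.ceil((vertex_jobs + fragment_jobs) / units)


# A geometry-heavy frame and a pixel-heavy frame (made-up job counts).
for v, f in [(400, 400), (100, 1600)]:
    print(f"{v} vertex + {f} fragment jobs: "
          f"dedicated {cycles_dedicated(v, f, 1, 4)} cycles, "
          f"unified {cycles_unified(v, f, 5)} cycles")
```

In the geometry-heavy case the four fragment units finish after 100 cycles and then wait another 300 cycles for the single vertex unit (400 cycles in total, against 160 for the unified design). When every unit can take either kind of job, that idle time disappears.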

The next big thing in terms of 3D graphics APIs is Vulkan. It was released in February 2016, and it brings two important innovations. First, by reducing driver overheads and improving multi-threaded CPU usage, Vulkan is able to deliver notable performance improvements. Second, it offers a single unified API for desktop, mobile, and consoles. Vulkan supports Windows 7, 8 and 10, SteamOS, Android, and a selection of desktop Linux distributions. The first Android smartphone to support Vulkan was the Samsung Galaxy S7.

Power

If you have seen a modern graphics card for a PC, you will know that they are big. They have large fans and complicated cooling systems, and some even need their own power connection directly from the power supply. In fact, many graphics cards are bigger than the average smartphone! The biggest difference between GPUs in desktops or consoles and GPUs in smartphones is power. Smartphones run on batteries and have a limited “thermal budget”. Unlike desktop GPUs, they can’t just burn through power and produce lots of heat.

However, as consumers we are demanding more and more sophisticated graphics from our mobile devices. So one of the biggest challenges for mobile GPU designers is not adding support for the latest 3D API, but rather delivering high-performance graphics processing without producing too much heat and without draining the battery in mere minutes!

Wrap-up

To sum up, mobile 3D graphics is all based on triangles. Each corner of a triangle is called a vertex. Vertices need to be processed so the model can be moved, scaled, and so on. Inside the GPU is a programmable execution unit called a shader core. Game designers can write code that runs on that core to process the vertices however they desire. After the vertex shader comes a process called rasterization, which converts the triangles formed by the vertices into pixels (fragments). Finally, those pixels are sent to the pixel shader (the fragment shader) to set their color.

Developers who write 3D games (and apps) can program the vertex shader and the pixel shader to process the data according to their needs. Because the shaders are programmable, GPUs can also be used for highly parallel tasks other than 3D graphics, including machine learning and computer vision.