Affiliate links on Android Authority may earn us a commission.

How to use ChatGPT for free: A step-by-step guide

Published on May 18, 2024

Whether you need to write a resume or come up with a witty poem, ChatGPT is likely the best AI chatbot for the job. It can produce natural-sounding text that reads as if a human wrote it. And the best part? It’s completely free to use, although an optional ChatGPT Plus subscription grants you extra features. So without wasting any more time, here’s a quick guide on how to use ChatGPT and get the most value out of it.

To use ChatGPT, first navigate to its website or download the free app on your smartphone. You can start chatting without logging in, although we'd recommend creating an account for the chat history feature. Simply enter some text (also known as a prompt) and tap Send. The chatbot will reply within a few seconds.


How to sign up for ChatGPT

You can now use ChatGPT for free without signing up for an account. Simply navigate to chat.openai.com, type in a message, and hit Enter or click the Send button. Keep in mind that your chats won’t be saved without an account, nor will you be able to use features like custom instructions.

To unlock all of ChatGPT’s features, you’ll need an OpenAI account. What’s OpenAI, you ask? It’s the San Francisco-based startup that created ChatGPT and DALL-E, the popular AI image generator. Luckily, creating an account is free and doesn’t take much time. Here’s what you need to do:

- Navigate to the ChatGPT website using a web browser like Google Chrome. If you’re using a smartphone, you can simply download the ChatGPT app for Android and iOS.

- Tap or click on the Sign up button.

- In the next step, enter your email address and set a password. You can also opt to continue via your Google or Microsoft account for easier, password-less logins.

- On the next page, enter your name and birthday.

- Regardless of how you signed up in the previous steps, you’ll need to verify your phone number to use ChatGPT. While most online platforms only require email verification, ChatGPT asks for a valid phone number as well.

- Finally, accept the terms and conditions that tell you a bit about how ChatGPT works. You’re ready to start chatting.

If you ever need to return, navigate to chat.openai.com again or open the app on your phone. As for how to interact with the chatbot, keep reading for detailed instructions, including a few bonus tips.

How to use ChatGPT for free: Web, Android, and iOS

Assuming you’ve followed the previous section, you should now have access to ChatGPT’s chat interface. It’s rather straightforward: you’ll find a chat history sidebar hidden to the left and an empty text box at the bottom of your screen. The latter is where we’ll type our prompts and communicate with the chatbot.

ChatGPT’s main draw lies in its ability to engage in back-and-forth dialog like a human. With that in mind, there’s no need to carefully craft your query; just type whatever comes to mind. You could start with a greeting, issue a command, or ask a question. Here are a few ChatGPT prompts I’ve used over the past few weeks for inspiration:

- Explain retrograde and prograde motion in the context of space missions.

- Generate a poem about an AI chatbot that went rogue.

- Write an email to a colleague asking if they’re free to schedule a meeting for this Thursday. I’d like to discuss the new client with them and talk about how we can meet their needs.

- Write the HTML and JavaScript code for a website that shows the live trading price of Google’s stock.

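Once you get a response, you can also continue with a follow-up message. The bot remembers earlier messages in the conversation and replies with that context in mind. For example, ask ChatGPT to write an email in one prompt and then say something along the lines of “Please make it sound less formal.” Keep in mind that this memory is limited by a context window measured in tokens (a token is roughly four characters of English text), and it covers both your prompts and the chatbot’s responses. The original GPT-3.5 model, for instance, had a 4,096-token limit; once a conversation grows past the window, the earliest messages drop out of context. That’s generous enough for most cases, though.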

ChatGPT can answer just about any question, but it does have some limitations.

OpenAI has trained the chatbot on billions of text samples, so it knows a lot about the world and can even speak multiple languages. However, its training data has a knowledge cut-off date (2021 for the original GPT-3.5 model, later for newer models), so don’t expect accurate answers on current affairs. Still, there are plenty of great ChatGPT hacks to help you make the most of its productivity potential.

One ChatGPT hack I’d like to point out in particular: you can have full-fledged voice chats with the chatbot if you use the official mobile app. Simply start a new chat, tap the headphones icon, and speak your prompt. An upcoming update will also bring

ChatGPT can struggle with complex reasoning and often makes mistakes if you ask it to solve math problems, for example. I’d personally recommend Wolfram Alpha instead, as it can solve everything from basic quadratic equations to complex integrals.

Is ChatGPT free to use? What does ChatGPT Plus add?

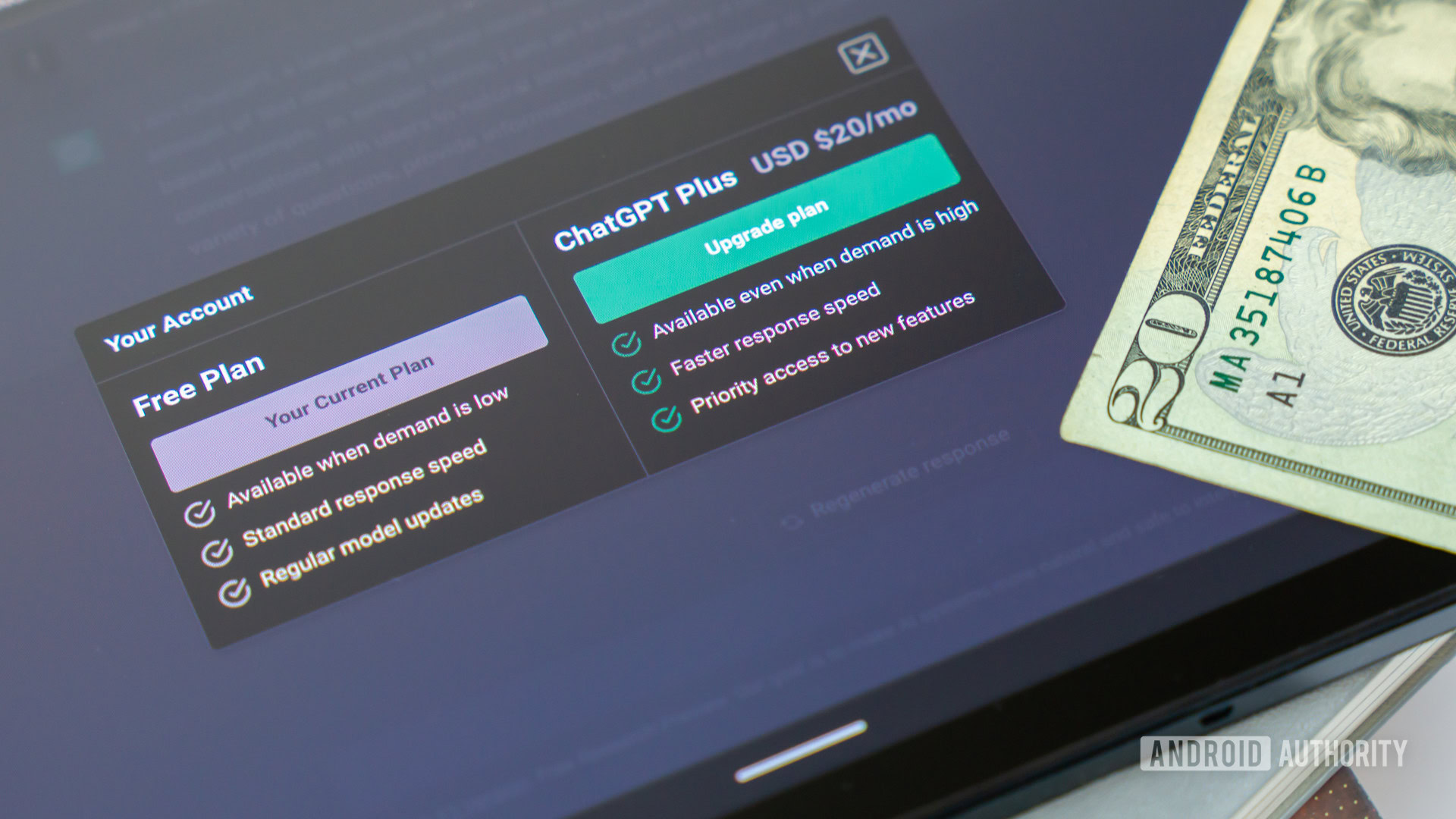

ChatGPT Plus is an optional subscription that costs $20 per month. Arguably the biggest feature you get is full access to the GPT-4o language model. As a free user, you only get limited access to GPT-4o, which means you can send only a certain number of messages to it within a given time window. Even that limited access is a big upgrade compared to the last-gen GPT-3.5, but you only get full access to the new model if you pay up. This includes the impressive voice chat mode, where you can have back-and-forth conversations with the chatbot.

You can also improve the chatbot’s logical and mathematical capabilities via custom GPTs if you pay for a subscription. The free version of ChatGPT tends to get new features months after they debut for paying users. Based on this trend, we may also see the company’s upcoming GPT-5 language model roll out exclusively to ChatGPT Plus users at first.

FAQs

How do I use ChatGPT for free?

To use ChatGPT for free, simply visit chat.openai.com and start chatting. We also recommend signing up for a free OpenAI account, as it will allow you to save chats for future reference.

Can I use ChatGPT without an account?

Yes, you can use ChatGPT via its official website without an account. However, you will miss out on features like chat history and voice conversations if you’re not logged in.

Is the ChatGPT API free?

No, you cannot use the ChatGPT API for free; usage is billed per token. However, OpenAI has lowered its pricing for GPT-3.5, which has made it more affordable.

Is ChatGPT safe to use?

Yes, ChatGPT is safe to use, but by default OpenAI may use your conversations to train its models unless you opt out in ChatGPT’s data controls settings. With this in mind, avoid sharing any sensitive information.