What is pixel binning? Everything to know about this photographic technique

Published on February 28, 2023

The past few years have seen the term “pixel binning” regularly pop up when talking about smartphone photography. The term doesn’t exactly conjure up excitement, but it’s a feature powering loads of phones today, and it can improve your photos dramatically.

What is pixel binning? Join us as we look at one of the more popular smartphone photography features on the market.

The importance of pixels or photosites

To understand pixel binning, we must understand what a pixel actually is in this context. The pixels in question are also known as photosites, and they’re physical elements on a camera sensor that capture light to produce images.

Pixel size is usually measured in microns (a millionth of a meter), with anything at or below one micron considered small. For example, the Samsung Galaxy S23 Ultra has a primary camera with 0.6-micron pixels, the Google Pixel 7 Pro has 1.2-micron pixels, and the OnePlus 11 has 1.0-micron pixels.

You generally want your pixels to be large, as a larger pixel can capture more light than a smaller pixel. The ability to capture more light means better image quality in a dark pub or at dusk, when light is at a premium. The problem is smartphone camera sensors have to be small to fit into today’s svelte frames, unless you don’t mind a gargantuan camera bump.

Having a smaller smartphone sensor means the pixels also have to be small, unless you use fewer pixels (i.e., a lower-resolution sensor). The other approach is to use more pixels (i.e., a higher resolution sensor), but you’ll either have to increase the size of the sensor or shrink the pixels even more. Shrinking the pixels will have an adverse effect on low-light capabilities. That’s where pixel binning can make a difference.
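To make the trade-off concrete, here is a rough sketch of how pixel size follows from sensor size and resolution. The 8mm sensor width and the pixel counts are hypothetical numbers chosen for illustration, and the function name is ours; it assumes square pixels that tile the full width of the sensor.

```python
def pixel_pitch_um(sensor_width_mm: float, horizontal_pixels: int) -> float:
    """Approximate pixel pitch in microns.

    Assumes square pixels that tile the full sensor width with no gaps.
    """
    return sensor_width_mm * 1000.0 / horizontal_pixels


# Hypothetical 8mm-wide sensor at two resolutions (illustrative only).
low_res = pixel_pitch_um(8.0, 4000)   # fewer, larger pixels
high_res = pixel_pitch_um(8.0, 8000)  # more, smaller pixels

print(f"4000 pixels across: {low_res} microns per pixel")
print(f"8000 pixels across: {high_res} microns per pixel")
```

Doubling the pixel count across the same width halves the size of each pixel, which is exactly the squeeze described above.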

The pixel-binning approach

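To sum it up in one sentence, pixel-binning is a process that combines data from a group of adjacent pixels (typically four) into one. This way, a camera sensor with tiny 0.9-micron pixels will produce results comparable to a camera with 1.8-micron pixels, since a two-by-two group of 0.9-micron pixels covers the same area as a single 1.8-micron pixel.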

Think of the camera sensor as a yard, and the pixels/photosites as buckets collecting rain in the yard. You can either place loads of small buckets in the yard, or several big buckets instead. Pixel-binning is essentially the equivalent of combining all the small buckets into one gigantic bucket when needed.
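In code, pouring the small buckets into one big bucket might look something like the sketch below. It uses NumPy and treats the sensor as a plain grid of light readings, ignoring color filters entirely, so it is a simplified illustration rather than how any particular phone does it. The `bin_pixels` name is our own.

```python
import numpy as np


def bin_pixels(raw: np.ndarray, factor: int = 2) -> np.ndarray:
    """Sum each factor x factor block of readings into one output value."""
    height, width = raw.shape
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by the binning factor")
    # Split the grid into blocks, then add up the values inside each block.
    blocks = raw.reshape(height // factor, factor, width // factor, factor)
    return blocks.sum(axis=(1, 3))


# A tiny 4x4 "sensor" with readings 0..15.
raw = np.arange(16).reshape(4, 4)
binned = bin_pixels(raw)

print(raw)
print(binned)  # a 2x2 image: each value is the sum of four neighbors
```

The output has a quarter as many values as the input, and each one holds the combined light of four neighboring photosites.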

The biggest downside of this technique is your resolution is effectively divided by four when taking a pixel-binned shot. That means a binned shot from a 48MP camera is actually 12MP. A 64MP camera takes 16MP binned snaps. Likewise, a binned shot on a 16MP camera is only 4MP. All that said, there is much more to camera phones than megapixels. You may not need too many megapixels.

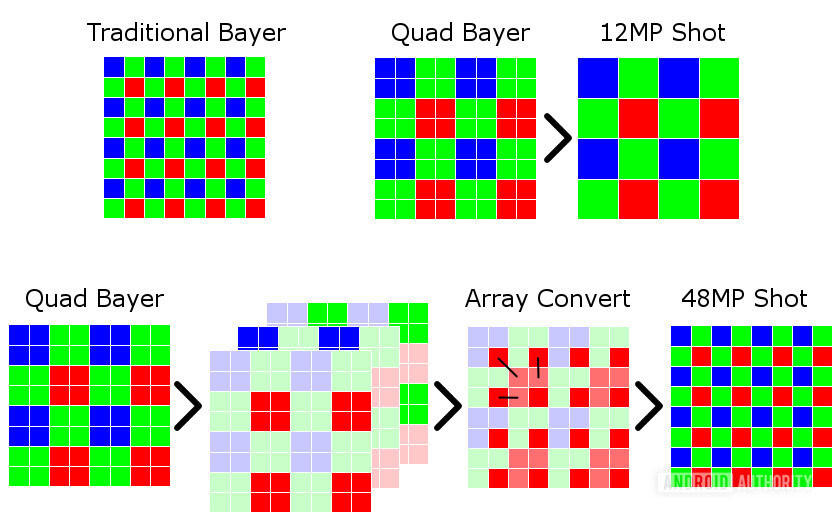

Pixel binning is generally possible thanks to the use of a quad-bayer filter on camera sensors. A bayer filter is a color filter used in most digital camera sensors, sitting atop the pixels/photosites so that each one records only red, green, or blue light.

Your standard bayer filter comprises 50% green filters, 25% red filters, and 25% blue filters. According to the photography resource Cambridge in Colour, this arrangement is meant to imitate the human eye, which is more sensitive to green light. Once the user shoots the image, the camera interpolates and processes it to produce a final, full-color image.

A quad-bayer filter groups these colors in clusters of four, then uses software-based array conversion processing to enable pixel binning. The cluster arrangement delivers extra light information during the array conversion process. This is better than simply interpolating/upscaling to 48MP or 64MP.

Check out the image above to look at how the quad-bayer filter works. Notice how the grouping of the various colors differs from the traditional bayer filter? You’ll also notice it still manages to offer 50% green filters, 25% red filters, and 25% blue filters.
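If you’d rather see the two layouts spelled out, the short NumPy sketch below builds both and counts the colors. The red-green/green-blue starting tile is one common ordering and is assumed here for illustration; real sensors can start the pattern on a different color. The function names are our own.

```python
import numpy as np

# One common 2x2 bayer tile (assumed ordering; other orderings exist).
BAYER_TILE = np.array([["R", "G"],
                       ["G", "B"]])


def bayer_mosaic(rows: int, cols: int) -> np.ndarray:
    """Repeat the 2x2 tile across the sensor (rows, cols multiples of 2)."""
    return np.tile(BAYER_TILE, (rows // 2, cols // 2))


def quad_bayer_mosaic(rows: int, cols: int) -> np.ndarray:
    """Same tile, but each color is expanded into a 2x2 cluster (multiples of 4)."""
    quad_tile = np.repeat(np.repeat(BAYER_TILE, 2, axis=0), 2, axis=1)
    return np.tile(quad_tile, (rows // 4, cols // 4))


def color_shares(mosaic: np.ndarray) -> dict:
    """Fraction of the mosaic covered by each color."""
    colors, counts = np.unique(mosaic, return_counts=True)
    return {str(c): int(n) / mosaic.size for c, n in zip(colors, counts)}


for name, mosaic in (("bayer", bayer_mosaic(4, 4)),
                     ("quad-bayer", quad_bayer_mosaic(4, 4))):
    print(name)
    print("\n".join(" ".join(row) for row in mosaic))
    print(color_shares(mosaic))
```

Both layouts report the same 50/25/25 split. The difference is only in how the colors are grouped, which is what lets four same-colored neighbors be combined into one.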

By adopting a quad-bayer filter and pixel-binning, you get the advantage of super-high-resolution shots during the day and lower-resolution, pixel-binned photos at night. These binned night-time pictures should be brighter and offer reduced noise over the regular full-resolution snap.
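A quick simulation shows why the binned shot should be cleaner. This is a deliberately simplified model: it only simulates the random arrival of light (shot noise) on an evenly lit scene, and it ignores color filters, sensor read noise, and all the processing a real phone applies. The average of 20 photons per pixel is a made-up low-light figure.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical dim scene: each small pixel collects about 20 photons on average.
mean_photons = 20
raw = rng.poisson(mean_photons, size=(512, 512)).astype(np.float64)

# Four-in-one binning: sum each 2x2 block of small pixels.
binned = raw.reshape(256, 2, 256, 2).sum(axis=(1, 3))


def snr(image: np.ndarray) -> float:
    """Signal-to-noise ratio of a flat scene: mean brightness over its spread."""
    return image.mean() / image.std()


snr_raw = snr(raw)
snr_binned = snr(binned)
gain = snr_binned / snr_raw

print(f"full resolution SNR: {snr_raw:.2f}")
print(f"binned SNR:          {snr_binned:.2f}")
print(f"improvement:         {gain:.2f}x")
```

Under these assumptions, combining four pixels roughly doubles the signal-to-noise ratio, at the cost of a quarter of the resolution.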

Pixel binning is a way for manufacturers to offer loads of megapixels without adversely affecting low-light performance too much.

In the last few years, we’ve also seen the emergence of nine-in-one pixel binning (dubbed nona-binning) on some 108MP and higher camera sensors. This is very similar to the four-in-one binning outlined above, but combines data from nine adjacent pixels into one. So a 108MP camera with 0.8-micron pixels can deliver images comparable to a camera with 2.4-micron pixels.

As is the case with four-in-one pixel binning, nine-in-one pixel binning also results in the final image being way below the sensor’s native resolution. Where four-in-one pixel binning sees the output resolution divided by four, a nona-binned shot sees it divided by nine, so a 108MP sensor produces 12MP images.
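The arithmetic behind these figures is simple enough to put in a few lines. The helper below is our own illustration: the output resolution is divided by the number of pixels in each group, while the equivalent pixel size grows by the length of the group’s side (two for four-in-one, three for nine-in-one).

```python
import math


def binning_summary(sensor_mp: float, pixel_pitch_um: float, pixels_per_bin: int):
    """Return (output megapixels, equivalent pixel size in microns)."""
    side = math.isqrt(pixels_per_bin)
    if side * side != pixels_per_bin:
        raise ValueError("pixels_per_bin must be a square number such as 4, 9, or 16")
    output_mp = sensor_mp / pixels_per_bin
    # Rounded to two decimals to hide floating-point noise.
    equivalent_pitch = round(pixel_pitch_um * side, 2)
    return output_mp, equivalent_pitch


# Examples using the figures quoted in this article.
print(binning_summary(48, 0.9, 4))   # four-in-one on a 48MP, 0.9-micron sensor
print(binning_summary(108, 0.8, 9))  # nine-in-one on a 108MP, 0.8-micron sensor
```

The same function covers 16-in-one binning too: pass 16 as the group size and the pixel size is multiplied by four.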

Another downside to pixel-binning, in general, is that the color resolution (and therefore color accuracy) will theoretically suffer, because same-colored filters are clustered together rather than finely interleaved. Image processing algorithms try to fill in these gaps so that colors in the final image still look accurate.

Who is using pixel binning right now?

If a manufacturer has a phone with a very high MP count, then it’s very likely to use pixel binning. Prominent devices include the Samsung Galaxy S23 series, Google Pixel 7 series, OnePlus 11, Xiaomi 13 series, and many others.

We’ve also seen many brands adopt pixel-binning on their selfie cameras. These usually use 20MP, 24MP, 32MP, and even 44MP sensors on the front. They usually let users switch between pixel-binned and full-resolution modes.

In the past, we saw phones like the LG V30s even tout four-in-one pixel binning for the 16MP rear camera. Unfortunately, this means you’re left with a 4MP final image, resulting in a considerable drop in resolvable detail. Higher resolution cameras are more suitable for pixel binning (especially on rear-facing cameras), as the binned output still has a usable resolution.

Diminishing returns?

We have one question on our minds: when does it become a case of diminishing returns? How small can a camera sensor’s pixels go, and how many megapixels can manufacturers cram into a tiny smartphone sensor, before pixel-binning doesn’t make a difference?

Well, in our tests we found that the Galaxy S23 Ultra’s 200MP HP2 sensor still shows impressive results, even with its tiny 0.6-micron pixels.

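Samsung says its 200MP sensors are capable of two types of pixel binning. They can either do four-in-one binning to deliver a shot comparable to a 50MP 1.28-micron pixel camera, or 16-in-one binning to churn out an image equivalent to a 12.5MP 2.56-micron pixel camera. Those figures correspond to 0.64-micron native pixels; with the S23 Ultra’s 0.6-micron pixels, the same math works out to roughly 1.2 and 2.4 microns.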

Samsung has shown nine-in-one pixel binning can work on its 108MP phones. It still delivers detailed low-light images that take the fight to rival phones. But the firm’s 108MP phones all have the same pixel size (and therefore similar light sensitivity in theory) as mainstream 48MP and 64MP sensors. So it could have a significant challenge on its hands to ensure its first 200MP camera sensor, with those significantly smaller pixels, can deliver the goods when the sun goes down.

Even if 200MP+ camera sensors don’t see much adoption, today’s 48MP, 50MP, and 108MP cameras already deliver impressive image quality. When we combine this with ever-improving image processing smarts, better ultrawide cameras, more polished zoom, and better silicon, the future of smartphone photography is still looking good.

Pixel binning is excellent, but good smartphone camera photography is all about getting beautiful shots most of the time. It takes more than pixel binning to accomplish such consistency. Things like computational photography, multiple camera setups, super resolution, night mode, HDR, and other implementations help. You can take a look at our list of the best camera phones if you want to take stunning images.