YouTube tries harder, removes 8.3 million videos in 3 months

April 24, 2018

- The brand new YouTube Quarterly Moderation report gives us an idea of how YouTube is doing in the fight against inappropriate content.

- According to YouTube, it removed 8.3 million videos in the last quarter of 2017. Most of those videos were first flagged by machines rather than humans.

- Going forward, YouTube will report its moderation activity for all to see.

It looks like the constant criticism YouTube receives relating to the amount of inappropriate content on the platform has finally gotten through to the company. In its first quarterly moderation report, YouTube stated that it removed 8.3 million videos during the last three months of 2017.

The bulk of the videos removed were spam or adult content, and 6.7 million of those videos – or roughly 81 percent of the total – were first flagged by machines rather than humans. And, of the 6.7 million videos flagged by robots, 76 percent were removed before anyone watched them even once.
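For readers who want to check the math, here is a minimal sketch using only the figures quoted above. The variable names are ours, not YouTube's, and the count of videos removed before a single view is our own derivation from the reported percentage rather than a number from the report.

```python
# Figures as quoted in the article, in millions of videos.
total_removed = 8.3
machine_flagged = 6.7
# Share of machine-flagged videos removed before anyone watched them.
removed_unseen_rate = 0.76

# Share of all removals that were first flagged by machines.
machine_share = machine_flagged / total_removed

# Derived estimate (not stated in the report): machine-flagged videos
# that were removed before receiving a single view.
removed_unseen = machine_flagged * removed_unseen_rate

print(f"Machine-flagged share of removals: {machine_share:.1%}")
print(f"Removed before any view: about {removed_unseen:.1f} million")
```

In other words, the "roughly 81 percent" figure holds up, and the 76 percent rate works out to a little over 5 million videos taken down before anyone saw them.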

The information comes from YouTube’s blog. Going forward, the company will release a quarterly report on its content moderation. According to YouTube, “this regular update will help show the progress we’re making in removing violative content from our platform. By the end of the year, we plan to refine our reporting systems and add additional data, including data on comments, speed of removal, and policy removal reasons.”

YouTube also wants to get you involved in the moderation. It is rolling out a Reporting History dashboard to all YouTube users, where they can see the status of any content they’ve flagged. Hopefully, seeing the progress of their flags will encourage users to keep flagging content that violates YouTube’s terms.

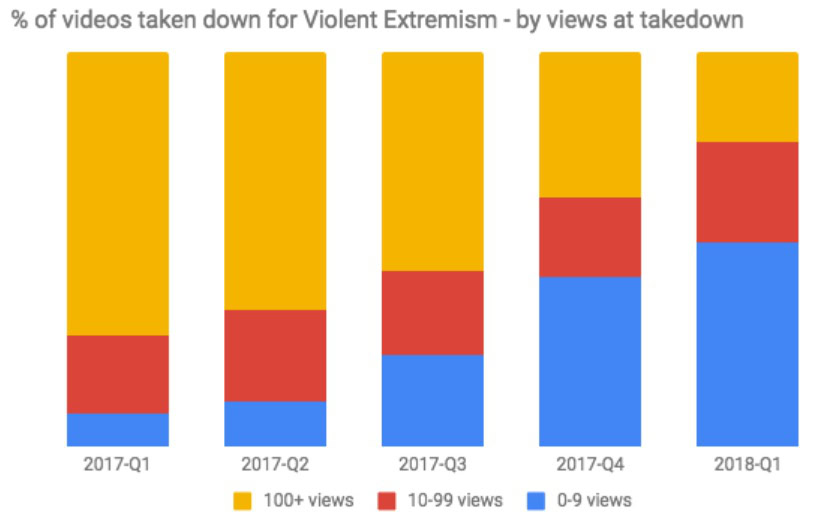

The most important videos to remove from the platform are those involving violent extremism. To emphasize how important it is to YouTube that these videos don’t ever get popular on the platform, the company provided a chart showing that a growing share of violent extremist videos are taken down before they reach 10 views.

YouTube’s problems with moderation came to a head last year when disturbing content promoted towards children started to appear on both YouTube and the YouTube Kids app. The videos depicted kid-friendly characters like Peppa Pig, Disney princesses, and Marvel superheroes acting out violent and sexually suggestive themes.

This quarterly moderation report is a direct response to those issues, and will hopefully give people (especially parents) a better idea of what YouTube is doing to combat the abuse of its platform.